Regional Climate Projections in the IPCC AR4

How does anthropogenic global warming (AGW) affect me? The answer to this question will perhaps be one of the most relevant concerns in the future, and it is discussed in chapter 11 of the IPCC Fourth Assessment Report (AR4), Working Group I (WGI) (the chapter also has some supplementary material). Obtaining regional information from GCMs is not trivial. The problem has been discussed in a previous post here at RC, and the IPCC Third Assessment Report (TAR) also provides good background on this topic.

The climate projections presented in the IPCC AR4 come from the latest set of coordinated GCM simulations, archived at the Program for Climate Model Diagnosis and Intercomparison (PCMDI). This is the most important new information that AR4 contains concerning future projections. These climate model simulations (the multi-model data set, or just 'MMD') are often referred to as the AR4 simulations, but their official name is now CMIP3.

One of the most challenging and uncertain aspects of present-day climate research is the prediction of a regional response to a global forcing. The science of regional climate projections has progressed significantly since the last IPCC report. Still, several weaknesses of present-day climate models make accurate and detailed analysis difficult: slight displacements in circulation characteristics, systematic errors in energy and moisture transport, a coarse representation of ocean currents and processes, crude parameterisation of sub-grid-scale and land-surface processes, and overly simplified topography.

I think that, overall, the authors of chapter 11 have done a very thorough job, although there are a few points which I believe could be improved. Chapter 11 of AR4 WGI divides the world into continents or types of region (e.g. 'Small islands' and 'Polar regions') and discusses each separately. It provides a nice overview of the key climate characteristics of each region. Each section also gives a short round-up of model evaluations, discussing the models' weaknesses in reproducing regional and local climate characteristics.

Africa.

Evaluations of the GCMs show that they still have significant systematic errors in and around Africa, with excessive rainfall in the south, a spurious southward displacement of the Atlantic inter-tropical convergence zone (ITCZ), and insufficient upwelling in the seas off the western coast.

The report asserts that the extent to which regional models can represent the local climate is unclear, and that the limitations of empirical downscaling are not fully understood. It is nevertheless believed that land-surface feedbacks have a strong effect on regional climate characteristics.

For the future scenarios, the median of the MMD GCM simulations (SRES A1B) yields a warming of 3-4°C between 1980-1999 and 2080-2099 in all four seasons (~1.5 times the global mean response). The GCMs project an increase in precipitation in the tropics (ITCZ) and East Africa, and a decrease in the north and south (the subtropics).

Europe and the Mediterranean.

In general, chapter 11 states that the most rapid warming is expected in winter in northern Europe and in summer in southern Europe. The projections also suggest that mean precipitation will increase in the north but decrease in the south. Inter-annual temperature variability is also expected to increase.

A more detailed picture was drawn from the results of a research project called PRUDENCE, which downscaled a small number of TAR GCMs. There was not enough time to complete new dynamical downscaling of the MMD.

The PRUDENCE results, however, are more appropriate for exploring uncertainties associated with regionalisation than for providing new scenarios for the future, since the downscaled results were based on a small selection of TAR GCMs.

I was therefore surprised to see such an extensive representation of the PRUDENCE project in this chapter, compared with other projects such as STARDEX and ENSEMBLES. (One explanation could be that the STARDEX results are used more in WGII, although apparently not cited. The ENSEMBLES results are not yet published. Besides, STARDEX is mentioned twice in section 11.10.)

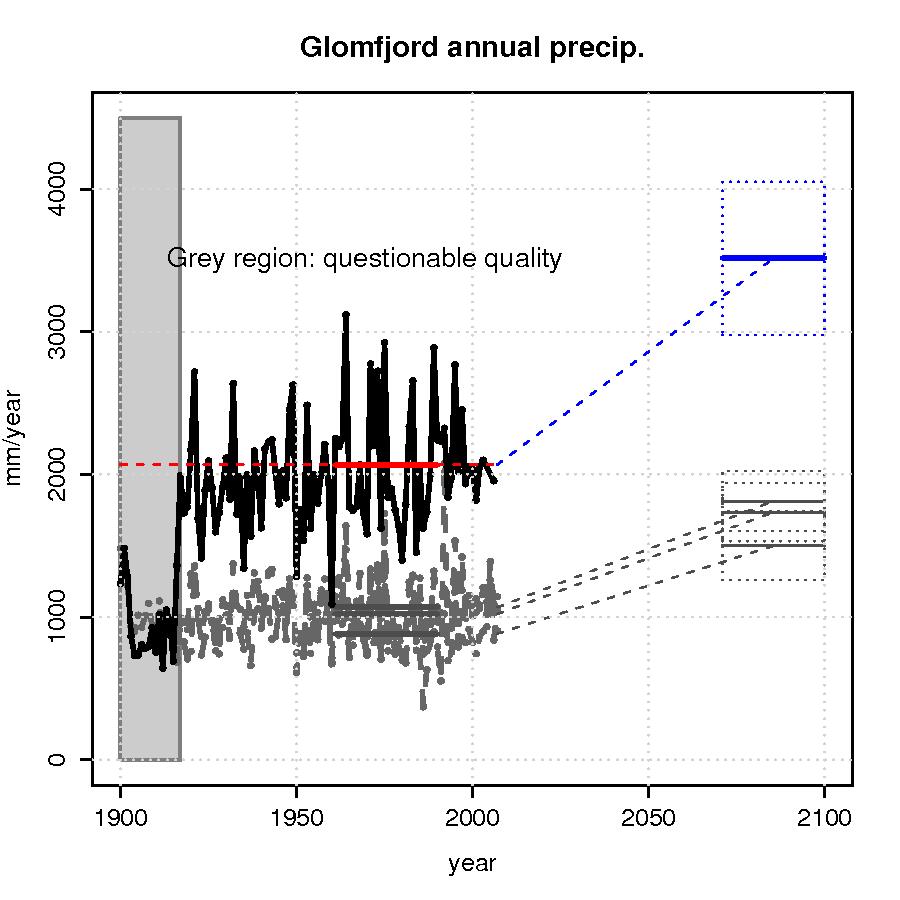

Some of the results for Europe presented in chapter 11 of IPCC AR4 strike me as strange. In Figure 11.6, the RCAO/ECHAM4 simulation from the PRUDENCE project yields an increase in precipitation of up to 70%(!) along the west coast of mid-Norway. Much of this is probably due to enhanced onshore winds, caused by a systematic lowering of sea-level pressure in the Barents Sea, and to the associated orographic forcing of rain.

The 1961-90 annual total precipitation measured at the rain gauge at Glomfjord (66.8100N/13.9813E; 39 m.a.s.l.) is 2069 mm/year. A 70% increase would therefore imply an increase to about 3500 mm/year (left figure), which in my opinion is unrealistic. Apart from a sudden jump in the early part of the Glomfjord record, there are no clear and prominent trends in the historical time series (left figure). The low values in the early part are questionable, as the neighbouring station series do not exhibit similar jumps or breaks. They are probably the result of a relocation of the rain gauge.

The water must come from somewhere. An increase in annual rainfall exceeding 1000 mm would therefore imply either a dramatic increase in evaporation from the Norwegian Sea area or a significant increase in moisture convergence. However, the whole region lies in the path of the storm tracks and is already wet, as the annual rainfall totals indicate.
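The arithmetic behind the Glomfjord numbers is easy to check. The sketch below uses only the 1961-90 normal and the 70% figure quoted in the text. The variable names are my own.

```python
# Back-of-the-envelope check of the Glomfjord numbers quoted in the text.
baseline_mm = 2069.0      # 1961-90 annual total at Glomfjord (from the text)
relative_increase = 0.70  # upper end of the RCAO/ECHAM4 PRUDENCE change (from the text)

absolute_increase_mm = baseline_mm * relative_increase  # extra rain per year
future_total_mm = baseline_mm + absolute_increase_mm     # implied future annual total

print(f"extra rainfall: {absolute_increase_mm:.0f} mm/year")
print(f"future total:   {future_total_mm:.0f} mm/year")
```

The implied extra rainfall alone is roughly 1450 mm/year. This is the "more than 1000 mm" that would have to be supplied by extra evaporation or moisture convergence.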

There are large local variations here (see the grey curves in the left figure for nearby stations), and Glomfjord has high annual rainfall compared with other sites in the same area. Nearby sites (adjacent valleys, etc.) also have annual totals exceeding 1000 mm, and even there a 70% increase would be quite substantial.

However, one may ask whether the rainfall at Glomfjord could change at a different rate from that of its surroundings. At present, this question can only be addressed with empirical-statistical downscaling (ESD), as RCMs clearly cannot resolve the spatial scales required.

To be fair, another PRUDENCE scenario presented in the same figure, based on the HadAM3H model rather than ECHAM4, suggests a maximum precipitation increase over northern Europe of 20%, found over northern Sweden.

Asia.

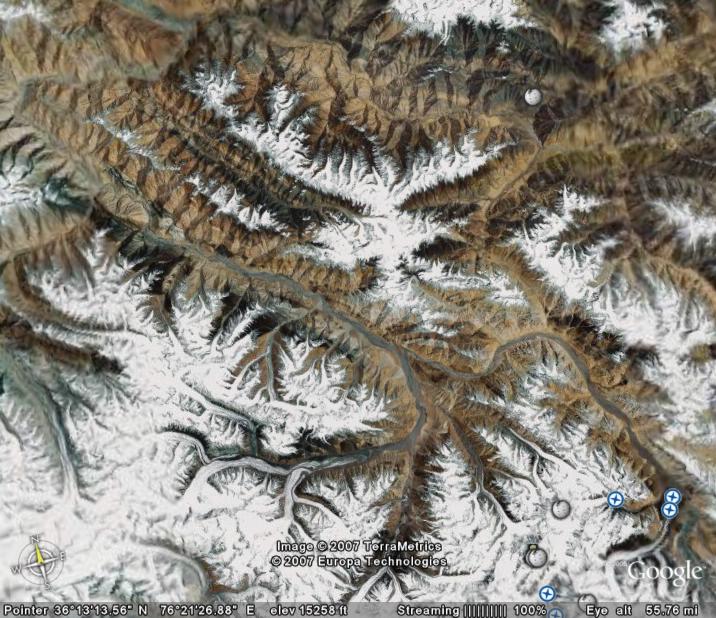

One of the key climate characteristics of Asia is the southeast monsoon system. Chapter 11 suggests that the circulation associated with the monsoon may slow down, but that the moisture content of the air may increase. Most climate models simulate the general seasonal migration of rain. However, many represent the observed monsoon rainfall maxima poorly along the west coast of India, over the northern Bay of Bengal and over northern India (probably because the spatial resolution of the GCMs is too coarse).

The GCMs also most likely have significant problems describing precipitation over Tibet, due to pronounced small-scale geographical features and distorted albedo feedbacks. Nevertheless, the net effect of a weaker circulation and moister air may be an increase in monsoon rainfall.

The Asian climate is also influenced by ENSO, so uncertainties in how ENSO will be affected by AGW cascade into the projections for Asia.

There are, however, indications that heat waves will become more frequent and more intense. Furthermore, the MMD models suggest a decrease in the December-February precipitation and an increase in the remaining months. The models also project more intense rainfall over large areas in the future.

North America.

The general picture is that the GCMs provide a realistic representation of mean sea-level pressure (SLP) and 2-m temperature (T(2m)) over North America. However, they tend to over-estimate rainfall over the western and northern parts.

The MMD results project the strongest winter-time warming in the north and the strongest summer-time warming in the southwest USA. According to AR4, annual mean precipitation is likely to increase in the north and decrease in the southwest.

A stronger warming over land than over sea may possibly affect the sub-tropical high-pressure system off the west coast, but there are large knowledge gaps associated with this aspect.

The projections are associated with a number of uncertainties concerning dynamical features:

- ENSO;
- the storm track system (the GCMs indicate a poleward shift, an increase in the number of strong cyclones, and a reduction in medium-strength storms poleward of 70N and in Canada);
- the polar vortex (the GCMs suggest an intensification);
- the Great Plains low-level jet;
- the North American monsoon system;
- ocean circulation;
- the future evolution of snow extent and sea ice.

Some of these phenomena are not well represented by the GCMs because the models' spatial resolution is too coarse. The same goes for tropical cyclones (hurricanes), whose frequency, intensity and track statistics remain uncertain.

A number of RCM-based studies provide further regional detail (e.g. the North American Regional Climate Change Assessment Program). AR4 notes that RCMs have improved. It also states that RCM simulations are sensitive to the choice of domain and to the parameterisation of moist convection (the representation of clouds and precipitation). Finally, biases appear in the RCM results when GCMs rather than re-analyses provide the boundary conditions.

Furthermore, most RCM simulations cover time slices that are too short to provide a proper statistical sample for studying natural variability. The AR4 chapter makes no reference to ESD for North America except in the discussion of snow projections.

Latin America.

AR4 states that annual precipitation is likely to decrease in most of Central America and the southern Andes. However, the mountains may have pronounced local effects, and changes in the atmospheric circulation may produce large local variations.

The projections of seasonal mean rainfall statistics for, e.g., the Amazon forest are highly uncertain. One of the greatest sources of uncertainty is how the character of ENSO may change, and there are large inter-model differences within the MMD as to how ENSO will be affected by AGW. Furthermore, most GCMs have a small signal-to-noise ratio over most of Amazonia. Feedbacks from land use and land cover (including the carbon cycle and dynamic vegetation) are not well represented in most of the models.

Tropical cyclones also increase the uncertainty for Central America, and in some regions tropical storms contribute a significant fraction of the rainfall. However, there has been little research on climate extremes and their projection in Latin America.

According to AR4, deficiencies in the MMD models have a serious impact on the representation of local low-latitude climates, and the models tend to simulate ITCZs which are too weak and displaced too far to the south. Hence the rainfall over the Amazon basin tends to be under-estimated in the GCMs, and conversely over-estimated along the Andes and northeastern Brazil.

There are few RCM simulations for Latin America, and those that have been performed are constrained by short simulation lengths. The RCM results tend to be degraded when the boundary conditions are taken from GCMs rather than re-analyses. Surprisingly, there is no reference to ESD-based studies for Latin America. This is despite ESD being much cheaper to carry out, with the length of the time interval not being an issue (I'll comment on this below).

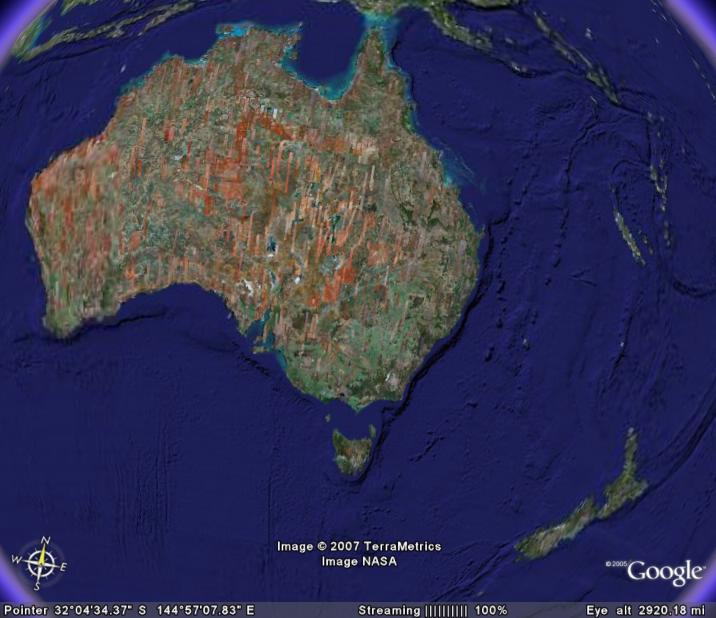

Australia & New Zealand.

The projections for Australia and New Zealand suggest weaker warming in the south and an increased frequency of high daily temperatures. According to the MMD, precipitation will decrease over most of Australia. Southern Australia will likely see more drought conditions in the future as a result of a poleward shift in the westerlies and the storm track.

The MMD projections for the monsoon rainfall show large inter-model differences, and the model projections for the future rainfall over northern Australia are therefore considered to be very uncertain.

Little has been done to assess the MMD skill over Australia and New Zealand. However, analyses suggest that the MMD models in general have a systematic low-pressure bias near 50S (and hence a southward displacement of the mid-latitude westerlies). The simulated seas around Australia also have a slight warm bias, and most models simulate too much rainfall in the north and too little on the east coast of Australia.

The quality of the simulated variability is also reported to be strongly affected by the choice of land-surface model.

Projections of changes in extreme temperatures for Australia and New Zealand have followed a simple approach. The range of variations is assumed to be constant while the mean is adjusted according to the GCMs, which shifts the entire statistical distribution. The justification is that changes in the range of short-term variations have been found to be small compared with changes in the mean.
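The "shifted distribution" approach can be illustrated with a short sketch. For illustration only, it assumes that daily maximum temperature is normally distributed. The mean, standard deviation, warming and threshold below are hypothetical numbers, not values from AR4. The essence of the approach is that the standard deviation stays fixed while the mean moves.

```python
import math

def exceedance_probability(threshold, mean, std):
    """P(X > threshold) for a normally distributed X (normality is an assumption here)."""
    z = (threshold - mean) / std
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Hypothetical numbers for illustration only (not from AR4)
present_mean, std = 30.0, 3.0   # daily summer maximum temperature, deg C
warming = 2.0                   # projected shift of the mean, deg C
hot_day = 35.0                  # fixed threshold defining a "hot day", deg C

p_now = exceedance_probability(hot_day, present_mean, std)
# Same std, shifted mean: the whole distribution moves as a block
p_future = exceedance_probability(hot_day, present_mean + warming, std)
print(f"P(hot day) now: {p_now:.3f}, future: {p_future:.3f}, ratio: {p_future / p_now:.1f}")
```

Even without any change in spread, the frequency of days above a fixed threshold rises by a sizeable factor. That is why this simple approach still produces marked changes in extremes.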

Analyses of rainfall extremes suggest that the return period of extreme rainfall episodes may halve by the late 21st century, even where mean rainfall decreases to some extent. AR4 anticipates an increase in tropical cyclone (TC) intensities, although there are no clear trends in frequency or location.
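The meaning of "halving the return period" can be made concrete with the standard relation between return period and annual exceedance probability, T = 1/p. The sketch assumes independent years and stationary statistics within each period. The 20-year event is a hypothetical example.

```python
def return_period(annual_exceedance_prob):
    """Average waiting time in years between events with the given annual exceedance probability."""
    return 1.0 / annual_exceedance_prob

def prob_at_least_one(annual_exceedance_prob, years):
    """Probability of at least one event in `years` independent years."""
    return 1.0 - (1.0 - annual_exceedance_prob) ** years

# Hypothetical example: today's 20-year rainfall event becomes a 10-year event
p_now = 1.0 / 20.0
p_future = 2.0 * p_now  # halving the return period doubles the annual probability

print(return_period(p_now), return_period(p_future))
print(prob_at_least_one(p_now, 30), prob_at_least_one(p_future, 30))
```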

Furthermore, TCs are influenced by ENSO, for which there is no clear indication of future behaviour. AR4 also states that wind over northern Australia may increase by up to 10%.

AR4 states that downscaled MMD-based projections are not yet available for New Zealand, although such results are badly needed because the mountains strongly affect the local climate. High-resolution regional modelling for Australia is also based on TAR or 'recent' runs with the global CSIRO climate model. A few ESD studies by Timbal and others suggest good performance in representing climatic means, variability and extremes of station temperature and rainfall.

Polar regions.

The Arctic is very likely to warm faster than the global mean (polar amplification), and precipitation is expected to increase while sea ice is reduced. The Antarctic is also expected to warm, albeit more moderately, and its precipitation is projected to increase too.

However, our understanding of the polar climate is still incomplete. Large efforts such as the International Polar Year (IPY) aim to address issues such as the lack of decent observations, clouds, boundary-layer processes, ocean currents and sea ice.

AR4 states that all atmospheric models have incomplete parameterisations of polar cloud microphysics and ice-crystal precipitation. However, the GCMs have generally improved since the TAR in terms of resolution, sea-ice modelling and cloud-radiation representation, and this has led to better simulations (assessed against re-analyses, as observations are sparse).

Part of the discussion of the polar regions in AR4 relies on the ACIA report (see here, here and here for previous posts) in addition to the MMD results. There are also some references to RCM studies, but none to ESD-based work (although some ESD analysis is embedded in the ACIA report). Very little work has been done on polar climate extremes, and the projected changes in the cryosphere are discussed in AR4 chapter 10.

The models generally provide a reasonable description of Arctic temperature. The exception is a 6-8C cold bias in the Barents Sea, caused by an over-estimated sea-ice extent (the absence of sea ice in the real Barents Sea can be explained by ocean surface currents that are not well represented in GCMs). The MMD models suggest that the winter-time NAO/NAM may become increasingly positive towards the end of the century.

One burning question is whether the ice sheets will respond through enhanced calving, ice-stream flow or basal melt. Any extra snow that accumulates in Antarctica 'removes' water from the oceans that would otherwise contribute to global sea level rise. Precipitation in Antarctica, and hence snowfall, is projected to increase, which partly offsets the sea-level rise.
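The sea-level effect of extra Antarctic snowfall can be estimated by spreading the stored water over the global ocean. The sketch assumes a fixed ocean area of about 3.61e14 m² and ignores changes in ocean area and other second-order effects. The 100 Gt/year figure is hypothetical, not an AR4 number. On these assumptions, roughly 361 Gt of water corresponds to 1 mm of global-mean sea level.

```python
OCEAN_AREA_M2 = 3.61e14   # global ocean surface area (~361 million km^2), assumed fixed
WATER_DENSITY = 1000.0    # kg m^-3, fresh water

def sea_level_equivalent_mm(mass_gt):
    """Global-mean sea-level change (mm) equivalent to a mass of water in gigatonnes.

    Mass stored on land (e.g. extra Antarctic snowfall) lowers sea level by this
    amount; the same mass added to the ocean raises it.
    """
    volume_m3 = mass_gt * 1e12 / WATER_DENSITY  # 1 Gt = 1e12 kg
    return volume_m3 / OCEAN_AREA_M2 * 1000.0   # metres -> millimetres

# Hypothetical example: 100 Gt/year of extra accumulation over Antarctica
print(f"{sea_level_equivalent_mm(100.0):.2f} mm/year of sea-level rise offset")
```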

Small islands.

AR4 concludes that sea levels will continue to rise, although the rise is not expected to be uniform. Furthermore, large inter-model differences make regional sea-level projections more uncertain. AR4 states that two groups of islands will likely face drier summer-time conditions in the future: the Caribbean islands in the vicinity of the Greater Antilles, and Mauritius (JJA). Islands in the northern Indian Ocean, by contrast, are believed to become wetter.

Most GCMs do not have sufficiently high spatial resolution to represent many of the islands. The GCM projections therefore really describe changes over the ocean surface rather than over land. Very little work has been done on downscaling the GCMs for individual islands, although there has been some ESD work for the Caribbean islands (AIACC).

Two sentences stating that 'The time to reach a discernible signal is relatively short (Table 11.1)' are a bit confusing, as the discussion refers to the 2080-2099 period (southern Pacific). After consulting the supplementary material, I think this means that the clearest climate change signal is seen during the first months of the year. Conversely, the same statement for the northern Pacific probably refers to the last months of the year.

Other challenges involve incomplete understanding of some climatic processes, such as the midsummer drought in the Caribbean and the ocean-atmosphere interaction in the Indian Ocean.

Many of the islands are affected by tropical cyclones, which the GCMs are unable to resolve and whose trends are uncertain, or at least controversial.

Empirical-statistical or Dynamical downscaling?

AR4 chapter 11 makes little reference to empirical-statistical downscaling (ESD) work in the main sections concerning future projections, and puts most of the emphasis on RCMs and GCMs. Only the section on the Assessment of Regional Climate Projection Methods (11.10) puts ESD more into focus.

I would have thought that this lack of balance might not be the IPCC's fault, since there may unfortunately be few ESD studies around. But according to AR4, 'Research on [E]SD has shown an extensive growth in application…'. If ESD studies really were scarce, that would be somewhat surprising. ESD is quick and cheap (here, here, and here), and it could have been applied to the more recent MMD, as opposed to the RCMs forced with the older generation of GCMs.

ESD provides diagnostics (R2 statistics, spatial predictor patterns, etc.) that can be used to assess the skill of both the GCMs and the downscaling. I would also have thought that ESD is an interesting climate research tool for countries with limited computer resources. It would make sense to apply ESD in climate research and impact studies to meet growing concerns about the local impacts of AGW.
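To make the diagnostics mentioned above concrete, here is a minimal sketch of one common flavour of ESD:

1. The large-scale field is compressed into its leading EOFs (the spatial predictor patterns).
2. A station series is regressed on the corresponding principal components.
3. Skill is measured as R² over an independent validation period.

All data are synthetic, and the dimensions, split and variable names are hypothetical. Real ESD work would use reanalysis predictors and observed station series, and would then apply the fitted model to GCM fields (for example, projected onto the same EOFs).

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic stand-ins (hypothetical): a gridded large-scale predictor field driven by
# three modes of variability, and a local station series that depends on two of them.
n_years, n_grid, n_modes = 60, 25, 3
latent = rng.standard_normal((n_years, n_modes))
patterns = rng.standard_normal((n_modes, n_grid))
field = latent @ patterns + 0.1 * rng.standard_normal((n_years, n_grid))
station = 1.5 * latent[:, 0] - 0.8 * latent[:, 1] + 0.3 * rng.standard_normal(n_years)

# Calibrate on the first 40 "years", validate on the last 20.
cal, val = slice(0, 40), slice(40, 60)

# Spatial predictor patterns: leading EOFs of the calibration-period anomalies.
clim = field[cal].mean(axis=0)
anom_cal = field[cal] - clim
_, _, vt = np.linalg.svd(anom_cal, full_matrices=False)
eofs = vt[:n_modes]
pcs_cal = anom_cal @ eofs.T
pcs_val = (field[val] - clim) @ eofs.T  # independent data projected onto the same EOFs

# Multiple linear regression of the station series on the PCs (with intercept).
X_cal = np.column_stack([np.ones(len(pcs_cal)), pcs_cal])
coef, *_ = np.linalg.lstsq(X_cal, station[cal], rcond=None)

X_val = np.column_stack([np.ones(len(pcs_val)), pcs_val])
predicted = X_val @ coef

# Skill diagnostic: R^2 between downscaled and "observed" values in the validation period.
r2_val = np.corrcoef(predicted, station[val])[0, 1] ** 2
print(f"validation R^2 = {r2_val:.2f}")
```

The EOF patterns (`eofs`) and the validation R² are the kind of diagnostics that can reveal whether a GCM reproduces the relevant large-scale patterns and whether the statistical link holds outside the calibration period.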

Comparisons between RCMs and ESD tend to show that they have similar skills, and the third assessment report (TAR) stated that ‘It is concluded that statistical downscaling techniques are a viable complement to process-based dynamical modelling in many cases, and will remain so in the future.’ This is re-phrased in AR4 as ‘The conclusions of the TAR that [E]SD methods and RCMs are comparable for simulating current climate still holds’.

So why were there so few references to ESD work in AR4?

I think that one reason is that ESD may often, unjustifiably, be seen as inferior to RCMs. What is often forgotten is that the same caveats apply to the parameterisation schemes embedded in GCMs and RCMs. These too are statistical models, with the same theoretical weaknesses as ESD models. AR4 does, however, acknowledge this: 'The main draw backs of dynamical models are … and that in future climates the parameterization schemes they use to represent sub-grid scale processes may be operating outside the range for which they were designed'. However, we may be on a slippery slope with parameterisation in RCMs and GCMs, as the errors feed back into the calculations for the whole system.

Moreover, a statement commonly heard, even among people who do ESD, is that dynamical downscaling is 'dynamically consistent' (not to be confused with 'coherent'). I question this statement, given several issues:

- gravity-wave drag schemes and the filtering of undesirable wave modes;
- parameterisation schemes (often not the same in the GCM and the RCM);
- the discretisation of continuous functions;
- conservation associated with time stepping, or with the lateral and lower boundaries (many RCMs ignore air-sea coupling).

RCMs certainly have some biases, yet they are also very useful (similar models are used for weather forecasting). The point is that there is uncertainty associated with dynamical downscaling as well as with ESD. That is why it is so useful to have two completely independent approaches, such as ESD and RCMs, for studying regional and local climate. One should not lock onto only one approach when there are several good ones. The two approaches should be viewed as complementary and independent research tools. Both ought to be used, both to better understand the uncertainties associated with regionalisation and to obtain firmer estimates of the projected changes.

Unfortunately, chapter 11 of AR4 WGI has not taken full advantage of using different methods, which would have made the results even stronger. When this point was brought up during the review, the response from the authors of the chapter was:

This subsection focuses on projections of climate change, not on methodologies to derive the projections. ESD results are taken into account in our assessment but most of the available studies are too site- or area-specific for a discussion within the limited space that is available.

So perhaps there should be more coordinated ESD efforts for the future?

On a different note, the fact that the comments and responses are available online demonstrates that the IPCC process is indeed transparent. It also shows that, on the whole, the authors have done a very decent job. The IPCC AR4 is the state-of-the-art consensus on climate change. One thing that could improve the process would be to initiate more real-time dialogue and discussion between the reviewers and the authors, in addition to the current one-way comment feed.

Uncertainties

One section of IPCC AR4 chapter 11 is devoted to uncertainties. It ranges from how to estimate probability distributions to the additional uncertainties introduced through downscaling. For instance, different solutions are obtained for the temperature change statistics depending on the approach chosen. AR4 seems to convey the message that approaches based on model performance in reproducing past trends may introduce further uncertainties, for two reasons:

(a) models may not be able to reproduce the historical (linear) evolution;
(b) the relationship between the observed and predicted trend rates may change in the future.

Sometimes the uncertainty has been dealt with by weighting different models according to their biases and their convergence towards the projected ensemble mean. The convergence criterion seems to favour the 'mainstream' runs.
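One way of weighting models by both bias and convergence is the reliability ensemble averaging (REA) idea of Giorgi and Mearns, sketched below in simplified form. It is not necessarily what any study assessed in AR4 used. Each model's weight is a bias factor times a distance-from-consensus factor. Both factors are capped at 1 and scaled by a natural-variability threshold `eps`, and the consensus is recomputed iteratively. All numbers are hypothetical.

```python
def rea_weighted_mean(changes, biases, eps, n_iter=50):
    """Simplified REA-style weighted ensemble mean (illustrative sketch).

    changes: projected change from each model
    biases:  each model's present-day bias (same units)
    eps:     natural-variability threshold; deviations within eps are not penalised
    """
    # Bias factor: 1 if the bias is within natural variability, smaller otherwise
    r_bias = [1.0 if abs(b) <= eps else eps / abs(b) for b in biases]

    mean = sum(changes) / len(changes)  # start from the unweighted ensemble mean
    weights = [1.0] * len(changes)
    for _ in range(n_iter):
        # Convergence factor: penalise models far from the current weighted mean
        r_dist = [1.0 if abs(c - mean) <= eps else eps / abs(c - mean) for c in changes]
        weights = [rb * rd for rb, rd in zip(r_bias, r_dist)]
        mean = sum(w * c for w, c in zip(weights, changes)) / sum(weights)
    return mean, weights

# Hypothetical regional warming projections (deg C) and present-day biases (deg C)
changes = [2.8, 3.1, 3.0, 3.3, 5.5]
biases = [0.5, -1.0, 0.3, 2.0, 1.5]
rea_mean, weights = rea_weighted_mean(changes, biases, eps=0.6)
print(f"unweighted mean: {sum(changes) / len(changes):.2f}, REA-style mean: {rea_mean:.2f}")
print("weights:", [round(w, 2) for w in weights])
```

The convergence factor is what favours the "mainstream" runs. The model with the largest bias (index 3) sits near the consensus and keeps a moderate weight. The outlying model (index 4), whose bias is smaller, ends up with the smallest weight.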

AR4 stresses that multi-model ensembles only explore a limited range of the uncertainty. This is an important reminder for those interpreting the results. Furthermore, it is acknowledged that trends in large-area and grid-box projections are often very different from local trends within the area.

[[Isn’t the sea level pretty constant across the globe?]]

Surprisingly, no. It varies locally depending on latitude (Earth’s differential rotation rate north and south), local gravitational anomalies, currents, differences in salinity and temperature, and occasionally exotic happenings like underwater earthquakes or volcanoes. The general sea level rises under global warming, but not uniformly in all places.

[[Leading scientists have said that all of our efforts to reduce CO2 emissions won’t make a bit of difference. ]]

WHAT leading scientists? The ones I’ve heard say we can mitigate the worst effects if we act now.

Timothy Chase said…

Well, one point that gets mentioned quite often is the fact that the climate models themselves are grounded in the actual physics.

Tim, real physical systems are dynamical systems. The meaning of dynamical is quite broad, but basically they are all the same in that they mean time-dependent functions.

They aren’t statistical but based upon the principles of physics such as the equations describing radiation emission, fluid flow and partial pressures.

Yes, physics principles are still applied. Dynamical systems with known structures (structural equations) are built using physics principles. Here is an example.

Applying Newton's laws of motion to an object that moves horizontally on a surface and is attached at one end to a wall by a spring gives:

m*y''(t) = F(t) - a*y'(t) - b*y(t)

where y(t) is the position, y'(t) the velocity, y''(t) the acceleration, a the (constant) viscous friction coefficient, b the spring constant, m the mass of the object and F(t) the applied force.

You can solve the above linear mass-spring-wall system analytically for y(t). Once a solution is found, you can evaluate the behaviour at any instant, e.g. y(t1) = y1 at t = t1.

Note that the above dynamical system is a monovariable one, and not coupled. Climate systems are multi-coupled (nested coupled) and highly non-linear.

Also note that if a, b and m are time-varying parameters, i.e. a(t), b(t) and m(t), the equation becomes:

m(t)*y''(t) = F(t) - a(t)*y'(t) - b(t)*y(t)

Strictly speaking, this is still linear in y (a linear system with time-varying coefficients). But even with this single-variable system you generally lose the closed-form solution, and you already face difficulties in finding solutions without resorting to numerical methods.
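Both forms of the equation can be handled by the same numerical routine. The sketch below integrates m(t)·y'' = F(t) - a(t)·y' - b(t)·y with the classical fourth-order Runge-Kutta scheme. Constant coefficients are simply passed as constant functions. The coefficient values and the sinusoidal stiffness are hypothetical choices for illustration.

```python
import math

def simulate(m, a, b, F, y0, v0, t_end, dt=0.01):
    """Integrate m(t) y'' = F(t) - a(t) y' - b(t) y with classical RK4.

    m, a, b, F are functions of time. Returns lists of times and positions.
    """
    def rhs(t, y, v):
        # First-order system: y' = v, v' = (F - a v - b y) / m
        return v, (F(t) - a(t) * v - b(t) * y) / m(t)

    t, y, v = 0.0, y0, v0
    ts, ys = [t], [y]
    for _ in range(int(round(t_end / dt))):
        k1y, k1v = rhs(t, y, v)
        k2y, k2v = rhs(t + dt / 2, y + dt / 2 * k1y, v + dt / 2 * k1v)
        k3y, k3v = rhs(t + dt / 2, y + dt / 2 * k2y, v + dt / 2 * k2v)
        k4y, k4v = rhs(t + dt, y + dt * k3y, v + dt * k3v)
        y += dt / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += dt / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        t += dt
        ts.append(t)
        ys.append(y)
    return ts, ys

def constant(c):
    """Return a function of time that always returns c."""
    return lambda t: c

# Constant coefficients and a constant force: y settles at F/b = 0.5
ts, ys = simulate(constant(1.0), constant(1.0), constant(4.0), constant(2.0),
                  y0=0.0, v0=0.0, t_end=30.0)

# Time-varying stiffness b(t) (hypothetical), solved with the same routine
ts2, ys2 = simulate(constant(1.0), constant(0.5), lambda t: 4.0 + math.sin(t), constant(0.0),
                    y0=1.0, v0=0.0, t_end=30.0)
print(f"constant case: y(30) = {ys[-1]:.4f}; time-varying case: y(30) = {ys2[-1]:.4f}")
```

With constant coefficients and a constant force, the position should settle at the static equilibrium F/b, which gives a quick sanity check of the integrator.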

The climate sensitivity is a dynamical parameter, and I don't see why there is such a hoo-ha here at RealClimate about Dr. Schwartz's paper on sensitivity. The real climate is multivariable, as opposed to the simple single-variable example above. It is also a multi-coupled (nested) feedback dynamical system. Note that dynamical systems analysis still involves some statistical algorithms.

I am awaiting Gavin Schmidt's reply on why he thinks that Schwartz's AR(1) process with a single timescale is an over-simplification, an issue that I have raised in the other thread.
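For reference, an AR(1) process has a single timescale. Its autocorrelation decays as r(Δt) = exp(-Δt/τ), so τ can be estimated from the lag-1 autocorrelation as τ = -Δt / ln r(Δt). The sketch below recovers a known τ from a synthetic AR(1) series. It illustrates the relation only and does not reproduce Schwartz's analysis. The values of `phi` and the series length are arbitrary choices.

```python
import math
import random

def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a sequence."""
    n = len(x)
    mean = sum(x) / n
    dev = [xi - mean for xi in x]
    num = sum(dev[i] * dev[i + 1] for i in range(n - 1))
    den = sum(d * d for d in dev)
    return num / den

def ar1_timescale(x, dt=1.0):
    """Relaxation time implied by an AR(1) description: r(dt) = exp(-dt / tau)."""
    return -dt / math.log(lag1_autocorrelation(x))

# Synthetic AR(1) series with a known timescale (hypothetical parameters)
random.seed(1)
phi = 0.9
x = [0.0]
for _ in range(20000):
    x.append(phi * x[-1] + random.gauss(0.0, 1.0))

true_tau = -1.0 / math.log(phi)  # about 9.5 time steps
est_tau = ar1_timescale(x)
print(f"true tau = {true_tau:.2f}, estimated tau = {est_tau:.2f}")
```

If a series actually mixes several timescales, the τ obtained this way depends on the lag used. Comparing estimates at different lags is one practical way to test whether a single-timescale description is adequate.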

Re 63 – I think there may be points I could better clarify, but that will have to wait a day or two – except for this:

If you divide the climate system into a number of boxes, then you can define fluxes of various quantities into and out of the boxes, including SW and LW radiative fluxes and convective fluxes. There is also some quantity present in each box. A climatic equilibrium exists if, averaged over time, the quantity present in each box is not changing. The averaging period should be at least one year, to cover all seasons, and several years would be better, to average over interannual variability. A steady quantity means that the combined effect of all fluxes is balanced. It also implies that the time average of each individual flux is not changing, because some variation in climate would be necessary to change a flux. The exception is the solar radiation flux (although climate can alter it via albedo, etc.), and variations in that flux would cause climate changes anyway.
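The box-and-flux picture can be illustrated with the simplest possible case. A single box changes its heat content only through the net of two fluxes: an absorbed solar flux with a seasonal cycle, and a linearised outgoing longwave flux A + B·T. All parameter values are hypothetical, chosen only to be of a plausible order of magnitude. After spin-up, the instantaneous net flux still swings through the seasons. Its annual mean, however, approaches zero and the annual-mean temperature stops changing, which is the equilibrium condition described above.

```python
import math

# Hypothetical one-box energy balance (illustrative values only):
#   C dT/dt = S(t) - (A + B*T)
C = 2.0e8                     # heat capacity, J m^-2 K^-1 (roughly a 50 m ocean mixed layer)
A, B = 210.0, 2.0             # outgoing longwave A + B*T, W m^-2 and W m^-2 K^-1 (T in deg C)
S_MEAN, S_AMP = 240.0, 100.0  # absorbed solar flux and its seasonal amplitude, W m^-2

DT = 86400.0                  # one-day time step, s
STEPS_PER_YEAR = 365

def absorbed_solar(step):
    """Absorbed solar flux with a simple sinusoidal seasonal cycle."""
    phase = 2.0 * math.pi * (step % STEPS_PER_YEAR) / STEPS_PER_YEAR
    return S_MEAN + S_AMP * math.sin(phase)

T = 0.0
n_years = 30
net_flux_last_year, temp_last_year = [], []
for step in range(n_years * STEPS_PER_YEAR):
    net = absorbed_solar(step) - (A + B * T)    # combined effect of all fluxes into the box
    if step >= (n_years - 1) * STEPS_PER_YEAR:  # record the final year only
        net_flux_last_year.append(net)
        temp_last_year.append(T)
    T += DT / C * net                           # heat content changes only through the net flux

annual_mean_net = sum(net_flux_last_year) / STEPS_PER_YEAR
annual_mean_T = sum(temp_last_year) / STEPS_PER_YEAR
print(f"annual-mean net flux: {annual_mean_net:.3f} W/m2, annual-mean T: {annual_mean_T:.2f} C")
```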

For local or regional boxes, there will be horizontal fluxes of heat. Radiation is not important for horizontal fluxes, because horizontal temperature gradients are too weak for much net LW radiant flux. Winds and currents are the most important contributors to horizontal fluxes (of heat, and also of momentum, kinetic energy and angular momentum). Globally averaged, horizontal fluxes cancel out.

If evaporation at the surface increases, the water cycle speeds up and the convective flux from the surface increases. At equilibrium, this must be balanced either by an increase in downward SW or LW flux or by a decrease in upward flux. This is determined partly by the lapse rate itself and partly by changes in atmospheric optical properties (which include water vapor feedback and clouds as well as the initial change in greenhouse gases). So the equilibrium evaporation rate cannot be determined by the surface temperature alone, because the whole system changes.

Water vapor also absorbs some SW flux, and a part of water vapor feedback would be to reduce the SW flux reaching the surface – this would tend to require a reduced convective flux at equilibrium. (However, this doesn’t affect the surface temperature so much because most of the SW flux removed from the surface is still being absorbed within the troposphere, and as mentioned before, the temperature changes at all levels in the troposphere and at the surface are coupled via the tendency of convection to maintain a particular lapse rate.) But the LW feedback from water vapor at the surface, which is, when warm enough and moist enough, a decrease in net upward LW flux from the surface (especially at tropical temperatures), is a stronger effect than the SW flux change, and that would tend to enhance convection. (PS see also http://www.gfdl.noaa.gov/reference/bibliography/2006/wdc0602.pdf – although keep in mind that much of that is not for globally averaged conditions) (See also “Global Physical Climatology”, Hartmann, 1994, especially p.246)

In the tropics, the temperature dependence of the moist adiabatic lapse rate (which is the lapse rate the convection will tend to maintain in the troposphere) is such that surface warming is less than mid-to-upper tropospheric warming (PS that could tend to require greater convective fluxes from the surface via its effect on LW fluxes). However, it is the reverse in the polar regions – not because the moist adiabatic lapse rate behaves oppositely (although if I have this correctly, it should be less sensitive to temperature changes at lower temperatures – and for that matter, evaporation rates should also be less sensitive at lower temperatures). Rather, I think it is because the air in polar regions tends to be more stable to convection to begin with. Some heating of the poles comes from the lower latitudes through the atmosphere itself. So the positive feedback from greater solar radiation absorption (from decreases in snow and ice), because it occurs at the surface, should raise the temperature near the surface, and the stability of the air allows near-surface temperature increases without strong convective coupling to the rest of the troposphere.
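The temperature dependence described above can be illustrated with a rough Python sketch of the saturated (pseudo)adiabatic lapse rate at 1000 hPa. It uses a standard textbook expression together with Bolton's (1980) fit for saturation vapor pressure. The constants are rounded, the latent heat is held fixed, and the 1000 hPa level is an assumption chosen for illustration. The lapse rate shrinks as temperature rises, so along a moist adiabat a given surface warming implies larger warming aloft.

```python
import math

G = 9.81        # gravitational acceleration, m s^-2
CP_D = 1004.0   # specific heat of dry air at constant pressure, J kg^-1 K^-1
R_D = 287.0     # gas constant for dry air, J kg^-1 K^-1
L_V = 2.5e6     # latent heat of vaporization, J kg^-1 (held constant here)
EPS = 0.622     # ratio R_d / R_v

def saturation_vapor_pressure_hpa(t_k):
    """Bolton (1980) fit over liquid water; t_k in kelvin, result in hPa."""
    t_c = t_k - 273.15
    return 6.112 * math.exp(17.67 * t_c / (t_c + 243.5))

def saturation_mixing_ratio(t_k, p_hpa):
    """Saturation mixing ratio in kg of vapor per kg of dry air."""
    e_s = saturation_vapor_pressure_hpa(t_k)
    return EPS * e_s / (p_hpa - e_s)

def moist_adiabatic_lapse_rate(t_k, p_hpa=1000.0):
    """Saturated (pseudo)adiabatic lapse rate in K per km."""
    r_s = saturation_mixing_ratio(t_k, p_hpa)
    num = 1.0 + L_V * r_s / (R_D * t_k)
    den = CP_D + L_V**2 * r_s * EPS / (R_D * t_k**2)
    return 1000.0 * G * num / den

DRY_LAPSE_RATE = 1000.0 * G / CP_D  # K per km

rates = {t: moist_adiabatic_lapse_rate(t) for t in (250.0, 270.0, 290.0, 300.0)}
```

Under these assumptions the sketch gives roughly 3.7 K/km at 300 K, against a dry adiabatic rate of about 9.8 K/km.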

Re #96: [You sit in front of a computer knowing there is a hospital down the road…]

I think you’re conflating a lot of issues involving stable political & social systems into your definition of poverty. It’s certainly quite possible to be killed by a roving band of looters in the US or other wealthy countries, but unheard of in many “poor” countries. Likewise, being in an immigration camp is a matter of social systems and personal choices (and in any case, would be made far more likely by AGW).

[…but don’t jump all over people showing true compassion for the poverty stricken.]

I had not the slightest intention of jumping on people showing true compassion – not that I’ve seen many of those lately. It’s the hypocrites weeping crocodile tears about how they can’t change their precious lifestyles to help alleviate AGW because it might be “hindering the rise of millions from poverty” that I was aiming at.

And re #97: [I would contend that those without access to adequate healthcare, nutrition or education could be termed poor.]

And I would agree. My point is that those can be had at a very small fraction of the cost – measured either in money or in energy – of maintaining a western consumerist lifestyle. Though I do see a certain irony in the fact that although most in the west have access to those, damned few choose to make use of them.

Re: #103 (Falafulu Fisi)

Are you sure you know the difference between a linear and nonlinear differential equation?

On the contrary, this is a linear diff.eq., because its homogeneous part m(t)*y”(t) + a(t)*y’(t) + b(t)*y(t) = 0 has the property that if f(t) and g(t) are any two solutions, then so is c1*f(t) + c2*g(t) for any two constants c1 and c2.
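The superposition property can be checked numerically. The Python sketch below integrates m(t)*y'' + a(t)*y' + b(t)*y = 0 with classical fourth-order Runge-Kutta for hypothetical coefficient functions. It then compares the solution started from combined initial data with c1*f + c2*g. Adding a hypothetical cubic term cubic*y**3 makes the equation nonlinear, and the property then fails.

```python
import math

# Hypothetical time-varying coefficients; any smooth choice with m(t) != 0 would do.
def m(t):
    return 1.0 + 0.5 * math.sin(t)

def a(t):
    return 0.2 * math.cos(t)

def b(t):
    return 2.0 + t

def rhs(t, y, v, cubic):
    """y'' = -(a y' + b y + cubic * y**3) / m; cubic = 0 gives the linear equation."""
    return v, -(a(t) * v + b(t) * y + cubic * y**3) / m(t)

def solve(y0, v0, cubic=0.0, t_end=5.0, n=5000):
    """Classical fourth-order Runge-Kutta; returns y(t_end)."""
    h = t_end / n
    t, y, v = 0.0, y0, v0
    for _ in range(n):
        k1y, k1v = rhs(t, y, v, cubic)
        k2y, k2v = rhs(t + h / 2, y + h / 2 * k1y, v + h / 2 * k1v, cubic)
        k3y, k3v = rhs(t + h / 2, y + h / 2 * k2y, v + h / 2 * k2v, cubic)
        k4y, k4v = rhs(t + h, y + h * k3y, v + h * k3v, cubic)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        t += h
    return y

def superposition_gap(c1, c2, cubic=0.0):
    """|solution from combined initial data - (c1*f + c2*g)| at t_end."""
    f = solve(1.0, 0.0, cubic)     # f(0) = 1, f'(0) = 0
    g = solve(0.0, 1.0, cubic)     # g(0) = 0, g'(0) = 1
    combo = solve(c1, c2, cubic)   # initial data c1*(1, 0) + c2*(0, 1)
    return abs(combo - (c1 * f + c2 * g))
```

With cubic = 0 the gap stays at rounding-error level. With cubic = 1 it is much larger.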

re 93

We are still fouling our nests to the point where it will no longer sustain us.

It’s a pessimistic view, not invalid, but only one way of looking at it.

==================

No.

It is a realistic view. There is a difference.

You continue: “The human race is nothing short of miraculous. Around six billion and counting, and our worst impact is to raise CO2 levels”

I would again disagree. The rise of CO2 and like GHGs is a BYPRODUCT of the manner in which we have been conducting business, including the fouling of our nest.

There is nothing “miraculous” about what we are doing, by the way, only the likely inevitable byproduct of how we’ve evolved as toolmakers.

re 68

Leading scientists have said that all of our efforts to reduce CO2 emissions won’t make a bit of difference. I don’t think you consider the practicality of what is proposed.

====================

Not to belabor the obvious, but you might find this interesting:

“Confronting Climate Change: Avoiding the Unmanageable and Managing the Unavoidable”

http://www.unfoundation.org/SEG/

See also

http://www.sigmaxi.org/about/news/UNSEGReport.shtml

I (#70) wrote:

Falafulu Fisi (#107) wrote:

I (#70) wrote:

Falafulu Fisi (#107) wrote:

If we were trying to predict the weather on a particular day in a particular place forty years from now, your criticism might carry some weight. But we are not. The climate models are used to predict the climate forty years from now or a hundred years from now based on a given emissions scenario. They vary the initial conditions. They will have multiple runs. They will use different models. They may use as many as a thousand different runs.

Based upon the similarities between the results, they are able to determine which aspects of the results are robust – such as the average global temperature, the severity of the weather in Europe, the expansion of the Hadley cells and consequent drying out of the United States, the variability of the weather, the propensity for and severity of heat waves, droughts, etc. Not the weather on a given day or hour for a particular city, but the climate for a given latitude, region, and time of the year. However, climatologists are becoming confident that they can predict what will happen next year (for example, the Hadley Centre, when they initialize different runs with consecutive days of real-world data) or for particular cities (NASA is beginning to do this for some east coast cities).

The weather is a largely unpredictable and chaotic moving point nested in an attractor which evolves in phase space over time in a fairly predictable manner. Ensembles of runs give them the chance to explore that attractor in a fairly systematic fashion. This has been explained quite often at RealClimate. In fact, we probably have to explain it several times a week to “newcomers.”
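As a toy illustration of an ensemble exploring an attractor, the Python sketch below uses the fully chaotic logistic map x -> 4x(1 - x) in place of a climate model, as a deliberate simplification. Members start from initial conditions that differ by less than 1e-10 and quickly become uncorrelated. Even so, the ensemble sampled at a single moment reproduces the statistics of one long run. For this map the invariant density is 1/(pi*sqrt(x(1 - x))), whose mean is 1/2. The seed, member count, and run lengths are arbitrary choices.

```python
import random

def logistic_step(x):
    """Fully chaotic logistic map x -> 4x(1 - x) on [0, 1]."""
    return 4.0 * x * (1.0 - x)

def run(x0, n):
    """Iterate the map n times and return the whole trajectory."""
    xs, x = [], x0
    for _ in range(n):
        x = logistic_step(x)
        xs.append(x)
    return xs

# One long run: time statistics of a single trajectory (first 1000 steps dropped).
trajectory = run(0.3, 100_000)[1_000:]
time_mean = sum(trajectory) / len(trajectory)

# An ensemble: many short runs from almost identical starts, sampled at step 100.
rng = random.Random(1)
members = [run(0.3 + 1e-10 * rng.random(), 100)[-1] for _ in range(5_000)]
ensemble_mean = sum(members) / len(members)
ensemble_spread = max(members) - min(members)
```

The members end up spread across nearly the whole interval, yet ensemble_mean and time_mean agree closely.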

And as a matter of fact, I had already explained as much to you in the post that you were responding to.

I (#70) had written:

However, you may wish to check:

4 November 2005

Chaos and Climate

https://www.realclimate.org/index.php/archives/2005/11/chaos-and-climate/

12 January 2005

Is Climate Modelling Science?

https://www.realclimate.org/index.php/archives/2005/01/is-climate-modelling-science/

… and for some more recent posts which touch on this:

20 August 2007

Musings about models

https://www.realclimate.org/index.php/archives/2007/08/musings-about-models/

15 May 2007

Hansen’s 1988 projections

https://www.realclimate.org/index.php/archives/2007/05/hansens-1988-projections/

Falafulu Fisi (#107) wrote:

Schwartz’s highly oversimplified approach has been analyzed elsewhere. The Friday Roundup has a couple of links. So if you just can’t wait…

Please see:

Friday Roundup

https://www.realclimate.org/index.php/archives/2007/08/friday-roundup-2/

I would recommend, for example, James Annan’s:

Sunday, August 19, 2007

Schwartz’ sensitivity estimate

http://julesandjames.blogspot.com/2007/08/schwartz-sensitivity-estimate.html

You write, “The climate sensitivity is a dynamical parameter…”

Climate sensitivity falls out of the models – it isn’t something which they assume.

Please see:

3 August 2006

Climate Feedbacks

https://www.realclimate.org/index.php/archives/2006/08/climate-feedbacks/

With regard to realistic estimates of its value based upon empirical research, you might try:

24 March 2006

Climate sensitivity: Plus ça change…

https://www.realclimate.org/index.php/archives/2006/03/climate-sensitivity-plus-a-change/

… or James Annan’s:

Thursday, March 02, 2006

Climate sensitivity is 3C

http://julesandjames.blogspot.com/2006/03/climate-sensitivity-is-3c.html

Re 63 –

So, as implied by the discussion of polar tropospheric stability, the heat transported by the air to high latitudes must come from lower latitudes, and that will affect tropical and subtropical heat budgets. Middle latitudes are in there too. So locally and regionally, even in the time average, climatic equilibrium will still allow the vertical fluxes to be unbalanced (that includes water vapor and its latent heat content, etc., and goes for momentum and angular momentum as well). That relaxes the constraint on regional convective fluxes I had discussed earlier. But globally averaged, the horizontal fluxes (of all conserved quantities) cancel (or, for non-conserved quantities, rates of creation and destruction balance when averaged globally and over time in equilibrium), and so the vertical fluxes must be balanced globally in an equilibrium state.
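Here is a minimal Python sketch of the bookkeeping above. It assumes a hypothetical pole-to-pole chain of equal-area boxes that exchange heat down-gradient with their neighbours, as a crude stand-in for transport by winds and currents. Each box gains or loses heat horizontally, so its time-mean vertical fluxes need not balance. Over the whole chain, however, the horizontal terms telescope and sum to zero.

```python
def horizontal_convergence(temps, k=1.0):
    """Heat gained by each box from down-gradient exchange with its neighbours.

    Boxes form a pole-to-pole chain of equal-area columns (a hypothetical
    simplification); no heat crosses the two ends. The flux from box i to
    box i+1 is k * (T_i - T_{i+1}), with k a hypothetical exchange coefficient.
    """
    fluxes = [k * (temps[i] - temps[i + 1]) for i in range(len(temps) - 1)]
    conv = []
    for i in range(len(temps)):
        inflow = fluxes[i - 1] if i > 0 else 0.0
        outflow = fluxes[i] if i < len(fluxes) else 0.0
        conv.append(inflow - outflow)
    return conv

# Hypothetical box temperatures (K), warmest in the middle ("tropics").
temps = [250.0, 270.0, 290.0, 300.0, 290.0, 270.0, 250.0]
conv = horizontal_convergence(temps)

# At equilibrium, each column's net vertical (radiative + surface) gain offsets conv.
vertical_imbalance = [-c for c in conv]
```

The polar boxes gain heat horizontally and so must have a net vertical loss, while the tropical box is the reverse. The sum over all boxes is zero.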

——–

MORE ON ALBEDO FEEDBACKS

The reason why near-surface temperature increases at high latitudes will be greatest around wintertime:

1. a conceivable reason would be that this is when the lapse rate is least conducive to vertical convection of heat away from the surface (remember the positive surface albedo feedback acts to increase SW heating of the surface). However, I’m guessing reason 2 is a bigger factor:

2. SW heating at high latitudes occurs mainly in the sunny seasons, not winter. And the increase in SW heating from loss of snow and ice area would be stronger in the summer than in the winter. Another reason for that would occur in areas where snow and ice are present year-round to begin with, so that the feedback starts in summer only. However, over the ocean, the temperature will not change much in summer because of the large heat capacity of the water that will be absorbing more SW radiation. But in winter, the water must lose heat to the air upon freezing, and after freezing over, the air is insulated from the water and can drop in temperature further; more summer heating means that a greater loss of heat will be required in winter to freeze and that freezing will take longer, so the winter temperature of the air is increased. Now, it occurs to me that this mechanism does not apply so much to land – snow has some ability to insulate, of course, but the heat capacity is not the same as the ocean’s. However, it also occurs to me that a little equatorward, where snow and ice are mainly winter phenomena (except in the mountains), and where there is still some SW radiation in winter, this could explain a wintertime maximum in the temperature increase from the albedo feedback.
However, albedo feedback will tend to be weaker on land because land itself has a higher albedo than water (although water at high latitudes will have a higher albedo than in the tropics because of the more glancing angle of the sun, I think the water still has a relatively low albedo compared to many land surfaces). This is not so much the case for forests, but forest trees tend to stick out above snow, so the snow’s effect on albedo is not so great (an idea I once heard for a possible ice age trigger, or at least a cooling initiator, is the spreading of bogs into high-latitude forests, which could be caused by some kind of climate change, because bogs have a flatter surface that can be covered by snow in the winter).

Of course, there will be some albedo feedbacks from climate effects on vegetation. And then there’s clouds.

——–

Another visualization of LW radiation:

Within the atmosphere, at any level, there is LW flux going up and LW flux going down. The net upward LW flux is the upward flux minus the downward flux – it is a net energy flow rate. One can subdivide the atmosphere into arbitrary layers for visualization and numerical modelling purposes. At any given level within the atmosphere, the net upward LW flux is then the sum of the net LW fluxes between each pair of layers that has one member above and one member below that level (in this case, counting only the fluxes which are emitted by one layer and absorbed by the other layer in the pair). This includes pairs that treat the surface and space as ‘layers’: space ‘absorbs’ all LW flux that reaches it and emits essentially no LW back (like a very cold, very thick layer), and the surface also acts like a rather thick isothermal layer (absorbing most of the LW flux which reaches it). The net LW flux for any such pair will be from the warmer layer to the colder layer, and will be greater for a greater temperature difference – this is because it is the difference between the LW flux from one to the other and the LW flux in the reverse direction. This is true even if the layers are of different thicknesses, optically speaking – because at any given wavelength, the ability to emit is proportional to the ability to absorb (a very thin hot layer may not emit much, but then it will not absorb much either). The net LW flux will be smaller for thinner layers in general, but then there will be more such layers, so the sum will be the same (in numerical modelling, the sum may not be quite the same depending on the mathematical formulation, and the many thinner layers would generally give a more accurate result).
The net LW flux will also be greater for a pair of layers that are closer together, with fewer intervening layers in between; this last point is key for increasing opacity. If one keeps the layers at a set optical thickness, then increasing opacity requires dividing the atmosphere into a greater number of such layers, and across the same distance between two such layers, there will be a greater number of intervening layers. For a given temperature profile, this results in reduced net LW flux within a region where the temperature varies sufficiently slowly with distance, because more of the net LW fluxes are between pairs that are closer together and thus at more similar temperatures. However, for any given pair, if the temperature of both layers is raised by the same amount, then the net LW flux from the warmer layer to the colder layer can increase, because emission is not linearly proportional to temperature; this is especially true toward shorter LW wavelengths, less so at the longer wavelengths.
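The nonlinearity of emission can be illustrated with a short Python sketch. It assumes two blackbody layers that exchange radiation only with each other, which is a simplification since real layers are partly transparent. gray_net_flux uses the Stefan-Boltzmann law. spectral_net_gain uses the Planck function to compare the net exchange at one wavelength before and after both layers are warmed by the same amount. The temperatures and the 10 and 20 micrometre wavelengths are arbitrary choices.

```python
import math

H = 6.62607015e-34       # Planck constant, J s
C = 2.99792458e8         # speed of light, m s^-1
KB = 1.380649e-23        # Boltzmann constant, J K^-1
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

def planck(wavelength_m, t_k):
    """Spectral radiance B_lambda(T) in W m^-2 sr^-1 m^-1."""
    x = H * C / (wavelength_m * KB * t_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)

def gray_net_flux(t_warm, t_cold):
    """Net exchange (W m^-2) between two blackbody layers that see only each other."""
    return SIGMA * (t_warm**4 - t_cold**4)

def spectral_net_gain(wavelength_m, t_warm, t_cold, shift):
    """Ratio of the spectral net exchange after/before raising both layers by `shift` K."""
    before = planck(wavelength_m, t_warm) - planck(wavelength_m, t_cold)
    after = planck(wavelength_m, t_warm + shift) - planck(wavelength_m, t_cold + shift)
    return after / before
```

With 290 K and 270 K layers, warming both by 10 K raises the net exchange by roughly 11 percent at 10 micrometres but only about 4 percent at 20 micrometres.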

——–

Visualization N (N=? I don’t remember now how many ways I’ve described it):

For a given degree of opacity, you can (pretending you can see at LW wavelengths) see only so far, and less and less clearly going farther away. If everything you can see is at the same temperature, then the LW flux in every direction is the same, and so there is no net LW flux transporting a net amount of radiant energy. If there is a nonzero temperature gradient somewhere close enough to see, then there will be a nonzero net LW flux at your point of view; it will be larger the better the temperature variation can be seen (if it is closer or if it is a larger gradient), and the net flux will be from warmer to colder. For a given gradient, the net flux will be larger if the average temperature is raised, because LW emission rises faster than linearly with temperature – this is especially true at shorter wavelengths, not so much at the longer wavelengths.
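In the optically thick limit, where a photon's mean free path is short compared with the distance over which temperature changes, this picture reduces to radiative diffusion. The Python sketch below assumes a gray medium, meaning a single absorption coefficient kappa for all LW wavelengths, which real air is not, especially in the window region. The net flux grows with the gradient, grows as T cubed, and falls as 1/kappa, i.e. in proportion to the visibility distance. The gradient and kappa values are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def diffusion_net_flux(t_k, dt_dz, kappa):
    """Net LW flux (W m^-2, positive upward) in the optically thick limit.

    F = -(16 sigma T^3 / (3 kappa)) dT/dz, with kappa a gray absorption
    coefficient in m^-1, so 1/kappa is roughly a photon mean free path.
    """
    return -16.0 * SIGMA * t_k**3 / (3.0 * kappa) * dt_dz

lapse = -6.5e-3  # hypothetical gradient: temperature falling 6.5 K per km
base = diffusion_net_flux(260.0, lapse, kappa=1e-3)
```

Doubling kappa halves the flux, while raising T or steepening the gradient increases it.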

As opacity increases and reduces the amount of variation in temperature that can be seen, the general tendency (before the temperature distribution changes in response to the effect) is to reduce the net LW flux. However, if there is a relatively strong temperature variation within a small space relative to the distances one can see (an inline comment mentioned mean free paths of photons – this would be in proportion to visibility distances), then as opacity is increased:

For an anomalously warm or cold region within an otherwise isothermal field, the net LW fluxes out of or into that region will grow in size as the anomalous region becomes more opaque and thus a more effective emitter and absorber.

For a sharp gradient across a flat plane between two expansive regions of different temperatures extending as far as the visibility limits, the net flux across the gradient will not change, but the region across which that net flux exists will shrink, as the distance from which the gradient can be seen shrinks. Because of that, while the net flux across the gradient itself is unchanged (for a sufficiently sharp gradient relative to the amount of opacity), the rate of change of the net flux with distance must grow. That is, the convergence of the flux on one side and the divergence of the flux on the other side of the gradient become concentrated in a smaller space close to the gradient, so that the trend is from complementary large spaces of small cooling and heating rates toward complementary small spaces of large cooling and heating rates (the volume integral of the heating rate or cooling rate on either side stays constant until the visibility distance shrinks too much for the gradient to be considered relatively sharp in comparison).
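The sharp-gradient case can be made concrete with a deliberately simplified one-dimensional, two-beam gray model: one beam in each direction, with no angular integration. Region x < 0 is at t_left and region x > 0 is at t_right, and both extend indefinitely. Solving the two beam equations gives a net flux of sigma*(t_left**4 - t_right**4)*exp(-kappa*|x|). The flux at the interface therefore does not depend on kappa. As kappa grows, the heating is squeezed toward the interface, while its integral on each side stays equal to the interface flux. The Python sketch below checks this. Temperatures and kappa values are hypothetical, and x is measured in whatever length unit 1/kappa uses.

```python
import math

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def net_flux(x, t_left, t_right, kappa):
    """Net flux toward +x at position x (region x < 0 at t_left, x > 0 at t_right)."""
    return SIGMA * (t_left**4 - t_right**4) * math.exp(-kappa * abs(x))

def heating_rate(x, t_left, t_right, kappa):
    """-dF/dx for x != 0: heating on the cold side, cooling on the warm side."""
    delta_b = SIGMA * (t_left**4 - t_right**4)
    return math.copysign(1.0, x) * kappa * delta_b * math.exp(-kappa * abs(x))

def integrated_heating(t_left, t_right, kappa, length=20.0, n=20_000):
    """Midpoint-rule integral of the heating rate over 0 < x < length."""
    h = length / n
    return sum(heating_rate((i + 0.5) * h, t_left, t_right, kappa) for i in range(n)) * h
```

With t_left = 290 K and t_right = 270 K, raising kappa from 1 to 4 leaves net_flux(0, ...) unchanged and raises the heating just beside the interface. integrated_heating stays essentially equal to the interface flux in both cases.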

on my comment 25:

I think I was wrong about a thicker tropopause causing horizontally larger systems to be more likely; it is the increase in vertical static stability that would cause this (if that happens due to a decreasing moist adiabatic lapse rate at warmer temperatures) – and I expect it to affect cyclones and anticyclones asymmetrically… (because moist convection associated with cyclones would partly negate the effect of increased vertical static stability).

Anyway, I’m now wondering whether larger horizontal-scale anticyclones might tend to exist. And if so, and if combined with thicker lower-level thermal perturbations due to reduced lower-level wind shear, and if those thicker layers decay more slowly by LW radiative fluxes, and if other factors (SW absorption, etc.) do not counteract the effect, then … would bringing in air masses from greater distances toward developing fronts allow the occurrence of some frontal zones as strong as those found now, even while the average thermal gradient in the lower troposphere is reduced?

Models of atmospheric heat capture have evolved until we can debate them in detail without looking at the big picture. If just the atmosphere warms, then farmers can substitute sorghum for wheat, and civilization can go on. In the big picture, we have to consider what the follow on effects to atmospheric warming are, and how fast they occur.

Ice sheet modeling that really works is critical to understanding how global climate change is going to affect us. For now, our ice sheet models do not work. (Start with the excellent RC post of 26 June 2006 by Michael Oppenheimer.) Why are we spending our time refining atmospheric models that have some functionality when our dynamic ice sheet models do not work?

Consider, for example, that meltwater (or rain) can rapidly carry heat to the interior or to the base of an ice sheet – even during a brief polar summer. That heat facilitates deformation and lubrication processes that allow the ice to flow. However, the processes (mostly conduction and radiation) that can remove that heat operate at much lower rates. Then, once an ice sheet starts moving downhill, it converts potential energy to heat, which tends to facilitate the deformation and lubrication processes. The insulation provided by a huge thickness of ice tends to hold the heat within the body of the ice. In short, once a river of ice starts moving downhill, it takes a lot of very cold air to remove the heat and to slow it down. I just do not see a lot of chill coming down the pike to help us hold our ice sheets in place.
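Here is a back-of-envelope Python sketch of the two heat sources mentioned above. It assumes round textbook values for the specific heat of ice (about 2100 J/kg/K near 0 °C) and the latent heat of fusion (about 334 kJ/kg). It ignores heat loss, melting, and where in the ice the heat is actually released, so it only bounds the effect. The function names are hypothetical.

```python
G = 9.81             # gravitational acceleration, m s^-2
C_ICE = 2100.0       # approximate specific heat of ice near 0 °C, J kg^-1 K^-1
L_FUSION = 3.34e5    # latent heat of fusion of water, J kg^-1

def warming_from_descent(drop_m):
    """Temperature rise (K) if all potential energy released by descending drop_m
    metres stayed in the ice as heat: an upper bound (no melting, no heat loss)."""
    return G * drop_m / C_ICE

def ice_warmed_by_refreezing(meltwater_kg, delta_t_k):
    """Mass of ice (kg) that refreezing meltwater_kg of water could warm by delta_t_k."""
    return meltwater_kg * L_FUSION / (C_ICE * delta_t_k)
```

Under these assumptions, a 1000 m descent could warm the ice by a little under 5 K. Refreezing 1 kg of meltwater releases enough heat to warm roughly 160 kg of ice by 1 K.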

Some of our ice shelves (Larsen B & Ayles) started moving a few years ago, when we had less accumulated heat than we have today. While the current speeds of the ice sheets are low, the acceleration over the last 5 years has been huge. This year is warm, and the ice sheets will accelerate in response. Next year we will accumulate more heat, and the ice sheets will soften more and move faster. It is not a good trend.

I think the guess of ice sheet melt in this century being equal to one meter of sea level change is just that – a guess, and one that will prove to be very wrong. I think it is a linear extrapolation of the last few years of observational data. These guesses do not seem to reflect quantitative estimates of known non-linear feedback loops in ice behavior. When such feedback loops in ice sheets are considered, then several and perhaps many meters of sea level change is possible in the next century.

I wish you wizards of the air, and mavens of FORTRAN would put all of your formidable brainpower to work on getting some kind of a WORKING dynamic model of ice sheets. I would very much like you to prove me wrong on this.

Timothy Chase said…

If we were trying to predict the weather on a particular day in a particular place forty years from now your criticism might carry some weight. But we are not. The climate models are used to predict the climate forty years from now or a hundred years from now based on a given emissions scenario. They vary the initial conditions. They will have multiple runs.

Tim, I understand perfectly well the need to start with different initial conditions. In fact, if you run your MIMO (multiple inputs – multiple outputs) climate data through a purely black-box model such as an artificial neural network (ANN) with different initial conditions, then you are guaranteed to get different solutions. The question to ask is: which initial conditions are the right ones? This is the most difficult question, and no one has been able to provide an answer. If someone has already worked out this difficulty, then he deserves a Nobel Prize.

Timothy said…

They will use different models.

In physical reality, there may exist only one true model. Different models may produce almost the same outcome, but not all of them correspond to physical reality. The laws of physics would be self-contradictory if different models described the same physical phenomena.

Example: Early in the last century, Niels Bohr formulated his model of the hydrogen atom. His model worked perfectly for the single-electron atom, and it was hailed as a success because scientists thought that they had nailed the model of physical reality. Bohr’s model failed miserably when applied to the spectra of multi-electron atoms. This problem wasn’t solved until the emergence of wave mechanics (quantum mechanics) in the 1920s. Niels Bohr’s model is completely wrong in that it doesn’t correspond to any physical reality at all. He assumed that the electron in hydrogen circulates around the nucleus in a Newtonian fashion, i.e., like a planetary system. The model only works for a single electron, since coupling effects (electron to electron), plus other dynamics, are absent there and so have no effect on the observations. The main point to note here is that Bohr’s model and Schrödinger’s equation, two different models, could both be used to analyze the spectrum of a single-electron atom such as hydrogen, and the outcome (results) would be the same. However, the two models do not both correspond to physical reality; one must represent physical reality and the other must be ditched, since physics would be self-contradictory if we accepted that the same physical reality is represented by different models. Bohr’s model is ditched and Schrödinger’s equation is retained, since it generalizes to multi-electron atoms.

So the history of science has taught us that if we run different models and they seem to have similar outcomes, we shouldn’t get carried away too soon, as there will actually be one model that stands the test in comparison to the others, and that model might come out on top as the one that best represents the approximate or true model of physical reality.

So I would advise Tim not to get too carried away with the similar outcomes of multiple models, since they don’t represent the same physical reality; only one of them represents true physics. But are we getting closer to finding out which one? Maybe so, but I wouldn’t want to jump too early and declare that we have, since the history of science (Bohr’s case) has told us not to do so. Physical reality and the models that represent it must be generalizable, and this is something that is still lacking in climate models. Even if emergent behavior in complex systems such as climate makes it impossible to know the models that represent reality, that doesn’t change the fact that emergent behavior or chaos itself is caused by physical laws in the first place. If we don’t accept that emergent behavior is physical in nature, then we have to accept that God (very unlikely) is the invisible hand playing mind games with the citizens of this planet regarding climate systems.

Timothy said…

The weather is a largely unpredictable and chaotic moving point nested in an attractor which evolves in phase space over time in a fairly predictable manner.

I already know that, Tim; in fact, this is something that I do in developing software to model economic/financial systems. Chaos frequently pops up in economic systems analysis, such as in derivative market pricing and property markets. Some are still using traditional, conventional chaos and bifurcation theory, but the theory has advanced, and theories from other disciplines in computing and mathematics, such as fuzzy logic, have supplemented the shortfalls of conventional chaos and bifurcation theory. Fuzzy logic is a universal function approximator (both linear and non-linear). Analysis using chaotic-fuzzy-system algorithms is more robust than conventional chaos and bifurcation theory. The point here is that even though we don’t fully understand or know the dynamics of climate systems, it is still possible to know the unknowns, but this is an evolutionary process, as things are discovered incrementally. I would highly recommend the following book if you are doing chaos and bifurcation analysis of climate data. The book is for general use, but the concepts are applicable to the analysis of any chaotic dynamical system.

Title: “Fuzzy Chaotic Systems: Modeling, Control, and Applications”

Author: Zhong Li.

Publisher: Springer.

Timothy Chase said…

it is quite often the case that in one way or another the distinction will be made between the actual path of the weather through phase space and the evolution of the climate system as an attractor in which the weather state is embedded.

Tim, don’t get too hung up on word semantics. Let me ask you a question. Path analysis in a dynamical system is time-dependent. It means that you can pretty much get a rough estimate of the path that the system has traversed. This is the case with Brownian motion. One tends to forget how the particles traversed a path from A to B. All one is interested in is the dynamics at point B, not whether the actual path is A->C->D->B or A->B or A->C->B, etc. Now, since path traversal in Brownian motion is time-dependent, you could take a snapshot of the path by making the time increment continuous, i.e., analyse the system at E if it started from A. Suppose that I have the following hypothetical path:

A->B->C->D->E

Suppose the segment B->C->D is unimportant because we’re only interested in the final destination E; point B, C or D can still be made important by specifying that the end time is t = B or t = C, etc. In this way, the path history can be analysed. By making the time increment almost continuous, it becomes possible to give a rough estimate of the traversed path.

So, in relation to climate systems modeling, the actual path traversed by a system is as important as the final destination. The behavior of a system at t=t1 is as important as at t=t_final.

Now, this kind of analysis is still dominant in particle physics, but the principles are the same. Path analysis has been applied by econophysicists to the pricing of instruments in financial derivative markets.

“Using path integrals to price interest rate derivatives”

http://arxiv.org/PS_cache/cond-mat/pdf/9812/9812318v2.pdf

This type of path analysis uses the Feynman path integral from quantum mechanics to analyse the path of an instrument’s price over a specific increment of time.

“Feynman Path Integral of Quantum Electrodynamics theory”

http://en.wikipedia.org/wiki/Path_integral_formulation

The main point of my post here is that the path is important, since one could calculate the price of a derivative at any arbitrary final time. You need to know the start time and the end time. The closer the end time is to the start time, the more continuous the model becomes, and therefore all the possible paths between the start time and the end time could be roughly approximated. Path analysis in climate is no different from path integral analysis in atomic systems or financial derivative pricing.

I lump together many different forms of human suffering under ‘poverty’ on purpose for this discussion. It doesn’t matter if you are sitting destitute and homeless in an immigration camp, or being overrun by looting, murdering militias, or you’re a family of five living off of a refuse dump. The path away from human suffering almost always leads to generating more GHGs.

I have a roof over my head, good food on my table, a vehicle to drive, an education, a livelihood, and medical care – for myself and my child, in a relatively secure country. I will not begrudge a single person for wanting and striving for at least what I have. Most of the people on this planet barely have one of these things, but global living standards have been changing during my lifetime, and will continue to improve.

Because of the population density of developing countries (e.g., China, India and many others), the very least we can hope for is most countries leveling off at United States GHG levels, but most likely, many will surpass us. GHGs are in our future. Groups such as SEG and Sigma Xi, as well as IPCC, mean well, but none of them have a grip on reality and plan for things like ‘leveling off of CO2 emissions in 2020’. And if the global climate is as sensitive and dire as stated, the ‘reducing emissions’ campaign won’t make a bit of difference.

Consider the practicality of what is proposed. I am surprised (and troubled) that ‘reducing emissions’ is the only tool on our workbench.

If you want to see perfect data on regional climate change look here. http://www.washingtonpost.com/wp-dyn/content/article/2007/09/01/AR2007090101360.html?hpid=topnews

re: #116 Aaron

Yes, we have the same issue in California, another place that cares about wine.

http://winebusiness.com/GrapeGrowing/webarticle.cfm?ref=rn&dataId=48583

The good news in that article is that the wine around Lake Okanagan, BC (an area we visit 2-3 times/year) is getting better. Whether that would compensate for the eventual downturn of Napa and Sonoma remains to be seen.

Also, according to The Winelands of Britain, we should expect to see fine vineyards on the North Shore of Loch Ness, producing great Scot wines.

I (#109) had written:

Falafulu Fisi (#113) responded:

Then there wasn’t much point in your bringing up the nonlinear behavior of climate systems as if it were a criticism of climatology, was there? Varying the initial conditions and performing different runs is how climatology deals with the nonlinearity.

Falafulu Fisi (#113) responded:

Wouldn’t be quite the same thing as a climate model, would it? Climate models are intended to help us understand the climate system, not simply attempt to make projections based upon past performance.

I brought this up and even used the phrase “black box” on an earlier thread at one point:

I point this out because it really doesn’t make much sense to speak of running “climate data thru a purely black box model” given the complexity of the climate system: there are so many aspects which could be modeled. Whether you are speaking of genetic programming or neural networks, such approaches work best when the problems to which they are applied are fairly well delimited, or at least far more so than the earth’s climate system. The approach used by climatology permits us to cash in on the theoretical advances of other branches of physics, and to model the climate system to the best of our current ability while at the same time laying a rational foundation for future work. As it is done, there is no room for curve-fitting or black boxes. As far as I can see, incorporating either would undermine the foundation of the discipline itself.

Falafulu Fisi (#113) responded:

In our study of chaos as it applies to weather, even a small difference will tend to become amplified until it spreads throughout the system. One image which I have of this is the baker’s transformation (which you are no doubt familiar with – but for the sake of other readers…). If you have a block of dough, a small corner of which is a different color, and you proceed to flatten the dough, then fold it over and flatten it again, it doesn’t take very long before that color is evenly distributed throughout the dough. Twenty-five times should be about enough to distribute it evenly at the atomic scale if the dough were actually continuous.
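For readers who want to see the stretch-and-fold in action, here is a minimal sketch of the textbook baker’s map on the unit square (the function names are my own, not taken from any climate code). It uses exact fractions so that floating-point rounding doesn’t muddy the picture. Two points that start 2^-30 apart horizontally see their separation double with every fold, which is the amplification described above; 25 folds multiply a separation by 2^25, roughly 34 million.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def baker(x, y):
    """One step of the baker's map on the unit square.

    Stretch the dough to twice its width and half its height, cut it in
    the middle, and stack the right half on top of the left half.
    Horizontal gaps double; vertical gaps are halved.
    """
    if x < HALF:
        return 2 * x, y / 2
    return 2 * x - 1, (y + 1) / 2


def separation_history(p, q, steps):
    """Horizontal distance between two points after each fold."""
    history = []
    for _ in range(steps):
        p, q = baker(*p), baker(*q)
        history.append(abs(p[0] - q[0]))
    return history


# Two points that differ by 2**-30 in the horizontal direction.
start = (Fraction(1, 3), Fraction(1, 3))
nearby = (Fraction(1, 3) + Fraction(1, 2**30), Fraction(1, 3))

for fold, gap in enumerate(separation_history(start, nearby, 25), start=1):
    print(f"fold {fold:2d}: separation = {float(gap):.3e}")
```

After 25 folds the gap has grown from about a billionth of the square’s width to 1/32 of it; a few more folds and the two points are effectively unrelated.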

But just as small differences become amplified, large differences are reduced in size, as they have nowhere else to go – such is the nature of the attractor – like two points moving in opposite directions on the surface of a globe only to meet on the other side. Thus the distance between two arbitrarily close points may grow until they are on opposite sides of the attractor, and then at some later point they may become arbitrarily close again. In this sense the initial conditions aren’t really all that important, since the actual object of study in climatology is the attractor (or by analogy, the globe) itself, which corresponds not to the exact state of the weather but to statistical properties, such as the average summer temperature for the globe or for a given region.

As I said, the people at Hadley seem to be doing rather well with initializing their model with actual real-world data from consecutive days. However, if all that mattered was initializing the models with “the right data,” they would need the data from only a single day. But at that point we would be attempting a simple weather forecast, wouldn’t we? The initial conditions from one day, run forward through the model for 1001 days, will not result in weather identical to that obtained by running the same model forward 1000 days from the initial conditions of the following day. For example, the values for global average temperature will tend to dance around one another, and weather systems will form at different times and in different places, but statistical properties such as the average summer temperature or precipitation between two latitudes will tend to remain fairly close, especially when averaged over a few years.
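The same point can be illustrated with the Lorenz (1963) system, a three-variable toy model of convection often used as a stand-in for weather. To be clear, it is not a climate model, and the parameter values below (σ = 10, ρ = 28, β = 8/3) are the classic textbook choices, not anything used at Hadley; the run length, step size and one-part-in-10^8 perturbation are likewise arbitrary choices for this sketch. Two runs stand in for “today’s” and “tomorrow’s” initial data: the individual trajectories soon bear no resemblance to each other, yet the long-run average of z comes out nearly the same.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    k1 = lorenz(state)
    k2 = lorenz(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = lorenz(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = lorenz(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(
        s + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run(initial, steps=100_000, dt=0.01, spinup=1_000):
    """Integrate from `initial`, keeping x and z after a spin-up period."""
    state = initial
    xs, zs = [], []
    for i in range(steps):
        state = rk4_step(state, dt)
        if i >= spinup:
            xs.append(state[0])
            zs.append(state[2])
    return xs, zs


# Two "days" of initial data that differ by one part in 10^8.
xs_a, zs_a = run((1.0, 1.0, 1.0))
xs_b, zs_b = run((1.0 + 1e-8, 1.0, 1.0))

# The "weather": point-by-point differences grow to the size of the attractor.
max_dx = max(abs(a - b) for a, b in zip(xs_a, xs_b))

# The "climate": the long-run mean of z is nearly the same in both runs.
mean_z_a = sum(zs_a) / len(zs_a)
mean_z_b = sum(zs_b) / len(zs_b)

print(f"largest x difference between runs: {max_dx:.1f}")
print(f"mean z, run A: {mean_z_a:.2f}   run B: {mean_z_b:.2f}")
```

In pure Python this takes a second or two; the point is only the contrast between the two printed lines, not the particular numbers.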

I (#109) had written:

Falafulu Fisi (#113) responded:

In physical reality, independently of us, there is no model. A model is a construct, an approximation. Some approximations are better than others. However, when you have multiple models which, given the current state of our knowledge seem equally good approximations for modeling reality, it makes sense to use multiple models and determine what predictions are robust – more or less independent of any given model, of the approximations (e.g., grid size) that they employ – in much the same way that one determines what predictions are robust when one varies the initial conditions. Beyond this climatologists appear to be applying Bayesian logic to the projections being made by multiple models although certainly not across the board as of yet.
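As a rough illustration of checking which projections are robust across models, the sketch below takes one projected change per model for a single region, computes the multi-model mean, and reports what fraction of the models agree with the sign of that mean. The model names and numbers are invented, and the two-thirds agreement threshold is an assumed parameter, not a quotation of any IPCC procedure.

```python
from statistics import mean


def robustness(changes, threshold=2 / 3):
    """Summarise projected changes (one value per model) for one region.

    Returns the multi-model mean, the fraction of models whose change has
    the same sign as that mean, and whether that fraction meets `threshold`.
    """
    ensemble_mean = mean(changes.values())
    if ensemble_mean == 0:
        return ensemble_mean, 0.0, False
    same_sign = sum(1 for v in changes.values() if v * ensemble_mean > 0)
    fraction = same_sign / len(changes)
    return ensemble_mean, fraction, fraction >= threshold


# Hypothetical precipitation changes (%) for one region from five models.
precip_change = {"model_A": -8.0, "model_B": -3.5, "model_C": 1.0,
                 "model_D": -6.0, "model_E": -2.5}

m, frac, robust = robustness(precip_change)
print(f"multi-model mean {m:+.1f}%, {frac:.0%} agree on sign, robust={robust}")
```

Here four of the five hypothetical models agree on drying, so the result counts as robust under the assumed threshold; an ensemble split evenly on the sign would not, whatever its mean.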

Falafulu Fisi (#113) responded:

Climate systems are a little more complicated than atoms, don’t you think?

The models of climatology are not the same sort of thing as the models of the structures of atoms. In atomic physics, the model is essentially a way of conceptualizing the structure of the atom where the theory may be an exact mathematical description. However, an exact mathematical description of the climate system would seem to be well beyond our reach. Climate systems are simply too complex. Instead, climate models are built from physical theories. Some aspects of the physics will be left out, as some must be, since no complete description is ever possible. But where climate models begin to do poorly, this is an indication that there is some important physics which has been left out, whether it happens to be in terms of aerosols, the carbon cycle or the dynamics of ice. At that point we incorporate those aspects and the models will tend to do much better as a whole, and not simply in terms of the new aspects which we have just introduced.

I (#109) had written:

Falafulu Fisi (#113) responded:

Perhaps, but if so there wasn’t much reason for you to be talking about exact numerical solutions, then.

Falafulu Fisi (#113) responded:

Climatology isn’t chaos theory, deterministic or otherwise – although it does deal with a form of chaos, namely the weather. Climatology isn’t mathematics – although it employs mathematics. Climatology is physics.

Anyway, fuzzy logic may or may not have a place in climatology at some point. I myself still tend to associate fuzzy logic with air conditioners – so I suppose there is already a connection even at this point. As temperatures rise, people will need more air conditioners, won’t they? But at least according to its advocates, fuzzy logic may be an alternative to Bayesian logic, or one may even speak of fuzzy Bayesian logic as a kind of hybrid of both approaches. If so, it would seem that the natural level at which to apply it would be in multi-model analysis – although it could conceivably be applied to multi-runs within a given model.

[[The path away from human suffering almost always leads to generating more GHG’s. ]]

Doesn’t have to, especially if we switch to renewable sources of energy.

re #115 [Because of the population density of developing countries (ex. China, India and many others), the very least we can hope for is most countries leveling off at United States GHG levels, but most likely, many will surpass us.]

You don’t seem to be distinguishing total GHGs from GHGs per capita, nor total population from population density. There is no obvious reason why population density should increase GHG levels per capita, and a small country could have a high population density without producing either high total GHGs or high per capita GHGs. Are you claiming that many countries will exceed US total GHG levels, or that many will exceed US per capita GHG levels? In either case, there is no reason to think you are right: the USA combines a large population (only China and India have larger ones), with high GDP and a high “carbon intensity” (GHGs produced per unit of GDP). Poor countries could get a lot richer without approaching the USA’s per capita GHG production, even without efforts to reduce carbon intensity. I think it may be you who does not “have a grip on reality”.
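The distinctions drawn here (total versus per-capita emissions, population versus population density) can be made concrete with the identity total GHGs = population × GDP per person × carbon intensity. The two countries below are entirely hypothetical, with round numbers chosen only to show that a densely populated country can have both low total and low per-capita emissions.

```python
from dataclasses import dataclass


@dataclass
class Country:
    name: str
    population: int          # people
    area_km2: float          # land area
    gdp_per_person: float    # dollars per person per year
    carbon_intensity: float  # kg CO2-equivalent per dollar of GDP

    @property
    def density(self) -> float:
        """People per square kilometre."""
        return self.population / self.area_km2

    @property
    def ghg_per_person(self) -> float:
        """Tonnes CO2-equivalent per person per year."""
        return self.gdp_per_person * self.carbon_intensity / 1000

    @property
    def total_ghg(self) -> float:
        """Tonnes CO2-equivalent per year for the whole country."""
        return self.population * self.ghg_per_person


# Hypothetical countries, for illustration only.
small_dense = Country("Small and dense", 5_000_000, 700.0, 30_000, 0.2)
large_sparse = Country("Large and sparse", 300_000_000, 9_800_000.0, 45_000, 0.5)

for c in (small_dense, large_sparse):
    print(f"{c.name}: {c.density:,.0f} people/km2, "
          f"{c.ghg_per_person:.1f} t per person, {c.total_ghg / 1e6:,.0f} Mt total")
```

Density never enters the emissions calculation at all: the small, dense country comes out far lower on both measures because of its smaller population and lower carbon intensity.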

[Consider the practicality of what is proposed. I am surprised (and troubled) that ‘reducing emissions’ is the only tool on our workbench.]

What alternative are you suggesting? The two possibilities are the “heads in the sand” approach, and some form of geoengineering. The heads in the sand approach has two forms: hope the vast majority of climate scientists are wrong, and hope we can get by with adaptation only. The first is plain foolish, the second at best very risky, since we don’t know just where the boundaries between costly inconvenience and total disaster lie. It would be wrong to rule geoengineering out, but no approach suggested so far looks anywhere near good enough to bet the future of our civilisation on.

Falafulu Fisi,

Your analogies with early quantum mechanics are really stretching. Climate science is quite mature, while the difficulties encountered by the Bohr atom resided in the previously unknown physics of spin. Also note that it is “NIELS Bohr” not “NIEL Bohr” or “NEIL Bohr”.

Your analogies with particle physics and path integrals are only slightly less strained. Realclimate is a wonderful resource for learning about the physics of climate change. I recommend it.

RE GHG per person, we shouldn’t simply take a country’s GHG emissions and divide by its population. Many of the products China produces end up consumed by Americans. We need to look at how much each person consumes and the GHGs entailed in that.

And if companies making the products could in some way let us know the GHGs entailed in each product, from resource extraction and rainforest destruction (e.g., bauxite mining for aluminum), through shipping and processing of raw materials and manufacturing, to selling and distribution (including overhead such as refrigeration), then consumers could buy the same product from a company that produced it with less GHG intensity.

And I’m sure (considering subsidies for fossil fuels) those products would be cheaper on the whole, though the company could command a higher price because the product is “greener.”

I know you’re thinking that if companies could make off like bandits so easily, then they would have been doing so already. But from what I’ve read, that’s often not the case. Some companies even go out of business because their products cost too much to make, since the company did not switch to less GHG-intensive strategies (they just didn’t know there were such off-the-shelf solutions to their problems). Businesses mainly think of how to reduce labor costs, not how to have more efficient lighting. They think of how to evade environmental pollution laws, rather than how to recycle toxic wastes into useful resources (which often also reduces GHG emissions).

Some companies go out of business because… the company did not switch to less GHG intensive strategies… They coulda had a V-8 but it just didn’t occur to them so they folded the enterprise??? Wow, Lynn. Seems a bit of a stretch, though a colorful one with a shade of truth, probably.

Michael wrote in #115: “The path away from human suffering almost always leads to generating more GHG’s … I have a roof over my head, good food on my table, a vehicle to drive, an education, a livelihood, and medical care …”

Most likely you could continue to have all of those things, and more and better, with dramatic reductions in the GHG emissions associated with them, through the application of appropriate energy efficiency and renewable energy technologies. Moreover, the development and application of those technologies is itself a highly productive and profitable sector of the economy through which many more people can reduce their suffering and increase their well-being.

The path away from human suffering leads to phasing out the burning of fossil fuels and other unsustainable dead-end technologies that are degrading and destroying the capacity of the Earth to support life, and replacing them with energy efficiency, clean renewable energy, organic agriculture, and other sustainable technologies that will enable all of humanity — not just a tiny percentage of humanity in the industrialized world — to enjoy a high-quality material existence that is sustainable within the carrying capacity of the Earth’s biosphere.

Hi Gavin

Do you know about this new study concerning the iris effect?

http://www.agu.org/pubs/crossref/2007/2007GL029698.shtml

Can you comment on it?

I think it’s important.

[Response: Gavin is on vacation, but some others of us are still around. Well, first of all, we have often emphasized that one must be extremely skeptical any time a single paper claims to overthrow the consensus of scientific opinion. The IPCC report quite clearly takes Lindzen’s ‘iris hypothesis’ to task, noting (see upper right paragraph of page 48 of WG1 chapter 8 on climate model evaluation) that multiple independent studies have now refuted Lindzen’s arguments. Now all of a sudden, one study (by Roy Spencer et al.) claims to reverse the consensus of the latest IPCC report on this? We’ll leave the technical points for later discussion, but suffice it to say that incredible claims (which these are) call for special scrutiny. For a start, what is the track record of the source (see also here)? What is the credibility of the source? We’ll let readers judge for themselves. -mike]

Re #115: [It doesn’t matter if you are sitting destitute and homeless in an immigration camp, or being overrun by looting, murdering, militias, or you’re a family of five living off of a refuse dump. The path away from human suffering almost always leads to generating more GHG’s.]

How so? How, for instance, would dealing with those militias increase GHGs? They’re political and social issues, with at best tenuous connections to energy use. In the last century, we find instances of all of those problems in high-energy countries, and low-energy countries where they were absent. Those problems will be solved by changed political systems and family planning, not by increasing energy use.

[I have a roof over my head, good food on my table, a vehicle to drive, an education, a livelihood, and medical care – for myself and my child, in a relatively secure country.]