At Jim Hansen’s now famous congressional testimony given in the hot summer of 1988, he showed GISS model projections of continued global warming assuming further increases in human produced greenhouse gases. This was one of the earliest transient climate model experiments and so rightly gets a fair bit of attention when the reliability of model projections is discussed. There have however been an awful lot of mis-statements over the years – some based on pure dishonesty, some based on simple confusion. Hansen himself (for full disclosure, my boss) revisited those simulations in a paper last year, where he showed a rather impressive match between the recently observed data and the model projections. But how impressive is this really? And what can be concluded from the subsequent years of observations?

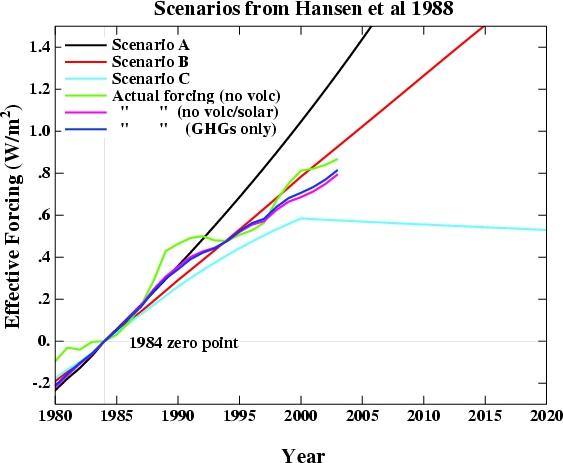

In the original 1988 paper, three different scenarios were used: A, B, and C. They consisted of hypothesised future concentrations of the main greenhouse gases – CO2, CH4, CFCs etc. – together with a few scattered volcanic eruptions. The details varied for each scenario, but the net effect of all the changes was that Scenario A assumed exponential growth in forcings, Scenario B was roughly a linear increase in forcings, and Scenario C was similar to B, but had close to constant forcings from 2000 onwards. Scenarios B and C had an ‘El Chichon’ sized volcanic eruption in 1995. Essentially, a high, middle and low estimate were chosen to bracket the set of possibilities. Hansen specifically stated that he thought the middle scenario (B) the “most plausible”.

These experiments were started from a control run with 1959 conditions and used observed greenhouse gas forcings up until 1984, and projections subsequently (NB. Scenario A had a slightly larger ‘observed’ forcing change to account for a small uncertainty in the minor CFCs). It should also be noted that these experiments were single realisations. Nowadays we would use an ensemble of runs with slightly perturbed initial conditions (usually a different ocean state) in order to average over ‘weather noise’ and extract the ‘forced’ signal. In the absence of an ensemble, this forced signal will be clearest in the long term trend.

How can we tell how successful the projections were?

Firstly, since the projected forcings started in 1984, that should be the starting year for any analysis, giving us just over two decades of comparison with the real world. The delay between the projections and the publication is a reflection of the time needed to gather the necessary data, churn through the model experiments and get results ready for publication. If the analysis uses earlier data, i.e. from 1959, it will be affected by the ‘cold start’ problem, i.e. the model is starting with a radiative balance that the real world was not in. After a decade or so that is less important. Secondly, we need to address two questions – how accurate were the scenarios and how accurate were the modelled impacts.

The results are shown in the figure. I have deliberately not included the volcanic forcing in either the observed or projected values since that is a random element – scenarios B and C didn’t do badly since Pinatubo went off in 1991, rather than the assumed 1995 – but getting volcanic eruptions right is not the main point here. I show three variations of the ‘observed’ forcings – the first which includes all the forcings (except volcanic) i.e. including solar, aerosol effects, ozone and the like, many aspects of which were not as clearly understood in 1984. For comparison, I also show the forcings without solar effects (to demonstrate the relatively unimportant role solar plays on these timescales), and one which just includes the forcing from the well-mixed greenhouse gases. The last is probably the best one to compare to the scenarios, since they only consisted of projections of the WM-GHGs. All of the forcing data has been offset to have a 1984 start point.

Regardless of which variation one chooses, the scenario closest to the observations is clearly Scenario B. The difference in scenario B compared to any of the variations is around 0.1 W/m2 – around a 10% overestimate (compared to > 50% overestimate for scenario A, and a > 25% underestimate for scenario C). The overestimate in B compared to the best estimate of the total forcings is more like 5%. Given the uncertainties in the observed forcings, this is about as good as can be reasonably expected. As an aside, the match without including the efficacy factors is even better.

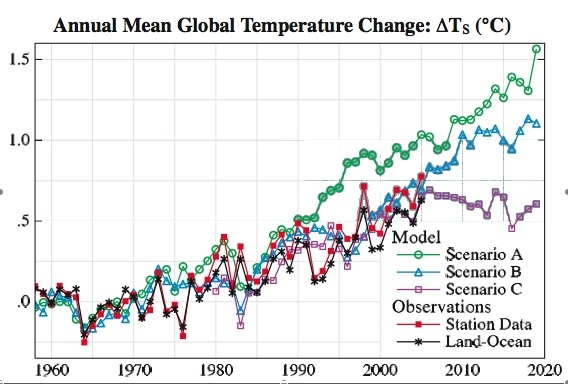

Most of the focus has been on the global mean temperature trend in the models and observations (it would certainly be worthwhile to look at some more subtle metrics – rainfall, latitudinal temperature gradients, Hadley circulation etc. but that’s beyond the scope of this post). However, there are a number of subtleties here as well. Firstly, what is the best estimate of the global mean surface air temperature anomaly? GISS produces two estimates – the met station index (which does not cover a lot of the oceans), and a land-ocean index (which uses satellite ocean temperature changes in addition to the met stations). The former is likely to overestimate the true global surface air temperature trend (since the oceans do not warm as fast as the land), while the latter may underestimate the true trend, since the air temperature over the ocean is predicted to rise at a slightly higher rate than the ocean temperature. In Hansen’s 2006 paper, he uses both and suggests the true answer lies in between. For our purposes, you will see it doesn’t matter much.

As mentioned above, with a single realisation, there is going to be an amount of weather noise that has nothing to do with the forcings. In these simulations, this noise component has a standard deviation of around 0.1 deg C in the annual mean. That is, if the models had been run using a slightly different initial condition so that the weather was different, the difference in the two runs’ mean temperature in any one year would have a standard deviation of about 0.14 deg C (larger by a factor of √2, since the noise in the two runs is independent), but the long term trends would be similar. Thus, comparing specific years is very prone to differences due to the noise, while looking at the trends is more robust.
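The step from 0.1 to 0.14 deg C is the usual rule for the difference of two independent quantities. The sketch below checks it both analytically and with a small Monte Carlo draw. It assumes the weather noise in the two runs is independent and Gaussian, which is an illustrative assumption rather than something stated about the GISS runs, and the function names are invented for this example.

```python
import math
import random

SIGMA_ANNUAL = 0.1  # deg C, annual-mean weather noise quoted in the post


def difference_sigma(sigma: float) -> float:
    """Std dev of the difference of two independent values that each have std dev sigma."""
    return math.sqrt(2.0) * sigma


def simulated_difference_sigma(sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check: sample std dev of (run1 - run2) for independent Gaussian noise."""
    rng = random.Random(seed)
    diffs = [rng.gauss(0.0, sigma) - rng.gauss(0.0, sigma) for _ in range(n)]
    mean = sum(diffs) / n
    return math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))


print(round(difference_sigma(SIGMA_ANNUAL), 2))            # analytic value
print(round(simulated_difference_sigma(SIGMA_ANNUAL), 2))  # simulated value
```

Both numbers should come out close to 0.14, which is why a single-year mismatch of a tenth of a degree or so says little about the forced response.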

From 1984 to 2006, the trends in the two observational datasets are 0.24 +/- 0.07 and 0.21 +/- 0.06 deg C/decade, where the error bars (2σ) are derived from the linear fit. The ‘true’ error bars should be slightly larger given the uncertainty in the annual estimates themselves. For the model simulations, the trends are for Scenario A: 0.39 +/- 0.05 deg C/decade, Scenario B: 0.24 +/- 0.06 deg C/decade and Scenario C: 0.24 +/- 0.05 deg C/decade.
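For readers who want to reproduce numbers of this kind from the downloadable files, here is a minimal sketch of a trend with error bars taken from the linear fit. It assumes an ordinary least-squares fit with independent residuals and reports twice the standard error of the slope. Whether the figures above include any correction for autocorrelation is not stated, so treat this as one plausible reading. The function name and the synthetic series are made up for illustration.

```python
import math


def trend_with_2sigma(years, temps):
    """Return (trend, 2 * standard error), both in deg C/decade, from an OLS fit."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, temps))
    slope = sxy / sxx                      # deg C per year
    intercept = y_mean - slope * x_mean
    residual_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    slope_se = math.sqrt(residual_ss / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * 2.0 * slope_se  # convert per year to per decade


# Synthetic series (not real data): 0.2 deg C/decade plus alternating +/- 0.1 deg C "noise".
years = list(range(1984, 2007))
temps = [0.02 * (y - 1984) + (0.1 if y % 2 else -0.1) for y in years]
trend, err = trend_with_2sigma(years, temps)
print(f"{trend:.2f} +/- {err:.2f} deg C/decade")
```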

The bottom line? Scenario B is pretty close and certainly well within the error estimates of the real world changes. And if you factor in the 5 to 10% overestimate of the forcings in a simple way, Scenario B would be right in the middle of the observed trends. It is certainly close enough to provide confidence that the model is capable of matching the global mean temperature rise!

But can we say that this proves the model is correct? Not quite. Look at the difference between Scenario B and C. Despite the large difference in forcings in the later years, the long term trend over that same period is similar. The implication is that over a short period, the weather noise can mask significant differences in the forced component. This version of the model had a climate sensitivity of around 4 deg C for a doubling of CO2. This is a little higher than what would be our best guess (~3 deg C) based on observations, but is within the standard range (2 to 4.5 deg C). Is this 20 year trend sufficient to determine whether the model sensitivity was too high? No. Given the noise level, a trend 75% as large would still be within the error bars of the observation (i.e. 0.18 +/- 0.05), assuming the transient trend would scale linearly. Maybe with another 10 years of data, this distinction will be possible. However, a model with a very low sensitivity, say 1 deg C, would have fallen well below the observed trends.
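The arithmetic behind that argument can be laid out in a few lines. This sketch takes the Scenario B trend and the two observed trends quoted above and scales the model trend linearly with sensitivity, which is the simplifying assumption the paragraph itself makes. The function names and the dataset labels are placeholders for this example.

```python
SCENARIO_B_TREND = 0.24   # deg C/decade, quoted above
MODEL_SENSITIVITY = 4.0   # deg C per CO2 doubling (approximate), quoted above

# (central trend, 2-sigma half width) for the two observational datasets quoted above
OBSERVED = {"first dataset": (0.24, 0.07), "second dataset": (0.21, 0.06)}


def scaled_trend(sensitivity):
    """Trend a model of the given sensitivity would show, assuming linear scaling."""
    return SCENARIO_B_TREND * sensitivity / MODEL_SENSITIVITY


def within_error_bars(trend, central, half_width):
    """True if the trend lies inside central +/- half_width."""
    return central - half_width <= trend <= central + half_width


for sensitivity in (4.0, 3.0, 1.0):
    trend = scaled_trend(sensitivity)
    verdicts = {name: within_error_bars(trend, c, w) for name, (c, w) in OBSERVED.items()}
    print(f"sensitivity {sensitivity:.0f} deg C -> trend {trend:.2f} deg C/decade, consistent: {verdicts}")
```

Under these assumptions the 3 deg C case (0.18 deg C/decade) stays inside both observed ranges, while the 1 deg C case (0.06 deg C/decade) falls below both.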

Hansen stated that this comparison was not sufficient for a ‘precise assessment’ of the model simulations and he is of course correct. However, that does not imply that no assessment can be made, or that stated errors in the projections (themselves erroneous) of 100 to 400% can’t be challenged. My assessment is that the model results were as consistent with the real world over this period as could possibly be expected and are therefore a useful demonstration of the model’s consistency with the real world. Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.

Note: The simulated temperatures, scenarios, effective forcing and actual forcing (up to 2003) can be downloaded. The files (all plain text) should be self-explanatory.

Josh (#189) wrote:

(emphasis added)

Did someone bring up Karl Popper’s Principle of Falsifiability?

Well, I am not going to post the full analysis here, but I will provide a link to what I have posted elsewhere…

Post subject: Reference – The Refutation of Karl Popper, etc.

Author: Timothy Chase

Posted: Wed Nov 08, 2006 3:00 pm

http://bcseweb.org.uk/forum/viewtopic.php?p=1943#1943

Karl Popper’s principle of falsifiability fails because of epistemic problems first identified in 1890 by Pierre Duhem involving the interdependence of scientific knowledge, including scientific theories. (You might have noticed before that one point I have stressed is, “Empirical science is a unity because reality is a unity.”) Scientific theories are not strictly falsifiable, but they are testable, and as such they are capable of receiving both confirmation (or justification) and disconfirmation. An alternative along these lines which seems fairly popular nowadays is known as Bayesian calculus: if a given theory is viewed as relatively unlikely but makes a prediction which is also seen as highly improbable (given the rest of what we believe we know), and that prediction is borne out, the theory is viewed as more likely and the alternatives to it which predicted something else are viewed as less likely, including the alternative which was dominant.

This is of course related to the issue of the necessity of knowledge which isn’t strictly provable and that may have to be abandoned at some later point due to additional evidence which we may acquire. Such knowledge is referred to as being “corrigible,” and I brought this up a little bit ago.

In any case, it appears that some form of the Bayesian approach is being applied in the context of climate modeling, and this would seem to be entirely appropriate.
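To make the Bayesian point concrete, here is a toy update using Bayes' rule. All of the probabilities are invented for illustration: a theory given only a 10% prior, which assigns 90% probability to an observation that the rival views collectively give only 5%. The function name is likewise just a label for this sketch.

```python
def posterior(prior, p_evidence_given_theory, p_evidence_given_alternatives):
    """Bayes' rule for a theory versus the lumped-together alternatives."""
    numerator = prior * p_evidence_given_theory
    evidence = numerator + (1.0 - prior) * p_evidence_given_alternatives
    return numerator / evidence


prior = 0.10  # hypothetical: the theory starts out as the unlikely one
updated = posterior(prior, p_evidence_given_theory=0.90, p_evidence_given_alternatives=0.05)
print(f"prior {prior:.2f} -> posterior {updated:.2f}")  # prior 0.10 -> posterior 0.67
```

A confirmed prediction that the alternatives considered improbable moves the unlikely theory past the previously dominant view, without anything having been "falsified" in the strict sense.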

Re #194: I’m sorry, I’m confused. CO2 is not radioactive, is it? So how can we talk about half-lives? Does CO2 decay exponentially, and if so to what ??

Eli Rabett (#186) wrote:

Thank you for the clarification – and the links. I will be reading the material you have made available. However, as far as I can tell, this doesn’t exactly help DocMartyn much. Quite the opposite, in fact.

Julian Flood (#200) wrote:

The article itself is:

Saturation of the Southern ocean CO2 sink due to recent climate change

Corinne Le Quere, Christian Rodenbeck, Erik T. Buitenhuis, Thomas J. Conway, Ray Langenfelds, Antony Gomez, Casper Labuschagne, Michel Ramonet, Takakiyo Nakazawa, Nicolas Metzl, Nathan Gillett, and Martin Heimann

Science. 2007 May 17; [Epub ahead of print]

http://www.sciencemag.org/cgi/content/abstract/1136188v1?maxtoshow=&HITS=10&hits=10&RESULTFORMAT=&fulltext=Labuschagne&searchid=1&FIRSTINDEX=0&resourcetype=HWCIT

I believe one of the more relevant parts to the concern you expressed is:

This is followed by the authors’ conclusion that while simple models (which consider only carbon chemistry) predict that the ocean will take up 70-80% of the carbon dioxide we emit, the long-equilibrium will quite possibly be considerably higher than those models would suggest – given the changes to ocean circulation. This will in part be due to winds in the Southern Ocean increasing throughout this century.

They write:

Figen Mekik (#202):

No, it is not a matter of it being radioactive, but of how long it will take for half of the additional CO2 that enters the atmosphere to be removed, and whether the rate at which it is removed can be modeled in the way that DocMartyn claims it can. (The short answer to the latter is, “No, it can not.”)

DocMartyn, What you seemingly fail to understand is that most of the carbon goes into the oceans, and if it goes into the oceans, most of it will eventually come back. So while a carbon atom may last about 10 years, it will likely be replaced by another carbon atom. While the oceans are now a net sink of carbon, their ability to absorb our emissions is decreasing as they warm, and at some point they may become a net carbon source. The dynamics of the atmosphere-ocean interaction are sufficiently well understood that it is very unlikely that any new information will drastically change our understanding of climate. Scientists have been researching the solubility of CO2 in aqueous solutions for centuries–mostly in taverns near the universities.

Re: #202

No, CO2 is not radioactive. The phrase “half life” is being used to denote the time it takes for half the fraction of CO2 added to the atmosphere to be removed by natural processes.

Re: 189

Josh,

I agree that this should be done.

I think the obvious way to do it would be to escrow the exact code and operational scripts at the time of prediction, and then at the time of evaluation load all best-available current data on forcings and other model inputs and then measure forecast accuracy. The group that escrows the code and runs the model at time of evaluation should be different from the group that does

What Hansen, and by extension Gavin, did for this forecast was a crude version of this approach (though much closer to this than I’ve seen any other modeling group do). There is a group that has been testing the role that ensembles of GCMs can play in improving seasonal-level forecasts, but I have looked as hard as I can, and I have not found rigorous forecast falsification for multi-annual or multi-decadal forecasts.

It is surprising that such a structured and systematic effort is not part of the overall climate modeling process, as it is (in my experience, at least) very difficult to maintain a focus on performance in a predictive modeling community without it.

Figen, this may help. The “half life of CO2” appears to be a talking point from the PR side; looking quickly with Google Scholar, I didn’t find any scientific use of the term before it showed up, though I certainly could have missed something.

Plain Google found it:

http://www.google.com/search?q=%22half-life+of+CO2+in+the+atmosphere%2

David Archer, in answering an earlier question about that on RC, points to this:

https://www.realclimate.org/index.php/archives/2005/03/how-long-will-global-warming-last/

Thanks to all for your kind replies :)

Re: 165 In case it was missed, my reply to a question by Neil Bates on who is looking at seasonal variation of temperature increase is in #197.

The problem with talking about a “half-life” is that it assumes that the parent (in this case CO2) can be transformed into some inactive daughter product (bound CO2?, coal? clathrates?…). The problem is that the transformation of CO2 into biomass, its absorption by the oceans and all the other sinks (except for geologic processes that take a VERY long time) is a storage, not a true transformation. Biomass decays and releases CH4 and CO2 on a timescale of decades. Most CO2 absorbed by the oceans stays in the upper portion of the ocean–there’s just no way for it to transport to the depths. So, while at present more CO2 is absorbed than is released, this cannot be taken for granted, especially as the oceans warm. We are currently taking massive amounts of carbon that were removed from the atmosphere hundreds of millions of years ago and burning it. That has to have an effect!

Jim Manzi (#208) wrote:

As I point out in the piece I linked to:

Post subject: Reference – The Refutation of Karl Popper, etc.

Author: Timothy Chase

Posted: Wed Nov 08, 2006 3:00 pm

http://bcseweb.org.uk/forum/viewtopic.php?p=1943#1943

… even Newton’s gravitational theory wasn’t falsifiable in the strictest sense.

There is an interdependence which exists between different scientific theories such that one cannot test more advanced theories without presupposing the truth of more basic and well-established theories in order to make the move from the theory itself to specific, testable predictions. For example, in order to test the prediction that light from a distant star would be “bent” by the gravitational field of the sun, one had to make certain assumptions regarding the behavior of light – namely, that it takes the “straightest” path between any two points. One would have to make certain assumptions regarding how optical devices would affect the behavior of light. One would have to assume that there weren’t certain unknown effects due to either the atmosphere of the sun or the earth which would distort the results.

Typically, when one seeks to test any given advanced theory, while it is in the foreground as that which one intends to test, there are a fair number of background theories, the truth of which one is also presupposing. If the results of one’s test result in disconfirmation, the fault may lie in the theory which one is testing, but at least in principle, it may lie with one of the background theories, the truth of which one has presupposed. Likewise, if the results of one’s test result in confirmation, it may very well be the case that the prediction itself was derived from premises in the theory which are in fact false, but that premises in one of the background theories were also false, and that one logically derived a true prediction from two false premises.

What is required is testability, and what results from such tests is confirmation or disconfirmation, not falsification. Karl Popper’s principle of falsifiability belongs to the history of the philosophy of science, being “cutting-edge” more or less in the 1940s, but is no longer taken seriously in the philosophy of science or in the cooperative endeavor of empirical science.

*

As an aside, from what I have seen, the conclusions of the IPCC WG1 AR 4 2007 (at least with regard to the basics) have considerably more justification than what they are claiming. The point at which their conclusions are weakest is in their inability to take into account all of the positive feedbacks. There are of course negative feedbacks which tend to stabilize the system so long as it remains within the local attractor – but we have moved well outside of this, and at this point what we are finding is considerable net positive feedback, not negative feedback.

However, much of this still needs to be incorporated into the models. As such, we are already discovering in numerous areas that they have underestimated the effects of anthropogenic climate change.

Re Jim Manzi (#208)

Jim,

I hope you don’t mind a PS to what I just wrote, but you have gotten me curious…

You speak of the procedures of falsificationist, multi-decadal, predictive modeling communities you have had experience with – and how they seal things in vaults using different independent teams. Given what you have described, this would sound like something which has to be fairly low volume – as I don’t know of that many places where modeling is done for periods of several decades only to seal the results until the allotted number of years are up.

Is this part of a newer, more long-range approach being applied within certain business communities? If so, it sounds like the use of such methods is almost as well-guarded as that which it is intended to insure.

What are the complexities of the calculations you are speaking of? Given that you describe it along falsificationist lines, it would almost seem that the systems would have to be of relatively low complexity. Are the calculations at all comparable to the climate modeling performed at the NEC Earth Simulator a few years back? How long have they been doing this sort of thing?

In any case, it sounds like the teams of modelers you have worked with have set some pretty high goalposts for themselves. No doubt there is a certain sense of accomplishment one gets from working with people of such dedication.

Blair Dowden and Timothy Chase:

Your follow-ups on the Southern Ocean paper were tremendous, but I want to make it clear to people who might be just skimming that:

Alastair and I are talking about two different processes, for two different reasons, mostly in two opposite places. His is still largely in the future and we hope won’t happen, but mine is inching its way towards being here and now.

The connection is that in either event, however you get there, once the ocean stops taking up CO2 you have a bigger problem. Or rather problems.

So, assume the Southern Ocean basically winds down soon to where under current emission conditions, it absorbs no more CO2. How long before it’s releasing more CO2 than it absorbs?

Now assume the arctic ice is gone and the THC is no longer submerging CO2. What part of the ocean is left as a net absorber? How long before the oceans, on average, are releasing more CO2 than they absorb?

And these questions are against a backdrop of increasing temperatures and increasing emissions.

Throw in the possibility (weighted, perhaps) of ice sheet breakups in Greenland and Antarctica, and how do our “Scenarios A B and C” look for the FUTURE now?

If IPCC can’t consider all that, who should?

[[Well actually it does, assuming that at Y=0, atmos. [CO2] = 373 ppm and all man made CO2 generation ended. It takes one half-life, Y=10, for CO2 to fall from 373 to 321 ppm, at Y=18 it falls to 300 ppm and at Y=27 [CO2] is 288 ppm. After four half-lives, 36 years it falls to 282 ppm. ]]

Now try it with a half-life of 200 years, which would be more accurate.
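Taking up that suggestion, the sketch below runs the same single-exponential calculation with both half-lives. The 373 ppm starting value is from the quoted comment. The 269 ppm floor is an inference, not a quoted number: it is the baseline implied by the fall from 373 to 321 ppm in one half-life. A single exponential is itself a strong simplification of how CO2 leaves the atmosphere, and the function name is made up for this example.

```python
START_PPM = 373.0     # concentration at Y=0, from the quoted comment
BASELINE_PPM = 269.0  # assumption: floor implied by 373 -> 321 ppm after one half-life


def co2_after(years, half_life_years):
    """Concentration after `years` if the excess over baseline decays exponentially."""
    excess = START_PPM - BASELINE_PPM
    return BASELINE_PPM + excess * 0.5 ** (years / half_life_years)


for half_life in (10.0, 200.0):
    row = ", ".join(f"Y={y}: {co2_after(y, half_life):.0f} ppm" for y in (0, 10, 18, 27, 36))
    print(f"half-life {half_life:.0f} yr -> {row}")
```

With the 200-year half-life the concentration is still above 355 ppm after 36 years, so the choice of half-life dominates the result.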

[[[And note that there are many ways to store excess power for use during non-peak-input times.]

All of which A) require significant investment in the equipment to do the storage; and B) lose significant amounts of energy in the storage cycle. This significantly raises your cost per MWh from these sources.]]

Except that the cost per MWh from fossil fuels doesn’t take into account the economic damage they do through pollution.

[[Re #194: I’m sorry, I’m confused. CO2 is not radioactive, is it? So how can we talk about half-lives? Does CO2 decay exponentially, and if so to what ?? ]]

They’re talking about the time it takes for half the CO2 in the air to be absorbed by sinks like the ocean.

Blair Dowden (#148) wrote:

Yes, it appears to be due to changes in atmospheric circulation which are themselves the result of changes due to the increased levels of carbon dioxide – similar I suppose to the changes we are observing in the NW Pacific.

For an older paper along these lines, please see:

Annular Modes in the Extratropical Circulation. Part II: Trends

David W. J. Thompson, John M. Wallace, and Gabriele C. Heger

Journal of Climate, American Meteorological Society

Volume 13, Issue 5 (March 2000) pp. 1018-1036

http://ams.allenpress.com/perlserv/?request=get-document&doi=10.1175%2F1520-0442(2000)013%3C1018%3AAMITEC%3E2.0.CO%3B2

The focus of the paper is on the Antarctic ocean. As I remember, there are some debates regarding other regions. However, as you note below, it is the arctic and antarctic regions which are principally responsible for the ocean acting as a sink due to absorption by colder water.

As I understand it, the churning is bringing up rich organic material, and due to the process of organic decay and the action of churning, this material releases both methane and carbon dioxide. Churning, I would presume, is not the same thing as deep, slower convection. Churning is driven by the wind, and is no doubt more chaotic. Slow convection brings water from farther down and is less likely to break up organic material. To the extent that churning is closer to the surface, it will involve water that is already largely saturated with carbon dioxide, and thus will be far less effective in the absorption of carbon dioxide. The churning of organic material near the surface makes things much worse.

As we have only recently observed the phenomenon and had previously expected something along these lines near the middle of this century, it would seem that “how much” and how this is a function of variables including but not limited to temperature and air speed will be a matter for further investigation. In any case, we appear to have underestimated how soon this would begin.

Re Marion Delgado (#215)

As I understand it, climatologists are trying to answer the questions you have posed. One good example of this would be Hansen. To the extent that we become able to make reasonable, quantifiable projections, the IPCC may then give this greater consideration.

>143, 148, 149

See also, for example:

http://www.agu.org/pubs/crossref/2007/2006GL028790.shtml

http://www.agu.org/pubs/crossref/2007/2006GL028058.shtml

Re: Ozone Depletion and Antarctic Winds

I did a little digging.

Ozone is a greenhouse gas that results in higher temperatures in the stratosphere. The higher temperatures associated with climate change near the surface are resulting in increased evaporation, leading to more water vapor in the stratosphere, which chemically reacts with the ozone, resulting in ozone depletion. As the amount of ozone is reduced, the stratosphere cools, resulting in an increased temperature differential between the stratosphere and the troposphere near the surface, and as a consequence, stronger winds. The increased winds should result in a higher rate of evaporation, but more importantly with respect to the long term, the churning of the nutrient-rich surface waters of the antarctic ocean. The churning of the surface will result in more carbon dioxide being released into the atmosphere, the last of which we have learned has already begun – several decades ahead of schedule.

Although the following deals with the arctic, it now appears to be applicable to the antarctic. It covers much of what I have given just above, namely, from ghgs resulting in the depletion of ozone to increased winds:

Research Features

Tango in the Atmosphere: Ozone and Climate Change

by Jeannie Allen

February 2004

http://www.giss.nasa.gov/research/features/tango/

You may also want to check out:

Brief:

http://www.giss.nasa.gov/research/briefs/shindell_05/

Shindell, D.T.: Climate and ozone response to increased stratospheric water vapor, Geophys. Res. Lett., 28, 1551-1554, doi:10.1029/1999GL011197, 2001

http://pubs.giss.nasa.gov/abstracts/2001/Shindell.html

Putting the boot to Doc Martyn, what is missing in his statement that

is that carbon can flow from the atmosphere into the upper ocean AND BACK AGAIN. Let us try some art work. Assume we have two reservoirs, A (for atmosphere) and UO (for upper ocean). They can exchange CO2 with a first order half life of say ten years.

Before we start the experiment, the two are in balance:

X X

X X

A UO

We then put four extra units of carbon as CO2 into the atmosphere

E

E

E

E

X X

X X

A UO

after a couple of decades the system has rebalanced

E E

E E

X X

X X

A UO

Notice that there is now more CO2 in the atmosphere than before we started: only half of the initial excess has been redistributed. What if we (foolishly) had believed Doc big shoes? Well, according to him we would now have this situation

— E

— E

— E

— E

— X

— X

— X

— X

A UO

This is silly, because once the atmosphere and the upper ocean returned to balance they would remain there. To reduce the amount of carbon in the upper ocean and the atmosphere, you need to “bury” it into the deep ocean, a process that takes hundreds of years.
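The art work above can also be written as a tiny two-box calculation. This is only a sketch of the cartoon, not a carbon-cycle model: it assumes two equal reservoirs, first-order exchange in both directions, and reads the "ten year half life" as the rate at which each box hands carbon to the other. The function name and the time step are choices made for this example.

```python
import math

HALF_LIFE_YEARS = 10.0  # exchange half-life used in the cartoon above


def two_box(atmosphere, upper_ocean, years, dt=0.01):
    """Forward-Euler integration of first-order exchange between two equal reservoirs."""
    k = math.log(2.0) / HALF_LIFE_YEARS  # rate constant, applied in each direction
    steps = int(round(years / dt))
    for _ in range(steps):
        net_flux = k * (atmosphere - upper_ocean) * dt  # net transfer, atmosphere -> ocean
        atmosphere -= net_flux
        upper_ocean += net_flux
    return atmosphere, upper_ocean


# Two units in each box (the X's), plus four extra units (the E's) added to the atmosphere.
for years in (0, 10, 20, 60):
    a, uo = two_box(6.0, 2.0, years)
    print(f"after {years:2d} years: A = {a:.2f}, UO = {uo:.2f}, total = {a + uo:.2f}")
```

The total never changes, and the atmosphere settles near four units rather than returning to two. Getting it lower requires a third, much slower reservoir such as the deep ocean.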

Doc assumes that no carbon flows from the upper ocean into the atmosphere. In reality a considerable amount does. Until we started to perturb the atmosphere, an equal amount flowed from the upper ocean into the atmosphere as went in the other direction. Today, as we pump more CO2 into the atmosphere, slightly more flows from the atmosphere into the ocean, leaving enough to increase the atmospheric CO2 concentration, and increasing the ocean’s carbon content.

Now we would really be in trouble if Doc was right, because when CO2 goes into the ocean, it forms carbonic acid, H2CO3, which ionizes to H+, HCO3- and (CO3)2- ions, and that lowers the pH of seawater (makes it more acid). The observed change in acidity due to human emissions of CO2 is ALREADY a threat to much of the life in the sea, and most of the oxygen produced by photosynthesis comes from sea plants. Doc wants us to put all of it into the upper oceans*. Kill the oceans, kill the planet. :(

*the carbon reservoir in the deep ocean is so large that we could sequester CO2 there without affecting the overall acidity of the deep ocean. However if it was not trapped and rose to the surface there would be huge problems.

Steve, way back in #155, if you are still reading :-)

My comment wasn’t based on any special insight or detailed analysis. But I remember that the forecast earlier this year (that 2007 would “likely” be the hottest, with 60% probability) was predicated on a coming El Nino that seems to be weakening (again, based only on half-remembered press coverage – I’ve no special insight here).

I agree that eyeballing the numbers, it looks reasonably close, but (according to hadcrut) temperatures are clearly a little down on 1998 so far.

James, Steve

Concerning the ENSO influence we have to consider that the global temperature lags the ENSO index by 5 to 6 months. So we see the influence of El Nino from last autumn/winter in the first half of 2007. A possibly upcoming La Nina during this summer will mainly influence 2008.

However, if the roughly 10-year oscillation of global temperature we have seen over the last several decades (be it due to the solar cycle or internal) persists, we will see a considerable temperature increase during the coming years, since we are at the minimum now. A new record by 2010 seems likely.

Note that the CRU value of 1998 is relatively higher (compared to the following years) than in the GISS or NOAA data set. Thus it will take longer for a new record high in the CRU data set.

[[Doc assumes that no carbon flows from the upper ocean into the atmosphere. In reality a considerable amount does.]]

Exactly right. To get quantitative, according to the IPCC, about 92 gigatons of carbon enter the ocean each year from the atmosphere, and 90 gigatons enter the atmosphere from the ocean.

Ref 204: thank you for that. I don’t think I’ll get worried yet.

Do the model teams include marine biologists? I hope so — the Southern Ocean is jade green for a reason.

JF

Some do:

http://www.agu.org/pubs/crossref/2006/2005GB002511.shtml

https://www.realclimate.org/index.php?p=160

http://www.bgc-jena.mpg.de/~corinne.lequere/publications.html

Walt Bennett asked for some references regarding the carbon cycle, and I am not sure if the ones I gave were useful. Anyway I have just come across this very recent article which might be of interest:

Balancing the Global Carbon Budget

R.A. Houghton

Annual Review of Earth and Planetary Sciences, May 2007, Vol. 35, Pages 313-347 (doi: 10.1146/annurev.earth.35.031306.140057)

Good one, Alastair. Thank you.

Here’s the link to the abstract, just a few sentences well worth reading.

http://arjournals.annualreviews.org/doi/abs/10.1146/annurev.earth.35.031306.140057?journalCode=earth

Re #229/30: Press release here and a research overview with links here.

Re reply to #153

Forgive me for having to ask a third time. Hopefully my question gets more precise and understandable with each iteration.

I want to understand the issue of structural instabilities.

Given that there is some irreducible imprecision in model formulation and parameterization, what is the impact of this imprecision? I’m glad to hear that the models aren’t tuned to fit any trends, that they are tuned to fit climate scenarios. (Do you have proof of this?) But it is the stability of these scenarios that concerns me. If they are taken as reliable (i.e. persistent) features of the climate system then I can see how there might exist “a certain probability distribution of behaviors” (as conjectured by #160). My concern is that these probability distributions in fact do not exist, or, rather, are unstable in the long-run. If that is true, then the parameterizations could be far off the mark.

What do you think the chances are of that? Please consider an entire post devoted to the issue of structural instability in GCMs. Thanks, as always.

http://environment.newscientist.com/article/dn11899-recent-cosub2sub-rises-exceed-worstcase-scenarios.html

http://environment.newscientist.com/data/images/ns/cms/dn11899/dn11899-1_600.jpg

http://www.csmonitor.com/2007/0522/p01s03-wogi.html

—–excerpt——–

“… The Global Carbon Project study held two surprises for everyone involved, Field says. “The first was how big the change in emissions rates is between the 1990s and after 2000.” The other: “The number on carbon intensity of the world economy is going up.”

Meanwhile, … the Southern Ocean, which serves as a moat around Antarctica, is losing its ability to take up additional CO2, reports an international team of researchers in the journal Science this week. The team attributes the change to patterns of higher winds, traceable to ozone depletion high above Antarctica, and to global warming.

“There’s been a lot of discussion about whether the scenarios that climate modelers have used to characterize possible futures are biased toward the high end or the low end,” Field adds. “I was surprised to see that the trajectory of emissions since 2000 now looks like it’s running higher than the highest scenarios climate modelers are using.”

If so, it wouldn’t be the first time. Recently published research has shown that Arctic ice is disappearing faster than models have suggested.

Despite the relatively short period showing an increase in emissions growth rates, the Global Carbon Project’s report “is very disturbing,” Dr. Weaver says. “As a global society, we need to get down to a level of 90 percent reductions by 2050” to have a decent chance of warding off the strongest effects of global warming.

If this study is correct, “to get there we have to turn this corner much faster than it looks like we’re doing,” he says.

—–end excerpt——-

Re #232: Along with the rest of the ClimateAstrology crowd, what you *want* is to prove your belief that the models are invalid along with the rest of climate science. It’s an interesting hobby for Objectivists with time on their hands, but as has been proved again and again by the few climate scientists who have braved the CA gauntlet, in the end it’s a complete waste of their time.

Re Steve (#235):

He is an Objectivist?

I understand they often have difficulty with some aspects of science – like quantum mechanics, general relativity, the big bang, special relativity, evolution, some economics, certain parts of mathematics, etc. If it doesn’t fit their understanding of philosophy, it’s out. But what fits and what doesn’t oftentimes depends upon the individual’s understanding of “the philosophy.” Never really was all that technically defined. But at least they know they are “the rational ones.”

Come to think of it, there are other groups that claim that title, and they don’t seem to have earned it either. Maybe that is the problem: claiming it. Once you have claimed it, to engage in critical self-examination to identify whether you are behaving rationally is experienced as a threat to everything you believe and everything you stand for. A personal threat. So you don’t engage in critical self-examination. You don’t self-correct. You become unwilling to entertain doubts and uncertainties – and the possibility of becoming something more than you are.

But we digress; that would be philosophy.

Re #237

And the presumptions and distortions in #236, would that be “philosophy” too? Maybe there is a more accurate term for that kind of “digression”?

> Maybe there is a more accurate term

That would be a digression, into nomenclature.

Seems the CO2 numbers are getting back toward Scenario A. Time will tell.

re 232: “My concern is that these probability distributions in fact do not exist, or, rather, are unstable in the long-run. If that is true, then the parameterizations could be far off the mark.”

1. The expressed concern is directly on topic.

2. 27 hours and no fact-based responses yet, from the contributors or from the cheap seats.

Re #235

If anything, what I *want* to believe is that the models are totally reasonable approximations. Unfortunately, a scientific training teaches one to not accept models on faith, but to probe them, investigate their assumptions, limitations, and uncertainties. When agendas such as #235 do not want to hear about model uncertainties, I am not surprised; one encounters that a lot these days. But when the modelers themselves do not want to discuss uncertainties in their parameterizations and predictions, that makes me suspicious. But I am patient. It is entirely possible that my question will be answered satisfactorily, and that my suspicions will be proven unwarranted. To be fair, it’s not an easy question to answer, and perhaps a post is being prepared to address the topic. Maybe the crickets chirping in #240 is actually the sound of beavers busying themselves in the background.

Re #239 “That would be a digression, into nomenclature.”

Then let’s just call #235 what it is – a “dodge” – and be done with this and all digressions. The topic is the accuracy and validity of model projections.

On that topic, I question the precision of the model’s parameters on the grounds that the scenarios to which the model is tuned are not persistent, reliable, indicative features of the atmospheric/oceanic circulation. Rather, the scenarios are a dynamically realized subset of a much wider array of potential dynamical behaviors. I want to know if the fundamental instability of thermodynamic circulation is a serious problem when it comes to parameterizing (i.e. tuning) these models. I can’t see how it wouldn’t be. If one parameter is off, then the error here will propagate to affect the estimation of a neighboring parameter, and so on.

This is in response to bender’s (#232).

bender, I am not a climatologist, and my interest in the field is perhaps a month or two old, but I figured that with a few minutes of looking around on the web, primarily at Real Climate, I would probably run across sufficient material to respond to your requests to the extent that they deserve a response.

bender (#232) wrote:

Essentially, what you are speaking of is chaos and how it might limit the ability to predict not the weather, but the climate, where the climate consists of the statistical properties of the weather over time.

This is a fairly esoteric topic, but it is something under consideration. Moreover, this is something which was discussed (albeit rather briefly) at this website only a few months ago.

gavin wrote:

Please see:

3 Jan 2007

The Physics of Climate Modelling, post #5, inline response

https://www.realclimate.org/index.php/archives/2007/01/the-physics-of-climate-modelling/

Going on to the article by Gavin Schmidt to which this refers, we find the following:

Please see:

Quick Study

If that is true, then the parameterizations could be far off the mark.

The physics of climate modeling

http://www.physicstoday.org/vol-60/iss-1/72_1.html

You write:

This sounds odd to say the least.

Climate scenarios are what you get from the models, not the other way around. What one deals with in climate models are parameterizations. The parameters are adjusted to fit our understanding of the phenomena. For example, we do not know the exact climate sensitivity to carbon dioxide or other forcings. As such, different models use different values for these parameters. The parameters themselves, however, are held constant within any given model. Just as important (as alluded to in the previous passage) are the particular feedbacks which are incorporated into the models.

Nevertheless, assuming you are familiar with how science is done, you will realize of course that the incorporation of additional elements (or feedbacks) is not arbitrary as these necessitate further predictions, and with the evidence that we are accumulating, these most certainly can and will be tested.

Now to estimate the uncertainty associated with our models, we will compare the resulting scenarios against one another. Likewise, we may vary the initial conditions which are the input for a given model, accounting for the uncertainties we have with respect to those initial conditions.

In all cases so far, the estimated rise in temperature (given the current level of carbon dioxide) is in the neighborhood of two to three degrees, suggesting that the results are robust.

You write:

I have already dealt with this above when quoting gavin.

The probability distribution is in terms of the specific weather which is cranked out through computer simulations, not the climate itself. The climate is a measure of the statistical properties of the weather which results from different runs of the models.

You write:

You are right: at this point, such “probability distributions” of climates are not yet a part of such models. The probability distributions are of the weather, the climates are a measure of the statistical properties of the weather simulations.

As for the “climate instabilities,” currently we aren’t seeing any – given the models. What we are seeing is chaos at the level of weather, but the climates which we are seeing are themselves stable. However, the incorporation of additional feedbacks may result in this changing – if we see branching points in the climates which result from different simulations.
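The distinction between chaotic "weather" and stable "climate" statistics can be illustrated with the Lorenz (1963) equations, a standard toy model of chaos. This is only a sketch and has nothing to do with the internals of any GCM: the step size, run length, spin-up and initial states are arbitrary choices, and `lorenz_step` and `run` are names invented for the example. Two runs start from almost identical states; their trajectories end up far apart, while their long-term averages stay close.

```python
import math


def lorenz_step(state, dt, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one fourth-order Runge-Kutta step."""
    def f(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = f(state)
    k2 = f(shifted(state, k1, dt / 2))
    k3 = f(shifted(state, k2, dt / 2))
    k4 = f(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def run(initial, steps=40000, dt=0.01):
    """Return the list of states visited from the given initial state."""
    states, state = [], initial
    for _ in range(steps):
        state = lorenz_step(state, dt)
        states.append(state)
    return states


# Two "realisations" whose initial conditions differ by one part in 10^8.
run_a = run((1.0, 1.0, 20.0))
run_b = run((1.0 + 1e-8, 1.0, 20.0))

SPINUP = 2000  # discard the initial transient before averaging

# "Weather": the largest distance between the two trajectories.
max_separation = max(
    math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))
    for a, b in zip(run_a, run_b)
)

# "Climate": the long-term mean of one variable in each run.
mean_z_a = sum(s[2] for s in run_a[SPINUP:]) / (len(run_a) - SPINUP)
mean_z_b = sum(s[2] for s in run_b[SPINUP:]) / (len(run_b) - SPINUP)

print("largest separation between runs:", max_separation)
print("long-term mean of z:", mean_z_a, mean_z_b)
```

Whether a full climate model behaves this way when its parameters or structure are changed, rather than just its initial state, is the separate question bender is raising; this toy says nothing about that.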

You write:

As gavin suggests, it is a possibility.

You write:

This seems premature insofar as the global climate models aren’t showing any structural instability at this point.

You write:

I hope I have been able to help.

Furthermore, responding to you has helped to clarify my own understanding of what climate models are.

> the scenarios to which the model is tuned are not persistent, reliable,

> indicative features of the atmospheric/oceanic circulation

Chuckle. Of course not. They’re fossil fuel scenarios. From twenty years ago. And impressive, as Gavin wrote up top:

“This was one of the earliest transient climate model experiments …. showed a rather impressive match between the recently observed data and the model projections. … the model results were as consistent with the real world over this period as could possibly be expected and are therefore a useful demonstration of the model’s consistency with the real world. Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.”

Asserting you doubt the current work by attacking the early work — in any area — is fundamentalism, not science.

That’s a seed, not a foundation. Seeds get used up, they don’t remain essential once something grows from them.

Re #244 Not surprising that a rhetorician would try to parse the problem away by redefining the terms of the debate. With a chuckle, no less. FYI I’m referring to two papers published in 2007. Since you’re so clever, try to guess which ones.

bender (#245) wrote:

At this point you are being impolite.

Perhaps in part this is my fault – with my estimation of the Objectivist movement. With regard to that, I apologize, although I must say that I have some experience on which to base such statements.

In many ways, the philosophy isn’t that revolutionary, but it is underdeveloped. I know because I am a former philosophy major who focused on technical epistemology and the philosophy of science. I also have reasons for my views regarding the nature of the movement. I had close, personal contact over approximately a decade – since I was an Objectivist for thirteen years.

Now with regard to what you have just stated, it is you who are being a rhetorician, since the subject of gavin’s post was Hansen’s models from twenty years ago. Those models proved to be fairly accurate, at least Scenario B – which Hansen stated was the most likely at the time he made the predictions.

“At this point you are being impolite.”

Yes. And you are being uneven in your treatment, as my question has twice now received impolite (and, more importantly, irrelevant) commentary, and it is me who you are rebuking. I give back what I’m given. It’s called tit-for-tat. We can discuss the facts or we can ratchet up the rhetoric. You have your choice. For the record, your reply was unhelpful and reflects your amateurism, just as the chuckling in the front row reflects the chuckler’s amateurism. Laugh it up. This issue has legs.

“The subject of gavin’s post was Hansen’s models from twenty years ago.”

The content of the post is as you describe. However the subject of the post is broader than that; it is the credibility of the Hansen et al approach to climate dynamics modeling. You can redefine the debate as narrowly as you like; the harder you work these semantics, the more it worsens your case.

“Those models proved to be fairly accurate”

Your notion of model “accuracy” is perhaps not as nuanced as it needs to be. With a stochastic dynamic system model containing a hundred free parameters, do you have any idea how easy it is to get the right answer … for the *wrong* reasons? A valid model is one which gives you the right answers … for the *right* reasons. There is much more to model validation than an output correlation test, especially over a meagre 20 years.
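The "right answer for the wrong reasons" point can be made concrete with a deliberately trivial example. This is a toy sketch, not a statement about any actual climate model: the two-parameter `toy_model`, the inputs and the parameter values are all invented for illustration. During the calibration period the two inputs move together, so very different parameter pairs reproduce the observations equally well; once the inputs stop moving together, the predictions part ways.

```python
def toy_model(a, b, x1, x2):
    """Hypothetical two-parameter model: output = a * x1 + b * x2."""
    return a * x1 + b * x2


# Calibration period: the second input is always twice the first,
# so the data cannot tell the two parameters apart.
calibration_inputs = [(float(t), 2.0 * t) for t in range(20)]

TRUE_PARAMS = (3.0, 1.0)   # the "right reasons"
WRONG_PARAMS = (1.0, 2.0)  # different parameters with the same a + 2b

observed = [toy_model(*TRUE_PARAMS, x1, x2) for x1, x2 in calibration_inputs]


def max_abs_error(params):
    """Largest mismatch against the calibration observations."""
    return max(abs(toy_model(*params, x1, x2) - obs)
               for (x1, x2), obs in zip(calibration_inputs, observed))


print("calibration error, true params: ", max_abs_error(TRUE_PARAMS))
print("calibration error, wrong params:", max_abs_error(WRONG_PARAMS))

# Out of sample: the inputs no longer move together.
new_x1, new_x2 = 30.0, 10.0
true_prediction = toy_model(*TRUE_PARAMS, new_x1, new_x2)
wrong_prediction = toy_model(*WRONG_PARAMS, new_x1, new_x2)
print("out-of-sample predictions:", true_prediction, wrong_prediction)
```

An output match over the calibration period cannot distinguish the two parameter sets here; only independent constraints on the individual parameters, or data from a period where the inputs decouple, can.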

If your faith in the model’s accuracy is so strong, then why don’t you just sit back patiently, keep quiet, and let the experts defend it? Then my concern will be proven to be unfounded. Then I will leave, we can stay friends, and all will be well again.

bender (#247) wrote:

Alright.

In the name of objectivity, let’s set aside personal comments and anything which may be interpreted (rightly or wrongly) as ad hominem attacks. Instead, as a matter of individual choice, we will focus our efforts on the volitional adherence to reality. As I understand it, nothing is of greater importance. Not obedience to authority. Not any allegiance to any group. Not any value.

Reality comes first.

Now you vaguely speak of amateurism. Assuming you are referring to post #243, if there is something unclear in the explanation that I provided or something about it that is inaccurate, please feel free to point it out and we can discuss.

Re# 243

Just for the record. For things like the direct GHG forcing, for all practical purposes, there is no ‘tuning’ involved in GCMs. CO2, H2O, O3 etc. concentrations all feed directly into the radiative transfer calculations which then produce the associated atmospheric heating/cooling rates (including surface heating/cooling effects). For this aspect of GCMs there is no room for fitting anything. Parameterizations (which can be ‘tuned’) are still necessary, for example, to take into account the role of sub-grid processes such as cloud properties and their effect on the global radiative balance. Cloud feedback issues are perhaps the greatest legitimate source of uncertainty in our ability to predict future climate change (see the climateprediction.net stuff at Oxford?)

W.r.t. clouds, the more we learn, particularly about their vertical properties on a global scale, the better we can reduce this source of uncertainty (Google Cloudsat).