At Jim Hansen’s now famous congressional testimony given in the hot summer of 1988, he showed GISS model projections of continued global warming assuming further increases in human produced greenhouse gases. This was one of the earliest transient climate model experiments and so rightly gets a fair bit of attention when the reliability of model projections is discussed. There have, however, been an awful lot of mis-statements over the years – some based on pure dishonesty, some based on simple confusion. Hansen himself (who is, for full disclosure, my boss) revisited those simulations in a paper last year, where he showed a rather impressive match between the recently observed data and the model projections. But how impressive is this really? And what can be concluded from the subsequent years of observations?

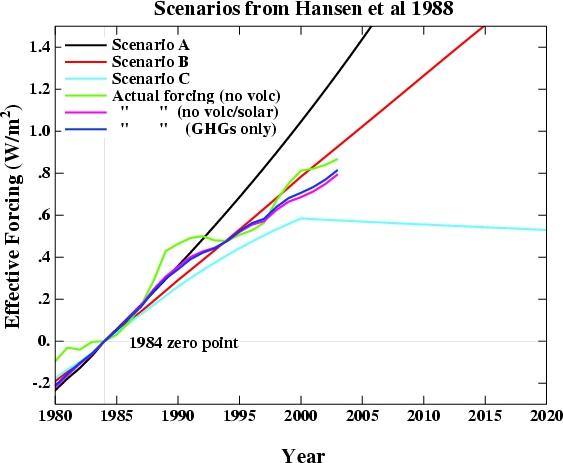

In the original 1988 paper, three different scenarios were used: A, B, and C. They consisted of hypothesised future concentrations of the main greenhouse gases – CO2, CH4, CFCs etc. together with a few scattered volcanic eruptions. The details varied for each scenario, but the net effect of all the changes was that Scenario A assumed exponential growth in forcings, Scenario B was roughly a linear increase in forcings, and Scenario C was similar to B, but had close to constant forcings from 2000 onwards. Scenarios B and C had an ‘El Chichon’ sized volcanic eruption in 1995. Essentially, a high, middle and low estimate were chosen to bracket the set of possibilities. Hansen specifically stated that he thought the middle scenario (B) the “most plausible”.

These experiments were started from a control run with 1959 conditions and used observed greenhouse gas forcings up until 1984, and projections subsequently (NB. Scenario A had a slightly larger ‘observed’ forcing change to account for a small uncertainty in the minor CFCs). It should also be noted that these experiments were single realisations. Nowadays we would use an ensemble of runs with slightly perturbed initial conditions (usually a different ocean state) in order to average over ‘weather noise’ and extract the ‘forced’ signal. In the absence of an ensemble, this forced signal will be clearest in the long term trend.

How can we tell how successful the projections were?

Firstly, since the projected forcings started in 1984, that should be the starting year for any analysis, giving us just over two decades of comparison with the real world. The delay between the projections and the publication is a reflection of the time needed to gather the necessary data, churn through the model experiments and get results ready for publication. If the analysis uses earlier data, e.g. from 1959, it will be affected by the ‘cold start’ problem, i.e. the model is starting with a radiative balance that the real world was not in. After a decade or so that is less important. Secondly, we need to address two questions – how accurate were the scenarios and how accurate were the modelled impacts.

So which forcing scenario came closest to the real world? Given that we’re mainly looking at the global mean surface temperature anomaly, the most appropriate comparison is for the net forcings for each scenario. This can be compared with the net forcings that we currently use in our 20th Century simulations based on the best estimates and observations of what actually happened (through to 2003). There is a minor technical detail which has to do with the ‘efficacies’ of various forcings – our current forcing estimates are weighted by the efficacies calculated in the GCM and reported here. These weight CH4, N2O and CFCs a little higher (factors of 1.1, 1.04 and 1.32, respectively) than the raw IPCC (2001) estimate would give.

The results are shown in the figure. I have deliberately not included the volcanic forcing in either the observed or projected values since that is a random element – scenarios B and C didn’t do badly since Pinatubo went off in 1991, rather than the assumed 1995 – but getting volcanic eruptions right is not the main point here. I show three variations of the ‘observed’ forcings – the first which includes all the forcings (except volcanic) i.e. including solar, aerosol effects, ozone and the like, many aspects of which were not as clearly understood in 1984. For comparison, I also show the forcings without solar effects (to demonstrate the relatively unimportant role solar plays on these timescales), and one which just includes the forcing from the well-mixed greenhouse gases. The last is probably the best one to compare to the scenarios, since they only consisted of projections of the WM-GHGs. All of the forcing data has been offset to have a 1984 start point.

Regardless of which variation one chooses, the scenario closest to the observations is clearly Scenario B. The difference in scenario B compared to any of the variations is around 0.1 W/m2 – around a 10% overestimate (compared to > 50% overestimate for scenario A, and a > 25% underestimate for scenario C). The overestimate in B compared to the best estimate of the total forcings is more like 5%. Given the uncertainties in the observed forcings, this is about as good as can be reasonably expected. As an aside, the match without including the efficacy factors is even better.

What about the modelled impacts?

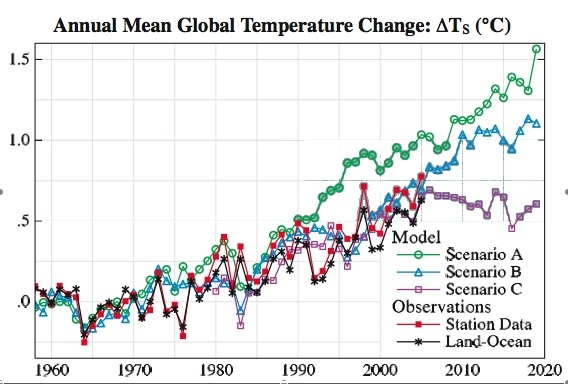

Most of the focus has been on the global mean temperature trend in the models and observations (it would certainly be worthwhile to look at some more subtle metrics – rainfall, latitudinal temperature gradients, Hadley circulation etc. but that’s beyond the scope of this post). However, there are a number of subtleties here as well. Firstly, what is the best estimate of the global mean surface air temperature anomaly? GISS produces two estimates – the met station index (which does not cover a lot of the oceans), and a land-ocean index (which uses satellite ocean temperature changes in addition to the met stations). The former is likely to overestimate the true global surface air temperature trend (since the oceans do not warm as fast as the land), while the latter may underestimate the true trend, since the air temperature over the ocean is predicted to rise at a slightly higher rate than the ocean temperature. In Hansen’s 2006 paper, he uses both and suggests the true answer lies in between. For our purposes, you will see it doesn’t matter much.

As mentioned above, with a single realisation, there is going to be an amount of weather noise that has nothing to do with the forcings. In these simulations, this noise component has a standard deviation of around 0.1 deg C in the annual mean. That is, if the models had been run using a slightly different initial condition so that the weather was different, the difference in the two runs’ mean temperature in any one year would have a standard deviation of about 0.14 deg C (the noise in two independent runs adds in quadrature, giving √2 × 0.1), but the long term trends would be similar. Thus, comparing specific years is very prone to differences due to the noise, while looking at the trends is more robust.
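As a quick check on that arithmetic, here is a minimal sketch. It assumes the weather noise in the two runs is independent and has the same standard deviation in each; the function name is purely illustrative.

```python
import math

def sd_of_difference(sd_single_run: float) -> float:
    """Standard deviation of the difference between two independent runs,
    each with annual-mean noise of standard deviation sd_single_run."""
    # Variances of independent quantities add, so the sd grows by sqrt(2).
    return math.sqrt(2.0) * sd_single_run

print(round(sd_of_difference(0.1), 2))  # 0.14
```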

From 1984 to 2006, the trends in the two observational datasets are 0.24 +/- 0.07 and 0.21 +/- 0.06 deg C/decade, where the error bars (2σ) are derived from the linear fit. The ‘true’ error bars should be slightly larger given the uncertainty in the annual estimates themselves. For the model simulations, the trends are for Scenario A: 0.39 +/- 0.05 deg C/decade, Scenario B: 0.24 +/- 0.06 deg C/decade and Scenario C: 0.24 +/- 0.05 deg C/decade.
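For readers who want to reproduce numbers of this kind, the sketch below shows one way to get a trend and a 2σ error bar from an ordinary least squares fit to annual anomalies. It is an illustration under stated assumptions, not necessarily the exact calculation used here: it treats the residuals as independent (no autocorrelation correction), the function name is hypothetical, and the input series is synthetic rather than the GISS data.

```python
import math
import random

def trend_with_2sigma(years, anomalies):
    """Return (trend, 2-sigma error), both in deg C/decade, from an OLS line fit."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(anomalies) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, anomalies))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Residual variance uses n - 2 degrees of freedom (slope and intercept are fitted).
    ss_resid = sum((y - (intercept + slope * x)) ** 2
                   for x, y in zip(years, anomalies))
    se_slope = math.sqrt(ss_resid / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * 2.0 * se_slope

# Synthetic example only: a 0.2 deg C/decade trend plus noise, NOT real observations.
random.seed(0)
years = list(range(1984, 2007))
synthetic = [0.02 * (y - 1984) + random.gauss(0.0, 0.1) for y in years]
trend, err = trend_with_2sigma(years, synthetic)
print(f"{trend:.2f} +/- {err:.2f} deg C/decade")
```

Swapping in the real annual means for `synthetic` is all that is needed to apply this to either observational dataset or to the model output.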

The bottom line? Scenario B is pretty close and certainly well within the error estimates of the real world changes. And if you factor in the 5 to 10% overestimate of the forcings in a simple way, Scenario B would be right in the middle of the observed trends. It is certainly close enough to provide confidence that the model is capable of matching the global mean temperature rise!

But can we say that this proves the model is correct? Not quite. Look at the difference between Scenario B and C. Despite the large difference in forcings in the later years, the long term trend over that same period is similar. The implication is that over a short period, the weather noise can mask significant differences in the forced component. This version of the model had a climate sensitivity of around 4 deg C for a doubling of CO2. This is a little higher than what would be our best guess (~3 deg C) based on observations, but is within the standard range (2 to 4.5 deg C). Is this 20 year trend sufficient to determine whether the model sensitivity was too high? No. Given the noise level, a trend 75% as large would still be within the error bars of the observation (i.e. 0.18 +/- 0.05), assuming the transient trend would scale linearly. Maybe with another 10 years of data, this distinction will be possible. However, a model with a very low sensitivity, say 1 deg C, would have fallen well below the observed trends.
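The scaling argument can be written out explicitly. This sketch assumes, as stated above, that the transient trend scales linearly with sensitivity; applying the same linear scaling to the 1 deg C case is my simplification for illustration, and the function names are hypothetical.

```python
def scaled_trend(model_trend, model_sensitivity, target_sensitivity):
    """Trend (deg C/decade) if the transient response scaled linearly with sensitivity."""
    return model_trend * target_sensitivity / model_sensitivity

def within(value, centre, half_width):
    """True if value lies inside centre +/- half_width."""
    return centre - half_width <= value <= centre + half_width

# Observed trends and 2-sigma error bars quoted in the post (deg C/decade).
OBSERVED = [(0.24, 0.07), (0.21, 0.06)]

for sensitivity in (3.0, 1.0):
    trend = scaled_trend(0.24, 4.0, sensitivity)  # Scenario B, 4 deg C model
    ok = all(within(trend, c, h) for c, h in OBSERVED)
    print(f"sensitivity {sensitivity} deg C: trend {trend:.2f}, "
          f"within observed error bars: {ok}")
```

With these numbers the 3 deg C case gives 0.18 deg C/decade, inside both observational ranges, while the 1 deg C case gives 0.06 deg C/decade, outside both.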

Hansen stated that this comparison was not sufficient for a ‘precise assessment’ of the model simulations and he is of course correct. However, that does not imply that no assessment can be made, or that stated errors in the projections (themselves erroneous) of 100 to 400% can’t be challenged. My assessment is that the model results were as consistent with the real world over this period as could possibly be expected and are therefore a useful demonstration of the model’s consistency with the real world. Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.

Note: The simulated temperatures, scenarios, effective forcing and actual forcing (up to 2003) can be downloaded. The files (all plain text) should be self-explicable.

146 Tamino, Consider the physical impossibility of having the warmest year in history, with all its heat radiation confined to surface stations. RSS or UAH calculations from raw data seems totally unrealistic, the UW effort looks right on.

Re: 127

Gavin, thanks for your thoughtful in-line comment.

I thought about it a little bit, and did the following quick analysis, in all cases eyeballing the numbers from the chart in Hansen’s 2006 published paper:

1. Averaged the two observational time series to create an estimated actual temperature for each year.

2. Calculated the 18-year temperature change for each 18-year period starting in 1958 and ending in 1988 (the year the prediction was published), i.e., 1958-1975, 1959-1976 and so on through 1971-1988.

3. Did the same calculation for each 13-year period starting in 1958 and ending in 1988.

4. Calculated the mean measured change (.14C) for the population (N=14) of 18-year periods, and then calculated the Std. Dev. (.22C) for this population.

5. Calculated the mean change (.11C) and Std. Dev. (.20C) for the population (N=19) of 13-year periods.

6. Made the synthetic forecast that an analyst would have made in 1988, based on simple trend, for the projected change in temperature over the 13 years ending in 2000 (.11C) and the 18 years ending in 2005 (.14C) vs. 1988.

7. Added these projected changes to the actual temperature in 1988 to create temperature forecasts for 2000 and 2005 as of 1988 based on simple trend.

8. Recorded the Scenario B temperature forecast published in 1988 for the years 2000 and 2005, and termed this “model forecast”.

9. Compared the model forecast to the simple trend-based forecast calculated in Step 7 for the years 2000 and 2005.
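The steps above can be sketched in a few lines. This is only an illustration of the procedure as described: the helper names are hypothetical, the population standard deviation is assumed (the comment does not say which form was used), and the data are a synthetic placeholder rather than the values eyeballed from the chart.

```python
from statistics import mean, pstdev

def average_series(series_a, series_b):
    """Step 1: average two observational series (dicts of year -> anomaly)."""
    return {y: (series_a[y] + series_b[y]) / 2 for y in series_a if y in series_b}

def period_changes(series, first_year, last_year, n_years):
    """Steps 2-3: change over every inclusive n_years window in [first_year, last_year]."""
    span = n_years - 1  # e.g. 1958-1975 is an 18-year window, endpoints 17 years apart
    return [series[y + span] - series[y]
            for y in range(first_year, last_year - span + 1)]

def trend_forecast(series, first_year, base_year, n_years):
    """Steps 4-7: forecast = base-year value + mean change; also return mean and sd."""
    changes = period_changes(series, first_year, base_year, n_years)
    return series[base_year] + mean(changes), mean(changes), pstdev(changes)

# Synthetic placeholder data (a steady 0.01 deg C/yr rise), NOT the chart values.
synthetic = {y: 0.01 * (y - 1958) for y in range(1958, 1989)}
obs = average_series(synthetic, synthetic)

print(len(period_changes(obs, 1958, 1988, 18)))  # 14 windows
print(len(period_changes(obs, 1958, 1988, 13)))  # 19 windows
forecast_2005, mean_change, sd_change = trend_forecast(obs, 1958, 1988, 18)
```

The window counts match the N=14 and N=19 quoted in steps 4 and 5; steps 8 and 9 are then a direct comparison of `forecast_2005` with the published Scenario B value.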

The analytical results are as follows:

1. As is obvious from visual inspection of the chart, the model prediction is basically spot-on for 2005. The simple trend-based forecast for 2005 was .46C, while actual in 2005 was .70C, so the forecast error for the simple trend was .23C, or about 1 Std. Dev. for 18-year periods.

2. The model prediction for 2000 was .55C, while actual was .35C. Forecast error for the model was therefore .20C, or about 1 Std. Dev. for 13-year periods. Forecast error for the simple trend in 2000 was about 0.5 Std. Dev.

3. These results are directionally robust against various starting periods for the analysis, including 1964 (the low point in the historical temperature record provided in Hansen) and 1969, as well as for 13-year and 18-year period mean and Std. Dev. calculated for the whole dataset, including the years 1989 – 2005 not available at the publication date for the forecast.

Observations are as follows:

1. We can not reject the null hypothesis of continuation of the simple pre-1988 trend based on evaluation of model performance through 2005.

2. Once normalized for the underlying trend and variance in the data for differing forecast periods, the model was off by the same number of Std. Dev. in 2000 as the simple trend-based forecast was in 2005. That is, the relative performance of the model vs. simple trend is highly dependent on the selection of evaluation period.

3. The model forecast for the period 2010 – 2015 plateaus at an increase of about .70C, which is about .50C, or slightly more than 2 Std. Dev., greater than the simple trend-based forecast for this period, so as per Hansen’s comments in his 2006 paper, that should be a period when evaluation of the forecast will permit more rigorous evaluation of model performance vs. simple pre-1988 trend.

Gavin, I don’t think any of this contradicts anything that you put in your post to start this thread. As you said clearly, model results to date are consistent with an accurate model but do not prove that the model is accurate.

Parameterized models typically fail soon after they are used to simulate phenomena in domains outside those in which the model was parameterized. As climate continues to warm, the probability of model failure thus increases. The linear trend fit of the Hansen model to observations during the last few decades thus does not imply that we can expect more of the same. This is why I am interested in the uncertainty associated with the parameters that make up the current suite of parameterizations. How likely is it that those parameters are off, and what would be the consequences, in terms of overall prediction uncertainty? Is moist convection the wild card? Why or why not? Tough question, I know. Possibly even overly presumptive. Maybe the best way to answer is through a new post?

[Response: You need to remember that the models are not parameterised in order to reproduce these time series. All the parameterisations are at the level of individual climate processes (evaporation, cloud formation, gravity wave drag etc.). They are tested against climatology generally, not trends. Additionally, the models are frequently tested against ‘out of sample’ data and don’t ‘typically fail’ – vis. the last glacial maximum, the 8.2 kyr event, the mid-Holocene etc. The sensitivity of any of these results to the parameterisations is clearly interesting, but you get a good idea of that from looking at the multi-model meta-ensembles. One can never know that you have spanned the full phase space and so you are always aware that all the models might agree and yet still be wrong (cf. polar ozone depletion forecasts), but that irreducible uncertainty cuts both ways. So far, there is no reason to think that we are missing something fundamental. -gavin]

FCH (#117) I meant including 2010, and I won’t offer long odds but I reckon it has to be more likely than not. For a top-of-my-head calculation, 2007 looks like a miss but 3 more shots, each with a roughly independent 1/3 chance of exceeding 1998 means an 8/27 probability of not breaking the record over this time (hence 19/27 that the record is broken).
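That top-of-the-head figure is easy to verify. A minimal sketch, taking the rough 1/3 chance per year and the independence between years as given in the comment (the function name is illustrative):

```python
from fractions import Fraction

def prob_record_broken(p_per_year, n_years):
    """Probability that at least one of n independent years sets a new record."""
    return 1 - (1 - p_per_year) ** n_years

print(prob_record_broken(Fraction(1, 3), 3))  # 19/27
```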

According to NASA GISS, 2005 already broke the old record.

Re #153 (JA): 2007 looks like a miss? Just based on the GISS combined land/ocean monthlies through April (I assume what’s used for the record, but perhaps it’s land only?), 2007 is second warmest after 2002 (which after a fast start had a relatively cool remaining year and so fell out of the running) but warmer than both 1998 and 2005. Are you expecting La Nina to have its way?

[[By not addressing the actual scales involved, you are entering the realm of irrationality. It’s not that it can’t work, it’s simply that it won’t work given the population of the Earth. An alternative energy solution given today’s technology would require drastic reductions in population.]]

No it wouldn’t. You don’t understand how markets work or how governments can affect them. There’s nothing irrational about what I said, but there’s something ignorant about assuming the US has to stay locked in the infrastructure patterns it now possesses.

[[That is all I am going to contribute to this discussion with you.]]

Super. Then I’ll have the last word by pointing out that renewables can do it all. Maybe not this year, maybe not ten years from now, but to say that it will never happen in our lifetime is just not justified. Technological change can happen very rapidly. Compare the number of horse-drawn buggies being manufactured in 1910 versus 1920. Compare the number of ships built in the US in 1938 versus 1943. Compare the number of electronic calculators in use in 1972 versus 1982. Do I need to go on? We are not locked into the present infrastructure forever. Hundreds of billions of dollars are spent on energy infrastructure every year in this country. How long did it take Brazil to go from gasoline to ethanol for their cars?

[[#113 Barton, How unimaginable it is to have a cluster of 1 million wind turbines over an apt oceanic location, I wonder how much energy that would produce? May be we can produce more wind towers than cars for a few years? ]]

I would love to see that. I understand Denmark is already producing 20% of its electricity from wind, and a lot of that comes from offshore arrays.

[[By the way, planetary Lapse rate = -g/Cp is one of the most elegant equations in atmospheric physics, do you know who was the first person who has derived it? ]]

I’m not sure, but it goes fairly far back. My guess would be 19th century.

Re 127 Jim Manzi,

Hi Jim,

When I use the averaged data as you described from 1958 to 1988 and fit a linear regression I get a slope of 0.09 degC/decade with 95% CIs of 0.04 to 0.14 degC/decade. This is significant, but well below Gavin’s estimate. Using this model to forecast the temperature in 2005 I get an estimate of 0.35C +/- 0.14C, well below the recorded anomaly of 0.7C. I am using the standard approach to estimating and forecasting using ordinary least squares (OLS).

This tells me a forecast based on OLS over this time period is not appropriate. And indeed Gavin has not used OLS to do the forecast, but simply to compare predicted and observed trends. He has done this for a period when the trend is approximately linear and so a linear model is appropriate.

If I had the data for the 3 models I could formally test the hypothesis that their slopes differ for 1988 to 2006. As it is, a forecast for 2005 based on OLS regression for 1988 to 2006 has a mean of 0.61C with a 95%CI from 0.37C to 0.84C. This just tells me what is obvious from the plot. Models B and C are consistent with data, while model A is too high.

Cheers,

Bruce

ps. I get the same estimates for trend (including errors) as Gavin for the 2 observational datasets.

bender (#153) wrote:

My own thoughts, for what they are worth…

I would expect convection and turbulence to come into play, especially hurricanes as they send heat into the atmosphere and cool the ocean below on a large scale, and are themselves so unpredictable.

But there are other things. For example, we don’t presently know all the positive feedbacks, and such feedbacks, even if they were known (and could be appropriately modeled) would undoubtedly introduce a chaotic element much like turbulence itself. Then of course the grid itself implies that the data has only so much resolution. But at this point, there are the model parameters themselves which are known with only a certain level of accuracy. Undoubtedly we will be able to improve upon that and include more of the feedbacks.

It helps, too, to keep in mind that the global model works in concert with regional models. Global calculations determine the background in which regional models are applied, but they don’t have the same level of detail or use as many variables. One recent story involving this is that, while the global models predict temperatures to rise in places like Chicago and Washington DC by a certain amount, regional models are better able to keep track of the time of day when precipitation is likely to occur. Since it is later in the afternoon, this gives the ground a chance to heat up, and as a consequence, rather than being absorbed, the precipitation is more likely to evaporate, drying out the soil, the plants, etc. As such, they are expecting more dry hot summers, and temperatures are likely to be hotter: 100-110 F in the 2080s. And this will affect the global behavior over time. Global models help tune the regional, but the regional will in turn help to tune the global.

Finally, models don’t predict specific behavior. What they predict is a certain probability distribution of behaviors – using multiple runs with slightly different initial conditions. This helps to account for uncertainty with respect to initial conditions and determine the robustness of the results. Likewise, multiple models which may assume different parameters or take into account different mechanisms help to determine the robustness of various results.

#113 #158 A million wind turbines offshore?

Back of the envelope: the current common turbine size is 3 MW, so that makes 3,000,000 MW for such a windfarm. Because of variability in wind, average output would be something like 40% of this (offshore), or 1,200,000 MW.

What does that mean? World energy demand is 15 TW (2.5 kW/head) of which 1/6 is electricity, which gives 15e9/6 = 2.5e9 kW = 2,500,000 MW. Thus 1 million turbines would provide half of the world’s electricity. Now that would be something.
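The same back-of-the-envelope calculation as a short sketch, so the inputs can be varied. All figures are the round numbers from this comment; the function and parameter names are illustrative.

```python
def share_of_world_electricity(n_turbines, rated_mw=3.0, capacity_factor=0.40,
                               world_demand_tw=15.0, electricity_fraction=1 / 6):
    """Fraction of world electricity supplied by n_turbines, on average."""
    installed_mw = n_turbines * rated_mw              # nameplate capacity
    average_mw = installed_mw * capacity_factor       # allows for variable wind
    # 1 TW = 1e6 MW; only part of total energy demand is electricity.
    world_electricity_mw = world_demand_tw * 1e6 * electricity_fraction
    return average_mw / world_electricity_mw

print(f"{share_of_world_electricity(1_000_000):.0%}")  # 48%
```

The result is very sensitive to `capacity_factor`, which is the least certain of the inputs.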

Currently there is 75,000 MW installed, and the expectation for 2020 is 300,000 MW (1/10 of the figure above, generating 5% of the world’s electricity).

Denmark already gets 20% from wind, Germany and Spain are at 6% or so.

There’s some more figures in Wikipedia: http://en.wikipedia.org/wiki/World_energy_resources_and_consumption but they seem not to be entirely clear on the difference between installed wind power and average output power.

Re: 159

Hey Bruce:

Thanks for pushing the analysis.

You write:

“When I use the averaged data as you described from 1958 to 1988 and fit a linear regression I get a slope of 0.09 degC/decade ”

Check (or at least very close to what I get, but I’m eyeballing numbers from a chart).

You’ll find, as I’m sure is obvious to you, that this slope estimate is highly sensitive to the starting year for the calculation. This is because the behavior in the first several years of the dataset is very different than in the balance of the dataset. 1958 – 1963 has essentially flat temperatures, then there is a big drop in 1964 followed by a steady upward trend from 1964 to 1988 (and, unfortunately for all of us, on through to today).

I don’t know of any physical logic for starting the trend calculation in 1958, it’s just the first year Hansen has on his chart. I assume there is some good reason for why this chart starts in 1958, but I don’t know what it is. In the absence of any other a priori information, I think a rational analyst would probably start the slope calculation in 1964 at the start of the “regime change”. If you do this, you would find that the projected temperature for 2006 is about 0.6C. As per the fact that your calculation of the projected value in 2005 based on OLS for the 1988 to 2006 dataset would be .61C, the slope from 1964 to 1988 is not statistically distinguishable from the slope from 1988 to 2006. It is a continuation of the same “trend”.

So should we start the calculation for “trend” in 1958 or 1964, or for that matter, 1864?

Because any of these starting point assumptions is pretty unsatisfying and the slope is so sensitive to this assumption, I calculated the series of 13-year and 18-year differences to create a distribution of slopes and then considered any one difference a draw from this distribution. This is a grossly imperfect way to do this, of course, but a reasonable approximation to the real time-series analysis.

The estimate developed this way for 2005 is .46C with a large distribution of error, while the range of estimate based on an ensemble of OLS models built on data with various starting points ranges from .35C to .60C with some complicated, but large, distribution of error. So whether you do the “poor man’s Bayesian” analysis one way or the other, you still get the same basic conclusion about what “trend” and uncertainty around trend would have been in 2005.

Once again, I don’t think I’ve disagreed in any fundamental way with you or Gavin on this. When evaluating a model forecast that as of the most recent available date is effectively EXACTLY right, there is no way any reasonable person can conclude that the performance of the model is anything other than consistent with a high degree of accuracy. The subtle but important point, that Gavin is explicit about, is that this doesn’t necessarily “prove” that the model is “right”, or more precisely, we have to be careful in evaluating the confidence interval that the performance to date establishes. As our discussion so far indicates, this is a tricky problem, exacerbated by a paucity of data points.

Thanks again.

Then I’ll have the last word by pointing out that renewables can do it all

You don’t understand how markets work or how governments can affect them.

But I do know how science works, and it doesn’t work when christian libertarian scientists sit back in their armchairs, and by merely lifting a little finger, are able to pronounce that markets will accomplish the global implementation of alternative energy, and that no effort by christian libertarian scientists is necessary at all.

One more point regarding the ability of models to predict the behavior of complex systems….

There are many uncertainties involved and additional factors which some later theory might account for undoubtedly. However, oftentimes the uncertainties will cancel each other out, even over extended periods of time. If we look over the charts for predicted vs real temperatures over the span of a decade or more, this is much of what we see: drift, but drift that largely cancels itself out such that the distance between the real and the predicted increases only gradually with the real and the predicted crossing over at various points, particularly early on.

This is in fact part of the central conceit behind climatology: it isn’t dealing with the day to day weather so much as the average behavior of the system, but it can do this only because fluctuations and unknown factors often cancel one another out. In some ways, it reminds me of the dirty back-of-the-envelope calculations a physicist (Oppenheimer?) made immediately after witnessing the test at Trinity – which were fairly accurate. Obviously climate models are far more sophisticated, but I believe the same principle applies.

Hi, I was directed here from a comment at WaMo comment page, and here is basically what I asked there:

The seasonal variation of temperature increase caused by general increase in solar luminosity (as some claim is the major cause of GW) would be different from that due to CO2, wouldn’t it, since the mechanism (direct thermal equilibrium with radiation v. absorption of longer-wave IR) is different? Who is looking at that?

BTW, aside from averages, I notice that the seasonal temperature curve seems to have been pushed forward in time a few weeks, with it staying warmer or colder longer in the year. In any case, the change in average is noticeable here in SE Virginia, where it used to snow more etc., although we get more early spring storms and ice.

Re Thomas Lee Elifritz (#163):

Thomas,

Could we drop the adjective “christian” in our criticisms? I belong to the Quasi branch of Spinozism, but I still find it offensive. Besides, many libertarians are nonreligious. Feel free to criticize libertarians, or better yet, libertarian approaches, but please leave religion out of it.

[Response: Note to all. There are plenty of places to discuss religion and science on the web. This is not one of them. -gavin]

re: # 161

The numbers are off by a factor of about 5; the actual, real-world as-installed-and-operating capacity factor for wind power is about 8% (and that’s the high end of the range). The E.on Wind Report 2005, and this summary of that report gives these numbers.

Wind energy cannot replace base-loaded electricity generating facilities.

Most power-generating methods that are not forced by a big hammer like consumption of fuel seldom ever reach the name-plate capacity even when the driving mechanism is present. The sun doesn’t shine and the wind doesn’t blow all the time. And when these mechanisms are present they are not present to the extent required to attain the rated generating capacity.

All corrections appreciated.

ps

Regarding nuclear energy, if the future situation is as dire as described all round these days, how can *any* options be taken off the table? It would seem that any and all options for energy supplies should be valid for consideration.

Dan Hughes (#167) wrote:

I think things are somewhat worse than what is usually described (IPCC estimates are conservative and do not take into account all of the positive feedback which results from moving outside of the metastable attractor), and in my view we can’t afford to take any of the options off the table – although if we see problems with a given approach, we may try to minimize them through safeguards, e.g., so as to keep nukes out of the hands of rogue regimes. With respect to a given approach, there may be valid concerns, but if that approach is necessary, the concerns may be addressed by other means.

Regarding Wind Power,

Each MW costs about $1.5 million in capital costs on land. I imagine sea-based windmills would be more expensive.

So if you are going to build 1 million 3 MW wind turbines on the ocean, you would need to come up with at least $4,500,000,000,000 to build them. Anyone have $4.5 trillion sitting around?
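The arithmetic behind that figure, as a minimal sketch using the on-land cost per MW quoted above as a lower bound (the function name is illustrative):

```python
def wind_farm_capital_cost(n_turbines, rated_mw, cost_per_mw_usd):
    """Total capital cost in US dollars for n_turbines of rated_mw each."""
    return n_turbines * rated_mw * cost_per_mw_usd

cost = wind_farm_capital_cost(1_000_000, 3, 1_500_000)
print(f"${cost:,}")  # $4,500,000,000,000
```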

Re: #107…

“We” are a group of meteorologists who do modeling and verifications. We are not climate experts but are curious as to the success of actual, real world climate forecasts.

We don’t really know how scenario A, B, and C are defined exactly but clearly “C” has not occurred. The rate of CO2 rise has been increasing so we assumed that forcings in “B” had been exceeded. Therefore, the scenario that occurred was much greater than “C” and greater than “B”.

To estimate the rise in temperature since 1984, we used the difference in moving averages. No matter how we crunched the numbers, the answer was between a .37 and .44 deg rise. I don’t see how you could get a much different answer no matter how you do the numbers.

I’m not sure where our stat guys fetched the data but it came from NOAA and it matches the graphed anomalies that are presented here. Our 1984 smoothed anomaly is around +.14 deg and currently, the smoothed anomaly is about .56 which is a rise of +.42.

Then we simply looked at the warming forecast in the graphs. It appears to us that Hansen clearly overforecast the warming. Once again, we reiterate that Hansen deserves credit. At the time of his research, global warming was a fringe idea and the warming trend had scarcely even begun. Warming has occurred and somewhat dramatically, just not as strongly as forecast.

We aren’t experts in climate science but we are experts in forecasting and verification. This is not a complicated verification. Did we go wrong somewhere ?

[Response: The forcing scenarios are described in the original paper (linked to above) and are graphed for your convenience above. Scenario B has not been exceeded in the real world – what matters is the net forcing, not the individual components separately. The observational data I used are the GISS datasets available online at http://data.giss.nasa.gov/gistemp – different datasets might give slightly different answers, but I haven’t looked into it much. Note that different products available are all slightly different in coverage and methodology and are not obviously superior one to the other. That uncertainty has to factor into the assessment of whether the model trends were reasonable. If you are looking at smoothed anomalies vs the OLS trend, be aware that you are using more information than I did above. -gavin]
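To make the distinction between a difference of smoothed anomalies and an OLS trend concrete, here is a minimal sketch in plain Python. The series is synthetic (a made-up 0.02 deg/yr trend plus an alternating wiggle), not GISS or NOAA data, and the 5-year window is an assumption, since the window actually used was not stated.

```python
def moving_average(values, window):
    """Trailing mean over `window` consecutive points."""
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]

def ols_slope(xs, ys):
    """Ordinary least squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Synthetic anomalies (NOT observations): linear trend plus an alternating wiggle.
years = list(range(1984, 2007))
anomalies = [0.14 + 0.02 * (y - 1984) + (0.05 if y % 2 else -0.05) for y in years]

smoothed = moving_average(anomalies, window=5)
rise_from_smoothing = smoothed[-1] - smoothed[0]                       # last window minus first window
rise_from_trend = ols_slope(years, anomalies) * (years[-1] - years[0])  # trend times full span

print(f"difference of 5-yr averages: {rise_from_smoothing:.2f}")
print(f"OLS trend over 1984-2006:    {rise_from_trend:.2f}")
```

In this made-up series the two measures differ only because the first and last 5-year windows are centred 18 years apart rather than 22, so comparing a smoothed difference against a point-to-point or full-span number is not like for like.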

I am not convinced that that is true, but in any case intermittent distributed electricity production from wind and photovoltaics can dramatically reduce the “need” for baseline generation capacity. In particular rooftop PV tends to generate the most electricity during periods of greatest demand — ie. sunny summer days when air conditioning usage peaks — which means that we don’t need to have as many big power plants to meet that peak demand.

In addition I would point out that small scale distributed wind and PV are ideal solutions for rural electrification in the developing world, in countries which don’t have the resources to build giant power plants of any kind, or to build the grids to distribute electricity from large centralized power plants. That is one of the markets where wind and PV generation are growing rapidly. This is similar to the way some developing countries, like Vietnam, chose to build modern national telephone systems using cell phone technology rather than wired telephone technology.

Nuclear power can be taken off the table because, completely independent of the dangers and risks that it presents, it is not an effective way — and certainly not a cost-effective way — to reduce GHG emissions as much and as rapidly as they need to be reduced. I often hear nuclear advocates proclaiming that “nuclear is THE solution to global warming” and that “no one can be serious about dealing with global warming if they don’t support expanded use of nuclear power” but I have never heard any nuclear advocate lay out a plan showing how many nuclear power plants would have to be built in what period of time to have a significant impact on GHG emissions. That’s because when you really look at it, you will see that even a massive global effort to build thousands of new nuclear power plants would have only a modest impact on GHG emissions and even that impact won’t occur for decades.

Resources spent building new nuclear power plants means resources diverted from other efforts — conservation, efficiency, wind, PV, biofuels, geothermal, mass transit, less car-oriented communities — that would produce more results faster.

#161, Thanks for the revealing calculations. In my opinion 1 million wind towers can be produced faster than 1 million automobiles, and the world produces tens of millions of automobiles a year. Maybe the good people at Ford and Chrysler will start a rethink and produce wind turbines along with hydrogen electric cars: an automobile industry feedback! With the total car concept producing everything from the energy to the motor, while leaving Exxon to the petro-chemical industry.

#169 Your numbers are a bit high given the production costs would lower if mass production occurs.

Have you factored revenues?

Hi Bruce Tabor, thank you for the equation for calculating the levels of Atmospheric CO2 vs. Man made carbon release.

It was a little too complicated for me so I devised my own. Looking at the CO2 data from Hawaii it is obvious from the saw-tooth pattern of the seasonal levels of CO2 that the rate at which CO2 can be removed from the atmosphere is more than an order of magnitude greater than the slow rise in mean CO2. This means we can perform steady state analysis.

To do this I needed an estimate of the amount of CO2 released by Humans per year.

(Marland, G., T.A. Boden, and R. J. Andres. 2006. Global, Regional, and National CO2 Emissions. In Trends: A Compendium of Data on Global Change. Carbon Dioxide Information Analysis Center, Oak Ridge National Laboratory, U.S. Department of Energy, Oak Ridge, Tenn., U.S.A.)

I also used the Hawaiian data from 1959 to 2003 (averaging May and November).

The steady state equation for atmospheric [CO2] ppm is as follows:-

[CO2] ppm = (NCO2 + ACO2)/K(efflux)

Where:-

NCO2 is the release of CO2 into the atmosphere from non-human sources

ACO2 is man-made CO2 released from all activities

and K(efflux) is the rate the CO2 is removed from the atmosphere by all mechanisms.

You can calculate that NCO2 is 21 GT per year, ACO2 in 2003 was 7.303 GT and K(efflux) is 0.076 per year. This last figure gives a half-life for a molecule of CO2 in the atmosphere of 9.12 years.
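For anyone who wants to check these numbers, the steady-state formula can be evaluated directly. This is a sketch of the commenter's own single-sink model only, with the units taken exactly as given in the comment (GT per year divided by a per-year rate, read off as ppm); the function names are illustrative.

```python
import math

N_CO2 = 21.0         # natural release, GT per year (commenter's figure)
A_CO2_2003 = 7.303   # man-made release in 2003, GT per year (commenter's figure)
K_EFFLUX = 0.076     # removal rate, per year (commenter's figure)

def steady_state_ppm(natural, anthropogenic, k_efflux):
    """[CO2] = (NCO2 + ACO2) / K(efflux), as written in the comment."""
    return (natural + anthropogenic) / k_efflux

def half_life_years(k):
    """Half-life of a first-order decay with rate constant k."""
    return math.log(2) / k

ppm_2003 = steady_state_ppm(N_CO2, A_CO2_2003, K_EFFLUX)
ppm_natural_only = steady_state_ppm(N_CO2, 0.0, K_EFFLUX)
ppm_doubled_source = steady_state_ppm(N_CO2, 21.5, K_EFFLUX)

print(f"2003 steady state:        {ppm_2003:.1f} ppm")
print(f"natural sources only:     {ppm_natural_only:.1f} ppm")
print(f"with 21.5 GT/yr man-made: {ppm_doubled_source:.1f} ppm")
print(f"half-life:                {half_life_years(K_EFFLUX):.2f} years")
```

The sketch only reproduces the arithmetic; whether a steady-state, single-rate treatment is physically appropriate is a separate question.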

Running this simulation, with the numbers for ACO2 from Marland et al., 2003, reproduces the Hawaiian data from 1959 to 2003 with an average error of 3.95 +/- 2.8 ppm.

It appears that we would hit the magic number of 560 ppm when our species releases slightly more CO2 into the atmosphere than does mother nature; I make it about 21.5 GT per year from human sources and 21 GT natural to hit 550 ppm.

Please feel free to check my numbers.

RE: #172 and earlier discussions on PV, etc, although this also applies somewhat to windpower: for some techs, costs go down, seriously, as unit volumes go up, and manufacturing processes improve.

If computers were still as expensive as mainframes, we wouldn’t have them in our watches.

In another thread here, I mentioned a good talk by Charles Gay of Applied Materials regarding cost curves for PV cells.

I recommend:

http://www.appliedmaterials.com/news/solar_strategy.html,

On that page, “what is solar electric” is a fairly simple talk.

Charles Gay gives a nice talk in

http://www.appliedmaterials.com/news/assets/solar.wmv

and the parts showing the new manufacturing machinery are impressive.

CAVEAT: this is a commercial company of course, but it has a solid reputation as a sober, conservative one.

#167 Windpower (Dan Hughes), #169 (John Wegner)

Dan, you misinterpret the numbers from the E.ON report. Their installed capacity was 7,050 MW, and average power 1,295 MW (see page 4), giving a capacity factor of 18%. Germany is a special case, lots of turbines are in areas where there’s not much wind, and where turbines never would have been built without the generous feed-in tariff. A more common value is 25% on land (UK and Denmark). The record seems to be 50% in New Zealand.
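The capacity factor is just average power divided by installed capacity, and it converts directly into annual energy. A minimal sketch using the two E.ON figures quoted above (the function names are illustrative):

```python
HOURS_PER_YEAR = 8760

def capacity_factor(average_mw, installed_mw):
    """Fraction of nameplate capacity delivered on average."""
    return average_mw / installed_mw

def annual_energy_gwh(installed_mw, factor):
    """Energy produced in a year, in GWh, for a given capacity factor."""
    return installed_mw * factor * HOURS_PER_YEAR / 1000.0

cf = capacity_factor(1295, 7050)
print(f"capacity factor: {cf:.1%}")
print(f"annual energy:   {annual_energy_gwh(7050, cf):.0f} GWh")
```

The same two functions can be reused with other capacity factors (25% on land, 40% offshore) to compare sites on an energy basis rather than a nameplate basis.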

By the way, the capacity factor only reflects the nature of the wind speed distribution, and the relative size of rotor and generator, i.e. it does not indicate some physical limit to what a wind turbine can do or something. What matters is the cost per kWh.

The figure I used (40% offshore) is not unreasonable. I’ll be happy to dig up some more numbers (it’s an interesting matter actually) but I don’t have the time just now.

Cost of wind turbines on land is commonly cited at EUR 1,000,000 per MW and EUR 1,500,000 per MW offshore.

That’s less than five years of the world’s military expenditures — and less than ten years of the USA’s military expenditures, since the USA accounts for approximately half of the entire world’s military expenditures.

So, yes, there is that much money “setting around”. It’s a question of priorities.

Re #175 Cost of windpower

Yes 4.5 trillion is about right. However, total expenditure for wind is the wrong number to look at. It would cost you roughly the same amount if you were to generate the same amount of electricity by conventional means (if you factor in environmental cost that is – if you don’t, conventional is about a factor “cheaper”). What you have to look at is the cost of generating 1 kWh of electricity.

Doc Martyn: In no way is the atmospheric CO2 concentration in a steady state, which pretty much invalidates your calculation. Human emissions for the past decade have been on the order of 7 GtC/year. The yearly increase in concentration was about 1.9 ppm. 1.9 ppm is about 4 GtC.
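The ppm-to-GtC conversion and the implied fraction of emissions staying in the atmosphere can be sketched as follows. The conversion factor of about 2.12 GtC per ppm is an assumption brought in for illustration (it is a commonly used value, not a number stated in this thread); the other two figures are the ones quoted above.

```python
GTC_PER_PPM = 2.12           # assumed conversion: GtC of carbon per ppm of atmospheric CO2

emissions_gtc_per_year = 7.0   # human emissions, GtC per year (from the comment)
increase_ppm_per_year = 1.9    # observed yearly rise in concentration (from the comment)

increase_gtc_per_year = increase_ppm_per_year * GTC_PER_PPM
airborne_fraction = increase_gtc_per_year / emissions_gtc_per_year

print(f"atmospheric increase: {increase_gtc_per_year:.1f} GtC per year")
print(f"airborne fraction:    {airborne_fraction:.0%}")
```

On these figures a bit more than half of each year's emissions shows up as an atmospheric increase, which is the imbalance the comment is pointing at.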

Of course, I don’t really like the idea of calculating a “carbon lifetime”. You really want an ecosystem model and an ocean model of at least 2 layers (surface and deep ocean). Then you could have exchange rates of the surface layer and the ecosystem with the atmosphere, and of the surface and deep oceans, and you could hypothetically model a pulse of CO2 emissions and back out a “time to 50% reduction”… except that in the real world, the ecosystem is very sensitive to temperature and precipitation, and the ocean mixing may or may not slow down enough to slow exchange of CO2 into the deep ocean…

And in the long term, human emissions would have to drop to ZERO in order to stabilize concentrations, because the deep ocean will eventually reach equilibrium with the surface layers. This differs from gases like methane which do have a “real” lifetime due to reactions with hydroxyl radicals, where, for any given steady-state level of anthropogenic methane emissions, a stabilized concentration value will eventually be reached.

Re: #170 (Heidi)

I can see at least two potential inconsistencies in your analysis. First, for observed temperatures you used the difference in moving averages (what was the size of your averaging window?), but it appears that to gauge the predictions you used the point-to-point temperature difference between 1984 and 2006. Second, as Gavin pointed out, the land-ocean temperature index tends to underestimate the truth because it’s based on sea surface temperature rather than air temperature, while the meteorological-station index temperature tends to overestimate the truth because land warms faster than ocean. The truth probably lies somewhere between.

Gavin, can you post the actual *numbers* for the predictions for scenarios A, B, C, so we can compare them to observation numerically using the same procedures?

Marcus, why do you assume that one can’t use steady state calculations for modeling the levels of atmospheric CO2?

You stated

“The yearly increase in concentration was about 1.9 ppm. 1.9 ppm is about 4 GtC.”

To which I say big deal? The yearly summer/winter difference is greater than this amount. The saw-tooth patterns shown in atmospheric CO2 of the study in Hawaii quite clearly show that the rate at which CO2 is absorbed in the Northern months is much greater than the overall trend.

Please state why I cannot use steady state kinetics? My fit is much better than most and I have based it on a single set of CO2 emission data.

Please [edit] explain your reasoning.

The discussion about alternative power is a) interesting but b) off-topic and thus has a bad effect in terms of the utility of this comment thread. This sort of thing happens a lot, so I would like to suggest to the RC team that a (weekly?) open thread be maintained. John Quiggin does this and it seems to work quite well.

Re Doc (#174):

Atmospheric CO2 half-life? There isn’t any.

There are a variety of sinks for carbon dioxide, including the soil, the ocean and the biosphere. Each of these will act as sinks at different rates. Moreover, the ocean (which has been responsible for absorbing as much as 80% of anthropogenic emissions) can become saturated, or as temperatures rise in the temperate regions or winds increase in arctic regions and stir up carbon dioxide from below, act as an emitter. Likewise CO2 which is absorbed at the surface of the ocean will lower the pH level, and a lower pH level makes the ocean less able to absorb carbon dioxide.

It would be reasonable to expect carbon dioxide to have a half-life if there were only one sink and the absorption of CO2 at a later point were independent of the CO2 which had been absorbed previously. Likewise, heat and drought have been reducing the ability of plants to absorb CO2. As such, there can be no constant “half-life” of atmospheric CO2.

Re 162 G’day Jim,

I agree.

For fun (!) I tried an ARIMA model (Autoregressive integrated moving average) on the 1958 to 1988 data forecasting to 2006. ARIMA models enable the capture of autocorrelated structure in the data.

A simple model that fits is an ARIMA(0,1,1) model. It would take me forever to explain this but basically it involves analysing the change in data from year to year (the data is differenced – to do the forecasts it must be integrated (I)) using a moving average (MA) term but no autoregressive (AR) term.

The forecast for 2005 is 0.40 C with 95%CI of 0.01 to 0.79. The point estimate for the ARIMA model is similar to the OLS (0.35C) but the 95% CI is much broader (0.21 to 0.49 for OLS).

ARIMA models that fit the data from 1880 to 1988 have a very complex covariance structure, which results in very wide CIs for the 2005 forecasts.
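For readers unfamiliar with ARIMA(0,1,1), here is a rough sketch of what such a model forecasts and why its intervals widen with lead time. It assumes the form y_t - y_{t-1} = e_t + theta * e_{t-1} with no drift term, in which case the point forecast is a simple exponentially weighted average with weight 1 + theta. The value of theta and the residual standard deviation used here are hypothetical, not the fitted values behind the numbers quoted above.

```python
import math

def arima011_forecast(series, theta):
    """Flat point forecast of a drift-free ARIMA(0,1,1): exponential smoothing
    with weight alpha = 1 + theta on the newest observation."""
    alpha = 1.0 + theta
    forecast = series[0]                 # start the recursion at the first observation
    for y in series:
        forecast = alpha * y + (1.0 - alpha) * forecast
    return forecast                      # the same value applies at every lead time

def forecast_std(sigma, theta, horizon):
    """Standard deviation of the forecast error `horizon` steps ahead."""
    return sigma * math.sqrt(1.0 + (horizon - 1) * (1.0 + theta) ** 2)

# Hypothetical values for illustration only.
theta = -0.5
sigma = 0.1
for horizon in (1, 5, 17):
    half_width = 1.96 * forecast_std(sigma, theta, horizon)
    print(f"{horizon:2d} years ahead: 95% interval is +/- {half_width:.2f}")
```

Because each year's shock is partly carried forward forever in a differenced model, the forecast variance grows roughly linearly with lead time, which is one reason such intervals come out much wider than those from a straight OLS fit.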

To me this says that this type of modelling is not a particularly useful approach to this problem. Much better to base the model on the underlying physics.

“Each of these will act as sinks at different rates.”

Which is why you use steady state kinetics.

“Moreover, the ocean (which has been responsible for absorbing as much as 80% of anthropogenic emissions)”

How does the ocean know which CO2 molecule is anthropogenic and which is natural?

You say 80%, what on earth does that mean? It’s a number, we are attempting to establish the FLUXES between two or more systems, i.e. the ocean and the atmosphere. A number like 80% does not mean anything, only rates and steady state levels are important.

“can become saturated”

How do you know? Have you ever looked at what happens when you raise the pCO2 above a large mass of deep water? If you, or someone you have read, has performed the experiment, show the reference.

“can become saturated” based on what exactly? Chemistry, physics or conjecture?

“as temperatures rise in the temperate regions or winds increase in arctic regions and stir up carbon dioxide from below, act as an emitter”

“Stir up CO2 from below.”

If I were to raise a billion metric tons of sea water from the depths and dump it on the surface, why would the overall effect be the release of CO2? You may think that the warming of this volume of water from cold to normal temperature will release CO2. However, what about the effects when you consider what this water also contains. If you stir up water from the depths you bring up nutrients. Ocean food chains are pretty much nutrient limited, especially in things like iron and copper. Stirring up nutrients will lead to more photosynthesis.

Moreover, the abstract you cited states that there will be less deep ocean to surface currents. So which is it, more deep water rising or less.

Data, not superstition.

There is considerable confusion here about terminology. Half-life is formally defined as the time necessary to reduce the current value of something to half its value. For first order decays (think simple radioactive decay) where the rate constant k does not change over time the rate of change of x with respect to time, dx/dt, in other words how fast it decays,

dx/dt = -k x and the half life, t1/2 = ln2/k where ln2 ≈ 0.693

the half life is independent of the initial value. For other types of simple kinetic systems the half life depends on the initial value for example in a second order decay

dx/dt =-k x^2 the half life t1/2 = 1/(k xo) where xo was the initial value.

If you let k vary with some parameter or the rate of change of x with time depends on a complex mechanism, the half life will turn out to depend on many things.
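The two closed-form half-lives above can be written out as a short sketch, which makes the dependence on the initial value easy to see. The rate constants and starting values in the loop are arbitrary illustrations.

```python
import math

def half_life_first_order(k):
    """dx/dt = -k x  ->  t_half = ln(2) / k, independent of the starting value."""
    return math.log(2) / k

def half_life_second_order(k, x0):
    """dx/dt = -k x^2  ->  t_half = 1 / (k * x0), shorter for larger starting values."""
    return 1.0 / (k * x0)

for x0 in (1.0, 2.0, 4.0):
    print(f"x0 = {x0}: first order {half_life_first_order(0.5):.3f}, "
          f"second order {half_life_second_order(0.5, x0):.3f}")
```

The first-order column stays constant while the second-order column halves each time the starting value doubles, so a single "half-life" is only meaningful for the first-order case.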

A useful discussion of how half lives depend on kinetic mechanisms can be found in what is usually Chapter 14 of most General Chemistry textbooks. What Timothy Chase appears to be saying is that CO2 kinetics are not simple first order. That is true.

So does that mean a 1 degree increase by around 2015?

Most of this is beyond me but would appreciate a layman’s conclusion?

Recently the papers are saying the oceans are using up their CO2 capacity, will this affect the models?

Thanks,

Grant

Flow of carbon (in the form of CO2, plant and soils, etc) into and out of the atmosphere is treated in what are called box models. There are three boxes which can rapidly (5-10 years) interchange carbon, the atmosphere, the upper oceans, and the land. The annual cycle seen in the Mauna Loa record is a flow of CO2 into the land (plants) in the summer and out of the atmosphere as the Northern Hemisphere blooms (the South is pretty much green all year long) and in reverse in the winter as plants decay. Think of it as tossing the carbon ball back and forth, but not dropping it into the drain. Thus Doc Martyn’s model says nothing about how long it would take for an increase in CO2 in the atmosphere to be reduced to its original value.

To find that, we have to have a place to “hide” the carbon for long times, e.g. boxes where there is a much slower interchange of carbon with the first three. The first is the deep ocean. Carbon is carried into the deep ocean by the sinking of dead animals and plants from the upper ocean (the biological pump). This deep ocean reservoir exchanges carbon with the surface on time scales of hundreds of years. Moreover the amount of carbon in the deep ocean is more than ten times greater than that of the three surface reservoirs.

The second is the incorporation of carbonates (from shells and such) into the lithosphere at deep ocean ridges. That carbon is REALLY lost for a long long time.
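A toy version of this picture can be sketched in a few lines. This is not any published carbon-cycle model: the three surface boxes are collapsed into an atmosphere and a single fast-exchanging reservoir, the deep store is a one-way sink, and both rate constants are hypothetical values chosen only to show the behaviour.

```python
def run_box_model(pulse, years, k_fast=0.2, k_slow=0.005, dt=0.1):
    """Track an excess carbon pulse through a toy three-box system.

    atmosphere <-> fast reservoir (rapid two-way exchange, rate k_fast per year)
    fast reservoir -> deep store  (slow one-way loss, rate k_slow per year)
    All rates are hypothetical. Returns (atmosphere, fast, deep) excess amounts.
    """
    atmosphere, fast, deep = pulse, 0.0, 0.0
    steps = int(round(years / dt))
    for _ in range(steps):
        exchange = k_fast * (atmosphere - fast) * dt   # net flow atmosphere -> fast box
        burial = k_slow * fast * dt                    # slow transfer fast box -> deep store
        atmosphere -= exchange
        fast += exchange - burial
        deep += burial
    return atmosphere, fast, deep

for years in (10, 100):
    a, f, d = run_box_model(pulse=100.0, years=years)
    print(f"after {years:3d} years: atmosphere {a:.0f}, fast box {f:.0f}, deep store {d:.0f}")
```

With these made-up rates the atmospheric excess drops to about half within a decade as the pulse is shared with the fast reservoir, but it then declines only slowly, because real removal depends on the slow transfer to the deep store.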

A good picture of the process can be found at

http://earthobservatory.nasa.gov/Library/CarbonCycle/Images/carbon_cycle_diagram.jpg

A simple discussion of box models can be found at

http://www.nd.edu/~enviro/pdf/Carbon_Cycle.pdf

And David Archer has provided a box model that can be run online at

http://geosci.uchicago.edu/~archer/cgimodels/isam.html

and here is a homework assignment

http://shadow.eas.gatech.edu/~jean/paleo/sets/undergrads1.pdf

180+ comments and counting – pretty sure mine will get lost, but what the heck…

What I’m most curious about is the model’s accuracy given EXACT data. That is, I would like to take Dr. Hansen’s model and parameters from 1984 and run it with the exact data for the relevant independent variables – not guesses by Dr. Hansen – and see how close the model comes. I would think this should eliminate the high-frequency noise that is quite noticeable from the graphs and should therefore be very accurate for every data point.

The point is that of course the actual independent variables (i.e. the ones that are inputs to rather than outputs from the model) will differ from predictions. But given that the predictions were right, would the model have been as accurate as, say, Newton’s laws at predicting future behavior? This is the falsifiable test, and I’m having a hard time finding out when and where it is being done for existing models. If it is being done, I would appreciate some further reading on what is done and how it turned out.

[[But I do know how science works, and it doesn’t work when christian libertarian scientists sit back in their armchairs, and by merely lifting a little finger, are able to pronounce that markets will accomplish the global implementation of alternative energy, and that no effort by christian libertarian scientists is necessary at all. ]]

Gavin et al., I consider this a strike at my religion, if not my politics (I left the Libertarian Party when they wouldn’t endorse Desert Storm in 1991). If it makes it easier to visualize, make Elifritz’s term “Jewish liberal scientists,” or “Black radical scientists.”

[[The seasonal variation of temperature increase caused by general increase in solar luminosity (as some claim is the major cause of GW) would be different from that due to CO2, wouldn’t it, since the mechanism (direct thermal equilibrium with radiation v. absorption of longer-wave IR) is different? Who is looking at that?]]

Neil — you’re right that the mechanism of global warming by increased sunlight would be different. First, the stratosphere would be warming, since sunlight first gets absorbed there (in the UV range, by oxygen and ozone). Second, the equator would be heating faster than the poles, by Lambert’s cosine law.

Instead, the opposite is observed in each case. The stratosphere is cooling, which is predictable from increased greenhouse gases since “carbon dioxide cooling” dominates over heating in the stratosphere. And the poles are heating faster than the tropics — “polar amplification” — which was also predicted by the modelers.

[[The sun doesn’t shine and the wind doesn’t blow all the time. ]]

Actually, fossil-fuel and nuclear power plants aren’t on-line 24/7 either. And note that when the sun doesn’t shine, the wind still blows, and vice versa. And note that there are many ways to store excess power for use during non-peak-input times. Solar thermal plants can store it in molten salt. Windmills and photovoltaics can store it in storage batteries, or by compressing gases in pistons, or in flywheels, or by pumping water uphill to run downhill through a turbine later. We don’t need new technology to start getting a large fraction (and eventually all) of our power from renewables. We need the political will to start building.

[[Regarding Wind Power,

Each MW costs about $1.5 million in capital costs on land. I imagine, sea-based windmills would be expensive.

So if you are going to build 1 million 3 MW wind turbines on the ocean, you would need to come up with at least $4,500,000,000,000 to build it. Anyone have $4.5 trillion setting around? ]]

How much is spent around the world on building new power plants each year? The $4.5 trillion you’re talking about wouldn’t be a cost on top of all our other costs, it would be a cost in place of another cost.

“Thus Doc Martyn’s model says nothing about how long it would take for an increase in CO2 in the atmosphere to be reduced to its original value.”

Well actually it does, assuming that at Y=0, atmos. [CO2] = 373 ppm and all man made CO2 generation ended. It takes one half-life, Y=10, for CO2 to fall from 373 to 321 ppm, at Y=18 it falls to 300 ppm and at Y=27 [CO2] is 288 ppm. After four half-lives, 36 years it falls to 282 ppm.
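The decay implied by this single-rate model can be reproduced with a short sketch. It assumes exponential relaxation towards the natural-only level NCO2/K, using the commenter's K(efflux) = 0.076 per year and NCO2 = 21 GT per year; it illustrates that model only, not the multi-reservoir behaviour other commenters describe.

```python
import math

K_EFFLUX = 0.076     # removal rate per year (commenter's figure)
NATURAL = 21.0       # natural release, GT per year (commenter's figure)
START_PPM = 373.0    # concentration when man-made emissions are switched off

def co2_after(years, start_ppm=START_PPM, natural=NATURAL, k=K_EFFLUX):
    """Concentration after `years`, relaxing exponentially to natural / k."""
    floor = natural / k                                   # natural-only steady state
    return floor + (start_ppm - floor) * math.exp(-k * years)

for years in (0, 10, 18, 27, 36):
    print(f"Y={years:2d}: {co2_after(years):.1f} ppm")
```

Run this way, the values land within about 1 ppm of the figures quoted in the comment.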

Neil (#165) a solar mechanism would heat the stratosphere and the troposphere and the surface. Greenhouse gas warming warms the troposphere and the surface and cools the stratosphere. That was one of the first things people looked at and, guess what, the stratosphere is cooling. Also look at Elmar Uherek’s excellent explanation

Re #191 [Actually, fossil-fuel and nuclear power plants aren’t on-line 24/7 either.]

But there’s no reason for all the FF & nuclear plants serving an area to go off-line at the same time. If you have cloudy conditions, or at night, all solar output will drop over wide areas. Likewise wind depends on weather systems: get a high pressure system sitting over e.g. the west coast, and the regional wind power output would drop.

[And note that when the sun doesn’t shine, the wind still blows, and vice versa.]

Not necessarily: around here the wind tends to blow strongly during the afternoon and evening. Midnight to noon will generally be calm. Then there are seasonal variations in the pattern: we see much stronger wind from late winter to early summer than in the rest of the year.

[And note that there are many ways to store excess power for use during non-peak-input times.]

All of which A) require significant investment in the equipment to do the storage; and B) lose significant amounts of energy in the storage cycle. This significantly raises your cost per MWh from these sources. Check out the difference in cost between a grid-connected home solar array, and an off-the-grid system, for instance.

Re: #65

I have been looking at that in doing plots of mean temperatures at climate stations in the U.S. (1890s to current). The most warming has been occurring in the Upper Midwest, Alaska and the upper elevation stations in New Mexico, Colorado, Wyoming and Montana.

I’ve also been looking at average overnight minimum temperatures vs average daily maximums. As expected with greenhouse warming, the minimums are increasing faster than the maximums. Increasing humidity is a factor in that too.

Plots at:

http://new.photos.yahoo.com/patneuman2000/albums

http://npat1.newsvine.com/

http://www.mnforsustain.org/climate_snowmelt_dewpoints_minnesota_neuman.htm

> one half-life, Y=10, for CO2

This combines several different assumptions. Have you read the “Science Fiction Atmospheres” piece?

It seems like it’s time for someone to restate the obvious, so I’ll volunteer.

Of course alternatives to fossil fuels cost more than fossil fuels do, provided you neglect the environmental impact of fossil fuels. If this were not the case, the other sources of power would already be dominant.

Unfortunately, the marketplace as presently constituted does not adequately account for the damage due to fossil fuels.

The fact that carbon is not already largely phased out is a simple example of the tragedy of the commons. In the global aggregate, fossil fuel use is much more expensive than it appears. It’s just that you are extracting wealth from a common pool every time you use them, rather than from your own resources. The commons is the climate system. So each of us, when we maximize individual utility in our energy decisions, reduce the viability of the world as a whole, by extracting value from the climate resource held in common.

The simplest solution is to increase the cost to the consumer of carbon emissions to the point where these externalities are accounted for, and wait for alternatives to emerge from private enterprise. Since it would be counterproductive to increase the profits of the producer, this amounts to a tax. Public revenues can be held constant, if so desired, by reducing other taxes.

The devil is in the details of course, but the big picture is really not all that complicated.

Ref: saturation of Antarctic ocean.

I can’t find a decent summary of the research. Could someone kindly tell me what wind data they used. If the warming scenarios are correct then I would have expected wind speeds to decrease as the driver, temperature difference, will also be reduced between the tropics and the poles.

My bet is that surface pollution is the culprit, not wind — all CO2 mechanisms are disrupted by this.

Is there an isotope signal from this new outgassing?

JF