At Jim Hansen’s now famous congressional testimony given in the hot summer of 1988, he showed GISS model projections of continued global warming assuming further increases in human produced greenhouse gases. This was one of the earliest transient climate model experiments and so rightly gets a fair bit of attention when the reliability of model projections is discussed. There have however been an awful lot of mis-statements over the years – some based on pure dishonesty, some based on simple confusion. Hansen himself (and, for full disclosure, my boss), revisited those simulations in a paper last year, where he showed a rather impressive match between the recently observed data and the model projections. But how impressive is this really? And what can be concluded from the subsequent years of observations?

In the original 1988 paper, three different scenarios were used: A, B, and C. They consisted of hypothesised future concentrations of the main greenhouse gases – CO2, CH4, CFCs etc. together with a few scattered volcanic eruptions. The details varied for each scenario, but the net effect of all the changes was that Scenario A assumed exponential growth in forcings, Scenario B was roughly a linear increase in forcings, and Scenario C was similar to B, but had close to constant forcings from 2000 onwards. Scenarios B and C had an ‘El Chichon’ sized volcanic eruption in 1995. Essentially, a high, middle and low estimate were chosen to bracket the set of possibilities. Hansen specifically stated that he thought the middle scenario (B) the “most plausible”.

These experiments were started from a control run with 1959 conditions and used observed greenhouse gas forcings up until 1984, and projections subsequently (NB. Scenario A had a slightly larger ‘observed’ forcing change to account for a small uncertainty in the minor CFCs). It should also be noted that these experiments were single realisations. Nowadays we would use an ensemble of runs with slightly perturbed initial conditions (usually a different ocean state) in order to average over ‘weather noise’ and extract the ‘forced’ signal. In the absence of an ensemble, this forced signal will be clearest in the long term trend.

How can we tell how successful the projections were?

Firstly, since the projected forcings started in 1984, that should be the starting year for any analysis, giving us just over two decades of comparison with the real world. The delay between the projections and the publication is a reflection of the time needed to gather the necessary data, churn through the model experiments and get results ready for publication. If the analysis uses earlier data, i.e. from 1959, it will be affected by the ‘cold start’ problem, i.e. the model is starting with a radiative balance that the real world was not in. After a decade or so that is less important. Secondly, we need to address two questions – how accurate were the scenarios and how accurate were the modelled impacts.

So which forcing scenario came closest to the real world? Given that we’re mainly looking at the global mean surface temperature anomaly, the most appropriate comparison is for the net forcings for each scenario. This can be compared with the net forcings that we currently use in our 20th Century simulations based on the best estimates and observations of what actually happened (through to 2003). There is a minor technical detail which has to do with the ‘efficacies’ of various forcings – our current forcing estimates are weighted by the efficacies calculated in the GCM and reported here. These weight CH4, N2O and CFCs a little higher (factors of 1.1, 1.04 and 1.32, respectively) than the raw IPCC (2001) estimate would give.

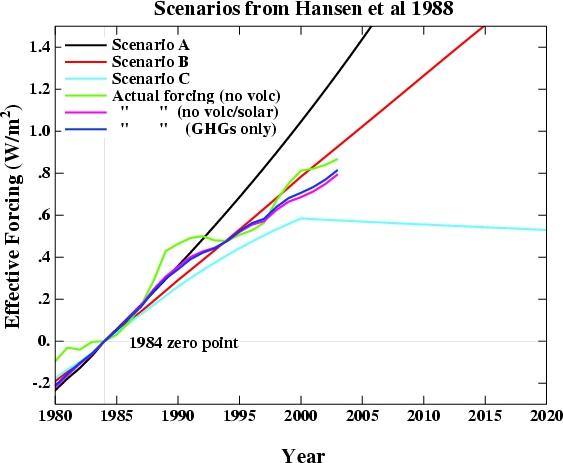

The results are shown in the figure. I have deliberately not included the volcanic forcing in either the observed or projected values since that is a random element – scenarios B and C didn’t do badly since Pinatubo went off in 1991, rather than the assumed 1995 – but getting volcanic eruptions right is not the main point here. I show three variations of the ‘observed’ forcings – the first which includes all the forcings (except volcanic) i.e. including solar, aerosol effects, ozone and the like, many aspects of which were not as clearly understood in 1984. For comparison, I also show the forcings without solar effects (to demonstrate the relatively unimportant role solar plays on these timescales), and one which just includes the forcing from the well-mixed greenhouse gases. The last is probably the best one to compare to the scenarios, since they only consisted of projections of the WM-GHGs. All of the forcing data has been offset to have a 1984 start point.

Regardless of which variation one chooses, the scenario closest to the observations is clearly Scenario B. The difference in scenario B compared to any of the variations is around 0.1 W/m2 – around a 10% overestimate (compared to > 50% overestimate for scenario A, and a > 25% underestimate for scenario C). The overestimate in B compared to the best estimate of the total forcings is more like 5%. Given the uncertainties in the observed forcings, this is about as good as can be reasonably expected. As an aside, the match without including the efficacy factors is even better.

What about the modelled impacts?

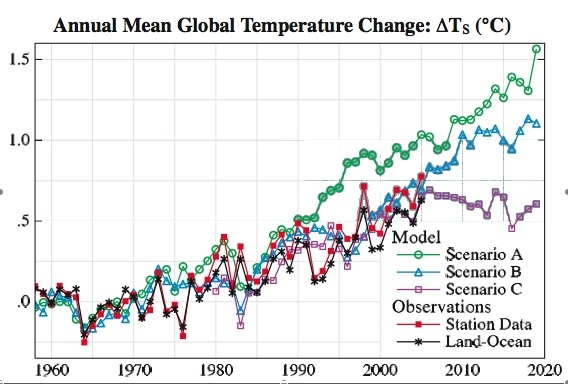

Most of the focus has been on the global mean temperature trend in the models and observations (it would certainly be worthwhile to look at some more subtle metrics – rainfall, latitudinal temperature gradients, Hadley circulation etc. but that’s beyond the scope of this post). However, there are a number of subtleties here as well. Firstly, what is the best estimate of the global mean surface air temperature anomaly? GISS produces two estimates – the met station index (which does not cover a lot of the oceans), and a land-ocean index (which uses satellite ocean temperature changes in addition to the met stations). The former is likely to overestimate the true global surface air temperature trend (since the oceans do not warm as fast as the land), while the latter may underestimate the true trend, since the air temperature over the ocean is predicted to rise at a slightly higher rate than the ocean temperature. In Hansen’s 2006 paper, he uses both and suggests the true answer lies in between. For our purposes, you will see it doesn’t matter much.

As mentioned above, with a single realisation, there is going to be an amount of weather noise that has nothing to do with the forcings. In these simulations, this noise component has a standard deviation of around 0.1 deg C in the annual mean. That is, if the models had been run using a slightly different initial condition so that the weather was different, the difference in the two runs’ mean temperature in any one year would have a standard deviation of about 0.14 deg C, but the long term trends would be similar. Thus, comparing specific years is very prone to differences due to the noise, while looking at the trends is more robust.
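The step from 0.1 to 0.14 deg C follows if the noise in the two runs is independent: the standard deviation of the difference of two independent values with equal spread is the square root of 2 times the individual spread. Here is a minimal sketch of that arithmetic, assuming independent Gaussian noise (an idealisation for illustration, not the model's actual noise). The function names are hypothetical.

```python
import math
import random


def sd_of_difference(sd_single):
    """SD of the difference of two independent values that share the same SD."""
    return math.sqrt(2.0) * sd_single


def simulated_sd_of_difference(sd_single, n=50_000, seed=0):
    """Monte Carlo check: draw pairs of independent Gaussian 'years' and
    return the sample SD of their differences."""
    rng = random.Random(seed)
    diffs = [rng.gauss(0.0, sd_single) - rng.gauss(0.0, sd_single) for _ in range(n)]
    mean = sum(diffs) / n
    variance = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return math.sqrt(variance)


if __name__ == "__main__":
    print(f"analytic:  {sd_of_difference(0.1):.3f} deg C")
    print(f"simulated: {simulated_sd_of_difference(0.1):.3f} deg C")
```

Both numbers should land close to 0.14 deg C. If the noise in neighbouring years or runs were correlated, the factor would differ.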

From 1984 to 2006, the trends in the two observational datasets are 0.24 +/- 0.07 and 0.21 +/- 0.06 deg C/decade, where the error bars (2σ) are derived from the linear fit. The ‘true’ error bars should be slightly larger given the uncertainty in the annual estimates themselves. For the model simulations, the trends are for Scenario A: 0.39 +/- 0.05 deg C/decade, Scenario B: 0.24 +/- 0.06 deg C/decade and Scenario C: 0.24 +/- 0.05 deg C/decade.
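For readers who want to reproduce numbers of this kind, here is a minimal sketch of an ordinary least-squares trend with a 2σ error bar taken from the fit. It assumes uncorrelated residuals, and the data in the usage example are synthetic placeholders, not the GISS observations or model output. The function name is hypothetical.

```python
import math


def trend_per_decade(years, anomalies):
    """Ordinary least-squares trend and its 2-sigma uncertainty, in deg C/decade.

    Assumes residuals are uncorrelated; autocorrelation would widen the
    error bar.
    """
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, anomalies))
    # Standard error of the slope, with n - 2 degrees of freedom
    slope_se = math.sqrt(rss / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * 2.0 * slope_se


if __name__ == "__main__":
    # Synthetic series: a 0.24 deg C/decade line plus alternating +/-0.1 'noise'
    years = list(range(1984, 2007))
    anomalies = [0.024 * (y - 1984) + (0.1 if i % 2 else -0.1)
                 for i, y in enumerate(years)]
    trend, two_sigma = trend_per_decade(years, anomalies)
    print(f"{trend:.2f} +/- {two_sigma:.2f} deg C/decade")
```

Run on the downloadable annual series from 1984 to 2006, a fit like this should give values comparable to those quoted, though the exact error bars depend on how the fit treats the residuals.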

The bottom line? Scenario B is pretty close and certainly well within the error estimates of the real world changes. And if you factor in the 5 to 10% overestimate of the forcings in a simple way, Scenario B would be right in the middle of the observed trends. It is certainly close enough to provide confidence that the model is capable of matching the global mean temperature rise!

But can we say that this proves the model is correct? Not quite. Look at the difference between Scenario B and C. Despite the large difference in forcings in the later years, the long term trend over that same period is similar. The implication is that over a short period, the weather noise can mask significant differences in the forced component. This version of the model had a climate sensitivity of around 4 deg C for a doubling of CO2. This is a little higher than what would be our best guess (~3 deg C) based on observations, but is within the standard range (2 to 4.5 deg C). Is this 20 year trend sufficient to determine whether the model sensitivity was too high? No. Given the noise level, a trend 75% as large would still be within the error bars of the observation (i.e. 0.18 +/- 0.05), assuming the transient trend would scale linearly. Maybe with another 10 years of data, this distinction will be possible. However, a model with a very low sensitivity, say 1 deg C, would have fallen well below the observed trends.
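The scaling argument can be written out as a few lines of arithmetic. This is only a sketch under the stated assumption that the transient trend scales linearly with sensitivity. It also uses a deliberately simple consistency test: does the scaled central trend fall inside an observed trend's 2σ range? The function names are hypothetical, and the numbers are the ones quoted in the text.

```python
def scaled_trend(model_trend, model_sensitivity, new_sensitivity):
    """Scale a modelled trend linearly with climate sensitivity (an assumption)."""
    return model_trend * new_sensitivity / model_sensitivity


def within_error_bars(value, centre, half_width):
    """True if value lies inside centre +/- half_width."""
    return centre - half_width <= value <= centre + half_width


if __name__ == "__main__":
    observed = [(0.24, 0.07), (0.21, 0.06)]  # deg C/decade, 2-sigma, from the text
    scenario_b_trend = 0.24                  # deg C/decade, model sensitivity ~4 deg C
    for sensitivity in (3.0, 1.0):
        trend = scaled_trend(scenario_b_trend, 4.0, sensitivity)
        ok = all(within_error_bars(trend, c, h) for c, h in observed)
        print(f"sensitivity {sensitivity} deg C -> trend {trend:.2f}, consistent: {ok}")
```

With a sensitivity of 3 deg C the scaled trend is 0.18 deg C/decade, inside both observed ranges. With 1 deg C it drops to 0.06 deg C/decade, below both.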

Hansen stated that this comparison was not sufficient for a ‘precise assessment’ of the model simulations and he is of course correct. However, that does not imply that no assessment can be made, or that stated errors in the projections (themselves erroneous) of 100 to 400% can’t be challenged. My assessment is that the model results were as consistent with the real world over this period as could possibly be expected and are therefore a useful demonstration of the model’s consistency with the real world. Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.

Note: The simulated temperatures, scenarios, effective forcing and actual forcing (up to 2003) can be downloaded. The files (all plain text) should be self-explicable.

RE # 98

[Why do you assume I haven’t? ]

Barton, put aside your wishful thinking and get serious about the 4 trillion kilowatt-hour US 2006 electric power demand (minus the 10-20 percent of high end efficiency capability) being replaced by:

a. how many wind towers;

b. how many sqft of solar panels (absent storage capability)

their output being fed into a grid that today struggles to manage the flow of bulk power transfers.

Wishing an end to fossil fuel consumption is about all we believers seem to offer.

Give us some numbers, please.

You need to revisit the concept of “renewable energy.”

Surely you jest. I am a pioneer of alternative energy. I certainly don’t have to justify my contributions to anybody.

The world does not have to run on fossil fuels.

Yes it does. We are not anywhere near closing any supply chain loops for any aspect of alternative and/or renewable energy. Ideally, the solar irradiance is adequate, but until we come up with some technological breakthroughs in optical and/or thermal energy conversion, we are many many orders of magnitude away from anything even remotely qualified as sustainable, and thus still utterly dependent upon fossil fuels. The numbers are simply not there yet, no matter how cavalierly you wave them away with simple monocrystalline solar cells and small wind generators, lead acid batteries, 12 volt DC appliances and inverters. I encourage you to install them, as I have developed these techniques over the previous several decades, but unless you’re extremely clever and resourceful, these systems will not deliver food to your table from great distances.

Are you that clever and resourceful? Are you ready for sustenance farming in your back yard? Are you ready to dump that Victorian home and/or cheap stick McMansion, and build a superinsulated Earth home? How do you intend to pay your ta-xes and mort-gage? Are you familiar with the amount of capital investment required for a complete alternative solution? Multiply that by billions.

Clearly this is one part of the solution, but I find it incredible that you can completely trivialize that from the comfort of your armchair. That’s not how science is done and technology is developed. If you’re going to talk, you need to walk.

http://www.sciencemag.org/cgi/content/abstract/316/5825/709

Chip:

In regards to the IPCC emissions scenarios, things get a bit confusing when one is dealing with predictions of things we ourselves can influence. So, yes, I will agree with you that the high end IPCC scenarios are unlikely to come to pass but that is because I don’t think humanity will be that stupid in the face of this problem and will, at some point, ignore what the Cato Institute and Western Fuels Association thinks is best (despite the best efforts of you and Patrick Michaels!) and actually do what is best for humanity.

So, in a sense, we are back at the Easterbrook Fallacy: It is exactly because we are going to take action to avoid the worst scenarios that they are unlikely to actually come to pass. However, it obviously doesn’t then follow that we should do nothing to avoid them because they aren’t likely to happen!

You need to revisit the concept of “renewable energy.” The world does not have to run on fossil fuels.

[note to moderator]

Rather than arguing here with people, it would probably be more productive to point them to some fairly recent ‘numbers’ :

http://www.sciencemag.org/cgi/content/abstract/298/5595/981

Review

ENGINEERING:

Advanced Technology Paths to Global Climate Stability: Energy for a Greenhouse Planet

Hoffert et al.

There’s a very simple yes/no question in #42 still awaiting reply. Unfortunate that the moderator departed at #40. I know: folks are busy.

[Response: Not sure what yes/no question you are referring to, or indeed what error bars are being referred to in #42. Observational errors on any one annual mean temperature anomaly estimate are around 0.1 deg C, and the errors from the linear fits are given in the text. If you were more precise, I might be able to help. – gavin]

Funny how we looked at the very same set of forecasts and came up with a much less favorable evaluation.

Let’s use the verification period from mid 1984 to Feb 2007. How much have temperatures risen since then? Our figures show that the average global temperature has risen between .37 deg C and .43 deg C depending on how we smooth it. Either way, this agrees roughly with the commonly quoted warming rate of .17 deg C per decade.

From the graphs in Hansen’s paper, we interpolate the following forecasts of warming for the 1984-2006 period….

Scenario A 0.84 deg

Scenario B 0.64 deg

Scenario C 0.48 deg

So, forecast minus observed….

Scenario A 0.84 deg – .40 deg = error of 0.44 deg

Scenario B 0.64 deg – .40 deg = error of 0.24 deg

Scenario C 0.48 deg – .40 deg = error of 0.08 deg.

Our interpretation is that Scenario A is the one that has occurred…

“Scenario A assumes continued exponential trace gas growth, scenario B assumes a reduced linear growth of trace gases, and scenario C assumes a rapid curtailment of trace gas emissions such that the net climate forcing ceases to increase after the year 2000.”

http://adsabs.harvard.edu/abs/1988JGR….93.9341H

The rate of CO2 growth has continued at an increasing rate.

So, in summary, Hansen overforecast the warming by more than a factor of two (.84 deg vs .40 deg). Even allowing Scenario B as the verification, the forecast is still off by 60% (.64 vs .40).

Hansen’s forecast, however, should not be totally discredited. At the time of his research, global warming was a fringe idea and the warming trend had scarcely even begun. Warming has occurred and somewhat dramatically, just not as strongly as forecast.

[Response: Who are ‘we’? I ask because I find a number of aspects of ‘your’ analysis puzzling. First off, there is no way that Scenario A was more realistic – the proof is in figure 1 above which gives the real forcings used. Secondly, I cannot discern what your interpolation procedure is for analysing the data – it is not a linear trend (which are given above) – and without that information it is unclear whether it is appropriate and what the error bars on the forced component would be due to the unforced (random) noise component associated with any one year in any one realisation. And thirdly, you don’t give a source for your observational data – absent these three things I cannot comment on your conclusions. – gavin]

re #58

I don’t know how you can look at the satellite record since 1998 and not conclude that the temperature has fallen.

“Statistically significant trend” is open for debate…but clearly satellite measured temperatures are lower than in 1998.

http://upload.wikimedia.org/wikipedia/en/7/7e/Satellite_Temperatures.png

Heidi (#107) wrote:

Impressive chart.

For the last twenty-five years, the new highs have been coming along with a fair amount of regularity. The trend lines seem quite telling.

I would say that the temperatures are definitely going up.

re #70, #85 Gas at $4/US Gallon?? Perish the thought!

Over here in the UK, today’s pump price was 95.9p/litre.

Using 1 US gallon = 3.785 litres, that works out at roughly 3.63GBP.

Using the xe.com’s exchange rate of 1 GBP = 1.97705 USD, that equates to $7.18/gallon. (when I last ran this calculation, the USD was even weaker, and the sums worked out to c$8.50/gallon).
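The conversion above is easy to check. A minimal sketch using only the figures quoted in the comment (pump price, litres per US gallon and the exchange rate of the day); the function name is hypothetical.

```python
LITRES_PER_US_GALLON = 3.785


def price_per_us_gallon(pence_per_litre, usd_per_gbp):
    """Convert a UK pump price in pence/litre to (GBP, USD) per US gallon."""
    gbp_per_gallon = pence_per_litre / 100.0 * LITRES_PER_US_GALLON
    return gbp_per_gallon, gbp_per_gallon * usd_per_gbp


if __name__ == "__main__":
    gbp, usd = price_per_us_gallon(95.9, 1.97705)
    print(f"{gbp:.2f} GBP/gallon, {usd:.2f} USD/gallon")
```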

The point, though, is this: even at that price, people still drive their cars over here. Traffic volume continues to increase in this green and pleasant land, so I suspect it’s going to take something a bit sterner than a mere $8/US Gallon to materially affect people’s decisions about transport and energy use.

Heidi,

“Statistically significant trend” was their choice, not mine, and they freely admit they got it wrong, so it’s a bit silly for you to pretend otherwise. Obviously given the size of the temperature spike in the monthly satellite record for 1998, it may be some time before this measurement is beaten. However, the annual average record in surface temperatures should be overtaken in the next few years. I’d bet money on it happening by 2010, in fact. Any takers?

“Observational errors on any one annual mean temperature anomaly estimate are around 0.1 deg C”

There are a finite number of temperature recording stations and these are placed around the Earth in a heterogeneous manner. It is therefore obvious that each station requires a different weighting, and coupled with natural variation of temperature, there can be no simple formula for determination of the SD, as in +/- 0.1 deg C.

What is the actual error for the years recorded? What are the error bars of projection A, B and C in the year 2010?

[Response: Estimates of the error due to sampling are available from the very high resolution weather models and from considerations of the number of degrees of freedom in the annual surface temperature anomaly (it’s less than you think). How would I be able to know the actual error in any one year? If I knew it, I’d fix the analysis! Finally, what do you mean by the ‘error bars of projection’? These were single realisations, and so don’t have an ensemble envelope around them (which is how we would assess the uncertainty due to weather noise today). That would have given ~0.1 deg C again in any one year, much less difference in the trend. If you want uncertainty due to the forcings, then take the span of Scenario A to C, if you want the uncertainty due to the climate model, you need to compare different climate models which is a little beyond this post – but look at IPCC AR4 to get an idea. – gavin]

[[Barton, put aside your wishful thinking and get serious about the 4 trillion kilowatt-hour US 2006 electric power demand (minus the 10-20 percent of high end efficiency capability) being replaced by:

a. how many wind towers;

b. how many sqft of solar panels (absent storage capability)

their output being fed into a grid that today struggles to manage the flow of bulk power transfers. ]]

John, put aside your wishful thinking and get serious about the amount of new power-related infrastructure built every year, and how quickly we could switch to renewables if the bulk of that were directed that way. For an example of how quickly the US can produce something it really wants to produce, you might consider the amount of US shipbuilding in 1938 versus that in 1943.

I didn’t say renewables could do it all this minute. What I said was that renewables could do it all, a point which I will continue to stand by despite your patronizing attitude.

[[The world does not have to run on fossil fuels.

Yes it does. We are not anywhere near closing any supply chain loops for any aspect of alternative and/or renewable energy. Ideally, the solar irradiance is adequate, but until we come up with some technological breakthroughs in optical and/or thermal energy conversion, we are many many orders of magnitude away from anything even remotely qualified as sustainable, and thus still utterly dependent upon fossil fuels.]]

No it doesn’t. We can add large amounts of wind, solar, geothermal and biomass to the mix now, and in 10-50 years can be running entirely off renewables. All that’s necessary is the political will to do it. The repeated statement that we need technological breakthroughs in order for renewable energy to be useful is a lie. Solar thermal plants work now, so do windmills. No new technology required.

We are utterly dependent on fossil fuels in 2007. We do not have to be utterly dependent on fossil fuels in 2027.

[[ Are you ready for sustenance farming in your back yard? Are you ready to dump that Victorian home and/or cheap stick McMansion, and build a superinsulated Earth home? How do you intend to pay your ta-xes and mort-gage? Are you familiar with the amount of capital investment required for a complete alternative solution? Multiply that by billions.]]

Straw man. All I said was that we should switch to renewable sources of energy. Living like a cave man as the solution to global warming is a lie thought up by people like Rush Limbaugh and repeated by thoughtless people like yourself.

[[Clearly this is one part of the solution, but I find it incredible that you can completely trivialize that from the comfort of your armchair. That’s not how science is done and technology is developed. If you’re going to talk, you need to walk. ]]

Jeez, Tom, please don’t let my old professors know I don’t know how science is done. They might take back my degree in physics.

[[I don’t know how you can look at the satellite record since 1998 and not conclude that the temperature has fallen.

“Statistically significant trend” is open for debate…but clearly satellite measured temperatures are lower than in 1998.]]

Not as of 2005-2006 they aren’t. Your information is obsolete.

re # 108, 110

The bet from 1998 was better discussed in #80, apparently by the guy who made the bet.

Clearly, the raw temp has fallen since 1998 (on sat data) but apparently not the trend as they defined it to be calculated.

Re #108:

Which year is “by 2010”? I’ll take you up on 2009 being lower than 1998. 2011 or 2012 — very likely not.

1998 was exceptional in a lot of ways. Let’s hope 2011 or 2012 aren’t equally exceptional.

Repost, hoping for a reply:

Re: #45 and the pull quote “a good fraction of it’s going to stay there 500 years”: One of the main points regarding AGW which I took from AIT and have relied on since that time is that CO2 is highly persistent in the atmosphere. It has seemed to me from the beginning that this is the crucial point, because the lag effect and the persistence equate to ‘built in’ warming far into the future.

However, there seems to be hot dispute over the residency time of CO2. I have seen estimates as low as 5 years.

My question is this: what research/reference material is available which justifies the more long-term predictions? I have searched for such corroboration and thus far have come up empty.

Walt Bennett

Harrisburg, P

We can add large amounts of wind, solar, geothermal and biomass to the mix now, and in 10-50 years can be running entirely off renewables.

Still having trouble with orders of magnitude, I see. Did you even bother to read the article I posted? Carbon and hydrocarbon combustion are integrated high temperature processes, with fertilizer and metals production thrown into the mix, the same fertilizers and metals that permit the production and transportation of food over great distances. Unless everyone is producing their own food in their back yard, renewing the soil every year from biomass, which we know now is physically impossible given the population of the Earth, then it simply won’t work. By not addressing the actual scales involved, you are entering the realm of irrationality. It’s not that it can’t work, it’s simply that it won’t work given the population of the Earth. An alternative energy solution given today’s technology would require drastic reductions in population.

Wind perhaps has the greatest potential from an energy cost standpoint, but solar has severe materials bottlenecks at this time. Do you know why?

Re #118 Since no-one else has replied I will give it a go.

It does not seem to be generally recognised that CO2 like H2O is a feedback. When temperatures rise the oceans give off more CO2, and when temperatures fall the cold oceans absorb more CO2. Thus the CO2 content of the atmosphere depends on sea surface temperatures, amongst other things.

So, although each molecule of CO2 that escapes from the oceans will, on average, be back in the ocean again in five years time, if the sea surface temperature rises the increase in the atmospheric CO2 will remain.

But just to be quite clear, the warming of the oceans is being caused by the increase in CO2 due to man’s activities. But that increase will be self sustaining. In fact it will soon get worse, because at present 40% of the increase is being dissolved in the oceans. Half of that CO2 is removed from the atmosphere by rain in the Arctic and buried deep in the ocean by the descending leg of the THC (the Conveyor ocean current.) When the Arctic sea ice disappears, the atmospheric CO2 will no longer be removed in the NH. Thus the 40% of man made CO2 which is currently being removed into the oceans will be reduced to the 20% which is being absorbed in the Weddell Sea.

Assuming the world does not get so hot that all vegetation is killed off, eventually the creation of peat bogs and the burial of trees will remove the excess CO2 from the atmosphere, but that will take perhaps thousands of years. Of course, if civilised man continues to rule the planet, no trees will be buried as they will be needed to fuel his extravagant life style.

RE # 113 Barton, you said,

[I didn’t say renewables could do it all this minute. What I said was that renewables could do it all, a point which I will continue to stand by despite your patronizing attitude.]

Barton, my attitude is not patronizing; it is critical of your refusal to back up any of your hand-waving claims that:

[renewables could do it all].

Where did you ever get that notion?

Will China, India, Brazil, Indonesia, EU, US, Canada, Mexico, Papua New Guinea, Egypt, Russia……..run their economies entirely on renewables? Of course not. No economy can except maybe the remarkable Amish people.

Read the business announcements of US, China and India energy growth reliant upon coal, coal, coal, coal. If you think you can change that scenario, do something more than make pronouncements.

You do not serve your position by arguing what no one else is proposing, i.e.,[renewables could do it all].

That is all I am going to contribute to this discussion with you. And I care just as deeply as you about saving our children from the future we are designing for them.

I don’t mean to sound sarcastic but aren’t the high end estimates, by definition, unrealistically high? Isn’t that the whole point of having such estimates? If it wasn’t unrealistically high it would be the best estimate (or at least closer to that), not the high end estimate. Aren’t worst-case scenarios supposed to be worse than you really think it is going to be? I may be missing something, but I find all this talk about the high end estimate being too high both obvious and trivial. Based simply on the name I would be surprised, and extremely worried, if they weren’t too high.

#118, Walt, there is a long explanation about how long CO2 mixing ratios persist in the atmosphere on Real Climate (use the search box), but the short answer is:

If you are asking how long on average a single particular molecule of CO2 persists in the atmosphere, five years is a good estimate,

If you are asking how long an increase in the total amount of CO2 in the atmosphere will persist, 500 years is a reasonable estimate (see the RC post for details). See also #120

Eli,

I am not following your Comment 99… “Chip is doing it again…when he neglects to mention…” I used precisely that Hansen (1988) quote in my original comment (#27). So what have I neglected to do? Your point is precisely my point, Scenario A was never thought to be correct. This is definitely true in the long run (as Hansen’s (1988) quote makes clear), and Gavin’s claim, based on the close match with Scenario B, that “Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get” indicates that scenario A was never thought to be correct in the short run either.

Obviously, we, the denizens of the world, will determine the future course of human emissions to the atmosphere as well as other perturbations to the earth. And our actions will ultimately determine which SRES scenario was closest to predicting the future. Reality, rather than idealism, will largely determine how fast fossil fuel emissions will decline… and reality, believe it or not, involves issues of greater personal urgency to most of the world’s ~6.5+ billion (and growing) people than matters of climate.

-Chip Knappenberger

to some degree, supported by the fossil fuels industry since 1992

(and, as is the case with virtually all of you, a beneficiary of the fossil fuels since birth)

[Response: Chip, you seem to insist that we break everything down into correct and incorrect projections. There is no such binary distinction. The reason why we have best case and worst case scenarios is not because they are likely, but because they are possible. The scenario generation process was designed to give possible futures and for modellers to ignore such possibilities is irresponsible. I point out again that these results do not span the whole space of possibilities – witness polar ozone depletion which was much worse and much more rapid than models predicted. Remember, there are always unknown unknowns! -gavin]

I have just recalculated the trendline in the RSS lower troposphere satellite data using the most recent April 2007 data.

Get this: the trend is 0.0001K per decade.

So I would say the bet (referred to above in #80) is not over yet.

Moreover, the lower troposphere temperature has declined by 0.723C from April 1998 (the peak of the El Nino event) to April 2007 (a mild La Nina event).

Re: #124 (John Wegner)

What’s the starting point of your analysis?

Gavin:

Thanks for the excellent post. We have gone back and forth some on this issue, and your analysis and interpretation is a real example of the intellectual integrity that we can expect from a scientist speaking in his area of technical expertise.

The first several paragraphs of your post go into the details on a question of fact: which projected Scenario ended up being closest to the actual measured forcings that occurred between Hansen’s prediction and today. I took Hansen’s word for it in his 2006 NAS paper that these were closest to Scenario B, but the depth of your presentation was very useful.

You then proceed to analyze the fit of model predictions vs. actual results through 2006. You note that the Scenario B forecast is a good fit to the observed outcomes. I think that there are two key points here:

1. You highlight the first point very clearly. Exactly as you say:

“But can we say that this proves the model is correct? Not quite. Look at the difference between Scenario B and C. Despite the large difference in forcings in the later years, the long term trend over that same period is similar. The implication is that over a short period, the weather noise can mask significant differences in the forced component.”

You then proceed to conduct a simple and important piece of sensitivity analysis, basically asking what is the maximum sensitivity that we could exclude as a possibility at the 95% significance level. I don’t see an explicit statement of the maximum value, but you say that a sensitivity of 1C would fall below it. In this sensitivity analysis you are explicit that you are “assuming the transient trend would scale linearly”. I presume that there is some physical basis for this assumption, but isn’t this a huge assumption in calculating the probability distribution for the actual sensitivity?

2. The second point is that the exact start and end dates that we pick for the evaluation of prediction vs. actual turn out to be critical. Suppose instead of evaluating this forecast with 1988 as the base through 2006 (i.e., 18 years from forecast), we have evaluated it through 2000 (i.e., 12 years from forecast). In that case (reading approximately from the chart published in Hansen’s paper), the Scenario B forecast would have been about 0.2C of warming and actual would have been indistinguishable from 0C warming. This would have implied massive % error. While I suppose it’s possible that a detailed analysis of the actual forcings through 2000 might have reconciled this finding, even if they did not I think we would both agree that a reasonable analyst would not have concluded in 2000 that we had just falsified the prediction. (This is one of the reasons that Crichton’s claim that Hansen was “off by 300%” or whatever was so outrageous.) All this is of course the flip-side of your appropriately modest claim that we have not yet proved the accuracy of the model – or more precisely, measured the fact of most interest: the sensitivity – at the 18-year point.

I think what this point highlights is that without an a priori belief about the relationship between time from prediction and an expected confidence interval for prediction accuracy, it’s very hard to know where the finish line is in this kind of an evaluation.

Gavin, once again thanks for taking the time to develop and post this material.

[Response: If you restricted the analysis to 1988 to 2000 (13 years instead of 23 years of 1984-2006), no trends are significant in the obs or scenario B (scenario A is and scenario C is marginally so), although all error bars encompass the longer term trends (as you would expect). Short term analyses are pretty much useless given the level of noise. The assumption that the transient sensitivity scales with the equilibrium sensitivity is a little more problematic, but it’s a reasonable rule of thumb. Actually though, there is slightly less variation in the transients than you would expect from the equilibrium results. -gavin]
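A rough way to see why short windows say so little: for evenly spaced annual data with independent noise, the standard error of a least-squares trend shrinks roughly as the record length to the power 3/2. The sketch below is illustrative only. It assumes white noise with a made-up standard deviation of 0.1 K (`SIGMA` is a hypothetical value, not taken from the observations or the model runs), and real temperature series are autocorrelated, which widens the error bars further.

```python
import math

def slope_standard_error(n_years, sigma):
    """Standard error of an OLS trend fitted to n_years annual values
    with independent noise of standard deviation sigma (K).
    Result is in K per year."""
    # Sum of squared deviations of t = 0..n-1 about its mean is n(n^2 - 1)/12.
    sxx = n_years * (n_years ** 2 - 1) / 12.0
    return sigma / math.sqrt(sxx)

SIGMA = 0.1  # hypothetical year-to-year noise in K, for illustration only

se_13 = slope_standard_error(13, SIGMA)  # 1988-2000
se_23 = slope_standard_error(23, SIGMA)  # 1984-2006

for label, se in (("13 years", se_13), ("23 years", se_23)):
    # Multiply by 10 to express the uncertainty in K per decade.
    print(label, "trend standard error:", round(se * 10, 4), "K/decade")
```

Under these assumptions the 13-year window has more than twice the trend uncertainty of the 23-year window, which is the sense in which a trend that is significant over the longer period can easily be insignificant over the shorter one.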

#124 Maybe NOAA needs to be corrected, as their RSS lower troposphere trend is 0.20 C/decade

http://lwf.ncdc.noaa.gov/oa/climate/research/2007/apr/global.html#Temp

#113 Barton, how unimaginable is it to have a cluster of 1 million wind turbines over an apt oceanic location? I wonder how much energy that would produce. Maybe we can produce more wind towers than cars for a few years?

By the way, the planetary lapse rate = -g/Cp is one of the most elegant equations in atmospheric physics. Do you know who first derived it?

RE # 124: how much urgency do you think the climate now represents for the people of Australia?

#125 Mr Wegner, if it is the mid-troposphere, consider UW-RSS as a more valid calculation. UW gets it more right (cheers to the guys and gals at the U of Washington). In no way would little old me have picked up the historical all-time 2005 warming signal in February-March 2005 with an amateur telescope and not powerful reliable weather satellites.

Well, Gavin,

The thing that got me commenting in this thread in the first place was your claim, in your original post, that a pretty good a priori forecast had been made with Scenario B. The reason, I guess that Scenario B is considered “the” forecast is that the other two scenarios were known at the time to be relatively unlikely, if not outright impossible. Thus, today, you point to Scenario B as the true “forecast”. I ask, then, if you were in the same position today, as Dr. Hansen was in 1988, which scenario would you point to as the “perhaps most plausible”? Instead of qualifying the SRES scenarios, the IPCC chose to present them all as equally likely. Based upon your subsequent comments, I think we agree that they are all not equally likely, and we know this a priori. Further, Dr. Hansen (and yourself and many others) do not like it when the results of Scenario A are presented against actual observations to claim that a gross error had been made. Why? Because Scenario A was never really considered by you all to be reflective of the way things would evolve. In the IPCC’s case, the results from the high-end scenarios are splashed all over the press indicating how bad things may possibly become. If you don’t like comparisons being made between observations and Scenario A (because it wasn’t considered to be very likely), then how do you feel about advertising outcomes produced with the high-end SRES scenarios (when they aren’t very likely either)?

Maybe your answer is that all SRES scenarios should be considered Scenario Bs, and that the IPCC didn’t include the next to impossible Scenarios A and C. I could accept this as an answer. But I think that you think that the set of SRES scenarios that could really be considered Scenario Bs is less than the total number.

Thanks for the fair treatment and consideration of my comments.

-Chip Knappenberger

to some degree, supported by the fossil fuels industry since 1992

[Response: There are two separate points here. First, scenario A did not come to pass, therefore comparing scenario A model results to real observations is fundamentally incorrect – regardless of how likely anyone thought it was in 1988. Secondly, Jim said B was ‘most plausible’, not that A was impossible. We are climate scientists not crystal ball gazers. So can I rule out any of the BAU scenarios? No. I can’t. If people who’ve looked into this more closely than I for many years think these things are possible, I would be foolish to second guess them. More to the point, all the scenarios assume that no action is taken to reduce emissions to avoid climate change. However, actions are already being taken to do so, and further actions to avoid the worst cases will continue. If that occurs, does that mean it would have been foolish to look at BAU? Not at all. It is often by estimating what would happen in absence of an action that an action is taken. So if in 100 years, emissions tracked the ‘Alternative Scenario’ rather than A1FI would that mean that the A1FI scenario was pointless to run? Again no. The only test is whether it is conceivable that the planet would have followed A1FI – and it was (is). -gavin]

To tamino #126.

Sorry, I should have included the starting date – January 1998. My comments were specifically about the bet referred to in #80 – that there would be a statistically significant downward trend in lower troposphere temperatures (from RSS or UAH) from January 1998 to December 2007. People seem to give the RSS group more credibility even though the UAH temps are virtually identical.

If the current temperature from April 2007 remains in place for the remainder of the year, there will be a downward trend in temperatures of 0.0003C per decade in regards to the bet although that is unlikely to be significant.

What is significant, however, is the amount of heat that the 1997-98 El Nino dumped into the atmosphere and the fact that temperatures are now 0.7C lower than the peak of 1998. The current La Nina pattern may, in fact, result in a continuing decline in temperatures.

I believe this also has bearing on the accuracy of Hansen’s model predictions.

Thanks for the post.

Eli,

Thanks for the tip. I am anxious to read the link, but I cannot find it no matter how I try. Might you help me locate it?

Alistair, thank you for that boilerplate, which makes a lot of sense. Do you have any references you can share?

Re: #125, #131 (John Wegner)

First of all, your numbers are wrong. The trend from Jan. 1998 to Apr. 2007 is not 0.0001 K/decade, it’s 0.01 +/- 0.34 K/decade. I’m guessing that instead of multiplying the annual rate by 10, you divided by 10.

Second, you’ve demonstrated an interesting fact, namely: EVEN IF you do the most extreme cherry-picking possible (and Jan. 1998 is the most extreme choice), you *still* get a positive trend rate, albeit not statistically significant.

Third, the trend for the entire RSS MSU data set is 0.18 +/- 0.06 K/decade. Taking the data ONLY from 1998 to Apr. 2007, the range of values is -0.33 to +0.35 K/decade, which range includes the whole-dataset estimate 0.18 K/decade. So, the data from 1998 to 2007 provide no statistical evidence that the trend is any different from that of the entire dataset.

What you’ve essentially shown is that for the MSU data, even cherry-picking will not produce your desired result.
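The interval argument in the comment above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the figures quoted in the comment (0.01 +/- 0.34 K/decade for Jan. 1998 to Apr. 2007, and 0.18 K/decade for the whole record). The helper name `interval` is introduced here for illustration.

```python
def interval(center, half_width):
    """Return the (low, high) bounds of center +/- half_width."""
    return (center - half_width, center + half_width)

# Figures as quoted in the comment, in K/decade.
short_low, short_high = interval(0.01, 0.34)   # Jan. 1998 to Apr. 2007
full_record_trend = 0.18                       # entire RSS MSU data set

# The short-window data are statistically consistent with the long-term
# trend if the long-term estimate falls inside the short-window interval.
consistent_with_full_record = short_low <= full_record_trend <= short_high
# The same interval also contains zero, so the short window alone
# cannot show a trend of either sign.
consistent_with_zero = short_low <= 0.0 <= short_high

print("short-window range:", round(short_low, 2), "to", round(short_high, 2))
print("contains full-record trend:", consistent_with_full_record)
print("contains zero:", consistent_with_zero)
```

Both checks come out true for the quoted numbers: the 1998 to 2007 window is too short to distinguish between no trend and the full-record trend.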

Re 80 (and further on)

Chip

I have seen the global emission numbers from Mike Raupach (CSIRO and chair of the Global Carbon Project). They will be published soon in PNAS.

2002/2003 and 2003/2004 have shown an increase of around 5%, 2004/2005 of around 3%.

I fear that Hansen’s emission scenario B trend has turned out to be the most adequate only because of the breakdown of the Soviet empire in the 1990s. If you look at what has happened during the last few years in China and India, how tough negotiations on emission caps are, and how much coal there is in China, etc., I do not know how you can think that the high emission scenarios are so unlikely. Of course we hope they will not happen, but at the moment I cannot see any reason to exclude them.

Walt, try this: How long will global warming last by David Archer. I was hurried at the time I wrote, even Rabetts have day jobs.

re. 43 [Also, have any academic studies been done that show similar conclusions about other contrarians?]

Are you trying to deceive the public? Who are you working for?

I don’t care a whit about academic studies…and neither should you or the public …unless you have a political agenda.

“Academic study” means that your facts are not necessarily checked to see if you are lying (such as “academic studies” that show that tobacco smoking is not harmful to your health…or that global warming is not happening…and there are plenty of examples of both that are just lies).

Make it a peer-review study in an established peer-review journal…that stands up under scrutiny and then you can start paying attention.

I can’t believe that you said that.

I think the climate denialists are further refining our models for disingenuousness every day.

Climate science says, “this is a range given our inability to completely predict massive human and institutional actions and the availability and development of technology, so here are some scenarios – if the conditions are X0, we see X1 as a likely outcome. If they’re Y0, we see Y1 as a likely outcome. If they’re Z0, we see Z1 as a likely outcome. We see X0, Y0 and Z0 as being in the range of likely outcomes.” Not only is the true picture closest to Y0, the midrange estimate, and the one the representative of climate science presented as likeliest, but the data follows the model prediction if Y0 is correct very closely.

The denialists come back with the same tired dishonest argument about X1-Z1 being imprecise and X1 being wrong.

At some point you have to tell them they need new squid ink because the ocean of plausibility has already been saturated with their old formula and doesn’t have the water-clouding power it used to have.

Alastair:

Apparently, we may already be there, at least in the Southern Ocean:

As someone has completely misunderstood my previous post I’ll re-word it slightly, and I hope I’ll get a sensible response this time:

Re. #18

Has a peer reviewed study been done that proves that he is? (If not, where does your factor of two figure come from?)

Also, have any peer reviewed studies been done that show similar conclusions about other contrarians?

And have any peer reviewed studies been done that show what proportion of press/media coverage of global warming is sympathetic to the argument that it is anthropogenic and serious, what proportion is antipathetic to that argument, and what proportion is neutral; and how the proportions have altered over recent years?

Dave

See also:

https://www.realclimate.org/index.php?p=160

7 Jun 2005

How much of the recent CO2 increase is due to human activities?

Contributed by Corinne Le Quéré, University of East Anglia.

and

http://www.agu.org/pubs/crossref/2006/2005GB002511.shtml

and

http://lgmacweb.env.uea.ac.uk/lequere/publi/Le_Quere_and_Metzl_Scope_2004.pdf

And much else. I hope Dr. Le Quéré will say more here soon. I’ve really been hoping to hear from the biologists more.

Hi Walt,

Re #135 The general opinion amongst most climate scientists and oceanographers is that a slow down in the THC will cause a cooling based on what they think happened at the start of the Younger Dryas stadial (mini ice age.) However, the oceanographer who pioneered that belief is now having second thoughts. See Was the Younger Dryas Triggered by a Flood? by Wallace S. Broecker in Science 26 May 2006.

Meanwhile in Germany they have been investigating CO2 and ocean. See Biopumps Impact the Climate. They published a paper in Science today described here Antarctic Oceans Absorbing Less CO2, Experts Say and here http://www.nature.com/news/2007/070514/full/070514-18.html

The paper shows that I am wrong to think that it is only in the Arctic where there is a danger of the CO2 failing to be sequestered. Also their figures for the proportions of CO2 will be more accurate than those quoted by me which were very rough but of the right order.

I think it is obvious that the situation is pretty scary. What is worse is that scientists who run RealClimate don’t seem to be aware of the danger. Perhaps it is not their speciality and they prefer not to comment.

HTH, Alastair.

Re #108:

Which year is “by 2010”? I’ll take you up on 2009 being lower than 1998. 2011 or 2012 — very likely not.

1998 was exceptional in a lot of ways. Let’s hope 2011 or 2012 aren’t equally exceptional.

To tamino #135 – I’m guessing you rounded the figures from RSS while I used all the data and a statistical package to derive the trend. Try it again.

Re: #142 (John Wegner)

I did no rounding. I used the monthly means downloaded from the RSS website. Using a program I developed myself, which has been in use among astrophysicists for over a decade, I get a trend from Jan. 1998 to Apr. 2007 of 0.00122 K/yr, which is 0.0122 K/decade. To test the remarkably unlikely possibility that the program is wrong, I also loaded the data into Excel in order to compute the trend. I got exactly the same result. Perhaps you should try it again.

And in fact, it doesn’t really matter whether it’s 0.0122 K/decade or 0.0001 K/decade, it’s still greater than zero, and it’s still true that even cherry-picking can’t produce the result you want.

And of course, it’s still +/- 0.34 K/decade.
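The unit conversion at issue in this exchange is easy to get wrong, so here is a minimal sketch of a least-squares trend fit and the per-year to per-decade conversion. It does not use the RSS data: the series is synthetic and noiseless, built with the 0.00122 K/yr slope quoted above purely as an input, and the function name `ols_slope` is introduced here for illustration.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx

# Jan. 1998 through Apr. 2007 is 9 full years plus 4 months = 112 months.
N_MONTHS = 112
time_in_years = [1998.0 + m / 12.0 for m in range(N_MONTHS)]

# Synthetic, noiseless anomalies with a known slope (K per year).
ASSUMED_SLOPE = 0.00122
anomalies = [ASSUMED_SLOPE * (t - 1998.0) for t in time_in_years]

slope_per_year = ols_slope(time_in_years, anomalies)
slope_per_decade = slope_per_year * 10.0    # correct: ten years per decade
mistaken_value = slope_per_year / 10.0      # the divide-instead-of-multiply slip

print("K/yr:", round(slope_per_year, 5))
print("K/decade:", round(slope_per_decade, 4))
print("divided by 10 instead:", round(mistaken_value, 6))
```

Because time is expressed in years, the fitted slope is in K per year and must be multiplied by 10 to give K per decade. Dividing instead gives a value about a hundred times too small.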

Re. 105 Thomas Lee Elifritz ,

Thanks for the reference Thomas. Interestingly the paper discusses using superconducting nitrogen (N2) liquid pipelines as an efficient transmission network. A recent Scientific American article proposed that superconducting hydrogen (H2) be used instead – eliminating the duplication of “power lines” and hydrogen pipelines.

Bruce

Re #143: About the southern ocean absorbing less carbon dioxide, the Nature paper said:

My thoughts on this are:

1) The cause is said to be ozone depletion, only indirectly linked to global warming.

2) Are wind speeds supposed to increase over most of the oceans? I think we are talking about a local effect here that should not be extrapolated.

3) Why does ocean churn reduce CO2 uptake? I thought CO2 absorption was slowed down by the lack of mixing with lower ocean layers.

Later they say “An increase in global temperature is predicted to worsen the effect, since warmer waters hold less gas.” This makes sense, but the question is by how much. This paper does not address that issue.

Alastair McDonald (#143) wrote:

The paper you referred to is:

Saturation of the Southern ocean CO2 sink due to recent climate change

Corinne Le Quere, et al.

Not available unless you have a subscription, but there is a little introductory article at:

Polar ocean is sucking up less carbon dioxide

Windy waters may mean less greenhouse gas is stored at sea.

Michael Hopkin

Published online: 17 May 2007

http://www.nature.com/news/2007/070514/full/070514-18.html

The mechanism is a little strange: not the reduced mixing, but increased mixing due to the winds resulting in carbon being brought up from below. A real step in the direction of the ocean becoming an emitter rather than a sink.

In any case, I know I have brought this up before, but another carbon cycle feedback is kicking in: heat stress is reducing the ability of plants to act as carbon sinks, at least during the warmer, drier years. Plenty of positive feedback here, too, although not quite as significant in terms of the greenhouse effect. Higher temperatures mean increased evaporation from the soil. Evaporation from the soil means that plants will have less water. Plant die-off means carbon dioxide is released and more soil is exposed, increasing evaporation, and thus drying out the soil for neighboring plants. Then there are the fires which release a great deal of carbon dioxide. I remember reading a paper recently (I could look it up) which demonstrated that the fires are typically associated with human settlement, though, at least in the Amazon river valley – which makes this process more controllable.

Impact of terrestrial biosphere carbon exchanges on the anomalous CO2 increase in 2002–2003

W. Knorr

GEOPHYSICAL RESEARCH LETTERS, VOL. 34, L09703, doi:10.1029/2006GL029019, 2007

Now of course increased evaporation also means increased precipitation, but this tends to fall prematurely over the source of the evaporation – the oceans. And even when it does happen over land, it tends to be more sporadic. Floods. Not that conducive for growing crops.

Then we have the decreased albedo of ice during the spring which implies greater absorption of sunlight, then we have the ice quakes which are becoming more severe in Greenland, the rapidly moving subglacial lakes in the Antarctic.

It looks like some long-anticipated feedback loops are getting started – and some unanticipated ones as well.

I suspect the latter: they do the science and leave the panic to others. Probably works best that way – they can do what they are good at, and we can take care of the other part. A neat division of labor. In any case, this will take time, just probably not as much as we thought. But if we don’t light the fuse to something other than global warming, I strongly suspect that people will still be here a million years from now, even if they are only hanging on by their finger nails.

Hi Walt (re #72, 118 and others).

I must admit I share your frustrations regarding an explanation of how long CO2 stays in the atmosphere. I’ve made a little progress by myself which I’ll attempt to share.

There are 2 separate issues in this problem: the CO2 residence time and the CO2 response function.

If you look at Figure 1, page 5 of this document:

http://www.royalsoc.ac.uk/displaypagedoc.asp?id=13539

You will see the residence time of carbon (CO2) in the atmosphere is about 3 years. Your typical CO2 molecule will spend 3 years in the atmosphere before being taken up by the ocean or terrestrial biosphere. But you will also notice CO2 has a residence time of 6 years in the surface ocean and 5 years in the living terrestrial biosphere. The fluxes to and from the atmosphere are huge and approximately balance and this is why the residence time of CO2 is so short. This approximate balance of fluxes in both directions is a reflection of the equilibrium exchange of CO2 between the atmosphere and the ocean/terrestrial biosphere.

However, the equilibrium exchange does not help us answer our question. What happens when we put more than the equilibrium CO2 in the atmosphere? This is dealt with by the CO2 response function. A lot of work on this has been done by Fortunat Joos et al in Bern, which I don’t pretend to understand in detail at this stage:

http://www.climate.unibe.ch/~joos/

However, if you go to footnote (a), page 34 at the bottom of Table TS.2 in the Technical Summary of the IPCC WG1 AR4 report there is an equation which summarises the Bern CO2 response function:

http://ipcc-wg1.ucar.edu/wg1/Report/AR4WG1_TS.pdf

I’ll try and reproduce it here:

“The CO2 response function used in this report is based on the revised version of the Bern Carbon cycle model used in Chapter 10 of this report [which did not help me understand the function – Bruce]

(Bern2.5CC; Joos et al. 2001) using a background CO2 concentration value of 378 ppm. The decay of a pulse of CO2 with time t is given by

a0 + a1*exp(-t/t1) + a2*exp(-t/t2) + a3*exp(-t/t3)

where a0 = 0.217, a1 = 0.259, a2 = 0.338, a3 = 0.186, t1 = 172.9 years, t2 = 18.51 years, and t3 = 1.186 years, for t < 1,000 years.”

Now I would intuitively have thought that the decay function would be related to the size of the "pulse", but a simple way to understand this function is to multiply it by 100 ppm and use a spreadsheet to calculate the amount left over 1000 years. I calculated 57 years to reach 50 ppm. The function does not decay to zero, but to 21.7 ppm - so calculating a mean is meaningless - although it is clear from my reading that slower processes which operate over thousands of years have been ignored in the equation.

This is a compartmental model with three decay processes that operate over different time scales. You can see this more clearly by plotting the response function versus the log of the time scale. It has 3 sloped straight lines joined by curves before becoming flat around 1000 years (I wish I could show this here). The initial decay is dominated by 0.186*exp(-t/1.186), the second by 0.338*exp(-t/18.51), and the last by 0.259*exp(-t/172.9). These times (t1, t2 and t3) are sometimes called the e-folding times, the time to decay to 1/e of the original value (not quite the half-life).

Physically it means that the initial fast decay process requires a large driving force (departure from the equilibrium CO2). Then it peters out and each of the two slower processes take over in turn. [This is why I don't understand why the size of the pulse, which is the concentration gradient - the driving force - is not specified. Although it may make sense if the compartments are arranged consecutively. I have to think about it. I'm struggling to find an explanation of the model.]

I hope this helps!
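For anyone who would rather not set up a spreadsheet, the response function quoted above can be evaluated in a few lines. This is a minimal sketch using only the coefficients and time constants given in the quoted footnote. The function names, the 100 ppm pulse, and the bisection search are choices made here for illustration, and the formula is only applied within its stated range of t < 1,000 years. Treating the result as a fraction that scales with the pulse size follows the "multiply it by 100 ppm" approach described above.

```python
import math

# Coefficients and e-folding times (years) as quoted from the footnote.
A0 = 0.217
COEFFS = (0.259, 0.338, 0.186)
TAUS = (172.9, 18.51, 1.186)

def fraction_remaining(t):
    """Fraction of a CO2 pulse still airborne t years after release."""
    return A0 + sum(a * math.exp(-t / tau) for a, tau in zip(COEFFS, TAUS))

def remaining_ppm(pulse_ppm, t):
    """Scale the response function by the pulse size (in ppm)."""
    return pulse_ppm * fraction_remaining(t)

def years_to_reach(target_fraction, t_max=1000.0):
    """Time at which the fraction first drops to target_fraction, found by
    bisection. Returns None if that level is not reached by t_max."""
    if target_fraction >= fraction_remaining(0.0):
        return 0.0
    if fraction_remaining(t_max) > target_fraction:
        return None
    low, high = 0.0, t_max
    for _ in range(60):  # the function decreases monotonically in t
        mid = 0.5 * (low + high)
        if fraction_remaining(mid) > target_fraction:
            low = mid
        else:
            high = mid
    return high

PULSE_PPM = 100.0  # illustrative pulse size
for t in (0, 1, 10, 100, 1000):
    print(t, "years:", round(remaining_ppm(PULSE_PPM, t), 1), "ppm")
print("years to fall to half the pulse:", years_to_reach(0.5))
```

Because the constant term a0 never decays, `years_to_reach` returns `None` for any target below about 21.8% of the pulse within the 1,000-year range, which is the plateau described above.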