At Jim Hansen’s now famous congressional testimony given in the hot summer of 1988, he showed GISS model projections of continued global warming assuming further increases in human produced greenhouse gases. This was one of the earliest transient climate model experiments and so rightly gets a fair bit of attention when the reliability of model projections is discussed. There have however been an awful lot of mis-statements over the years – some based on pure dishonesty, some based on simple confusion. Hansen himself (who is, for full disclosure, my boss) revisited those simulations in a paper last year, where he showed a rather impressive match between the recently observed data and the model projections. But how impressive is this really? And what can be concluded from the subsequent years of observations?

In the original 1988 paper, three different scenarios were used: A, B, and C. They consisted of hypothesised future concentrations of the main greenhouse gases – CO2, CH4, CFCs etc. together with a few scattered volcanic eruptions. The details varied for each scenario, but the net effect of all the changes was that Scenario A assumed exponential growth in forcings, Scenario B was roughly a linear increase in forcings, and Scenario C was similar to B, but had close to constant forcings from 2000 onwards. Scenarios B and C had an ‘El Chichon’ sized volcanic eruption in 1995. Essentially, a high, middle and low estimate were chosen to bracket the set of possibilities. Hansen specifically stated that he thought the middle scenario (B) the “most plausible”.

These experiments were started from a control run with 1959 conditions and used observed greenhouse gas forcings up until 1984, and projections subsequently (NB. Scenario A had a slightly larger ‘observed’ forcing change to account for a small uncertainty in the minor CFCs). It should also be noted that these experiments were single realisations. Nowadays we would use an ensemble of runs with slightly perturbed initial conditions (usually a different ocean state) in order to average over ‘weather noise’ and extract the ‘forced’ signal. In the absence of an ensemble, this forced signal will be clearest in the long term trend.

How can we tell how successful the projections were?

Firstly, since the projected forcings started in 1984, that should be the starting year for any analysis, giving us just over two decades of comparison with the real world. The delay between the projections and the publication is a reflection of the time needed to gather the necessary data, churn through the model experiments and get results ready for publication. If the analysis uses earlier data, i.e. from 1959, it will be affected by the ‘cold start’ problem, i.e. the model is starting with a radiative balance that the real world was not in. After a decade or so that is less important. Secondly, we need to address two questions – how accurate were the scenarios and how accurate were the modelled impacts.

So which forcing scenario came closest to the real world? Given that we’re mainly looking at the global mean surface temperature anomaly, the most appropriate comparison is for the net forcings for each scenario. This can be compared with the net forcings that we currently use in our 20th Century simulations based on the best estimates and observations of what actually happened (through to 2003). There is a minor technical detail which has to do with the ‘efficacies’ of various forcings – our current forcing estimates are weighted by the efficacies calculated in the GCM and reported here. These weight CH4, N2O and CFCs a little higher (factors of 1.1, 1.04 and 1.32, respectively) than the raw IPCC (2001) estimate would give.
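As a rough sketch of what efficacy weighting means in practice, the snippet below multiplies each gas's raw forcing by the factor quoted above and sums the results. The raw forcing values are hypothetical placeholders chosen only for illustration, and the CO2 factor of 1.0 is an assumption based on efficacy being defined relative to CO2; none of these numbers are taken from the GISS forcing files.

```python
# Efficacy factors quoted in the post; CO2 = 1.0 is assumed as the reference.
EFFICACY = {"CO2": 1.0, "CH4": 1.1, "N2O": 1.04, "CFCs": 1.32}


def effective_forcing(raw_forcings):
    """Sum per-gas forcings (W/m2), each weighted by its efficacy factor."""
    return sum(EFFICACY[gas] * value for gas, value in raw_forcings.items())


# Hypothetical raw forcing changes in W/m2, for illustration only.
example_raw = {"CO2": 0.60, "CH4": 0.10, "N2O": 0.05, "CFCs": 0.10}

raw_total = sum(example_raw.values())
eff_total = effective_forcing(example_raw)
print(f"raw: {raw_total:.3f} W/m2, efficacy-weighted: {eff_total:.3f} W/m2")
```

Because every non-CO2 factor is above 1, the weighted total is always a little larger than the raw sum, which is the direction of the adjustment described above.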

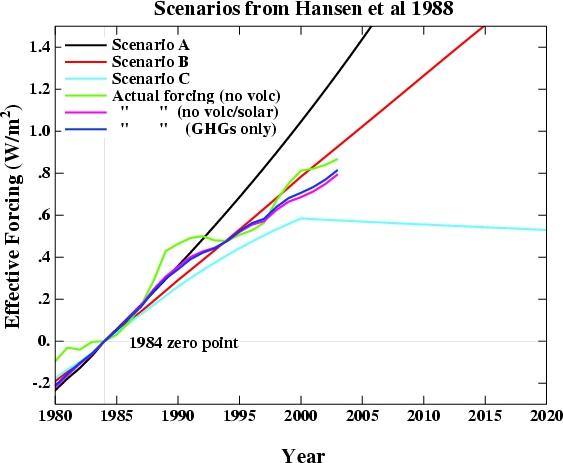

The results are shown in the figure. I have deliberately not included the volcanic forcing in either the observed or projected values since that is a random element – scenarios B and C didn’t do badly since Pinatubo went off in 1991, rather than the assumed 1995 – but getting volcanic eruptions right is not the main point here. I show three variations of the ‘observed’ forcings – the first which includes all the forcings (except volcanic) i.e. including solar, aerosol effects, ozone and the like, many aspects of which were not as clearly understood in 1984. For comparison, I also show the forcings without solar effects (to demonstrate the relatively unimportant role solar plays on these timescales), and one which just includes the forcing from the well-mixed greenhouse gases. The last is probably the best one to compare to the scenarios, since they only consisted of projections of the WM-GHGs. All of the forcing data has been offset to have a 1984 start point.

Regardless of which variation one chooses, the scenario closest to the observations is clearly Scenario B. The difference in scenario B compared to any of the variations is around 0.1 W/m2 – around a 10% overestimate (compared to > 50% overestimate for scenario A, and a > 25% underestimate for scenario C). The overestimate in B compared to the best estimate of the total forcings is more like 5%. Given the uncertainties in the observed forcings, this is about as good as can be reasonably expected. As an aside, the match without including the efficacy factors is even better.

What about the modelled impacts?

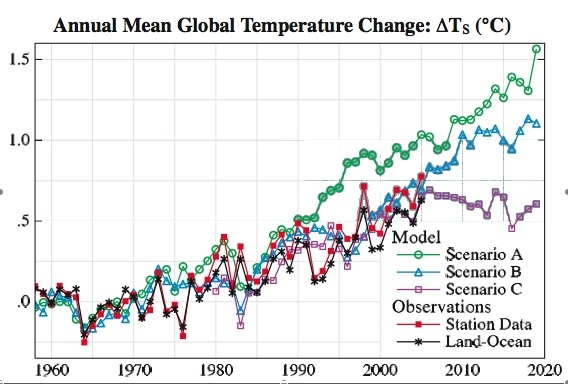

Most of the focus has been on the global mean temperature trend in the models and observations (it would certainly be worthwhile to look at some more subtle metrics – rainfall, latitudinal temperature gradients, Hadley circulation etc. but that’s beyond the scope of this post). However, there are a number of subtleties here as well. Firstly, what is the best estimate of the global mean surface air temperature anomaly? GISS produces two estimates – the met station index (which does not cover a lot of the oceans), and a land-ocean index (which uses satellite ocean temperature changes in addition to the met stations). The former is likely to overestimate the true global surface air temperature trend (since the oceans do not warm as fast as the land), while the latter may underestimate the true trend, since the air temperature over the ocean is predicted to rise at a slightly higher rate than the ocean temperature. In Hansen’s 2006 paper, he uses both and suggests the true answer lies in between. For our purposes, you will see it doesn’t matter much.

As mentioned above, with a single realisation, there is going to be an amount of weather noise that has nothing to do with the forcings. In these simulations, this noise component has a standard deviation of around 0.1 deg C in the annual mean. That is, if the models had been run using a slightly different initial condition so that the weather was different, the difference in the two runs’ mean temperature in any one year would have a standard deviation of about 0.14 deg C, but the long term trends would be similar. Thus, comparing specific years is very prone to differences due to the noise, while looking at the trends is more robust.
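The step from 0.1 to 0.14 deg C follows from the fact that the variances of two independent runs add, so the difference has a standard deviation of √2 × 0.1. A minimal check, assuming the noise in each run is independent and Gaussian (an assumption of this sketch, not something stated about the model):

```python
import math
import random

SIGMA_ANNUAL = 0.1  # deg C, annual-mean noise standard deviation quoted above

# Analytic result: variances of independent runs add, so sigma scales by sqrt(2).
sigma_diff = math.sqrt(2) * SIGMA_ANNUAL

# Monte Carlo check using Gaussian noise (assumed for illustration).
rng = random.Random(0)
diffs = [
    rng.gauss(0, SIGMA_ANNUAL) - rng.gauss(0, SIGMA_ANNUAL)
    for _ in range(100_000)
]
mean = sum(diffs) / len(diffs)
sampled = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (len(diffs) - 1))

print(f"analytic: {sigma_diff:.3f} deg C, sampled: {sampled:.3f} deg C")
```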

From 1984 to 2006, the trends in the two observational datasets are 0.24 +/- 0.07 and 0.21 +/- 0.06 deg C/decade, where the error bars (2σ) are derived from the linear fit. The ‘true’ error bars should be slightly larger given the uncertainty in the annual estimates themselves. For the model simulations, the trends are for Scenario A: 0.39 +/- 0.05 deg C/decade, Scenario B: 0.24 +/- 0.06 deg C/decade and Scenario C: 0.24 +/- 0.05 deg C/decade.
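For readers who want to reproduce this kind of number, here is a minimal sketch of an ordinary least-squares trend with a 2σ error bar taken from the fit. The temperature series is synthetic (a 0.02 deg C/yr trend plus an alternating ±0.1 deg C wiggle), standing in for the real annual anomalies, and the function name is made up for this example. It also treats the residuals as uncorrelated, which is a simplification.

```python
import math


def trend_with_2sigma(years, temps):
    """Return (trend, 2-sigma error) in deg C/decade from an OLS linear fit."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Residual variance uses n - 2 degrees of freedom (slope and intercept).
    residual_ss = sum(
        (y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps)
    )
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    return 10 * slope, 10 * 2 * std_err


# Synthetic stand-in for annual anomalies, 1984 to 2006 inclusive.
years = list(range(1984, 2007))
temps = [0.02 * (y - 1984) + (0.1 if y % 2 else -0.1) for y in years]

trend, err = trend_with_2sigma(years, temps)
print(f"trend: {trend:.2f} +/- {err:.2f} deg C/decade")
```

With only 23 annual points and noise of about 0.1 deg C, the fit alone gives an error bar of several hundredths of a degree per decade, which is the same order as the values quoted above.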

The bottom line? Scenario B is pretty close and certainly well within the error estimates of the real world changes. And if you factor in the 5 to 10% overestimate of the forcings in a simple way, Scenario B would be right in the middle of the observed trends. It is certainly close enough to provide confidence that the model is capable of matching the global mean temperature rise!

But can we say that this proves the model is correct? Not quite. Look at the difference between Scenario B and C. Despite the large difference in forcings in the later years, the long term trend over that same period is similar. The implication is that over a short period, the weather noise can mask significant differences in the forced component. This version of the model had a climate sensitivity of around 4 deg C for a doubling of CO2. This is a little higher than what would be our best guess (~3 deg C) based on observations, but is within the standard range (2 to 4.5 deg C). Is this 20 year trend sufficient to determine whether the model sensitivity was too high? No. Given the noise level, a trend 75% as large would still be within the error bars of the observation (i.e. 0.18+/-0.05), assuming the transient trend would scale linearly. Maybe with another 10 years of data, this distinction will be possible. However, a model with a very low sensitivity, say 1 deg C, would have fallen well below the observed trends.
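The arithmetic behind that argument can be sketched as follows. It takes the Scenario B trend and the two observed trends quoted earlier, and assumes (as the text does, with the same caveat) that the transient trend scales linearly with sensitivity. The function names are hypothetical and exist only for this illustration.

```python
# Observed trends and 2-sigma error bars (deg C/decade), as quoted in the post.
OBSERVED_TRENDS = [(0.24, 0.07), (0.21, 0.06)]
MODEL_B_TREND = 0.24      # deg C/decade, Scenario B
MODEL_SENSITIVITY = 4.0   # deg C per CO2 doubling in this model version


def scaled_trend(sensitivity):
    """Trend a model of the given sensitivity would show, assuming linear scaling."""
    return MODEL_B_TREND * sensitivity / MODEL_SENSITIVITY


def within_observations(trend):
    """True if the trend lies inside the error bars of both observed datasets."""
    return all(c - e <= trend <= c + e for c, e in OBSERVED_TRENDS)


for sensitivity in (4.0, 3.0, 1.0):
    trend = scaled_trend(sensitivity)
    verdict = "within" if within_observations(trend) else "outside"
    print(f"{sensitivity:.0f} deg C: {trend:.2f} deg C/decade, {verdict} the observed range")
```

Under these assumptions the 4 and 3 deg C cases cannot be told apart from the observations, while the 1 deg C case falls below both error ranges.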

Hansen stated that this comparison was not sufficient for a ‘precise assessment’ of the model simulations and he is of course correct. However, that does not imply that no assessment can be made, or that stated errors in the projections (themselves erroneous) of 100 to 400% can’t be challenged. My assessment is that the model results were as consistent with the real world over this period as could possibly be expected and are therefore a useful demonstration of the model’s consistency with the real world. Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.

Note: The simulated temperatures, scenarios, effective forcing and actual forcing (up to 2003) can be downloaded. The files (all plain text) should be self-explicable.

>NOAA

Eh? Look at 1990-2000 on the scenarios and on the NOAA CO2 graph.

>Have the forcings from 1959 to present been growing exponentially or linearly?

The answer appears to be “No.”

Re #49 the normal bell curve can look exponential between -2 and -1 standard deviations, so extrapolate with care. Coal mining and burning will stop one day due to the quality of the reserves, not the size ie when there is no longer a $ profit or energy gain.

Randy, re comment No. 46.

Your response sounds like an excuse for inactivity. Inactivity means BaU which, in the long term, spells possible catastrophe. It is possible to have a civilized lifestyle with lower CO2 emissions. Per capita, the US emits the most CO2 of anyone and it wouldn’t require too much expenditure or effort to bring it down. Most West European countries have half the per capita emissions of the US and Sweden manages with a quarter. No one looking at Sweden would conclude their lifestyle is inferior to yours. The world population may well expand to 9bn, but most of these extra people will emit a fraction of the greenhouse gases than does a citizen of the USA. Your population may be 300 million but, if you compare your emissions with those of, say China, your effective population is roughly the size of theirs. This isn’t an anti-US rant: we all have to reduce our emissions. Doing nothing is not an option.

#4 Peak coal that soon? I’ve heard that a single state in the US has more energy in coal than the oil energy in Saudi.

As for peak oil that’s a tricky one. Might be sooner than 2025. Have a look at the Tables in this link. Good old Exxon claims “no sign of peaking” bless them. And OPEC “deny peak oil theory” apparently!

http://www.worldoil.com/Magazine/MAGAZINE_DETAIL.asp?ART_ID=3163&MONTH_YEAR=Apr-2007

Re 27

Chip,

During the last few years CO2 emissions have increased at a rate or even faster than the worst SRES scenarios (e.g. A1FI). So I am not so sure that these scenarios are so unrealistic. We just can hope that the rates since 2002 are noise and not a trend…

Re 49.

The graph you link to shows NET TOTAL CO2 in the atmosphere. This is not the same thing as the emission rate referred to in the different scenarios (sort of like the difference between a curve and its derivative).

Re #24 Sean O,

“From a scientific POV, this may be acceptable but politicians are using this kind of data to create legislation that will cost billions and likely cost lives in hopes of saving lives.”

No one with any credibility would do a risk analysis that ONLY includes ONE side of the equation as well as EXAGGERATES the costs of action. The Stern report is one attempt to balance the equation. The expected cost of doing nothing far exceeds the cost of taking action.

Re #4, #10, #12, #16 etc.

My problem with Peak Oil is that once it is upon us it will trigger a desperate search for alternatives that will include making gasoline from coal, tar sands and oil shales (among others). These are much more CO2 intensive ways to power vehicles than oil, and would potentially accelerate global warming.

Since Chip is here, it would be remiss to fail to mention his own prediction, made (jointly with Pat Michaels) as recently as 1998:

Of course, Gavin is too much of a gentleman to bring it up…but I’m not :-)

#57

” trigger a desperate search for alternatives”

Funnily enough that very same issue of World Oil has a very good editorial on alternatives. Looks like it needn’t be that desperate if the concept of extractus digitus grabs the politicians.

http://www.worldoil.com/Magazine/MAGAZINE_DETAIL.asp?ART_ID=3170&MONTH_YEAR=Apr-2007

“I am struck by the lack of fundamental breakthroughs required for an abundant, clean energy future, whether in electricity generation from wind, coal (IGCC), ocean thermal, ocean wave, ocean tide, solar, nuclear, or liquids from coal-to-liquids, gas-to-liquids, biofuels, bio-engineered fuels, and so on.”

#48

Eli,

“Thomas Knutson’s reply”

I don’t think the link works so could you repost that part for us ta?

I am not a trained climatologist or scientist to comment on the issue of global warming, but I do have some very serious thoughts on the matter:

– Every human act that uses external energy to perform contributes to global warming, however large or minuscule.

– Even the act of growing and consuming food is not carbon-neutral, as lot of external energy is used for cultivation, fertilizers, transportation, storage, etc.

– Every human appears to have 10, 20 or more horses yoked with him (the prime movers that burn fossil fuels and make our current lives comfortable) which consume oxygen and spew out far more carbon dioxide than man would do alone.

– the natural eco-system could perhaps only balance off animal (including man) respiration and plant photosynthesis. Perhaps no surplus carbon sink exists at all to absorb the emissions caused by burning of fossil fuels accumulated in the earth over millions of years.

– In short, no human solution to global warming is possible for the industrial man and industrial civilization is ultimately doomed. It is only a question of how much time we may have in facing irreversible climate change and damage.

RE:44

Where do you live? Because the United States just had a very cold April.

The results to date show that the 1998 models Over-Estimate the impact of GHGs on temperature.

This has important consequences. If the GHG-temperature link is on the low end of the range of estimates (1.5C to 4.5C per doubling), then global warming will not be a significant problem.

The results to date, suggest that the link is indeed on the low end of the range, maybe even below the 1.5C lower limit. If that is the case, then global warming will not be a problem at all.

#60, #48 You can find a working link in a further comment of mine on the MKL paper, K being the Chipster. Now this is not simple blog whoring on my part, but actually evidence of Eli having a senior moment. Among other things, as Knutson and Tulyea point out, Michaels, Knappenberger, and wadda you know Landsea

Bad estimates of forcing are a tradition at New Hope Environmental Services for argument pushing as is

There is nothing new under the hot Virginia sun.

On the one hand, we have Vinod Gupta (#61) saying, “… no human solution to global warming is possible for the industrial man and industrial civilization is ultimately doomed.”

On the other hand, we have John Wegner (#62) saying, “The results to date, suggest that the link is indeed on the low end of the range, maybe even below the 1.5C lower limit. If that is the case, then global warming will not be a problem at all.”

Lord, what fools these mortals be!

John Wegner wrote:

> The results to date show that … global warming will not be a problem at all.

Where did you publish this or where did you find it published?

There are unfortunately a great many people named “John Wegner” who show up in a Google search for climate studies, from a lot of different places; I can’t figure out whether you’re talking about your own publication or something you read.

What’s your source, please, and why do you consider it to be reliable?

Have you reconciled your source with the IPCC’s published statements, or have reason to consider it more reliable?

Sean O (Re comment 24)

I’m an engineer by training myself (recently made the switch to meteorology). Before moving into this field, I worked in the mining industry in Australia. I saw mining companies, including the majors, pour millions, sometimes tens of millions of dollars into projects on the rumour that a significant deposit could be found. Projects have been greenlighted here and overseas with huge risk and uncertainty associated with them. This does not even mention the risks and uncertainty associated with sending people to work in deep shafts.

A seismic event killed a miner here last year and trapped another two for two weeks. There must have been a risk assessment taken on the mine, and uncertainties were no doubt present in this analysis. Yet men were sent into the shaft and put at risk.

The petroleum industry is the best example of this. Test wells in deep water cost over a million US smackers to drill. The expansion into the Gulf of Mexico and the Caspian Sea for meagre returns shows the massive risk and uncertainty associated with petroleum.

My point is that “industry” decisions are full of uncertainty. To wax lyrical about a hypothetical industry that does not act until a near certainty is reached is to break with reality.

To put it in context, our best guess (from GCM models) is that temperatures will rise due to GHG emissions and this carries substantial risk. Our best guess in 1988 has been shown to be remarkably accurate. 2+2 = ?

[[I have faith that science and technology will develop the answers we need, but it will take time. ]]

And in the meantime we should just go on burning all the fossil fuels we want?

The solutions are here now: Solar, wind, geothermal, biomass, conservation. No breakthroughs required. Mass production will in and of itself drive down the capital costs, and of course for solar, wind and geothermal the fuel costs are zero.

[[In short, no human solution to global warming is possible for the industrial man and industrial civilization is ultimately doomed. It is only a question of how much time we may have in facing irreversible climate change and damage. ]]

Switching to renewable sources of energy should do the trick.

I believe that will happen, sooner, rather than later.

When the price of gas goes up to 4 dollars a gallon, as it probably will this summer, you will see consumers flocking to alternate choices.

#58, You brought out a curious, I might say puzzling, reason why the media give any credence to the Michaels and Lindzens of this world, when all they have to do is look at their nonexistent betting records. Good thing they are not putting their money where their mouth is, otherwise they would be quite poor. I will repeat this again and again: anyone interested in doing a story on a climate change expert out there? Look at their temperature projection record. Sports writers do performance statistics all the time; a science story might be just as important!

Re: #45 and the pull quote “a good fraction of it’s going to stay there 500 years”: One of the main points regarding AGW which I took from AIT and have relied on since that time is that CO2 is highly persistent in the atmosphere. It has seemed to me from the beginning that this is the crucial point, because the lag effect and the persistence equate to ‘built in’ warming far into the future.

However, there seems to be hot dispute over the residency time of CO2. I have seen estimates as low as 5 years.

My question is this: what research/reference material is available which justifies the more long-term predictions? I have searched for such corroboration and thus far have come up empty.

Walt Bennett

Harrisburg, PA

# 58.

What “media” are you talking about? Remember, the #1 objective of most media outlets (i.e. the ones that are corporate) is to maximize the wealth of the stockholders, not make editorial decisions about climate scientists’ credibility. This IS a capitalist country you know.

The solutions are here now:

No, they are not. Human beings have overpopulated the Earth far beyond its natural carrying capacity, and are essentially sustained by burning coal, oil, gas and uranium. You need to revisit the concept of ‘numbers’.

RE # 69 Barton, you cannot simply throw down your wild assumption and expect anyone to take you seriously.

How about putting some more thought into:

[Switching to renewable sources of energy should do the trick]

On the first graph it was suggested that B is the best match. From real world experience wouldn’t it be more reasonable to suggest that A is what has happened, i.e. that exponential growth in forcings has occurred? It could be that this is evident in the curve as well both before and after the 1991 volcano in that the curve is steeper than B before 1991 and then after the significant flat portion then crosses B at a similar slope. However, looking at the overall shape of the curve it might be more reasonable to suggest that neither A nor B can be inferred directly from the data. There is too much noise. Unfortunately, it seems that in two regions the data are steeper than A. It would be interesting to see the derivative of these curves to see at what points the slopes are in the best agreement, and when they are not.

re: 62. No, the US did not have “a very cold April”. It was just below average, nationwide. Some regions were considerably above average. The actual NOAA stats indicate it was the 47th coolest April out of the past 113 years and about 0.3 F below average. That is not “very cold” but rather is closer to the middle of the pack, nationally.

April saw the largest demand for gasoline ever recorded. I now think the American people will buy just as much gasoline at $4 plus as they were at $3 plus.

Re #46 Randy: “All these people breathe….”

Comment by Randy

You’re not suggesting that human respiration in any way increases GHGs, are you?

If so you really need to do some reading.

A couple of replies:

James (comment 58), as you know, we have discussed this bet previously, see your posting on it.

And I have admitted that we would have lost. The same holds true today. Yes, we cherry-picked the start of the period of the bet – that was the point. And yes, we would have lost despite that, as indeed there will be no statistically significant downward trend in the global average temperature in the lower troposphere as measured by satellites (take your pick of either UAH or RSS datasets) from January 1998 through December 2007 (the period of our proposed bet). In fact, the UAH data from January 1998 through present (April 2007) is (a non-significant) 0.072 ºC/decade and the RSS trend for the same period is (a non-significant) 0.012 ºC/decade (overall, from the start of the record until now, the UAH and RSS global lower tropospheric trends are (a significant) 0.15ºC/dec and 0.18ºC/dec, respectively). Is there anything more that you would like me to add?

Perhaps another wager? How about this – I’ll take the low end of the IPCC range of projected warming and you take the high of the range, and whoever reality proves to be closer to will be the winner? (note I would have won this bet for a period of the past 20 years using Dr. Hansen’s 1988 projections to define the range – as proven by Gavin’s analysis).

My point here is I personally believe, and probably so do a lot of other folks, that the high end of the IPCC temperature (and forcing) range is unrealistic. My question is why include it? Why did Dr. Hansen include his scenario A, when now, Gavin contends that the real “forecast” was scenario B? What is the real “forecast” now?

Eli (Comment 48, 64), do you think the emissions scenario used in Knutson and Tuleya (2004) was reasonable? (Hint: they assumed a 1%/yr increase in atmospheric CO2 concentration from present day for 80 years – the current rate, depending on how you define “current”, is somewhere between 0.5 and 0.6%/yr.) If they used an “idealized” scenario, then they should have only given an “idealized” conclusion – not one with a date attached to it that is likely to be far sooner than observations suggest. And as far as the “hot Virginia sun” goes, we’ve been overall a bit chillier than normal since about February – kind of unpleasant during winter, but kind of nice, now! :^)

Urs, (comment 55), thanks for the information about global CO2 emissions. I have seen the numbers for 2003 and 2004(?) but nothing since, do you have, or can you point me to, the global CO2 emissions numbers for more recent years? Thanks!

-Chip Knappenberger

to some degree, supported by the fossil fuels industry since 1992

What is the real “forecast” now?

The real forecast is 383 ppm rising at 2 ppm/year, a minimum carbon dioxide sensitivity to doubling of 3 C, adding positive feedbacks, some of which are unknown, yields a 5 C increase in global average temperatures by 2100, and of course, time does not stop in 2100. There is 15 feet of water in West Antarctica just waiting to be released. By all available metrics, we have initiated a ‘carbon dioxide catastrophe’. You can spin it all you want, but the numbers and the paleoclimate history of the planet Earth are already starkly clear to most intelligent, credible and inquiring minds.

RE # 80, Chip, try this link:

http://www.esrl.noaa.gov/gmd/ccgg/trends/

It will give you measurements up to Apr. 2007, both Mauna Loa and global and monthly means going back to 1958.

Mr. Knappenberger, you’ve already used that talking point in this thread, and it’s been answered; did you read the answer? If not look above, find timestamp 10:29 am.

Read through the second paragraph of Gavin’s answer, in green font.

FYI : West Antarctica Melting/QuickScat in the news today :

http://www.livescience.com/environment/070515_antarctic_melt.html

# 78- because gas was cheap in April. Despite the ‘whining’ by us ‘muricans about gas prices, it’s RIDICULOUSLY cheap.

I think $ 4.00 will be a little wake up call.

RE # 81

Thomas, you said:

[There is 15 feet of water in West Antarctica just waiting to be released.]

and add glacial meltback, Greenland meltback and ocean thermal expansion. Yes, they are also being, and waiting to be, released.

So, an honest, intelligent, credible and inquiring mind can ponder this question: Can capitalism survive that amount of sea level rise?

Re: Think outside the box. No need for breakthroughs, I’m optimistic the evolution of existing technologies will make CO2 emissions surprisingly easy to control. My principal worry is businesses, politicians and green pressure groups doing much damage in the process. Witness the stupid biofuel craze, where forests are cleared for energy crops .

#80, MSU data has been questioned once or twice in the past, for instance from NOAA Sep 2005:

“Scientists at the University of Washington {UW}, developed a method for quantifying the stratospheric contribution to the satellite record of tropospheric temperatures and applied an adjustment to the UAH and RSS temperature record that attempts to remove the satellite contribution (cooling influence) from the middle troposphere record. This method results in trends that are larger than the those from the respective source.”

Have we forgotten the UW with the UAH, as with UW-UAH?

From just a guy who sees significant warming trends with a telescope and a digital camera.

The RSS MSU Lower Troposphere temperature trend might now be going down versus 1998.

January 1998 was about 0.6C above average while the latest figures for April 2007 are only about 0.2C above average.

http://www.remss.com/msu/msu_data_description.html#msu_amsu_trend_map_tlt

Mr Roberts (re: 83),

In Gavin’s response that you point to, he concludes “I have previously suggested that it would have been better if they had come with likelihoods attached – but they didn’t and there is not much I can do about that.” I take that to mean that he thinks that all scenarios are not equally likely. I agree. I am taking the position that I don’t think the high end scenarios are very likely at all–for instance, SRES A1FI results in a CO2 concentration of somewhere around 970ppm by 2100, SRES A2 produces ~850ppm. In comment 81, Thomas Lee Elifritz thinks the real forecast for CO2 concentration is 383 + 2ppm/yr which produces ~570ppm by 2100–an amount less than nearly all SRES scenarios! In 1988, Dr. Hansen wrote that his scenario B was “perhaps the most plausible.” So obviously people have opinions as to which scenarios are more likely than others.

Gavin concluded his original post with “Thus when asked whether any climate model forecasts ahead of time have proven accurate, this comes as close as you get.”

So why is everyone being so coy? I am just asking what people think are the most likely scenarios, i.e. which climate model forecasts you think “ahead of time” will prove to be most accurate? My hunch is that not many will give much credence to the high-end IPCC scenarios. Perhaps I will be wrong. You all have opinions, so what are they? And how do they square with the IPCC SRES scenarios?

-Chip Knappenberger

to some degree, supported by the fossil fuels industry since 1992

John Wegner wrote:

> The results to date show that … global warming will not be

> a problem at all.

Where did you publish this or where did you find it published?

re: #86 John, I don’t see any evidence of a decline in the TLT data presented on that page. 1998 was an exceptional year — why would you select it as a basis for comparison? Or did I miss something?

Chip, nobody’s being “coy” — have you looked at the sea level rise numbers, or the Arctic ice area numbers, compared to the projections?

Have you looked at the change in the view of the Antarctic ice, from changeless to changing unexpectedly?

Perhaps you missed this little gem of childhood:

“… a voice came to me out of the darkness and said,

‘Cheer up! Things could be worse.’

So I cheered up and, sure enough, things got worse.”

People like me with no expertise are here to try to learn what’s known, and perhaps to remind the scientists to speak more clearly, use one idea per paragraph, and aim for the national 7th grade average reading level.

You’re here presenting PR positions for your clients, right? paid time?

Or can you differ with their public stance on your own time?

The latest IPCC report explicitly doesn’t include changes the climate scientists tell us are clearly happening, because the changes got published after the cutoff.

http://scienceblogs.com/stoat/2007/04/egu_thursday_1.php

So — opinion? My opinion is I trust the research more than the PR.

Look at the history, from asbestos to lead to tobacco to pesticides to now. What can you tell about what industry PR claims? They’re lies. They minimize risk and put off certainty with …. opinions.

John Wegner wrote:

> The RSS MSU Lower Troposphere temperature trend might now be going down versus 1998.

You’re reading from the error Pielke Senior made and admitted making, using a period that’s too short to determine a trend. It’s blown already:

http://scienceblogs.com/stoat/2007/05/another_failed_pielke_attempt.php

Chip,

The simple answer is that SRES isn’t climate science, it is economics with a bit of politics thrown in.

Turning SRES into climate changes, that is where the climate science comes in.

Note, however, that over 2 decades, the SRES question hardly matters, and you have to go to wide extremes to make a noticeable difference (e.g. Hansen’s A and C forecasts).

Over 100 years, the emissions matter a whole lot more. But over that long a time scale, they are much more a matter of choice, and less a matter of the inertia in the current infrastructure. In no small part, we get to choose whether we prefer A2 or B1 etc. We don’t get to choose climate sensitivity.

> Chip K

Check yourself out here, when wondering why people think estimated ranges may be too conservative:

http://www.wunderground.com/education/ozone_skeptics.asp

[[The solutions are here now:

No, they are not. Human beings have overpopulated the Earth far beyond its natural carrying capacity, and is essentially sustained by burning coal, oil, gas and uranium. You need to revisit the concept of ‘numbers’. ]]

No, thanks, I was satisfied with the calculus, linear algebra, etc. I took way back in my college days.

You need to revisit the concept of “renewable energy.” The world does not have to run on fossil fuels.

[[RE # 69 Barton, you cannot simply throw down your wild assumption and expect anyone to take you seriously.

How about putting some more thought into:

[Switching to renewable sources of energy should do the trick] ]]

Why do you assume I haven’t?

Chip is doing it again, when he tries to trash Hansen by saying

he conveniently neglects to mention that the 1988 paper characterized Scenario A :

James Annan in comment 95 is exactly right: in the long run the actual scenario is a matter of political will; in the short run it is pretty easy to get it right and to bracket the possibilities, something that Hansen recognized from the get-go and that Chip is trying to obfuscate.

My point here is I personally believe, and probably so do a lot of other folks, that the high end of the IPCC temperature (and forcing) range is unrealistic. My question is why include it? Why did Dr. Hansen include his scenario A…

– Comment by Chip Knappenberger – 16 May 2007 @ 11:39 am

Am I missing something? I’m not a climate scientist or engineer so the question isn’t snark. Don’t the high-, intermediate- and low-end scenarios present a range of model predictions, each based on a grouping of different assumptions & factors? And wouldn’t the purpose of this, especially in the relatively early stages of a research program, be to provide a basis for testing data against the model scenarios in order to evaluate the various assumptions & factors? Why then wouldn’t you include all three? If you were to leave out one or more possible scenarios, even if at the time you don’t necessarily consider them likely, wouldn’t you be opening yourself to charges of not being rigorous in your approach? It seems that including A, B and C and then testing the real world data against each is science 101. Certainly even in applied engineering design there are scenarios that may not be judged at the time to be likely but are still considered, especially if the consequences of not doing so could reflect on the credibility of the design team.