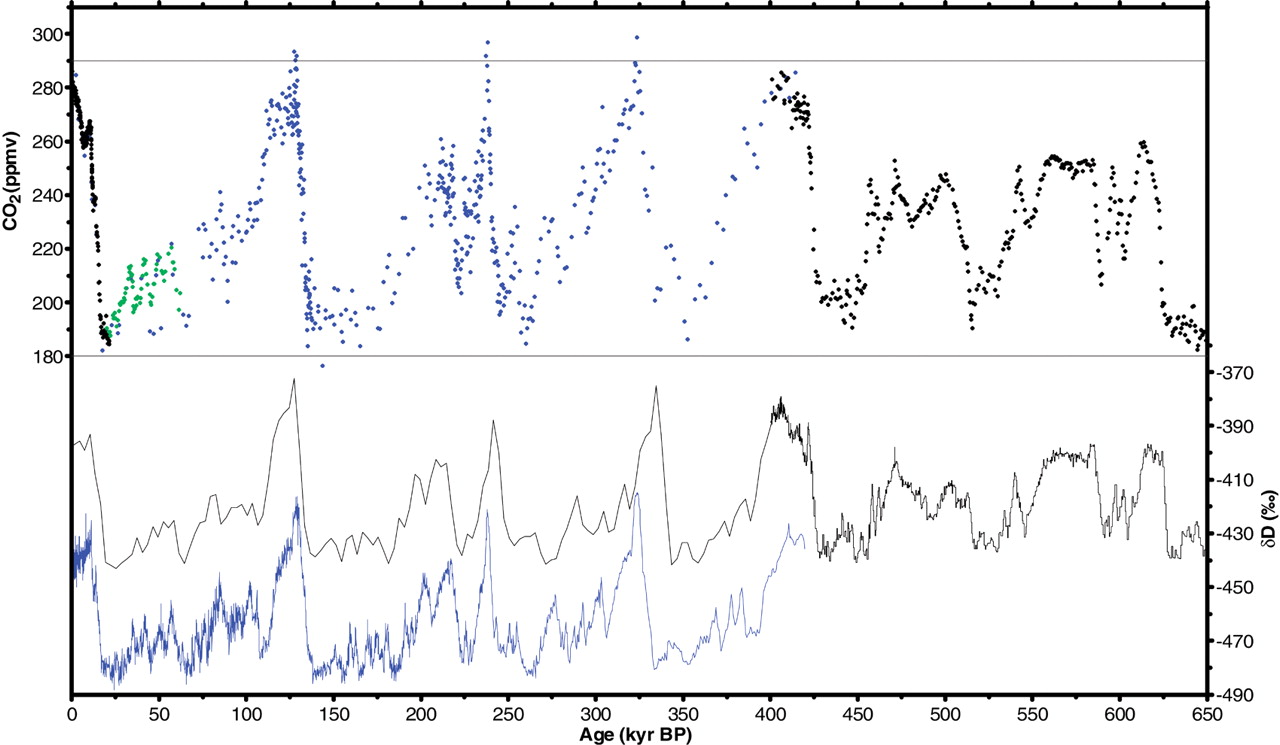

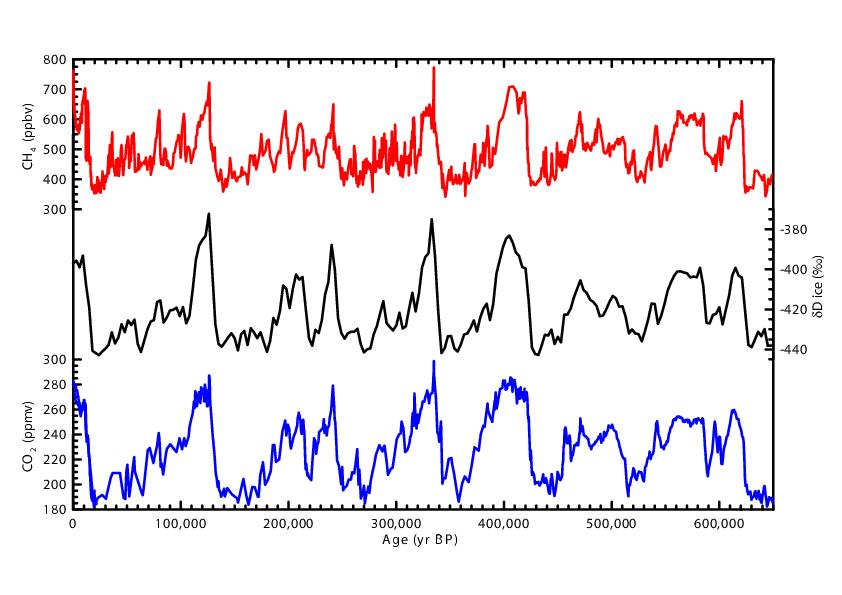

The latest results from the EPICA core in Antarctica have just been published this week in Science (Siegenthaler et al. and Spahni et al.). This ice core extends the record of Antarctic climate back to perhaps 800,000 years, and the first 650,000 years of ice have now been analysed for the greenhouse gas concentrations trapped in tiny bubbles. The records for CO2, CH4 and N2O all confirm the Vostok records that have been available for a few years now, and extend them over another four glacial-interglacial cycles. This is a landmark result and a strong testament to the almost heroic efforts in the field to bring back these samples from over 3 km deep in the Antarctic ice. So what do these new data tell us, and where might they lead?

First of all, the results demonstrate clearly that the relationship between climate and CO2 that had been deduced from the Vostok core is remarkably robust. This is despite a significant change in the pattern of glacial-interglacial cycles prior to 400,000 years ago. The ‘EPICA challenge’ was laid down a few months ago for people working on carbon cycle models to predict whether this would be the case, and most of the predictions were right on the mark. (Who says climate predictions can’t be verified?) It should also go almost without saying that lingering doubts about the reproducibility of the ice core gas records should now be completely dispelled. That a number of different labs, looking at ice from different locations and extracted with different methods, all give very similar answers is a powerful indication that what they are measuring is real. Where there are problems (for instance with N2O in very dusty ice), those problems are clearly identified and those data discarded.

Secondly, these results will allow paleoclimatologists to look in detail at the differences between past interglacials. The three before our current one look quite similar to each other and were quite short (around 10,000 years). The one 400,000 years ago (Marine Isotope Stage 11, for those who count that way) was hypothesised to look more like the Holocene and appears to be significantly longer (around 30,000 years). Many of the details, though, weren’t completely clear in the Vostok data, but they should now be much better resolved. This may help address some of the ideas put forward by Ruddiman (2003, 2005), and also help assess how long our current warm period is likely to last.

More generally, since the additional interglacials that are now resolved have very different characteristics from the more recent ones, they may allow us to test climate theories and models over a whole new suite of test cases. To quote Richard Alley: “Whether you’re a physicist, a chemist, a biologist, a geologist, or any other ‘ist’ studying the Earth system, there is something in these data that confirms much of your understanding of the planet and then challenges some piece of your understanding”. It’s all very exciting (for us ‘ists’ at least!).

Update 1 Dec: Thomas Stocker sent in a better figure of the composite results for CO2, CH4, and the isotopes.

Re #46: where did “The direct influence for a CO2 doubling is some 0.68 K (or 0.23 K for the current level), based on its absorption bands” come from? According to Houghton (2004) the direct influence for a CO2 doubling alone is 1.2 K. And that’s down substantially from Manabe and Wetherald’s (1967) estimate of 2.0 K.
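For readers weighing the 0.68 K and 1.2 K figures, a minimal sketch of the usual no-feedback estimate may help. It assumes the simplified logarithmic forcing fit ΔF = 5.35 ln(C/C0) W/m² (Myhre et al., 1998, the fit used in the IPCC 2001 report) and an assumed Planck response of about 3.2 W/m² per K; both constants are illustrative choices rather than values given in this thread, and the function names are hypothetical.

```python
import math

# Simplified CO2 forcing fit (Myhre et al., 1998): dF = ALPHA * ln(C / C0), in W/m^2.
ALPHA = 5.35

# Assumed Planck (no-feedback) restoring rate in W/m^2 per K; illustrative value.
PLANCK_RATE = 3.2


def co2_forcing(c_ppm, c0_ppm=280.0):
    """Radiative forcing (W/m^2) from raising CO2 from c0_ppm to c_ppm."""
    return ALPHA * math.log(c_ppm / c0_ppm)


def no_feedback_warming(c_ppm, c0_ppm=280.0, planck_rate=PLANCK_RATE):
    """Equilibrium warming (K) if only the Planck response acts, with no feedbacks."""
    return co2_forcing(c_ppm, c0_ppm) / planck_rate


doubling = 2 * 280.0
print(f"doubling: {co2_forcing(doubling):.2f} W/m^2 -> {no_feedback_warming(doubling):.2f} K")
```

With these assumptions a doubling gives roughly 3.7 W/m² and a little over 1.1 K, in the neighbourhood of the 1.2 K quoted from Houghton; other reasonable choices of the Planck rate shift the result somewhat, but not to half that value.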

On the subject of ice cores, I saw a talk by JP Steffensen recently on the current status of the NGRIP timescale. The results are pretty bloody exciting: they’ve got major deuterium excess changes at the onset of the Younger Dryas occurring in a year. A year!

Gavin #43

I think you may have missed the point.

“…that there has been no drift of the mole fraction, cannot be rejected for any of the Primaries with the statistics we have. Therefore, we assume that there has been no drift until now… The above does not imply that the defined WMO Scale has not drifted”.

They are talking about an unknown amount of “drift” possibly (probably?) over and above the amount they have recalibrated for.

Their own page on preparation and stability (extracts below) is littered with examples of potential drift, and those are only the ones they know about now. What others remain as yet undetected?

The manufacturing target of the CO2 test canisters is “within 5 umol/mol for near ambient concentrations.” But this appears to be greater than the annual measured change of 1.3 ppmv.

“The zero air from both trapping agents was tested and found to be less than 5 umol/mol for CO2.”

“Aquasorb is phosphorus pentoxide (P2O5). However, CO2 was found to increase by 2-5 umol/mol with this new drying material. This problem and the safety issue of cycling phosphoric acid in a high pressure system prompted the decision to discontinue use.”

“The steel cylinders used in the past exhibited too much drift in CO2 mixing ratios, with half of these drifts on the order of 0.05 umol/mol CO2 per year [Komhyr, 1985] could be at different rates or of a different sign in each cylinder.”

“There are some cases (8%) with evidence of drift greater than 0.045 umol/mol per year. For this reason, it is recommended that standards be recalibrated during long use and at the end of their useful volume. The drifts, as shown in the histogram, cannot be predicted. Drift in most of our standards is often undetectable for histories of less than 2.5 years. In some documented cases the drift was attributed to H2O mixing ratios greater than 5 umoles mole-1, stainless steel valves, or valves with packing materials, which preferentially absorb trace gases. There may be an increasing trend in the CO2 with decreasing cylinder pressure below 20 atmospheres. This is different in each cylinder or may not be present at all.”

“There have been some cases of major drift (0.05 per mil 13C per week) of the CO2 stable isotopes, attributed to the Teflon paste used as the cylinder valve sealant and also to unknown regulator contamination. There may be some evidence for long-term drift of CO in aluminum cylinders as exhibited in smaller high-pressure cylinders [Paul Novelli, CMDL, personal communication, 1999]. This drift, however, is on the order of the measurement precision and has not yet been well quantified.”

“Drifts have persisted for months due to evacuating the cylinder down to vacuum before filling. Drifts due to spiking, discussed below, have been eliminated by filling the cylinder immediately after spiking and filling the cylinder laid down. There is no evidence to show drifting due to the filling process described below. As a result of these experiences and to remain conservative about long-term unknown effects, the following steps have been adopted by CCGG…”

“The zero air is created with specific chemical traps for each trace gas. Carbon dioxide can be removed with sodium hydroxide, Ascarite (Thomas). Carbon monoxide is removed with Schutze reagent. The zero air from both trapping agents was tested and found to be less than 5 umol/mol for CO2.”

“Occasionally the targeted concentration is missed by an amount that requires adjustment. If the concentration of the standard is closer to ambient than the target concentration, it is easiest to blow this off and start over. Experimenting with introducing small volumes of high pressure (high or low concentrations) into the air stream have proven very time consuming due to the time necessary for the cylinder to become evenly mixed before obtaining a usable measurement from the standard.”

“There is evidence that as the volume of pressurized air is used up, the trace gas can come off the walls in disproportionate amounts. This has sometimes been seen in cylinders with CO2 when a standard is used at pressures below 20 atm. Thus CO2 mole fraction may increase with pressure loss. This effect is variable and may not be measurable in all tanks.”

NOAA Technical Memorandum ERL-14: CMDL/Carbon Cycle Greenhouse Gases Group Standards Preparation and Stability, by Duane Kitzis and Conglong Zhao. http://www.cmdl.noaa.gov/ccgg/refgases/stdgases.html

Remember that they are claiming to measure changes of 1.5 ppmv over a year accurately.

If they have made a mistake, or there is drift in the primary calibration, then all the figures produced by this method may be wrong.

Science should be about independent testing and replication, but if all the testers are using the same equipment and the same calibration of the primary standards, then there is in fact no independent verification of the method or the calibration.

See also http://www.cmdl.noaa.gov/ccgg/refgases/reg.guide.html (Guidelines For Standard Gas Cylinder and Pressure Regulator Use) for how complicated all this is in practice.
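For scale, the drift rates quoted from the NOAA memo can be compared with the annual rise quoted in the same comment. This is a back-of-envelope sketch that uses only numbers from the excerpts, treating umol/mol and ppmv as equivalent for CO2; the variable and function names are illustrative. The 5 umol/mol manufacturing target is left out because the memo appears to describe it as a tolerance on the fill concentration rather than a drift rate.

```python
# Drift figures taken from the NOAA excerpts above, in umol/mol (~ppmv) per year.
DRIFTS = {
    "steel-cylinder drift [Komhyr, 1985]": 0.05,
    "threshold for the flagged 8% of cases": 0.045,
}

# Annual atmospheric CO2 increases quoted in the comment, in ppmv per year.
ANNUAL_RISES = (1.3, 1.5)


def drift_fraction(drift_per_year, rise_per_year):
    """Share of one year's atmospheric rise that a given calibration drift would mimic."""
    return drift_per_year / rise_per_year


for label, drift in DRIFTS.items():
    for rise in ANNUAL_RISES:
        print(f"{label}, rise {rise} ppmv/yr: {drift_fraction(drift, rise):.1%}")
```

On these numbers, a cylinder drifting at 0.05 umol/mol per year would account for roughly 3 to 4 percent of a 1.3 to 1.5 ppmv annual increase.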

One comment and one question:

Comment: Aren’t we fighting Occam’s Razor here? We keep coming up with reasons to explain away the obvious: there is a reason for the CO2/temperature lag that still supports man-made climate change. There is a reason that even though CO2 and temperature patterns clearly are cyclical, this time it is different. Even though many scientists believe a priori in man-made climate change, and also design, run, and analyze their own studies, their results are still considered objective. At what point do we say enough is enough and that we really don’t know?

Question: We know that large volcanic eruptions can cause 1+ year winters and yet nature adapts. We know that the earth makes large swings in temperature on its own, albeit over a long period of time. We know that another ice age is all but inevitable. So isn’t it plausible that IF there is man-made global warming, nature will adapt? For all we know, in 500 years, the bigger concern could be a new ice age, right?

[Response: Of course “nature will adapt”. But this is like saying that during the Great Depression, the economy “adapted”. If you are the particular working stiff, or the particular plant, animal, or economic system affected, you might not personally benefit from the long-term adaptation. Indeed, your argument is a bit like saying there is no reason to fight infectious disease, since eventually evolution will take over and some people will survive, i.e. society will adapt. Your suggestion is not a scientific idea but a philosophical one, and not a very well thought out one at that. Sorry to be harsh, but you are treading on very well covered and uninteresting ground. As for the point about volcanoes, you are confusing high-frequency forcing with low-frequency forcing. If I hit my car, it shakes but doesn’t move. If I apply slow, steady pressure, I can move it. That’s the difference between high- and low-frequency forcing. The anthropogenic excess greenhouse effect is all about the latter. –eric]
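The car analogy can be made concrete with a one-box energy-balance model, C dT/dt = F(t) - λT, which is a standard textbook simplification and not something taken from the post. The heat capacity, feedback parameter, and both forcing histories below are hypothetical, illustrative values: a one-year volcanic pulse of -3 W/m² and a slow ramp of 0.04 W/m² per year.

```python
# Hypothetical one-box energy-balance model: C * dT/dt = F(t) - LAMBDA * T
C = 8.0        # assumed effective heat capacity, W yr m^-2 K^-1 (roughly a 60 m ocean mixed layer)
LAMBDA = 1.25  # assumed climate feedback parameter, W m^-2 K^-1
DT = 0.01      # integration time step, years


def integrate(forcing, years):
    """Forward-Euler integration; returns the temperature anomaly (K) after each step."""
    t, temp, out = 0.0, 0.0, []
    for _ in range(int(round(years / DT))):
        temp += DT * (forcing(t) - LAMBDA * temp) / C
        t += DT
        out.append(temp)
    return out


def volcano(t):
    """Illustrative high-frequency forcing: -3 W/m^2 for one year, then nothing."""
    return -3.0 if t < 1.0 else 0.0


def steady_ramp(t):
    """Illustrative low-frequency forcing: grows by 0.04 W/m^2 every year."""
    return 0.04 * t


pulse = integrate(volcano, 50)
ramp = integrate(steady_ramp, 50)
print(f"volcanic pulse: peak {min(pulse):.2f} K, after 50 yr {pulse[-1]:.3f} K")
print(f"sustained ramp: after 50 yr {ramp[-1]:.2f} K")
```

With these assumptions the pulse cools the box by roughly a third of a kelvin and has essentially vanished within a few decades, while the ramp keeps accumulating warming (more than 1 K after 50 years). The response time C/λ, about 6 years here, is long compared with a brief pulse but short compared with a slow, sustained forcing.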

Re #53

Most of the cited drift discussion is irrelevant as it pertains to particular cylinders, not the overall measurement system. In any case, there are multiple lines of independent corroborating evidence (like isotope ratios) as well as good physical reasons to expect atmospheric concentrations to rise (burning 6e9 tons of fossil carbon per year). It is healthy to consider the possibility of drift, but silly to postulate it in absence of any evidence. You might as well argue that all our measurements have drifted because the standard meter in Paris shrank.

[Response: Agreed. Furthermore, one can take an off-the-shelf diode laser system (and there are hundreds of these in circulation) and measure CO2. You will get 375+ ppm CO2 if you do this outdoors. If you had done it 100 years ago you would have gotten 300 or so. That is a huge change, easily measurable even on a poorly calibrated instrument. This is not a difficult measurement. For context, in our lab we regularly measure differences that are 1000 times smaller. –eric]
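The mass-balance point behind the 6e9 tons figure can be checked with a short calculation. This sketch assumes the commonly used conversion of about 2.12 GtC per ppm of atmospheric CO2 and takes the “about half” uptake mentioned later in this thread as the airborne fraction; the function name is hypothetical.

```python
GTC_PER_PPM = 2.12  # assumed conversion: GtC of carbon per 1 ppm of atmospheric CO2


def ppm_rise_per_year(emissions_gtc, airborne_fraction):
    """Annual CO2 increase (ppm) if a given fraction of emitted carbon stays in the air."""
    return emissions_gtc * airborne_fraction / GTC_PER_PPM


for fraction in (1.0, 0.5):
    print(f"airborne fraction {fraction:.0%}: {ppm_rise_per_year(6.0, fraction):.1f} ppm/yr")
```

Six billion tons of carbon a year would raise CO2 by roughly 2.8 ppm annually if all of it stayed airborne, and by about 1.4 ppm if half is taken up, close to the 1.3 to 1.5 ppmv per year quoted above.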

#54 – “For all we know, in 500 years, the bigger concern could be a new ice age, right”?

Wrong.

http://en.wikipedia.org/wiki/Paleocene-Eocene_Thermal_Maximum

Re #46: Ferdinand, what is the basis of your statement that “The direct influence for a CO2 doubling is some 0.68 K”? The IPCC 2001 report (section 1.3.1) claims 1.2 K, before feedbacks. If you have different information showing the real value is only half that much, I would like to know what it is.

Re #54

Check Google Scholar and you’ll find that the time scale for the next ice age is more like 500 centuries than 500 years. From the perspective of immobile species and long-lived infrastructure, there’s a huge difference between a rapid multidecadal temperature increase and the transient from a volcanic eruption. Nature always adapts, but the process may not be painless.

Re 55

So you accept that some of the drift discussion is relevant. Thank you.

Some of it you say is irrelevant because it relates to particular cylinders, but particular cylinders are what are used in the measuring process.

As to corroboration by isotope ratios, it seems also to be an inexact science:

Hitoshi Mukai (NIES, Japan)

Many laboratories… are analyzing carbon and oxygen isotope ratios of atmospheric CO2 to study carbon cycle on the earth… However, previous inter-comparison activities like CLASSIC clearly showed difficulty in obtaining the same results when different laboratories analyzed the same sample. Although there are many laboratories involving CO2 isotope analysis, there has been no handy reference material to compare their scales to each other. http://www.members.aol.com/mhmukai/WMOreport.htm

As to the “good reason to expect”, if one goes into these things prejudging the outcome then it is no surprise that the expected outcome is achieved.

“You might as well argue that all our measurements have drifted because the standard meter in Paris shrank.”

Well if we were still using the original Paris meter without knowing it had shrunk, then we would indeed be getting our sums wrong.

re #54: Your perception that things are in doubt which in fact aren’t in doubt at all seems to be causing your razor to cut the wrong way.

We know from first principles that all else equal, a planet with more greenhouse gases in its atmosphere will be a warmer one at its surface. We know from simple mass conservation arguments that we are causing a huge relative shift in the concentration of such gases.

The simplest expectation is a warming surface and a cooling stratosphere. We also observe about the right amount of surface warming and stratospheric cooling. People who allege that this is mere coincidence have a whole lot of ‘splainin’ to do. Why didn’t the CO2 cause warming, and if it didn’t what else did? And what about that stratosphere?

I am glad you appeal to Occam, but I suggest you not appeal to willful ignorance in the process. You need to look for the simplest explanation that actually accounts for the evidence. Throwing away evidence is not the idea at all.

Finally, I think scientists, by definition, have no a priori beliefs in anything, and I am very disappointed that you make an argument based on an assertion to the contrary.

Scientists, actually, rather like surprises. It gives us something to think about.

re #53; Your argument might carry more weight had you not signed it with the same name as you used in the demonstrably uninformed comment #27. It’s clear you are inexpert about the basic facts of climatology. So why, then, are you making assertions rather than asking questions? Are you interested in the facts of the matter, or have you already chosen a policy position and therefore seek to get the facts to conform?

Anyway, exactly where do you propose the excess carbon flux from fossil fuels is going, and why do you suggest the measurement curve is trending sharply upward even though the excess carbon is going some mysterious somewhere? (Obviously measurement error won’t account for the consistent trend.)

Re: #56 and #58

500 years was a number I rather rashly threw out there; my mistake. What I am referring to is the belief among some scientists that we are in a brief warming period between glacial advances. I believe there was an article in Nature several years ago about a European study that stated the next ice age may occur within the next 10k to 20k years. If you are interested, I can dig it up in my web bookmarks and post it.

Re: #59

Starting with the last point first: the definition of a scientist doesn’t create reality, and I find it naive to believe that all (or even most) scientists start with no a priori beliefs, especially those involved with climate change research. Why do many students in college choose to study environmental sciences in the first place? Isn’t it because many are concerned about climate change and the effects man is having on the environment? While admirable, this also means that these people do have preconceived ideas, and hence strict, structured scientific methodologies need to be followed in order to help ensure objective results.

And while there are charges that global warming skeptics receive funding from mining and oil companies and are therefore biased, scientists on the other side of the fence receive funding from environmental groups and government organizations, both of which gain publicity and power when research supports the existence of global warming, since it gives these organizations a reason to exist. Would Al Gore have pushed for funding for scientists who repeatedly had solid research showing that global warming wasn’t occurring?

Let me state that I used to believe man-made global warming was fact. However, when I started looking at more and more historical data from different studies, my skepticism grew. Perhaps I am ignorant in my observations, and I am surely not aware of all the research that exists on climate change. So I propose this: I will list the data and research that have influenced my current position, and perhaps others can post reasons or links that discredit this research.

1. Vostok data: to me, it looks like we are in an upward swing of a warming pattern that will eventually turn the other way, albeit over tens of thousands of years.

2. Temperature data from the NOAA (http://www.ncdc.noaa.gov/paleo/pubs/briffa2001/briffa2001.html): In both plates 2 & 3, many of the trendlines don’t show anything different from what we see over the last 1000+ years. While some do, it hardly seems conclusive. Am I missing something?

3. Other things such as the earth’s orbit, tilt, and electromagnetic fields can also play into global temperatures, along with volcanic eruptions, solar events, etc. Have these all been ruled out? And if not, isn’t it possible that temperature increases caused by these factors also increase greenhouse gas levels, à la the “lag theory”?

4. How do we know at what point temperatures will level off, even with a continual increase in greenhouse gases? Will it increase linearly forever? And how can we really measure this, given all the elements at play?

5. Assuming humans are creating an increase in greenhouse gases, isn’t it possible that the earth will counterbalance these actions? For example, there is a theory that an increase in CO2 would cause an increase in plant life, which then reduces the CO2, etc. Is this not realistic?

6. Large volcanic eruptions like Krakatoa in the late 1800s reduce temperatures by a significant amount for several years, but temperatures right themselves shortly thereafter. Hence, the argument that by slightly affecting temperatures over a period of several hundred years humans could cause long-term damage to the earth seems less plausible to me.

8. From a philosophical perspective: if the goal of fighting global warming is to help mankind live well on planet earth, wouldn’t we be better off using our economic, political, and scientific resources to fight the preventable diseases that cause the deaths of millions of children every year?

Re: 62

In terms of being between glaciations, that is pretty much the point of the whole article. It appears that the current interglacial would come to an end in 10,000 to 20,000 years were there no man-made perturbations.

When it comes to scientific bias; this is why we have the whole peer review process. Science does abandon cherished ideas when they are demonstrated to be false. But as an opposite argument – given the stance of the current US government on climate change, I would strongly suspect that any national lab that started coming out with countervailing evidence would get a big funding hike. And this whole point is irrelevant until someone actually comes up with evidence that climate scientists are systematically modifying their results to support a given conclusion.

To your numbered points:

(1) If you go out and find a temperature record of the past 10,000 years, you will see that temperatures maxed out around 5,000 to 6,000 years ago and went into a very slow decline from then. You can see this in the graph attached to this article: the highest temperatures are attained just after deglaciation. It is incorrect to think that we are currently in a natural long-term warming pattern.

See: http://www.aip.org/history/climate/xCampCent.htm

(2) You are missing the point that local temperatures don’t all have to change.

(3) All of the things you mention (apart from ‘electromagnetic fields’) are included in current models, although things like orbital changes are far too slow to account for the observed warming. The idea that GHG concentrations can lag warming that started from other causes is actually very bad news, since it implies that human-induced warming could lead to even more natural GHG release.

(4) Temperatures will not increase in a linear fashion, and we don’t know if they will level off. Of course, most of the uncertainty here is on the upside.

(5) There is a vast amount of evidence showing that humans are increasing GHGs (Including some older articles on this site). About half of the emissions are taken up by the biosphere. It is highly unrealistic to think that all emissions could be taken up; that would imply that CO2 concentrations could never change for ANY reason.

(6) Most species can survive short term transient effects; long term climate zone shifts are a different matter. However, for Humans, the biggest problem is that many of our cities – representing vast investments of capital and materials – may either vanish below sea level or lose their water supply and become uninhabitable.

(8) That is in many ways a false dichotomy; it assumes that reducing GHG emissions actually costs money, to start with.

Here is something that occurs to me on reading your post – in a series of points, you are making some fairly scattergun arguments. Is this a real position, or simply using the rhetorical tactic of flinging as much mud as possible in the hope that some sticks?

Re #62, #63: “flinging as much mud as possible”. Exactly the problem.

There is no coherent intellectual or scientific position with which we find ourselves arguing. Indeed, we don’t want to “argue” in any sense commonly understood by legal and political types. We want to consider and present the bulk of scientific evidence, including the parts that are well-understood and the parts that are in question.

That is why it is a good idea for the editors of the site to refrain from making any policy recommendations. As a community it is our obligation to help the policy sector and the public to understand the evidence. In the face of deliberate opposition to this goal, it is our obligation to try harder and in a more sophisticated way. While as individuals we may prefer one policy or another, as scientists our obligation ends once the state of knowledge is reasonably well perceived by the policy sector and by the general public. On the other hand, as long as confusion abounds, history clearly shows that we are morally required to try to correct it. Unfortunately, we have not been doing a good job of this. The culture of the geophysical sciences did not emerge in the context of deliberate obfuscation in which we find ourselves today, and so we find ourselves needing to develop new skills.

The main tactics of the obfuscation are 1) to try to present the appearance of a scientific debate on matters where there isn’t any and 2) to try to attribute political motives to the scientific community, where any effort to elucidate the high degree of confidence that has emerged in various important matters of immediate policy importance is deliberately conflated with advocacy.

People who use expressions like “scientists on the other side of the fence”, or “used to believe in global warming” or other such nonsense tend to spend a great deal of attention quibbling with various points. Most of the quibbles are of marginal importance (did Mann et al keep sufficient records of their work in the mid-90s to replicate their results exactly?) or are utterly without rational support (could the abrupt increase in CO2 in the 20th century somehow be a measurement artifact?). The objective of this nonsense is to create the appearance of a debate. Many people, as a result, believe in good faith that there is a debate, and may in good faith propagate the confusion. Something debate-shaped emerges, but it’s a travesty of a debate.

The exact import of all the evidence continues to be a fascinating scientific field and one with a great deal of both intellectual merit and practical consequence. We would like to discuss these matters here. Instead, we get a barrage of disconnected and hostile questions.

The most important point is that there really is no fence to be on the other side of. There will always be outliers in the distribution of scientific opinion on any open question. It does very little good, morally or intellectually, to draw a fence around those outliers whose opinion expects the least impact from anthropogenic change and cast this as some sort of meaningful debate between opposing schools of thought.

It’s certainly possible that a scientific question can have a lumpy distribution, and that there might be competing schools of thought, but it simply isn’t the case in the climate sciences today, especially as regards the policy related questions that the public seems to think are our only obsession.

The few remaining well-informed people who are outliers on the question as to whether restraints on net CO2 emissions are timely do not form a meaningful cluster of opinion and they present no coherent theory. Fossil fuel interests who are motivated to delay the policy response make the best of their dwindling intellectual resources. (That they are dwindling is unsurprising, as evidence continues to pile up that the mainstream view that emerged in the ’80s on anthropogenic climate change is essentially correct.) They try to drum up a ‘debate’ so that they can continue to extract value from their property as long as possible without restraint. One could imagine more sophisticated ways for these interests to protect their financial positions, and indeed some corporate energy interests are doing so, but in fact it is clear that some of them are resorting to a rather crude and morally dubious approach of muddying the waters of honest debate based on facts with the pollutant of manipulative argument designed to support a particular political agenda.

The reasons for their unfortunate success in creating something that looks at first glance like a legitimate debate are well outside the range of topics appropriate for RealClimate, but the fact of their success is obvious from the sorts of discussions that appear here.

There is currently no serious competing theory to the scientific mainstream on this question. That is why the critiques of the policy-relevant aspects of the science are inconsistent and incoherent.

Even more striking is the complete absence of sensible critiques of any aspects of the intellectual core of the discipline. If we were as deeply wrong as many wish to believe, there would have to be some very fundamental and interesting core error in our reasoning. No one tries to find any such thing. There is only tedious sniping.

RE #62, point 8: I’m in favor of recycling, but in the case of this and other specious arguments deriving from Bjorn Lomborg I’m thinking source reduction might be the better approach. :) On the point, though, it might be worth considering that we have the resources to deal with the disease problem you describe right now, and simply choose not to. You may be interested to know that one of the near-term impacts of global warming is to make this disease problem worse.

RE: 64

Thanks for your responses – I appreciate the discussion and my intention is not merely to ruffle feathers but rather to foster open debate.

I agree with your comment that this should not be a policy discussion, but a data-centric discussion. While most of my points were regarding existing data and theories/models, some were of a policy nature, and I apologize for this.

However, I take issue with your comment in which you say there aren’t relevant or meaningful opposing views among scientists. There are many scientists who express scepticism about current global warming theories. Moreover, I refuse to accept consensus as the standard by which I measure whether or not a theory is accurate. Hard data, as you suggest, is the best method. And if it is so clear that there is man-made climate change, my rantings should be easy to refute :-)

Data: I direct your attention to a graph of temperature change trendlines from multiple studies that seems to pop up a lot: http://www.ncdc.noaa.gov/paleo/pubs/briffa2001/plate3.gif . All but one trend line show recent temperature changes that are not drastically different from what the earth has experienced in the last 1000 years, and the one trend line that shows a spike only goes back 150 years.

As I am obviously :-) not a member of the scientific community, please explain these results.

Re 64

And if it is so clear that there is man-made climate change, my rantings should be easy to refute

So far they have been. ;)

All but one trend line show recent temperature changes that are not drastically different from what the earth has experienced in the last 1000 years, and the one trend line that shows a spike only goes back 150 years.

Check the various hockey stick and ocean heat posts here. All but one of those lines are reconstructions that stop somewhat short of the most recent warm decade. The one with the spike is the instrumental record. The key is not whether the instrumental record lies far outside history, but that there’s no natural forcing to explain its spike.

#59 I just want to be sure that I get this right. You are saying that hundreds of labs around the world with very different techniques are all making an error because they are in one way or another linked to the NOAA standard, and so they are all consistently but erroneously measuring a drift of 80 ppm. Is this right?

I just told this to the head of our CO2 lab over lunch; she is still a bit frantic, and I am not sure I understood her answers correctly.

Re 59 (CO2 measurement drift)

So you accept that some of the drift discussion is relevant. Thank you.

Some you say is irrelevant because it relates to particular cylinders, but particular cylinders are what are used in the measuring process.

I only said “most” because I didn’t feel like rereading the article three times to be sure of “all.” While there might be some way to construct an argument for overall drift, it would have to be minuscule. Individual cylinders don’t matter because they get swapped out and recalibrated from time to time. If there were a long-term drift in a cylinder, it would be noticed through differences at swap time or across labs. This would be especially obvious for high drift rates (which had short durations) or inconsistent signs. Even if the year-to-year drift of a particular cylinder were as great as the atmospheric trend, that doesn’t mean the whole measurement system could produce a spurious 30% change over 30 years.
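The swap-and-recalibrate argument can be illustrated with a toy simulation. This is a minimal sketch under loudly stated assumptions: the function `measured_trend`, the illustrative 315 ppm starting value, the uniformly random per-cylinder drift rate and the fixed swap interval are all hypothetical choices, not a model of any real lab’s calibration chain. It fits a straight line to a record in which each reference cylinder drifts at its own random rate until it is replaced by a freshly calibrated one.

```python
import random


def measured_trend(true_trend_ppm_per_yr, drift_ppm_per_yr, years, swap_every, seed=0):
    """Toy model (hypothetical): the reference cylinder drifts at a random rate
    in [-drift, +drift] ppm/yr, but is swapped for a freshly calibrated cylinder
    every `swap_every` years. Returns the least-squares slope of the record."""
    rng = random.Random(seed)
    xs, ys = [], []
    offset = 0.0
    rate = 0.0
    for year in range(years):
        if year % swap_every == 0:
            offset = 0.0  # new cylinder, calibrated against the standard
            rate = rng.uniform(-drift_ppm_per_yr, drift_ppm_per_yr)
        xs.append(year)
        # 315 ppm is only an illustrative starting value
        ys.append(315.0 + true_trend_ppm_per_yr * year + offset)
        offset += rate  # drift accumulates only until the next swap
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Drift as large as the trend itself, cylinders swapped every 3 years
for seed in range(5):
    print(round(measured_trend(1.5, 1.5, 30, 3, seed), 2))
```

Because the offset is reset at every swap, it never exceeds two years’ worth of drift in this setup, so the fitted slope stays close to the true 1.5 ppm/yr; drift that is reset at each recalibration cannot build up into a long-term trend.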

As to corroboration by isotope ratios, it seems also to be an inexact science:

Since the 13C trend is at least 3x the size of the interlab error it again seems implausible that drift could account for the difference.

As to the “good reason to expect”, if one goes into these things prejudging the outcome then it is no surprise that the expected outcome is achieved.

Noting agreement between independent lines of evidence is not the same as prejudging the outcome. I seriously doubt that scientists setting up monitoring at Mauna Loa in 1958 fooled themselves because they were looking for a particular trend. The burden is on you to identify a plausible mechanism for a long-term drift of >30% in multiple instrument types and a plausible way for sink uptake to increase by 6 GtC/yr without any increase in atmospheric concentration.

Re: #66

You’re misreading the graph. Only one of the datasets in the NGDC graph (the observed temperatures) extends until 2000; the others stop around 1980 or earlier. This leads to the illusion that only one record shows an unusual trend. Every dataset in the graph shows higher temperatures in the 20th century than at any other time in the record.

Michael, thank you for that powerful #64; I like the way your argument is developing, esp. wrt the point that our work did not emerge in the context of deliberate obfuscation. I’d like to think Shellenberger and Nordhaus have come up with a coherent response to this condition. In addition, I’d explicitly say that responding to the deliberate obfuscation/mendacity/FUD spread so far and wide in comment threads is not “debate”.

Best,

D

RE: 66

I’m not sure the graph you posted (the link needs to be cleaned up by deleting the ). at the end) really shows what you say it does. You write that “All but one trend line show recent temperature changes that are not drastically different from what the earth has experienced in the last 1000 years”, but I see a temperature shift from -0.35 to +0.25 degrees Celsius, or about 0.6 K, since about 1920. There is no comparable period showing such a big change in such a short time in any of the graphs, except perhaps the Crowley & Lowery curve between 1850 and 1950. But compared to the observations, this seems to be one of the most unreliable reconstructions for that period (or else the observations would have to be flawed).

You might also want to take a look at this:

http://en.wikipedia.org/wiki/Image:1000_Year_Temperature_Comparison.png

Re #39 (comment),

Eric, the work of Cuffey and Vimeux was used by Jouzel ea. to correct the Vostok temperature curve reconstructed from the deuterium data. This only affects the amplitude of the temperature change, not the timing of the start and end of the cooling period, nor does it affect the timing or amplitude of the CO2 lag. The Cuffey and Vimeux correction (btw, the data are not available in the NOAA Vostok database) gives a somewhat longer high-temperature level (which increases the covariance between CO2 and temperature), followed by a faster decrease until the minimum is nearly reached at the moment that the CO2 level starts to decrease. Thus there is simply no measurable influence of 40-50 ppmv CO2 on the temperature drop, even with a corrected temperature curve.

To make it clear, here are the time periods for the different changes:

Temperatures in K relative to the current temperature, uncorrected (corrected values in brackets, as far as can be deduced from the coarse graph in Jouzel ea.); dates in years BP (before present):

128,000-118,000: +2 -> -1.5 (+2 -> 0)

118,000-112,000: -1.5 -> -6 (0 -> -4)

112,000-108,000: -6 -> -7 (-4 -> -4.5)

108,000-107,000: -7 -> -4 (-4.5 -> -2)

107,000-103,000: -3.5 +/- 0.5 (-2 +/- 0.5)

CO2 levels

128,000-112,000: 270 +/- 10 ppmv

112,000-106,000: 270 -> 230 ppmv

106,000-95,000: 230 +/- 7 ppmv

In addition, the CH4 (methane) levels:

128,000-118,000: 700 -> 560 ppbv

118,000-112,000: 560 -> 460 ppbv

112,000-106,000: 460 +/- 20 ppbv

106,000-105,000: 460 -> 590 ppbv

105,000-102,000: 590 -> 490 ppbv

As you can see, CH4 closely follows the temperature changes, while CO2 lags so much that it can’t have any influence on the temperature drop…
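The timing argument can be restated as a short calculation on the commenter’s own coarse readings. This sketch only transcribes the intervals listed above (uncorrected temperatures; plateaus quoted as “x +/- y” are entered as flat), and `decline_onset` and `value_at` are hypothetical helper names. It reproduces the lags implied by those numbers and says nothing by itself about cause and effect.

```python
# (start_BP, end_BP, value_at_start, value_at_end), transcribed from the lists above.
temperature_uncorrected = [
    (128_000, 118_000, 2.0, -1.5),
    (118_000, 112_000, -1.5, -6.0),
    (112_000, 108_000, -6.0, -7.0),
    (108_000, 107_000, -7.0, -4.0),
    (107_000, 103_000, -3.5, -3.5),  # "-3.5 +/- 0.5" entered as flat
]
co2_ppmv = [(128_000, 112_000, 270, 270), (112_000, 106_000, 270, 230), (106_000, 95_000, 230, 230)]
ch4_ppbv = [(128_000, 118_000, 700, 560), (118_000, 112_000, 560, 460), (112_000, 106_000, 460, 460),
            (106_000, 105_000, 460, 590), (105_000, 102_000, 590, 490)]


def decline_onset(series):
    """Start (years BP) of the first interval in which the value falls."""
    for start, end, v0, v1 in series:
        if v1 < v0:
            return start
    return None


def value_at(series, year):
    """Value at an interval boundary, if that year is a listed boundary."""
    for start, end, v0, v1 in series:
        if start == year:
            return v0
        if end == year:
            return v1
    return None


t_onset = decline_onset(temperature_uncorrected)
co2_onset = decline_onset(co2_ppmv)
print("temperature starts falling at", t_onset, "BP")
print("CH4 lag:", t_onset - decline_onset(ch4_ppbv), "yr")
print("CO2 lag:", t_onset - co2_onset, "yr")
print("temperature when CO2 starts falling:", value_at(temperature_uncorrected, co2_onset), "K")
```

On these numbers, the uncorrected temperature and CH4 both start falling at 128,000 BP and CO2 only at 112,000 BP, by which point the temperature anomaly has already reached -6 K of its -7 K minimum.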

Re #51,

See Hans Erren’s calculations…

Re #66: “There are many scientists who express scepticism about current global warming theories.”

Disagreement about the details? Sure, lots. Disagreement with the consensus (anthropogenic GHGs causing significant global warming) by scientists qualified to make the judgment? Very, very little. “Many (skeptic) scientists?” Palpably false. If you want your other points taken seriously, it’s probably best to not make assertions of that sort.

Re #66; There are simple answers to each of your eight points, but together your eight points do not constitute the basis for a contrary theory. It is to this that the conclusion of #63 referred, and with this that I agreed.

Regarding your eight queries, I would say that (aside from the last question which is rather off topic) you don’t raise any issues that aren’t already in the basic repertoire of geophysics. So while it may be worth taking the time to address them, the result can only be a painstaking effort to convey geophysical understanding in a didactically disorganized way.

Like a toddler playing the “why” game, you can always find more elementary questions to ask. Producing this sort of question is dramatically easier than formulating a correct and compelling answer.

If you are genuinely interested, perhaps we can discuss your background and then we can recommend a course of study. Otherwise, it doesn’t really pay to address these questions one by one and in depth.

I would rather focus on your third paragraph in #66, which betrays, I think, a misunderstanding of the scientific method and of the extraordinary community that enables it to work.

You say: I take issue with your comment in which you say there aren’t relevant or meaningful opposing views among scientists. There are many scientists who express scepticism about current global warming theories.

While the truth of this assertion depends sensitively on the meaning of “many”, you are arguing against a point I did not make. What I said was that among the skeptics, no one has produced a coherent alternative explanation of how things might work such that greenhouse gas accumulations would be a matter of small consequence. That is, as regards anthropogenic climate change, there is a consensus position and there is some number of doubters, but there is no alternative school of thought, no coherent alternative theory.

You say: Moreover, I refuse to accept consensus as the standard by which I measure whether or not a theory is accurate.

While I’ve heard this sort of thing lately, I find it disturbing and peculiar in the extreme. Any scientist will attest to the value of asking other scientists questions in neighboring disciplines. The probability of a single scientist making an assertion regarding his or her own field with very little qualification that turns out to be unfounded is quite small. The probability that unanimous unqualified agreement among several scientists turns out to be unfounded is vanishing. This is exactly the basis on which science can proceed, because far more is now known than any individual can check. We have confidence in this methodology because some of us do wander from one field to another, and when we do check, we find that assertions that are claimed to be on a sound basis in a rigorous science almost invariably turn out to be true.

Without consensus, we have no science, and so we must proceed blindly on what we can each figure out alone.

In the present case, we have fifteen-year-old predictions of climate changes that would be highly unlikely under natural variability, and those predictions show every indication of being basically correct. We have a community of scientists that finds this outcome unsurprising, if perhaps disturbing. We have a formal international consensus process that continues to refine the predictions but finds little basis to question them. We have a detailed report issued by that consensus body along with numerous references to the primary literature. We have essentially unanimous agreement from relevant scientific organizations (e.g., the American Meteorological Society, the American Geophysical Union, the American Association for the Advancement of Science, the National Academy of Sciences).

On the other side, we have a contingent of political organizations and opinionated publications that call this consensus into question. This group is the more politically sophisticated, but it draws on perhaps two dozen arguably qualified scientific professionals who, among them, have nothing resembling an alternative theory with explanatory or predictive power.

In this situation, arguing from authority must carry some weight. Human progress is built on intellectual and moral trust. Consensus in science is not achieved lightly and shouldn’t be challenged as if it were a mere intellectual fad. Let’s see some alternatives with equal explanatory power before we go throwing out the opinions of established science. At present there are not two equal sides to “the issue”. In a scientific sense, what the public sees as “the issue” has been framed by nonscientists. At present, there really is nothing in science that resembles “the issue” usually framed as “believing in global warming”.

Public discourse is not advanced by what can only be called impertinent questions. If we were in a more civilized phase of society, we would see far less of both credulity and incredulity here, more trust in the scientific community to converge upon truth and give honest evaluations of uncertainty. Then we might get more exploration of the nature of the relevant evidence and its consequences, ideally leading to responsible and informed policy debates and policy decisions.

RE: #75 and all my other friends here :-)

In deference to Mr. Tobis’s comment, perhaps we should take this to another forum, if others are interested. Any suggestions? I have a blog that I am willing to open up for comments without censoring, or perhaps a more neutral forum?

Re 46, 51, 74

Hans Erren’s napkin is hardly an authoritative source. I find it quite bizarre that he can neglect feedback, the atmosphere, and most of the available data, and then write “That’s physics. All the rest is models and hype.” His calculation is physics about as much as tossing a coin is economics. And what is physics anyway, if not testing of models with data?

Re #51 and #57,

I used Hans Erren’s calculations to give a quick response, but have now derived the result with the online Modtran calculation program:

Settings: looking down from 70 km, no rain or clouds, water vapour scale at 1 (= no increase), and CO2 levels of 280 and 560 ppmv. With CO2 at 560 ppmv, I adjusted the ground temperature offset until the outgoing radiation was back at its original value for 280 ppmv, thus restoring dynamic equilibrium. The result:

Tropics: +0.88 K

Mid-latitudes: winter +0.76 K, summer +0.84 K

Sub-arctic: winter +0.63 K, summer +0.71 K

1976 US standard atmosphere: +0.86 K

(I suppose that the latter is a kind of world average)

If one includes clouds in the US standard atmosphere, the increase in temperature, necessary to reach the equilibrium is lower: +0.62 K for cumulus and +0.81 K for standard cirrus (the latter rather unexpected, as cirrus clouds are thought to increase temperature by reflecting more IR energy back down than they reflect incoming light back to space?).

Thus, based on spectral absorption alone, the warming from a doubling of CO2, including clouds, seems to be very close to what Hans Erren calculated and lower than what the IPCC proposes. I don’t know what causes the difference.
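The procedure Ferdinand describes (raise the surface temperature offset at 560 ppmv until the outgoing radiation matches its 280 ppmv value) is a root-finding problem. Modtran itself cannot be reproduced here, so this sketch swaps in a deliberately crude stand-in: a hypothetical grey-body `outgoing_longwave` function that emits at an assumed 255 K radiating temperature and subtracts the simplified 5.35 ln(C/C0) W/m^2 CO2 forcing discussed in this thread. Only the search procedure, not the radiative physics, should be taken from it.

```python
import math

SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def outgoing_longwave(co2_ppmv, temp_offset_k, t_radiating=255.0, co2_ref=280.0):
    """Hypothetical grey-body stand-in for a Modtran run (NOT Modtran):
    emission at the radiating temperature minus the simplified CO2 forcing."""
    forcing = 5.35 * math.log(co2_ppmv / co2_ref)
    return SIGMA * (t_radiating + temp_offset_k) ** 4 - forcing


def balancing_offset(co2_new, co2_ref=280.0, lo=0.0, hi=10.0, tol=1e-6):
    """Bisect for the temperature offset that restores the reference outgoing flux."""
    target = outgoing_longwave(co2_ref, 0.0)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if outgoing_longwave(co2_new, mid) < target:
            lo = mid  # still emitting too little: warm further
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(f"offset needed at 560 ppmv: {balancing_offset(560.0):.2f} K")
```

Bisection is used because outgoing radiation rises monotonically with the temperature offset, so the balancing offset is bracketed between 0 and 10 K; with a real radiative transfer code the same loop would simply call the model instead of this toy function.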

I am still reading this dense thread. However, initially, let me offer the following casual remarks: Looking at the graph of CO2 cycles over 600 kYears I would tend to draw parallels as some of your contributors have done, like the architect drawing layers with acetate transparencies, to look at alignments with geologic and archeologic milestones which are familiar to me.

From a purely mathematical perspective, I was tempted to assess the rate of change that the width of one dot on the graph represents. As I understand it, the EPICA core mostly adds newly recovered, older CO2 cycles, which highlights how astounding the recent rise far above historical levels is over so short a timeframe. To this novice reader it appears that adding 60 dots above the 0th kYear would yield the current CO2 level in 2005; yet the natural cycles usually take 20 kYears rather than 0.1 kYear, which makes our curve exceedingly steep in 2005.

It is probable that this distinguished assemblage of scientific thinkers has already carried out a thermodynamic analysis as well. My first idea was to measure the total energy output of all the fossil fuels humans have burned in the past 0.1 kYears and then compare that total with archeologically known past times when other large energy releases occurred, for example volcanic activity, meteor impacts in the atmosphere, and other events which released similarly large quanta of energy into the atmospheric system.

I will study your data with earnest interest. You have my congratulations and heartfelt appreciation for the arduous effort it has required to arrive at this new look at another 200 kYears of past history from a climatologic perspective.

Re #79: Hans Erren’s calculation does not make any sense to me. He starts with the standard formula for calculating the radiative forcing for a change in carbon dioxide levels:

$$\Delta E = 5.35\,\ln\!\left(\frac{[\mathrm{CO_2}]}{[\mathrm{CO_2}]_{\mathrm{orig}}}\right)$$ in watts per square meter.

Fine, but then to calculate the temperature change this will cause, he substitutes this into the derivative of the Stefan-Boltzmann equation, producing

$$\Delta T = \frac{5.35\,\ln\!\left([\mathrm{CO_2}]/[\mathrm{CO_2}]_{\mathrm{orig}}\right)}{4\sigma T^3}$$ where T is the Earth’s average surface temperature.

The amount of radiative forcing due to carbon dioxide has nothing to do with the Earth’s original temperature, but here it seems to depend on its cube. This is clearly incorrect.

Can someone give me a link to the on-line Modtran calculation program so I can understand what Ferdinand is doing with it?

#77 – I have just set up a talkshop forum at

http://www.lifeform.net/talkshop

It’s wide open, and anyone is welcome, for a lively flame war, or whatever. I’ll be working on hacking a bigger post_form box for it soon, as the one that came with it is quite small. It’s also HTML compatible.

Thomas Lee Elifritz

http://cosmic.lifeform.org

Re #51 and #79: I believe that one thing that Hans Erren’s calculation is neglecting is that the earth is not a blackbody. Rather, its albedo is ~0.3 which means it only absorbs about 70% of incoming radiation. If one corrects for that, the 0.7 C that Hans calculates becomes about 1.0 C.

In reality, it would probably be more accurate to use the absorptivity in the infrared spectrum and I am not sure what that is. I would assume that Houghton’s estimate of 1.2 C comes from some sort of more careful calculation of this sort. It would probably be wise to learn more about it before believing any derivation that gets a different result.

Re #81: Well, as far as it goes, I think the basic principle of this calculation is correct. The earth’s temperature comes into it because you are calculating how much the temperature of the earth needs to increase so that the extra radiation it emits compensates for the radiative imbalance induced by the increase in greenhouse gases.

But, as others have pointed out (e.g., #78), while such a back-of-the-envelope approach is useful for some basic intuition and understanding, it ignores the very important physics of the feedbacks, etc. by deceptively classifying them as “models and hype”. And, it ignores for example the increasing evidence (some of which is the subject of another recent posting here on RealClimate) that the water vapor feedback that considerably magnifies the “bare” warming is indeed being treated approximately correctly by the models.

Really, if you read the ways that people attack the theory of evolution and the way they attack the theory of anthropogenic climate change, you can’t help but be struck by the parallels. And, of course, what they have in common is that the accepted science contradicts strongly held political or religious beliefs or economic interests. That is really what much of the argument is about.

Re #80: If you try to do an estimate of the amount of energy released by combusting fossil fuels, you will find it is totally insignificant relative to the amount of energy we receive from the sun. Global warming is not about us directly warming the earth by producing energy…rather it is about changing the radiative balance of the earth so that it absorbs more (or re-emits less) of the abundant supply of energy that it receives from the sun.
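An order-of-magnitude version of this point, as a sketch. The inputs are assumed round numbers rather than figures from this thread: about 15 TW of global primary energy use, about 240 W/m^2 of globally averaged absorbed sunlight, and a mean Earth radius of 6,371 km. The CO2 forcing uses the simplified 5.35 ln(C/C0) formula quoted in this thread.

```python
import math

EARTH_RADIUS_M = 6.371e6                        # mean Earth radius
AREA_M2 = 4 * math.pi * EARTH_RADIUS_M ** 2     # about 5.1e14 m^2

human_power_w = 15e12          # ASSUMED: ~15 TW global primary energy use (round number)
absorbed_solar_w_m2 = 240.0    # ASSUMED: global-mean absorbed sunlight, W/m^2
co2_doubling_forcing = 5.35 * math.log(2)  # simplified formula from this thread, ~3.7 W/m^2

# Spread all waste heat from energy use evenly over the globe
waste_heat_w_m2 = human_power_w / AREA_M2

print(f"waste heat: {waste_heat_w_m2:.3f} W/m^2")
print(f"fraction of absorbed sunlight: {waste_heat_w_m2 / absorbed_solar_w_m2:.1e}")
print(f"CO2-doubling forcing / waste heat: {co2_doubling_forcing / waste_heat_w_m2:.0f}x")
```

Even with generous assumptions, the direct heat from burning fuel comes out at a few hundredths of a W/m^2, roughly a ten-thousandth of the absorbed sunlight and about two orders of magnitude smaller than the forcing from doubled CO2.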

So, in other words, the thermodynamic analysis that you seem to be contemplating is asking the wrong question.

Let’s not criticize Hans Erren or others for leaving out feedback, since the question being addressed is what the temperature rise would be if we double CO2 but don’t allow any feedback. This is a counterfactual, but it is just a way of saying how big the role of feedback is.

Hans Erren’s calculation is almost right. The T**3 comes from finding the slope of the Stefan-Boltzmann law. The only thing you have to be careful of, in computing this slope, is that the T shouldn’t be the surface temperature. Rather, it should be the radiating temperature of the planet, since the greenhouse effect means the Earth radiates at a colder temperature than the surface. The radiating temperature is about 255 K. This gives a sensitivity coefficient a = 4 sigma T**3 = 3.76 W/m**2/K. Then, with the canonical 4 W/m**2 for doubling CO2, you get a temperature increase of 1.06 K (larger than Ferdinand, smaller than Houghton/IPCC). By the way, one should be cautious of using the Earth’s albedo to infer the Earth’s “emissivity” (as in comment 83). The statement of Kirchhoff’s Law for “average” emissivity has some assumptions about the wavelength-weighting built into it, and one can’t generally go from the visible albedo to the IR emissivity. (It sort of works out anyway, because of some peculiarities of the constraints imposed by energy balance, but it’s not really a correct argument.)

Now, using Modtran, Ferdinand gets a lower number. I’ve done this calculation from time to time myself over the past couple of years, and if I bang in some standard midlatitude profiles, I also get numbers lower than IPCC for the unamplified response. This is not mainly because the sensitivity coefficient is different from the idealized Stefan-Boltzmann calculation, but because the CO2 radiative forcing tends to be on the low side. However, the sensitivity coefficients and radiative forcing depend on the base-state relative humidity, the tropopause height, and cloudiness. You can get bigger numbers in dry tropical conditions. The number usually quoted in the IPCC comes from doing a geographically resolved version of what Ferdinand did with the online Modtran model, and I presume that it’s the geographical variations that bump up the number. I’ve never actually checked this calculation myself, but the number used in IPCC seems consistent with what I’ve seen from diagnostics of GCM simulations.
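The numbers in this explanation can be reproduced in a few lines. This is a sketch assuming round values for the solar constant (1366 W/m^2) and planetary albedo (0.3), which are not given in the thread; the global energy-balance relation sigma*Te^4 = S(1 - albedo)/4 used here is one standard way to arrive at the ~255 K radiating temperature. The same linearised formula is also evaluated at a 288 K surface temperature, which is what produces the ~0.7 K figure mentioned earlier in the thread.

```python
import math

SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiating_temperature(solar_constant=1366.0, albedo=0.3):
    """Effective emission temperature from global energy balance:
    sigma*Te^4 = S*(1 - albedo)/4. Default inputs are ASSUMED round values."""
    return (solar_constant * (1 - albedo) / (4 * SIGMA)) ** 0.25


def no_feedback_warming(forcing_w_m2, temperature_k):
    """Linearised Stefan-Boltzmann response: dT = dF / (4 sigma T^3)."""
    return forcing_w_m2 / (4 * SIGMA * temperature_k ** 3)


print(f"Te from energy balance: {radiating_temperature():.0f} K")
for label, t in (("radiating temperature", 255.0), ("surface temperature", 288.0)):
    coeff = 4 * SIGMA * t ** 3
    print(f"{label} ({t:.0f} K): 4*sigma*T^3 = {coeff:.2f} W/m^2/K, "
          f"dT(4 W/m^2) = {no_feedback_warming(4.0, t):.2f} K, "
          f"dT(5.35 ln 2) = {no_feedback_warming(5.35 * math.log(2), t):.2f} K")
```

Using 255 K gives the 3.76 W/m^2/K coefficient and about 1.06 K for 4 W/m^2; using the 288 K surface temperature instead lowers the result to roughly 0.7 K, which is the source of the discrepancy.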

Ferdinand’s conclusion that the glacial/interglacial CO2 and temperature point towards a sensitivity in the lower end of the IPCC estimates is incorrect, though. This calculation has been done more carefully for the LGM, using tropical temperature as a proxy, and I’ve been working on using SH temperature as a proxy. If anything, the LGM results point towards a sensitivity in the mid to higher range, though it turns out that the LGM data doesn’t constrain the sensitivity nearly as much as one would hope.

One way of putting it is that the NCAR climate model gets about the right cooling in the SH during LGM times, and the cooling in the SH is mostly due to CO2 amplified by clouds and ice. Therefore, there can’t be anything much wrong with the feedbacks in the NCAR model — and presumably not with the forecasts for the future either.

Re: #77, “In deference to Mr. Tobis’s comment, perhaps we should take this to another forum, if others are interested. Any suggestions? I have a blog that I am willing to open up for comments without censoring, or perhaps a more neutral forum?”

Two things about this.

1. Are you trying to get others to another, unmoderated forum so you can get away with making ad hominem attacks?

2. “A more neutral forum”? The moderators of RC are neutral. They have to be, in order to be the respectable and reputable scientists they are. If they were not neutral, seldom would they be published in peer-reviewed journals.

Also, they are neutral, since they are not trying to grab funds from lobby groups and special interests. True scientists, they are, since they are among those who wish to keep science pure of tampering.

#73 Ferdinand, could your cited cooling period have something to do with obliquity (or the precession of the equinoxes)? It would be nice if the graph above included obliquity values…

Re #85: Raypierre, you said: “The only thing you have to be careful of, in computing this slope, is that the T shouldn’t be the surface temperature. Rather, it should be the radiating temperature of the planet“. This was explained to Hans over a year ago. Good luck with getting him to update his page!

Looking back over the posts that touch on the issue of leads and lags, the relief is almost palpable. What would have happened if things had turned out differently and Siegenthaler et al found that CO2 leads temperature by 1900 years? Quel horreur: we’d all have to become sceptics!

Re #86: Indeed the same point has been explained to Hans by different people on multiple occasions, so there’s no excuse for missing it. Yet the page remains unchanged, and people keep citing it…

Thanks Raypierre for this (#85) explanation, it gives a lot of information…

About Archer’s on-line Modtran program (for those interested, you can find it here), this includes a “1976 U.S. Standard atmosphere”, is that not some kind of geographically weighted global average, as the IPCC used?

The difference between this calculation (0.86 K for a CO2 doubling in clear skies, 0.62-0.81 K with different cloud types) and the IPCC’s estimate (1.2 K) is quite substantial…

About the model results in glacial/interglacials, more to come…

Tom, if you read further on my page

http://home.casema.nl/errenwijlens/co2/howmuch.htm

you will see, in the comparison table, a note added 7 February 2004:

The Stefan-Boltzmann climate sensitivity has a value of 1.05 K/2xCO2, which is pretty close to Pierre’s number.

Now 1K/2xCO2 is also the high frequency climate sensitivity associated with eg Pinatubo cooling.

For GHG warming estimates up to 50 years ahead, this low high-frequency climate sensitivity (transient sensitivity) is far more important than an equilibrium sensitivity with a typical timescale of several centuries.

Any climate model that does not honour observed low transient climate sensitivities is falsified.

(this message is shadowposted at ukweatherworld)

[Response: The Pinatubo comment is bogus. See Wigley et al (2005) or Frame et al (2005) for a more serious discussion of whether Pinatubo constrains climate sensitivity (only the long tail really does). -gavin]

Wow it’s already posted!

Gavin, I identified Frame et al (2005) as

Frame, D. J., B. B. B. Booth, J. A. Kettleborough, D. A. Stainforth, J. M. Gregory, M. Collins, and M. R. Allen (2005), Constraining climate forecasts: The role of prior assumptions, Geophys. Res. Lett., 32, L09702, doi:10.1029/2004GL022241.

http://www-atm.physics.ox.ac.uk/user/das/pubs/constraining_forecasts.pdf

“Wigley et al 2005” is ambiguous, can you please specify?

I was referring to

Climate forcing by the volcanic eruption of Mount Pinatubo

David H. Douglass and Robert S. Knox GEOPHYSICAL RESEARCH LETTERS, VOL. 32, L05710, doi:10.1029/2004GL022119, 2005

About models in general: I have (had, now retired) some experience with models, though for chemical processes, not climate. For a multivariable process with several inputs and many feedbacks, as climate is, there are a lot of problems, even if all physical parameters and feedbacks were known to sufficient accuracy (which is not the case for climate). And it is possible to get the right output (temperature and humidity/precipitation trends) for past and present periods with different sets of sensitivities, depending on the feedback factors and the starting point used.

Back to the glacials/interglacials. In all pre-industrial periods I have met in the literature, the temperature change is the initiator (Milankovitch cycles; less sure or unknown for other events). CO2 follows with some (huge) lag. In most cases there is a huge overlap of the trends, which makes it impossible to know how large the influence of CO2 on the temperature change is, including the huge feedbacks which come from ice albedo. Only at the onset of the last glaciation is the lag of CO2 so large that CO2 starts to decline at the moment that the temperature is near its minimum. That means that the change in temperature happened without the help of CO2 changes (but maybe with some help from CH4 changes). And as the CO2 change caused no decrease in temperature (there was even an increase of 3 K, or 2.5 K corrected, at 108 kyr BP while CO2 levels were falling), its influence including all the feedbacks (but probably without ice albedo feedback) is minimal. There were several (relatively) sudden temperature increases in the middle of the last glacial, where temperature changes lead while CO2 lags by some 1,200 +/- 700 years; one of these events (A1) has a temperature increase period of only some 1,000 years. See Fig. 2 of Indermühle ea. and the explanation on the same page. This too points to a lower influence of CO2 (+ feedbacks).

Of course, some of the climate effects are well known, like the direct effect of a CO2 change, and maybe the direct water vapour feedback induced by higher temperatures. But the direct/indirect impact of aerosols is far from sure (and probably overestimated; see the recent findings of Philipona), the indirect impact of solar variations (maybe via UV/stratospheric propagation on cloud cover?) is probably underestimated (see e.g. Stott ea.) or even ignored in most models, and last but not least, cloud reactions in the models are far from perfect, both in the tropics (see Allan & Slingo) and in the Arctic; see the Cicero article:

“There is a significant deviation between the models when it comes to cloud cover, and even though the average between the models closely resembles the observed average on an annual basis, the seasonal variation is inaccurate: the models overestimate the cloud cover in the winter and underestimate it in the summer. The average extent of sea ice varies greatly from model to model, but on average the models show a fairly realistic ice extent in both summer and winter.”

Thus the models are right (in result) for the wrong reasons (the change in winter cloud cover overrides all the extra heat from warmer inflow and GHG changes and albedo changes in summer!), and that doesn’t guarantee the accuracy of any future projection. This may be the case for models in other time periods too (as the models probably have the wrong sign for the cloud cover influence…). It would be interesting to rerun a few models for the LGM to Holocene period (and possibly other transition periods) with an increased response to solar variations, a differentiated cloud response (especially in the tropics and around the poles) and a decreased influence of CO2…

An excellent news release about an upcoming speech by Lord May, head of the Royal Society:

“Impact of Climate Change ‘Can be Likened to WMD'”

http://www.commondreams.org/headlines05/1129-04.htm

A partial analysis of the temperature trends found in the EPICA graph above does not explain well what happened, or what caused the peaks and dips in temperature; one must include obliquity and other long-term geological events to get a better idea. Writing off CO2 as the cause of these changes can’t be done without a complete and thorough analysis. My understanding is that the regular cooling periods are triggered by astronomical events; not mentioning them is a puzzle. EPICA’s outstanding work really shows, at the very least, that we have never had so much CO2 in our atmosphere over the last 650,000 years, a signature of the modern age, and of a different climate to come.

Re #87,

Wayne, it is quite difficult to find the exact timing of the different cycles around the glacial/interglacial transitions. One source I have found is the Ice Core Working Group, where Fig. 2 (Vostok trends) needs to be compared with the various cycles that may influence climate (see Fig. 9). It looks like the 110,000 and 90,000 BP peaks in equinox may be related to lower temperatures in the Vostok ice core. But data for the start of the last glaciation are lacking there, or very coarse in other trend figures (see e.g. Wikipedia). For the Arctic, there is a good correlation with the cycles anyway.

While looking for the Milankovitch cycles, the Ice Core Working Group has some remarkable findings on solar variation in the Holocene:

“Particularly exciting results from high-resolution ice cores include the observation that many geochemical parameters show strong spectral power at frequencies close to or identical to those observed in the sun. Worldwide coolings during the Holocene have a quasi-periodicity of 2600 years in phase with previously defined ~2500 year variations in δ14C (e.g., Denton and Karlen, 1973; Stuiver and Braziunas, 1989, 1993; O’Brien et al., 1995). Also, δ18O in the GISP2 core is coherent with both the ice-core 10Be time-series and with the tree-ring record of atmospheric 14C (Stuiver et al., 1995; Figure 11). Remarkably, the series are coherent not only in phase but also in amplitude, providing what is probably the best evidence to date for the elusive sun-climate relationship, a subject of debate for more than a century.”
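As a rough illustration of what “strong spectral power” at a given period means, here is a minimal periodogram sketch in plain Python. The data are entirely synthetic (a 2600-year sine wave plus random noise, not real ice-core or 14C data), and the function names `periodogram` and `dominant_period` are hypothetical, not taken from the Ice Core Working Group. It only locates the spectral peak in a single series; the phase and amplitude coherence between different records described in the quote needs cross-spectral analysis, which this sketch does not attempt.

```python
import math
import random


def periodogram(series, dt):
    """Return (period, power) pairs from a plain discrete Fourier transform.

    series: evenly spaced samples; dt: sample spacing in years.
    The mean is removed first and the zero-frequency term is skipped.
    """
    n = len(series)
    mean = sum(series) / n
    x = [v - mean for v in series]
    result = []
    for k in range(1, n // 2 + 1):
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        result.append((n * dt / k, re * re + im * im))
    return result


def dominant_period(series, dt):
    """Period (in years) that carries the most spectral power."""
    return max(periodogram(series, dt), key=lambda pair: pair[1])[0]


# Synthetic record (NOT real data): 26,000 years sampled every 100 years,
# a 2600-year cycle of amplitude 1 plus Gaussian noise with sd 0.5.
rng = random.Random(42)
DT = 100
synthetic = [
    math.sin(2 * math.pi * (i * DT) / 2600) + rng.gauss(0.0, 0.5)
    for i in range(260)
]
print(f"dominant period: {dominant_period(synthetic, DT):.0f} years")
```

The record length is chosen as an exact multiple of 2600 years so the cycle falls on a single frequency bin; with real, irregularly dated proxy records the peak would be broader and the analysis considerably more involved.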

Re #85: Thank you, Raypierre, for correcting my attempt to criticize Erren’s calculation for radiative forcing. Can you explain where the figure for the radiating temperature of the planet comes from? I would guess it is possible to directly measure it by satellite. The equation makes it look like an independent variable, but surely the radiating temperature goes down as greenhouse gas levels go up. Is there an equation describing this relationship?

[Response: You can find a pretty good elementary discussion of the business of radiating temperatures and radiating levels in Archer’s textbook (available online, for now). For an explanation at the advanced undergraduate level I can refer you to the first radiation chapter of my own in-progress textbook. This chapter isn’t yet posted, but I’ll probably finish it and post it in the next few weeks. Look for it here. In general, the story goes as follows. Once you reach equilibrium, it’s not the radiating temperature that changes with the addition of more GHG. That has to stay the same, since the planet still has to get rid of the same amount of energy absorbed from incident sunlight. You can calculate this radiating temperature for any given planet by looking at the total energy absorbed (plus internal energy in the case of Jupiter or Saturn), setting this energy equal to sigma * Trad**4, and then solving for Trad. Alternatively, if you have measurements of net outgoing infrared from a satellite platform (e.g. ERBE), you can set the net infrared to sigma * Trad**4 instead. In equilibrium, you should get the same answer both ways. What happens when you add a greenhouse gas is that the radiating level goes to higher altitudes, so you are extrapolating along the adiabat a greater distance to reach the ground and hence get a higher temperature (see my water vapor article a few posts down for more details). –raypierre]
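The radiating-temperature bookkeeping described in the response above can be sketched in a few lines of Python. This is a minimal sketch, assuming round illustrative numbers for Earth (solar constant 1361 W/m², albedo 0.30, a lapse rate of 6.5 K/km and a hypothetical radiating height of about 5 km); the function names are made up for illustration and do not come from any model or textbook. It keeps Trad fixed, as the response explains, and only raises the radiating level.

```python
# Minimal sketch of the radiating-temperature argument. The solar constant,
# albedo, lapse rate and radiating heights are illustrative assumptions.

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def absorbed_solar(solar_constant: float, albedo: float) -> float:
    """Globally averaged absorbed sunlight: S0 * (1 - albedo) / 4, in W/m^2."""
    return solar_constant * (1.0 - albedo) / 4.0


def radiating_temperature(flux: float) -> float:
    """Solve flux = SIGMA * Trad**4 for Trad (kelvin).

    flux can be the absorbed sunlight or a measured net outgoing infrared.
    """
    return (flux / SIGMA) ** 0.25


def surface_temperature(t_rad: float, lapse_rate: float, radiating_height: float) -> float:
    """Extrapolate linearly down a fixed lapse rate from the radiating level.

    lapse_rate in K per km, radiating_height in km above the ground.
    """
    return t_rad + lapse_rate * radiating_height


if __name__ == "__main__":
    absorbed = absorbed_solar(solar_constant=1361.0, albedo=0.30)
    t_rad = radiating_temperature(absorbed)
    print(f"absorbed = {absorbed:.1f} W/m^2, Trad = {t_rad:.1f} K")

    # Hypothetical radiating heights before and after adding a greenhouse gas:
    # Trad is unchanged, but the ground sits further down the adiabat.
    for height_km in (5.0, 5.15):
        t_s = surface_temperature(t_rad, lapse_rate=6.5, radiating_height=height_km)
        print(f"radiating level {height_km:.2f} km -> surface {t_s:.1f} K")
```

The linear extrapolation is a deliberate simplification: real lapse rates vary with height, latitude and moisture, so this only shows the direction of the effect, not its size.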

RE No. 95: “Impact of Climate Change ‘Can be Likened to WMD'”

From this headline, I imagined it would be an article suggesting the distortion of the experts’ views by the ‘politicians’ was similar in both cases.

I came across an article by Prof. Zbigniew Jaworowski pointing out artificially low CO2 concentrations in ice cores from below 200 m if the CO2 clathrates are not held at 5 bar and −15 °C. Is this done, or is there a way to sidestep it? The article is at http://www.warwickhughes.com/icecore/; it also shows the seemingly arbitrary selection of 19th-century atmospheric CO2 concentrations used to establish baselines. Comments?