by William Connolley and Eric Steig (translated by Pierre Allemand)

The 10 February issue of Nature contains an interesting paper, “Highly variable Northern Hemisphere temperatures reconstructed from low- and high-resolution proxy data”, by Anders Moberg, D.M. Sonechkin, K. Holmgren, N.M. Datsenko and W. Karlén (doi:10.1038/nature03265). The paper takes a new approach to the problem of reconstructing past temperatures from paleoclimate proxy data. A key result is a reconstruction showing more century-scale variability in mean Northern Hemisphere temperatures than earlier reconstructions show. This result will undoubtedly lead to much discussion and further debate over the validity of previous work. Nevertheless, it does not fundamentally change one of the most discussed aspects of that work: temperatures since 1990 still appear to be the warmest in the last 2000 years.


The novelty in this paper is the use of wavelets (a statistical tool in common use in computer image processing) to separate the high- and low-frequency components. The temperature reconstruction is then obtained by combining the high-frequency information (< 80 years) from tree rings with the lower-frequency information (> 80 years) from lake sediments and other proxies with multi-year resolution. This has two potentially important consequences. First, it permits the use of proxies that are not annually resolved (other recent reconstructions, such as MBH98 and Esper et al., used proxies with at least one value per year so that they could be calibrated against the instrumental record, whereas the Moberg approach can use records resolved only as 50-year means). Second, it discards the long-period information from tree rings, which the authors regard as unreliable. Other techniques have also been used or suggested for combining low- and high-frequency signals (Guiot, 1985; Rutherford et al., 2005).
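To make the frequency-splitting idea concrete, here is a minimal sketch in Python. It is not the procedure used by Moberg et al.: it uses a Haar-style block average as a simple stand-in for their wavelet transform, and the function names (`haar_split`, `combine`), the 80-step block and the toy series are all illustrative assumptions.

```python
import math

def haar_split(series, block):
    """Split a series into a low-frequency part (block means, as in a Haar
    approximation) and a high-frequency part (the residual)."""
    low = []
    for start in range(0, len(series), block):
        chunk = series[start:start + block]
        mean = sum(chunk) / len(chunk)
        low.extend([mean] * len(chunk))
    high = [x - l for x, l in zip(series, low)]
    return low, high

def combine(low_source, high_source, block):
    """Take timescales >= block from one proxy and < block from another."""
    low, _ = haar_split(low_source, block)
    _, high = haar_split(high_source, block)
    return [l + h for l, h in zip(low, high)]

# Toy data (hypothetical): 320 "years" with a slow swing and fast wiggles
years = range(320)
slow = [math.sin(2 * math.pi * t / 320) for t in years]
fast = [0.3 * math.sin(2 * math.pi * t / 8) for t in years]

sediments = slow    # stands in for a proxy that resolves only slow changes
tree_rings = fast   # stands in for a proxy trusted only at short timescales

merged = combine(sediments, tree_rings, block=80)
```

In this toy setup the long-term shape of `merged` comes entirely from `sediments`, which is the same property the post goes on to question: the low-resolution proxies end up setting the long-term signal.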

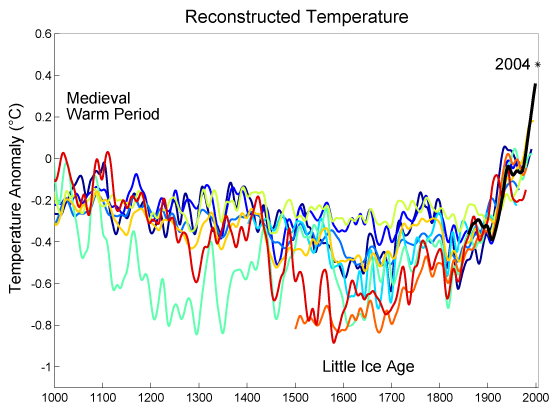

The result is a signal with more long-period variability than, for example, Mann, Bradley and Hughes, 1998 (MBH98). Moberg et al. show two “anomalously warm periods” in the smoothed curve, around 1000 and 1100, at 0°C on their anomaly scale. A few individual years within these periods reach almost +0.4°C relative to the mean. For comparison, the most recent instrumental measurements reach +0.6°C, and a little more in the post-1990 period, on the same scale. The coldest years of the so-called “Little Ice Age” fall around 1600 and lie at about -0.7°C relative to the mean, with some individual years reaching -1.2°C.

These results will revive interest beyond the scientific community, because of the “totemising” of the “hockey stick” shape of the earlier reconstructions (even though everyone agrees there was never any need for this “totemising”). We hope that press commentary on this paper which mentions the increased variability will also highlight the other main result: that there is “no evidence for any earlier periods in the last two millennia with warmer conditions than the post-1990 period, in agreement with previous similar studies (1-4,7)”, where (1) = MBH98, (2) = MBH99 and (7) = Mann and Jones (2003). The accompanying “News” article in Nature explicitly rejects the idea that this means we are not the cause of the current warming. It also quotes the statistician Hans von Storch (who has been fairly strongly critical of the earlier work): “it does not weaken in any way the hypothesis that recent observed warming is a result mainly of human activity”.

There are two technical issues with this paper that would have been worth discussing, and that the authors could not address for lack of space in Nature.

First, it is not very clear what the use of wavelets has contributed. Moberg et al. used wavelets to combine the high-frequency tree-ring data with lower-frequency data from other sources that have lower temporal resolution. But this means that the low-resolution proxies in effect become the dominant factor, with the tree rings simply adding high-frequency noise that the eye interprets as nothing more than a kind of error bar.

Second, because of the use of wavelets, they end up with a dimensionless signal that has to be normalised against the instrumental record between 1859 and 1979. So if (for example) their reconstruction is too flat over this period, the normalisation will amplify the variations in the pre-industrial period. Conversely, if it is too variable, they will be damped. The fit is made to match “mean value and variance”, but the description (in Nature) is rather brief. Is this the variance of the series after smoothing or before? If it is the raw series (which is what we assume), most of the variance probably comes from the interannual variations in the tree rings, *but* the part that really matters is the long-term signal. If it is the long-term signal that has to be matched, then there are only 1 or 2 degrees of freedom in the calibration period (1859-1979). This uncertainty shows up, in a sense, in figure 2a, which shows the original series used, on a scale in °C. Working through all these issues point by point may take a long time.
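The sensitivity to the calibration period can be illustrated with a short sketch. This is only an assumed form of “match mean and variance” (a linear rescaling), not the authors' code; `calibrate` and all the numbers are hypothetical.

```python
from statistics import mean, pstdev

def calibrate(recon, instrumental, cal_idx):
    """Linearly rescale a dimensionless reconstruction so that its mean and
    variance over the calibration indices match the instrumental record."""
    r_cal = [recon[i] for i in cal_idx]
    scale = pstdev(instrumental) / pstdev(r_cal)
    offset = mean(instrumental) - scale * mean(r_cal)
    return [scale * x + offset for x in recon]

# Hypothetical series: the first two values are "pre-industrial",
# the last two fall in the calibration period.
instrumental = [-0.2, 0.2]
cal_idx = [2, 3]
steep_recon = [-1.0, 1.0, -0.5, 0.5]
flat_recon = [-1.0, 1.0, -0.25, 0.25]   # same past, flatter calibration period

steep = calibrate(steep_recon, instrumental, cal_idx)
flat = calibrate(flat_recon, instrumental, cal_idx)
```

Both calibrated series agree with the instrumental values inside the calibration window, but the one that was flatter there ends up with pre-industrial swings about twice as large. Nothing about the past differs between the two inputs; only the calibration-period variance does.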

These questions will no doubt be answered, and the authors of other temperature reconstructions (including some of the RealClimate authors) will comment on the method. It is worth noting that, in any case, the results of Moberg et al., if they hold up, would require no change to the IPCC Summary for Policymakers, which says: “the increase in temperature in the 20th century is likely to have been the largest of any century during the past 1,000 years. It is also likely that, in the Northern Hemisphere, the 1990s was the warmest decade and 1998 the warmest year (Figure 1b). Because less data are available, less is known about annual averages prior to 1,000 years before present…” This last statement is largely based on MBH99. All of it remains true with the Moberg reconstruction, and is if anything somewhat strengthened, since their results extend 1000 years further back than MBH99.

Great article. I think that you are correct that it does act to support what the IPCC has said.

However I have some questions for the experts. I notice that Moberg uses the Sargasso Sea data by Keigwin. I was wondering how strong this data is? I think that Keigwin does a very good job of showing the changes in the Sargasso Sea but also the problems in the interpretation of this data (e.g. salinity) and the possible effects of changes in the NAO.

I also note that Keigwin appears to show a change in temperature of about 2.5C between the LIA and MWP (from his temperature graph but I believe he quotes a lower value in his text). This seems quite large and could this be part of the reason for his finding of more cooling during the LIA?

Finally, I know that some have interpreted his work as reflecting changes in the location of the THC as opposed to changes in overall temperature. Even Keigwin hints at it in a later paper but do climatologists tend to see it this way?

Thanks for the work.

Regards,

John

If the temperatures are highly variable in the Northern Hemisphere doesn’t that imply that the Northern Hemisphere is more sensitive to relatively small changes in temperature than other areas? I have read that it is, and does not this data back it up to some extent? That’s what I see here (layman). The variabilities on the graph show higher precision measurements and inferences from tree rings etc. The upward trend is still readily apparent, and in fact this reflects the warming trend rather nicely, although it is more pronounced here, like the canary in the coal mine.

[Response: If I can re-phrase your question, I would ask “if the temps are more variable, does that imply a higher sensitivity to forcing (in the future)?”. This question is apparently rather more complicated than I thought – I’m awaiting a post here from someone more expert to explain it to me… – William]

[Further Response: Greater climate sensitivity in the N. Hemisphere, and greater sensitivity overall, is definitely one possible explanation for the data (if, as is argued, the data reflect reality more than previous work does). See Crowley, 2000 in Science for a more detailed discussion of the implications of various climate reconstructions for climate sensitivity. But also see Gavin’s caveats to this in another Response below. –Eric]

Of course, the mere fact that the “totemizing” was propagated by the IPCC, the environmental lobby and especially the authors of this blog as “the scientific consensus” should cause disinterested viewers to wonder as to who is trying to fool who.

Isn’t it interesting that now Mann, Connolley et al don’t produce the “scientific consensus” and “peer-reviewed” rhetorical weaponry any more? Could it be because those two phrases, which have been used to bludgeon scientific debate into the ground, are now worthless after M&M were published with full and open review in Geophysical Research Letters?

Moberg et al 2005, on its own, does not prove anything definitive about climate. It does however destroy the pretensions of some people, not a million miles from this blog, that their previous work was “robust, to moderately high levels of confidence” when it was clearly nothing of the kind.

[Response: in fact the totemising has mostly been done by the skeptics. They used to lead on the satellite temperature record, until that started to show warming, and then different versions showed even more warming. M&M was reviewed by GRL, but certainly not “openly” – journal review is not an open process. As the post explicitly pointed out, Moberg doesn’t strongly affect the “consensus” – indeed everything written in https://www.realclimate.org/index.php?p=86 remains true with the Moberg record. The idea that this paper destroys previous work is wrong. As the post says, there will be a (scientific) debate, and an idea of what is correct will emerge – William]

I’m a rookie at this, so please bear with me while I get up to speed. Here are several questions about the data:

1) Where, exactly, do these temperature readings and reconstructions come from? Libya, for example, is in the “Northern Hemisphere,” as are Saudi Arabia, Greenland, the Azores, Hawaii, and large expanses of the Pacific and Atlantic oceans. Unless floating trees grow in the ocean, it would seem rather hard to obtain tree rings for much of the Northern Hemisphere.

2) The point of that first question, of course, is to seek the control group to whom you are comparing the test results. How do you separate the effects of human causation from whatever self-correcting mechanism occurs in nature over time? Since MBH98 shows many ups and downs over the course of 1000 years, it would seem self-evident that there exists some self-correcting mechanism or random variation in nature.

3) In order to assert human causation, I would think the data would have to show that, for example, Rocky Mountain National Park had continued unabated to the present day the cooling trend established from approximately 1750 through 1850, while the Houston Ship Channel area exhibited the warming trend since the onset of industrial activity. If both areas exhibit the warming trend, that would seem to argue persuasively against human causation, wouldn’t you agree?

Please educate me with data. Thanks.

[Response: What is being reconstructed is the hemispheric mean temperature anomaly for any one year. It turns out that while the actual temperature at a point is not very representative of the actual temperature at nearby points, the temperature anomalies are. Therefore, you don’t need to have perfect coverage of the whole hemisphere to get a good estimate of the hemispheric average anomaly. In fact as few as a couple of dozen strategically placed stations can give you a very good estimate indeed. Now the locations of available proxy data (tree rings, ice cores, ocean sediment records, corals etc.) are not necessarily optimally spread out, but the spatial sampling error is actually quite easy to calculate, and goes into the error bars shown on most reconstructions.

The reconstructions are not exercises in detection and attribution, which as you correctly point out can’t be done from the observations alone. You need information about the degree of intrinsic variability, estimates of the natural forcings (principally solar and volcanic), and estimates of the human related forcings (GHGs, land use change, aerosols etc.). This information is less reliable in the past, and so these reconstructions are not used directly in such calculations (although Crowley (2000) is an attempt).

Your last point implicitly assumes that all climate and climate forcings are local. This is not the case. Greenhouse gases are well mixed and have an effect globally, other forcings may be more regional (aerosols, land use) but they can still have far-field effects due to the nature of the atmospheric circulation. The detection of human causation is basically an exercise in explaining the variations we’ve seen. If you require that human influences be included to get a good match, then the influence is detectable. If not, then you cannot say that the human influence is significant. The ‘balance of evidence’ suggests that you do need the human influences, and that this is now (and increasingly) significant. – gavin]
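The distinction drawn in the response above between absolute temperatures and anomalies can be shown with a small sketch. The station values, the two-year baseline and the cos(latitude) weighting are illustrative assumptions, not the method of any particular reconstruction.

```python
import math

def anomalies(temps, baseline):
    """Temperature departures from the station's own baseline-period mean."""
    ref = sum(temps[i] for i in baseline) / len(baseline)
    return [t - ref for t in temps]

def hemispheric_mean(station_anoms, lats_deg):
    """Average station anomalies year by year, weighting by cos(latitude)
    as a crude proxy for the area each station represents."""
    weights = [math.cos(math.radians(lat)) for lat in lats_deg]
    n_years = len(station_anoms[0])
    return [
        sum(w * s[y] for w, s in zip(weights, station_anoms)) / sum(weights)
        for y in range(n_years)
    ]

# Hypothetical stations: (latitude, yearly temperatures in deg C).
# Absolute temperatures differ by ~29 degrees, but both warm by 0.5.
stations = {
    "tropical": (10.0, [26.0, 26.0, 26.5, 26.5]),
    "alpine": (60.0, [-3.0, -3.0, -2.5, -2.5]),
}
lats = [lat for lat, _ in stations.values()]
anoms = [anomalies(temps, baseline=[0, 1]) for _, temps in stations.values()]
mean_anom = hemispheric_mean(anoms, lats)
```

Averaging the raw temperatures of these two sites would say little about either, whereas their anomalies agree closely. That is why a sparse network can still give a usable estimate of the hemispheric mean anomaly.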

This is the first look I have had at a large version of the record from Moberg. What strikes me is that it closely follows the borehole data, and is somewhat like Esper et al., up to about 1550, and then switches tracks to follow MBH and co. Offhand this is a mystery to me, but as a guess I would have a real close look at the data sets they picked.

Just a small nit, wavelets are not a “statistical tool”.

[Response. You are technically correct. How’s this? “Wavelets were part of the toolbox that Moberg et al. used in their statistical reconstruction of climate variability.”

For those interested in knowing more about wavelets, there are many good books on the subject, including a nice non-technical one by the writer-spouse of a mathematician. Can’t recall the title offhand but the author is Hubbard I think. -eric]

[Response: if you’re interested, http://paos.colorado.edu/research/wavelets/ has a nice intro, and wavelet software for IDL, Matlab and Fortran – William]

One really simple question here: What are we to make of the “temperatures since 1990”? Is it weather, or is it climate? By every definition of *real* climate that I have seen, a minimum of 25 to 30 years of data are required to define climate, preferably longer.

So to what purpose do you compare the “weather” since 1990 with the previous “climate”? Perhaps some on this site are eagerly, impatiently, and prematurely anticipating the eventual emergence of a *real* CLIMATE signal that heralds an unquestioned anthropogenic impact on ***climate***. REAL CLIMATE! Why do I feel the need to shout?

[Response: In spite of the shouting, this is actually a reasonable question. It is certainly not weather we are talking about. If one uses the very reasonable definition of climate as the “mean statistics of the atmosphere”, then the question becomes “the statistics over what time period?” If we use a time period of one decade, then yes, all data estimates show that the 90s were the warmest decade in the last 1000+ years. Still, this doesn’t mean the period 2001-2010 or 2011-2020 will also be as warm. We’ll see. As for what I wish for… I’m a skier, and the ice core research I do requires very cold conditions, so I am hoping against hope for some cooler weather. -eric]

The key question is not whether the 90s were the warmest ever (or the late 20th century for that matter) but what the different reconstructions tell us about how climate responds to forcing. In this regard it’s important to consider the difference between Crowley et al (2000), who use an energy balance model with a sensitivity of 2.0 to get something like the MBH99 reconstruction, and the ECHO-G climate model, which has a sensitivity of 3.5 and a reasonable stratospheric component and gives something like Moberg.

[Response: This is not quite right. The sensitivity of ECHO-G is around 2.7 ºC. The difference in response between Zorita et al (2004) and Crowley (2000) is that the forcings were significantly larger in ECHO-G (by a factor of 2 for solar, and with larger volcanic forcing as well). The stratospheric component of ECHO-G is obviously better than in an EBM but many of the important factors that lead to this being important were not considered in those runs (i.e. the volcanic forcing was input as an equivalent TOA forcing, rather than as absorbing lower stratospheric aerosols, and no stratospheric ozone feedbacks on the solar forcing were included). – gavin]

Hi Gavin, all I have to go on is the paper itself, Zorita et al. They give the climate sensitivity of ECHO-G as 3.45 (p273), but this is from a doubling of present-day conditions so is a non-standard sensitivity, I think? They also say that solar values were ‘derived from the values used by Crowley (2000)’ (p274). For volcanic, they say ‘We stick to the same dataset used by Crowley (2000)’. You’re saying that they scaled these datasets differently? The way they handled volcanoes is a bit dodgy… I see from your 2003 paper that you don’t find much effect from volcanoes (on the decadal mean).

[Response: The 3.45 is not the equilibrium sensitivity, but the response at 2100 to a transient increase in CO2. The number I quoted is from a new study (still in press), but is similar to that reported (2.6) for a model with the same atmospheric component in IPCC (2001). In looking into the volcanic forcing, I have a feeling that there may be some confusion somewhere (not necessarily with Zorita et al) in converting the Crowley radiative forcing numbers to equivalent TSI forcing. I am checking the sources…. The solar forcing is definitely larger than in Crowley (by about a factor of 2). – gavin]
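For readers wondering what converting a radiative forcing into an “equivalent TSI forcing” involves, the usual back-of-envelope relation is sketched below. It is a textbook approximation assumed here for illustration, not the procedure of Crowley (2000) or Zorita et al.; $\Delta S$ is the change in total solar irradiance, $\Delta F$ the globally averaged forcing, and $\alpha \approx 0.3$ an assumed planetary albedo.

$$
\Delta F = \frac{1-\alpha}{4}\,\Delta S
\qquad\Longleftrightarrow\qquad
\Delta S = \frac{4}{1-\alpha}\,\Delta F \approx 5.7\,\Delta F
$$

The factor of 4 comes from spreading the intercepted sunlight over the whole sphere, and $(1-\alpha)$ removes the reflected part. A slip in either factor when moving between the two conventions would change the effective forcing by a large multiple, which is the kind of confusion the response says it is checking for.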

Re: #7: climate trends

When Eric says

he must mean that there is still some possibility that the current warming will flatten out or slow down or even reverse.

[Response: Yes. Climate varies on its own, on top of the long term trend that we are forcing it to have. I think the money is on 2011-2020 being warmer than 1990-2000. But if it isn’t it won’t make me think we missed something, or have some fundamental lack of knowledge. Of course, various pundits will claim “the scientists had it wrong”, but I guess we will cross that bridge if and when we come to it.]

Accepting that climate is the statistics of weather over some reasonable time period, I want to ask about something that climate modelling does not seem to address – thresholds.

Since there has been noticeably increased warming over the last 20 years or so and less so before that, is it possible that the forcing from GHGs in the atmosphere surpassed some threshold around then and that there is some feedback (outside the normal physics affecting water vapor) that has kicked in to create the observed warming? See this data for example. GHG levels were also quite high in 1984 – 344.5 ppm CO2 as measured at Mauna Loa – but the warming has risen dramatically since then. We are at about 379 ppm CO2 now (awaiting the official measurement, due soon).

Naively, it looks like something happened.

[Response: I very much doubt it. See my response to #11 below –eric.]
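As a rough check on the CO2 figures quoted in comment #10, the direct forcing change between 344.5 and 379 ppm can be estimated with the widely used simplified logarithmic fit. The formula and its 5.35 W/m² coefficient are not taken from this thread; they are an assumption brought in for illustration, and the sketch covers only the direct CO2 forcing, not any feedbacks.

```python
import math

def co2_forcing(c_ppm, c_ref_ppm, alpha=5.35):
    """Approximate radiative forcing (W/m^2) from a CO2 change, using the
    simplified logarithmic fit dF = alpha * ln(C / C_ref)."""
    return alpha * math.log(c_ppm / c_ref_ppm)

delta_f = co2_forcing(379.0, 344.5)        # 1984 value -> value quoted as "now"
doubling = co2_forcing(2 * 344.5, 344.5)   # forcing from a full doubling
print(f"{delta_f:.2f} W/m^2, {100 * delta_f / doubling:.0f}% of a doubling")
```

Under this approximation the increase since 1984 is about 0.5 W/m², a modest fraction of a doubling, and it grows smoothly with concentration. The sketch cannot say anything about whether some other part of the system has a threshold; it only shows that the CO2 forcing itself contains no step.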

Dave (#10)

Let’s suppose, just suppose, that we have just recently encountered the onset of a Dansgaard-Oeschger event. These events produce rapid climate warming which is followed by a gradual, steady cooling over many years (centuries, in fact). Superimposed on this event might be a true anthropogenic signal. However the D-O event itself is a phenomenon that is clearly within the bounds of natural variability.

Although there is little solid confirmation of the cause of these sudden D-O warmings, one of the most plausible explanations is based on a parallel phenomenon which is well known in the atmosphere. The oceanic boundary layer is driven by the identical thermodynamic processes that drive the atmospheric boundary layer. In the ocean there are dual buoyancy mechanisms (salinity and temperature) which drive boundary layer instability. In the atmosphere there is fundamentally only one dominant driving mechanism: temperature (although modulated by water vapor and the latent heat released by condensation into clouds).

The equivalent to a D-O event in the atmospheric boundary layer occurs when large amounts of “potential” instability accumulate, and the instability is then released in the form of violent thunderstorms. The phenomenon which delays the release of instability, while it continues to accumulate, is known as a “cap” — a stable layer which contains the instability until a threshold is reached at just one, most unstable location. Then the vast reservoir of instability is released explosively.

This is a process with large hysteresis. Instability accumulates far beyond an equilibrium state before its release is triggered. The system then restores itself to a relaxed stable state as the instability is released. And once this stable state is established, a new reservoir of pent-up instability begins to accumulate.

In the atmosphere these processes operate on time scales of hours to days. The oceanic boundary layer (the thermocline), with its much more vast reservoirs of heat, operates on much longer time scales. I contend that a likely trigger of the D-O events is the same sort of local break of an instability “cap” which initiates an intense episode of Deep Water formation — either in the North Atlantic or in the Antarctic seas (the latter is more likely the case in today’s climate). Over decades to centuries this instability is released (cold water which previously lingered at the surface is sequestered in the deep ocean), and the circulation gradually subsides, just as the explosive development of initial thunderstorms following the first breach of the atmospheric “cap” is followed by a succession of weaker and weaker storms.

As I said, let us *suppose* that such a D-O event was triggered some time in the last two decades. If so, then the prognosis as defined by the course of natural variability would be for a short period of rapid warming followed by a gradual, steady cooling.

Since D-O events are a fairly common feature of past climates, there certainly is precedent. Since our observational record is far too short to have documented any D-O event previously, we could be “blindsided” by the onset of such a phenomenon. And since we happen to be in an unprecedented period of increasing CO2, it would be exceedingly difficult to separate such an uncommon natural event (a D-O warming) from the anthropogenic signal.

What we think we know, as climate scientists, pales miserably in comparison to all the phenomena we have never observed with modern technology.

[Response: I would agree with much of this comment, though I’m far less sanguine about our understanding of D-O events, and I’m far less convinced of their relevance to modern climate. It seems increasingly clear that D-O events must involve major sea ice changes (and there is not much sea ice left, by comparison with what was present during the glacial period (20000+ years ago, when these events happened), so D-O events are increasingly unlikely in the future).

I would also add that the “prediction” made by #11 about what a D-O event would look like is based on the Greenland ice core records, and the picture of “abrupt warming/slow cooling” comes from the data on millennial timescales. It does not begin to describe what did actually happen globally during these events, and is even less relevant to what might happen in the future.

In any case, the key point is that there is no evidence to suggest we have crossed any sort of “threshold”. Comment #10 implies there are aspects of the data which suggest this. That is an “eyeball” approach to the data which is not backed up by any sort of analysis. So to #10, I would say again that the answer is firmly no: there is no evidence for any sort of threshold being crossed. -eric]

I think the money is on 2011-2020 being warmer than 1990-2000. But if it isn’t it won’t make me think we missed something, or have some fundamental lack of knowledge. Of course, various pundits will claim “the scientists had it wrong”, but I guess we will cross that bridge if and when we come to it.

Hang on a minute.

This site has consistently maintained that past climate variability had occurred within a fairly narrow range. It’s argued against the MWP and LIA – or their extent. It supports the hockey-stick representation of shallow fluctuations of climate over the past 1000 years and points to the sudden “unprecedented” increase in 20th century temperatures as evidence that increased CO2 levels are the main contributory factor. Now – apparently – if the next 20 years fail to show the expected level of increase – then it’s all down to natural variability. Never mind the continuing increase in atmospheric CO2 – what happened to the 0.5 degree rise due to equilibrium response to recent levels of GHGs?

I’d like to remind you we are now 15 years into the 1990-2100 period when everything is about to unfold. In 2020 we will be 30 years along the journey. This will be 32 years after James Hansen told the world that global warming was “happening now”.

If the ‘evidence’ in 2020 is no stronger than it is to-day then you’ve got at least 2 things wrong. Climate sensitivity (which I believe is about 1/3 of your claims) and past climate variability (which is probably wrong anyway). Apart from that – you’ll be spot on.

[Response: I would agree that the money is on 2011-2020 being warmer than 1990-2000. I would somewhat disagree that if it isn’t, there is no problem. Assuming CO2 levels increase roughly as expected, then it would (I would guess, plucking numbers somewhat out of the air) be better than 95% certain that the latter period would be warmer. What I think Eric was saying was that even given that, there is a small chance that natural variability could cause a downturn. That is the science point of view. But… from a political point of view, if there was a decade-long downturn in temperature, and all the attribution analyses showed it to be a temporary downswing with more warming expected later… no-one would listen – William]

[Response: Yes, William has properly interpreted my point. Another way to look at this is that while I won’t be that surprised if 2011-2020 is not warmer than 1990-2000, I will be very surprised if 2000-2030 is cooler than 1970-2000. The data show that natural variability on decadal timescales is non-trivial, but that on multidecadal timescales it becomes much more trivial. Having said all that, this is a little off-the-cuff. One could quibble with the details. My point is merely this: natural variability exists, and looking at individual decades is not really long enough. -eric]
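The point about decadal versus multidecadal averages can be illustrated with synthetic noise. This sketch says nothing about the real climate system: the AR(1) model, its persistence `phi=0.5` and the 0.1 noise amplitude are arbitrary assumptions chosen only to show how averaging over longer windows shrinks the spread of the means.

```python
import random
from statistics import pstdev

def red_noise(n, phi, seed=0):
    """AR(1) noise: x[t] = phi * x[t-1] + white noise (phi=0 is white noise)."""
    rng = random.Random(seed)
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 0.1)
        out.append(x)
    return out

def spread_of_means(series, window):
    """Standard deviation of non-overlapping window-length means."""
    means = [
        sum(series[i:i + window]) / window
        for i in range(0, len(series) - window + 1, window)
    ]
    return pstdev(means)

noise = red_noise(6000, phi=0.5)          # 6000 synthetic "years", no trend
annual = pstdev(noise)
decadal = spread_of_means(noise, 10)
multidecadal = spread_of_means(noise, 30)
```

With no trend at all, the 30-year means scatter less than the 10-year means, which in turn scatter less than individual years. A forced trend of a given size is therefore easier to distinguish from noise over three decades than over one.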

Re: #10, #11

I merely raised the possibility of a threshold being crossed, wondering whether there was any serious analysis that supported it. Of course I’ve seen the often used IPCC TAR result here showing that modelling results combining natural and anthropogenic forcings reproduce 20th century global mean surface temperature anomalies relative to the 1880 to 1920 mean. This would seem to imply that no threshold has been crossed.

However, Richard Alley, writing for a general audience in Scientific American (Nov, 2004) in an article entitled Abrupt Climate Change, spends a lot of time talking about thresholds (changes of state) using his “canoe tipping” analogy. He states that “many thresholds may still await discovery”. And we know how Wally Broecker feels about this. So, I was wondering what the thinking was about thresholds with respect to the current warming trend on this blog. I also think that paleoclimate scientists tend to see more possibility of abrupt climate changes than climate modellers do.

Also re: the current warming trend, Hansen and Sato expect 2005 to surpass 1998 due to a weak El Nino superimposed on the general anthropogenic forcing in this GISS press release. So, we know which way they’re betting. If this indeed comes to pass, and the warming grows in subsequent years, there may come a point when the signal exceeds the known forcings and feedbacks as currently modelled. At which point it might be decided that transient climate sensitivity is too weak or some unknown threshold has been crossed. Of course, this is all just pure speculation on my part. And hey! why not?

Finally, when I raise the possibility of some undetected threshold in the current warming signal, that doesn’t mean I believe it.

[Response: “Thresholds” stuff is quite difficult. On the one hand, they may be there “awaiting discovery”. OTOH, there is no good sign of them at the moment. One example is the THC “shutdown”, which was “predicted” by simpler models, but tends not to occur in the more sophisticated models, coupled AOGCMs. OTTH, although these models are capable of showing some “emergent” behaviour (ie, stuff not built into them), this may be limited. HadCM3+vegetation, for example, shows a speed-up of atmos CO2 in mid-C21 when the Amazon forests start to decline due to climate change. But if the “thresholds” were related to GHG levels, we would have noticed, because CO2 etc are continuously monitored. The bottom line is probably that whilst we should take the idea of sudden changes seriously, and investigate possibilities within the scientific arena, it wouldn’t work to take such stuff out into the wide world without a rather better basis. Analogies are nice, but they aren’t evidence, just guides to thinking – William]

The undetected “threshold” scenario that I posed in #11 could be more generally characterized as a shift in the thermo-haline circulation (THC) caused by an emergent new source region for deep water formation. The most obvious D-O (Dansgaard-Oeschger) events in the vicinity of the LGM (Last Glacial Maximum, 20,000 years ago) represent extreme examples of this scenario. The interactions (feedbacks) between THC shifts and sea ice and glacial calving (as indicated by ice-rafted debris in deep sea core records) would tend to seriously magnify the climate changes compared to what would be expected from a similar THC shift today.

But I’d like to move beyond the discussion of simple “thresholds”.

There is another class of slightly more subtle climate shifts that could be driven by long-time-scale ocean circulation processes which fall beyond the scope of observational recognition. The most tangible example, of how such a shift might appear, is the apparent recent emergence (last half of the 20th century) of a much more intense oscillation in, and particularly a much more intense positive phase of the Arctic Oscillation (AO, which is closely paralleled by the North Atlantic Oscillation — NAO).

The class of hypothetical climate shifts to which I allude involve fundamental changes in the frequency and amplitude of known oscillatory behavio(u)r of the atmosphere-ocean-cryosphere system and the potential emergence of new oscillatory behaviors.

We should not limit ourselves to being vigilant for the “crossing of thresholds”. The undiscovered effects that may emerge as we proceed with our uncontrolled experiment in anthropogenic forcing run the gamut from modulation of the frequency and duration of relatively short-term, well-characterized, cycles such as ENSO (El Nino – Southern Oscillation), AO and the Pacific Decadal Oscillation, through long-term oscillations, which have always been a part of the natural variability of the Earth-system, but which operate on such long time scales that they have not been properly recognized or understood (e.g. D-O type events), to the emergence of completely new modes of oscillation as climate is influenced by anthropogenic processes.

The statement: “there is no evidence” of such emergent changes (about which I’ve speculated), only means that vigilant climate scientists have not yet identified the possible evidence, perhaps because it does not exist (and my speculation is wrong), *or* because it has either been overlooked or is still in the early stages of emergence.

I hope you’re receptive to a follow-up on this interesting subject. I couldn’t agree more with your remarks. I wasn’t trying to be alarmist. Alley’s article really does emphasize non-linear state changes. I was puzzled by the “…related to GHG levels, we would have noticed…” statement. I take “threshold” to mean that GHG forcing could alter some natural variability like the Arctic Oscillation (which spends more and more time in its positive mode, increasing heat transport from mid-latitudes) or ENSO (more frequent or stronger El Nino events).

There tends to be an emphasis here at realclimate.org on the “hockey team” results (Mike prefers this term!) – for example, this posted subject, Moberg et al. And while the “hockey team” shows the late 20th century and current warming to be unusual, it is not as important in my view as the current warming trend itself. Why? Well, because that’s just how people work. Humans work on human timescales, which are short. I know that’s not the scientific view, but that’s reality.

So for example, here’s some bad luck, from the GISS press release cited in #13 and given the U.S. refusal to participate in Kyoto or otherwise make meaningful GHG reductions:

So when Eric says “Still, this doesn’t mean the period 2001-2010 or 2011-2020 will also be as warm. We’ll see”, citing some possible natural variability which masks a longer-term climate warming trend, I want to say “we’re doomed!, doomed!”.

This point of view guides my posts. I’m being as honest as I can be.

[Response: I assume that by your “we’re doomed” statement you are saying you worry that in the event it is not warmer in 2001-2010 than 1990-2000, this will damage our ability to get the word out. I think that is true, but it doesn’t change the fact that climate is highly variable and it might just work out that way. The natural world doesn’t always cooperate in a way that makes things easy to explain. Now, on the thresholds business, you should be aware that I’m probably more conservative on this than most in the paleoclimate research community, especially those (like Alley, and me) that have worked mostly on ice cores, looking at longer timescales. My take on this is that the case that “big non-linear responses” can happen is strong, but that the case that they will happen in the near or medium term is weak. I would echo William’s comment that this is an interesting and important area of scientific study, but I do not think it is well enough understood that it makes sense to spend a lot of energy “warning” people about it. The reality of global warming is, to paraphrase IPCC, “very well understood and very very likely” (and that is a statement few would disagree with). On the other hand, I would put the probability of non-linear threshold crossings in the near future as “poorly understood; probably not very likely” (and many might disagree with me). -eric]

The warmest year on record (1998), based on global temperature records from 1880-2004, was associated with the strong El Nino of 1997-98. As the THC slows, we can expect the frequency of El Nino to decrease and the strength to increase.

[Response: Not too sure about this: the jury is definitely still out on any possible impacts of THC changes on ENSO variability…. – gavin]

Is the jury out on stronger El Ninos as the planet warms?

[Response: In my view, yes. The paleoclimate data (from corals) indeed suggest it is more complicated than weaker/stronger. Some data suggest weaker El Nino in the mid-Holocene, for example, but perhaps stronger La Nina during the mid-Holocene. See the article by Tudhope, with accompanying commentary by J. Cole, in Science vol. 291 (year 2001). http://www.sciencemag.org/cgi/content/full/291/5508/1496 will get you there if you are a subscriber. The abstract can be viewed free at http://www.sciencemag.org/cgi/content/abstract/291/5508/1511 -eric]

It seems to me that the ECHO-G model results presented in Moberg et al. (2005), which support their paleo-reconstruction, rest largely on the solar forcing reconstruction over the past 2000 years. Yet historical solar forcings are *highly* uncertain (no?), especially before ~1610. On the other hand, if we assume their solar forcing to be adequate, the excursions in solar constant of ~5-7 W/m² would correspond to a forcing of ~0.9-1.2 W/m² at TOA, leading to an equilibrium response of the model of ~0.6-0.8 K globally, assuming a 2XCO2 sensitivity of 2.7 K.

But given the uncertainties in past variations of the solar forcing, the fact that ECHO-G agrees well with their data is a rather weak argument, no?
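The back-of-envelope estimate in the comment above can be reproduced with a short sketch. It is not taken from Moberg et al. or from ECHO-G; it assumes a planetary albedo of 0.3 and a doubled-CO2 forcing of 3.7 W/m², neither of which is stated in the comment, and the function names are purely illustrative. Under those assumptions the forcing comes out near 0.9 to 1.2 W/m² and the equilibrium response near 0.6 to 0.9 K, close to the figures quoted.

```python
def solar_toa_forcing(delta_s, albedo=0.3):
    """Global-mean TOA forcing (W/m^2) for a change delta_s in the solar constant.

    The factor 1/4 spreads the intercepted beam over the whole sphere;
    (1 - albedo) removes the reflected part. albedo=0.3 is an assumption.
    """
    return delta_s * (1.0 - albedo) / 4.0


def equilibrium_warming(forcing, sensitivity_2xco2=2.7, forcing_2xco2=3.7):
    """Equilibrium temperature change (K), scaling linearly with forcing.

    sensitivity_2xco2 is the 2.7 K quoted in the comment;
    forcing_2xco2 = 3.7 W/m^2 is an assumed value for doubled CO2.
    """
    return forcing * sensitivity_2xco2 / forcing_2xco2


if __name__ == "__main__":
    for delta_s in (5.0, 7.0):
        forcing = solar_toa_forcing(delta_s)
        warming = equilibrium_warming(forcing)
        print(f"dS = {delta_s:.0f} W/m^2 -> forcing {forcing:.2f} W/m^2 "
              f"-> dT {warming:.2f} K")
```

The result is sensitive to both assumed constants: taking a doubled-CO2 forcing nearer 4 W/m² pulls the upper end of the range down toward 0.8 K.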

[Response: You are correct, long term solar forcing is highly uncertain. But I don’t think this is really a big part of their results – there is enough wiggle room in the climate sensitivity, the solar forcing and uncertainties of the reconstruction to support any number of comparisons. Their reconstruction does not depend on any particular model simulation. – gavin]

I look at the data as a reasonably informed layman. To construct a thought experiment: if this were viewed through the eyes of an immortal climatologist/meteorologist of the 11th century, then as the centuries progressed he would have been in a complete panic about the continued and inexplicable decline in temperature. By the late 16th century, he would have been utterly convinced that death by freezing was a certainty.

To adapt from the first paragraph of this page, at this stage his learned paper might read, “This result will undoubtedly lead to much discussion and further debate over the validity of previous work. The result, though, does not fundamentally change one of the most discussed aspects of that previous work: temperatures since 1570 still appear to be the *coldest* in the last 570 years.” And what clue would this have given him to the next 100 years and what could he have done about it?

[Response: You are missing two things. The first is the rate of the recent rise, which is unprecedented over the last 2000 years. The second is the body of theory as well as the data. If all we had was the data, and no theory to say “…and we believe that temperature increases will continue”, then there would be little reason to worry – William]

I’m researching the sceptics’ view of climate change for an international engineering firm that needs to know more about how this issue will affect us… I’ve got the IPCC report and other things that support anthropogenic climate change, but I need to address the other side of the argument as well, especially for a group of conservative engineers. Could you point me in the direction of sources for the sceptics’ arguments and counterarguments so I can present a balanced and convincing account of this issue? Also, what direction of research would you recommend for a large engineering firm? Is carbon storage a good area to work on? Or sustainable infrastructure? Or alternative transportation? What is realistic?

Thanks.

[Response: This is a great teaching moment! Your very question is ill-posed, but why? You are presupposing that climate science is like a courtroom, each side is advocating for a course of action and it is the jury’s job to see who presents the best case. In a courtroom, lawyers can use all sorts of tactics to sway the jury (and they do) but science is not like this. Scientists have a much higher responsibility to the actual facts and underlying physics. Lawyers can frequently pose completely inconsistent arguments as part of a defence – scientists cannot. There are not ‘two sides’ to the science of climate change. There is only one. To be sure, there are many uncertainties in many of the issues, but you will find all of these outlined in the IPCC reports. The perception that you may have of the ‘debate’ in the media or politics is mostly due to the inevitable compression of news stories, combined with an apparent journalistic need to provide ‘balance’ (see Chris Mooney’s article on this), and well-funded campaigns by interests who are worried about what the reality of climate change might imply on the regulatory front. One of the reasons why we started this site was to provide some of the context missing in the public perception of the issue, and I hope we are succeeding in some small way. There have been a couple of posts which address the difference between the ‘debate’ in the newspapers, and the ‘noble search for truth’ that scientists like to think we indulge in (slightly tongue in cheek there) – For instance, What If … the “Hockey Stick” Were Wrong? or Peer Review: A Necessary But Not Sufficient Condition.

So, in summary, read the IPCC report, or the National Academies Report requested by the administration in response to IPCC. All the viewpoints and reasoned discussion are there. – gavin]

Well, hang on a minute.

Gavin deserves the highest respect as a scientist and as a commentator on climate change science. You will do well by paying close attention to his responses. However, his opinions do tend to lean toward the non-skeptic’s, or consensus, perspective.

A responsible skeptic will not presume to tell you that what s/he thinks is fact. A responsible skeptic will request that you remain open minded to opinions from both sides, and consider the uncertainties involved *without* prejudging them based on the demonstrable human predilection toward a “herd mentality” — by “herd mentality”, I mean that once a consensus is formed, a flock of “me too” science papers become much more easily accepted, by peer review journals, than the skeptics’ papers. In this sort of environment only the most “thick skinned” skeptic will venture to submit contrarian research results to peer reviewed journals. In this sort of environment, the “money” is with the consensus — If the researcher wants funding (and virtually all of us are dependent on it), the easy road to funding is to decorate one’s proposals with the accepted “consensus buzz words”.

The intent of REAL science is to remain open minded. But the inevitable reality is that once a consensus gathers momentum, even the best of minds begin to close. The responsible skeptic will acknowledge the weight of consensus, but will remind those who are willing to listen that there remains enough unacknowledged uncertainty to warrant concern about “jumping to conclusions”. The responsible skeptic will readily admit that if and when those who jumped on the bandwagon early are proven to be correct, s/he will readily join their ranks. But the responsible skeptic reserves a higher standard of proof before their personal opinion is swayed.

In the case of anthropogenic influence on climate change, I have to strongly disagree with Gavin’s assessment that “there are many uncertainties in many of the issues, but you will find all [emphasis is mine] of these outlined in the IPCC reports”. In some of my other posts on this site, I have attempted to point out uncertainties that IPCC has failed (egregiously in some cases) to acknowledge. In the interest of brevity, I will not repeat them here, but if pressed, I will identify them again for you.

But to summarize my personal, individual position, as a skeptic, I find the emerging anthropogenic influence on climate to be nascent at best. Specifically, I find that the human influence on climate since about 1990 seems beyond the limits of natural climate change, but I insist that “Climate” is defined by time scales longer than 15 years — specifically at least a 30 year average. And I find attribution studies, which would impute more certainty into these short term results, to be inadequate. To wit: Those who work with computer models of climate trust them more than is warranted by the validation that can be done against the (limited) available observations. Computer models are widely used to “attribute” and to “project” climate trends into the future. It is the computer models which have swayed the consensus toward the conclusion that the recent trend of rapid warming (the last 10 to 15 years) is sufficiently anomalous to project that it will be sustained for at least 30 years, and can thus be “preordained” as inevitable REALclimate change.

I have not fully expounded my personal skeptic’s opinions on the unaddressed problems with climate/earth system computer models. But, again, if pressed, I will devote more time to this. Suffice it to say here that my specialization, for the last 30 years, is in the field of modeling. I create parameterizations of land-atmosphere interactions which are incorporated into climate models and numerical weather prediction models. I have extensive, first-hand experience with climate models, and with climate modelers. And I am keenly aware of the unintentional biases which can skew the modelers’ perception of their own work. The most important of these is the ingrained culture, in climate modeling science, of failure to do double blind studies.

Typically, the modeler poses a problem (an inadequately modeled climate process) and a solution (a change to the model) and then proceeds to test the solution. Since the modeler is not constrained to do only blind studies, s/he knows what desired changes the solution hopes to produce. Since the modeler is not blind, s/he accepts the model solution if it produces the desired changes (even if they are produced for entirely the wrong reasons). Once the model has produced the desired change, the modeler (without justification) ceases to test further legitimate possible “solutions” and proceeds to publish the results. If the desired changes are not produced, the modeler just continues to revise the posited “solution” and runs the model over and over again until (and ONLY until) the “expected” solution is achieved.

Unfortunately this process is able to dispatch “results” much, much more quickly than true double blind science. But the results that it dispatches are much more suspect.

As a scientist who hopes to be viewed as a responsible skeptic, I present this comment only as my personal opinion. I am more than willing to listen to opposing perspectives. My over-riding desire is to keep the climate science discussion on course as an open-minded interchange. Note well: I am, in general, deeply skeptical of most of the entrenched and dogmatic media-recognized “skeptics”. And I am very skeptical of even some of the more well-respected skeptical scientists, e.g. Richard Lindzen’s “infrared Iris effect” (I have posted detail elsewhere on this site). But I find it to be premature to “anoint” anthropogenic influence on today’s REALclimate (30+ year averages) as the unquestioned predominant effect. Talk to me in another 15 years. Based on advances in modeling technology and on the additional observational record, I guarantee that I will have modified my opinion several times during that period. And I expect any scientist worth his/her salt to say the same.

I have noticed that proxy data is often processed by subtracting the mean and dividing by the standard deviation. I assume that subtracting the mean is to center the data at 0. What is the reason for dividing by the standard deviation?
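One common reason, offered here as general background rather than as any particular author's method, is that different proxies come in different units and with very different spreads. Dividing by the standard deviation turns each series into a dimensionless one with unit variance, so that series can be compared or averaged without the one with the largest raw numbers dominating. A minimal sketch, using made-up numbers and hypothetical variable names:

```python
import statistics


def standardize(values):
    """Return z-scores: subtract the mean, then divide by the standard deviation.

    The population standard deviation (pstdev) is used here; using the
    sample standard deviation instead is an equally defensible choice.
    """
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    return [(v - mean) / spread for v in values]


# Made-up illustrative data, not real proxy records.
tree_ring_width_mm = [1.2, 0.9, 1.5, 1.1, 1.3]
sediment_d18o_permil = [-8.4, -9.1, -7.6, -8.8, -8.1]

z_rings = standardize(tree_ring_width_mm)
z_sediment = standardize(sediment_d18o_permil)

# After standardizing, both series have mean 0 and standard deviation 1,
# so a simple average of the two weights them equally.
composite = [(a + b) / 2.0 for a, b in zip(z_rings, z_sediment)]

if __name__ == "__main__":
    print("tree rings (z):", [round(z, 2) for z in z_rings])
    print("sediment (z):  ", [round(z, 2) for z in z_sediment])
    print("composite:     ", [round(c, 2) for c in composite])
```

The standardized series carries no physical units, so a composite built this way still has to be calibrated against instrumental temperatures before it can be read in degrees.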

Well then, the debate is over! Gavin, in his response in #20, has declared that the opposition doesn’t exist, and to the extent that it does exist, it has Evil Motives and can therefore be dismissed outright. Good work, Gavin!

(Sarcasm aside, I appreciate Peter’s reasoned response in #21.)

[Response: Read what I said carefully. I never said there was no uncertainty, and there certainly is plenty of vocal opposition. I was making the point that science is not a binary endeavour of two opposing ‘sides’ each trying to score points, and attempts to place science uncomfortably within this framework serve only to obfuscate. And by the way, no more sarcasm please. – gavin]

I think Wetzel’s comment 21 neatly captures the difference between a scientific question and a policy question. I agree with him on the basic issue that, as a scientific question, whether human influences on climate have become determinative is not closed (although we would probably disagree as to whether the answer is currently likely, very likely or a slam dunk in IPCC/CIA terms). OTOH, the scientific question has practical and policy implications. Is the level of knowledge high enough that scientists should recommend taking mitigating actions? In such an arena, simply waiting is the choice of an action of equal weight to taking mitigating actions.

Scientists cannot simply wash their hands in the sands of uncertainty. Like it or not, policy recommendations must emerge from and be shaped by the state of current knowledge, and trying to avoid doing so, or preventing others from doing so, is irresponsible.

I make the difficult decision here to indulge in a tiny bit of policy commentary, despite the “prime directive”, prominently featured in the upper right-hand side of your browser window:

“The discussion here is restricted to scientific topics and will not get involved in any political or economic implications of the science.”

Wisdom transcends simple science. Wisdom dictates that fouling one’s nest is contraindicated. Regardless of established science results, it is my personal opinion that the wise choice is to strenuously work to limit anthropogenic pollution.

(Re: response to #23) Gavin, I never said that you said… (oh forget it.)

I guess I disagree with your characterization of science. I wouldn’t call science a “binary endeavour,” but I certainly wouldn’t say there is only one side. I’ll grant you that there is only one side to the facts that scientists uncover (like proxy data), but these facts require interpretation (like statistical analysis) to be meaningful to the rest of the world or to create a workable theory. It’s this interpretation phase where the opposition, debate, and side-taking occur; and I might add that this is a critically important step. To say that “there are not ‘two sides’… there is only one” ignores this distinction and appears to be an attempt to cut off debate.