Guest commentary by Karsten Haustein, U. Oxford, and Peter Jacobs (George Mason University).

One of the perennial issues in climate research is how big a role internal climate variability plays on decadal to longer timescales. A large role would increase the uncertainty on the attribution of recent trends to human causes, while a small role would tighten that attribution. There have been a number of attempts to quantify this over the years, and we have just published a new study (Haustein et al, 2019) in the Journal of Climate addressing this question.

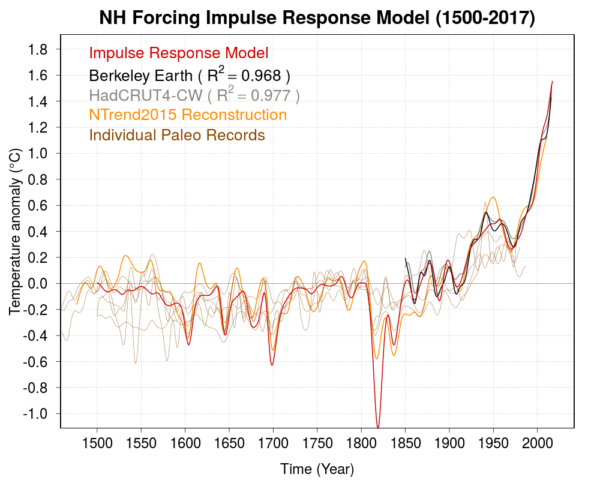

Using a simplified climate model, we find that we can reproduce temperature observations since 1850 and proxy data since 1500 with high accuracy. Our results suggest that multidecadal ocean oscillations are only a minor contributing factor in the evolution of global mean surface temperature (GMST) over that time. The basic results were covered in excellent articles in CarbonBrief and Science Magazine, but this post will try to go a little deeper into what we found.

Until recently, the hypothesis that there are significant natural (unforced) ocean cycles with a periodicity of roughly 60-70 years had been widely accepted. The so-called Atlantic Multidecadal Variability index (AMV, sometimes called the AMO instead) and the Pacific Decadal Variability index (PDV) have been touted as major factors in observed multidecadal GMST fluctuations (for instance, here). Due to the strong co-variability between AMV and GMST, both the Early 20th Century Warming (1915-1945) and the Mid-Century Cooling (1950-1980) have been attributed to low-frequency AMV variability, associated to a varying degree with changes in the Atlantic Meridional Overturning Circulation (AMOC). In particular, the uncertainty in quantifying the human-induced fraction of the early 20th Century warming was still substantial.

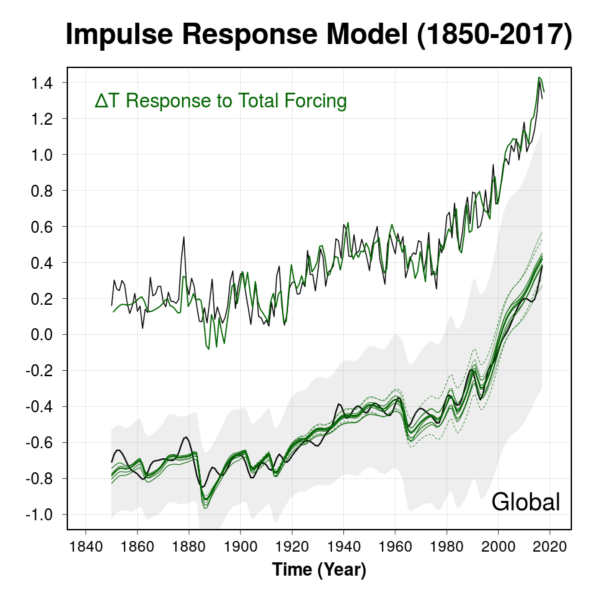

In contrast to those earlier studies, we were able to reproduce effectively all of the observed multidecadal temperature evolution, including the Early Warming and the Mid-Century Cooling, using known external forcing factors (solar activity, volcanic eruptions, greenhouse gases, pollution aerosol particles). Adding an El Niño signal, we explain virtually the entire observed record (Figure 1). Further, we were able to reproduce the temperature evolution separately over land and ocean, and for the Northern and Southern Hemispheres (NH/SH). In each case we found equally high fractions of explained variability associated with anthropogenic and natural radiative forcing changes. Attributing 90% of the Early Warming to external forcings (50% of which is due to natural forcing from volcanoes and solar activity) is, in our view, a key leap forward. To date, no more than 50% had been attributed to external forcing (Hegerl et al. 2018). While there is less controversy about the drivers of the Mid-Century Cooling, our response model results strongly support the idea that this cooling was caused by increased levels of sulphate aerosols, which temporarily offset greenhouse gas-induced warming.
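
The "fraction of explained variability" can be illustrated with a short sketch. It assumes the common definition 1 - var(residual)/var(observed); this post does not say exactly which metric the paper uses, and every series below (the forced response, the ENSO-like term and the noise) is a synthetic placeholder, not real data.

```python
import numpy as np

def explained_variance(observed, reconstruction):
    """Fraction of the observed variance captured by a reconstruction:
    1 - var(observed - reconstruction) / var(observed)."""
    residual = observed - reconstruction
    return 1.0 - np.var(residual) / np.var(observed)

# Synthetic annual series (K); none of these are real observations.
rng = np.random.default_rng(1)
years = np.arange(1850, 2021)
forced = 0.008 * (years - 1850)                          # stand-in forced response
enso = 0.1 * np.sin(2.0 * np.pi * (years - 1850) / 4.5)  # stand-in ENSO-like signal
observed = forced + enso + rng.normal(0.0, 0.05, years.size)

ev_forced = explained_variance(observed, forced)
ev_forced_enso = explained_variance(observed, forced + enso)
print(f"forced only: {ev_forced:.3f}, forced + ENSO: {ev_forced_enso:.3f}")
```

Adding the ENSO-like term raises the explained fraction because it removes interannual structure from the residual; with real data, the size of that gain is an empirical question.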

What does this mean?

Some commentators (notably Judith Curry) have used the uncertainty in the attribution of the Early 20th Century warming as an excuse not to accept the far stronger evidence for the human causes of more recent trends. This was never very convincing, and it is even less so now that a viable attribution for the Early Warming exists. Despite a number of studies that have already provided evidence, with a solid physical underpinning, for a large external contribution to observed multidecadal ocean variability, most prominently the AMV (e.g. Mann et al., 2014; Clement et al., 2015; Stolpe et al., 2017), ideas such as the stadium wave (Wyatt and Curry, 2014) continue to be proposed. The problem is that most studies that argue for unforced low-frequency ocean oscillations do not accommodate time-varying external drivers such as anthropogenic aerosols. Our findings highlight that this non-linearity is a crucial feature of the historical forcing evolution. Any claim that these forcings were/are small has to be accompanied by solid evidence disproving the observed multidecadal variations in incoming radiation (e.g. Wild 2009). On the contrary, our findings confirm that basically all of the warming since the pre-industrial era is human-induced.

Implications

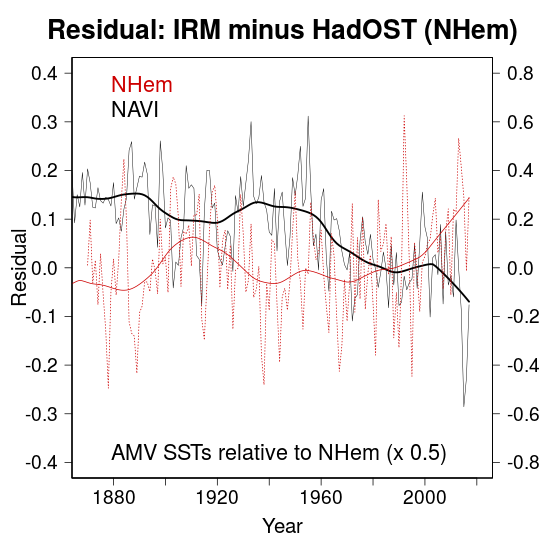

We conclude that the AMV time series (based on the widely accepted definition) almost certainly does not represent a simple internal mode of variability. Indeed, we think that the AMV definition is flawed and not a suitable way to extract whatever internal ocean signal there might be. Instead, we recommend an alternative index, which we think will be closer to the internal signal, called the North Atlantic Variability Index (NAVI). It is essentially the AMV relative to the NH temperature (Figure 2). The resulting NAVI time series is a good representation of the AMOC decline, arguably the true internal component (although also forcing-related) in the North Atlantic. This implies that while the AMOC is an important player (see, for instance, Stefan’s RC post), it is not driving alleged low-frequency North Atlantic ocean oscillations. The AMV should therefore not be used as a predictor in attribution studies, given that the multidecadal temperature swings are unlikely to be internally generated. We note, though, that the projection of the AMV onto GMST is small in any case.
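
A minimal sketch of the NAVI idea, "the AMV relative to the NH temperature": express both series as anomalies over a common reference period and subtract the NH anomaly from the AMV-region anomaly. The function names, the 1951-1980 reference period and the synthetic series are assumptions for illustration only, not the paper's processing chain.

```python
import numpy as np

def anomalies(series, years, base_start, base_end):
    """Express a series relative to its mean over a reference period."""
    in_base = (years >= base_start) & (years <= base_end)
    return series - series[in_base].mean()

def navi(amv_region_temp, nh_temp, years, base=(1951, 1980)):
    """Hypothetical NAVI sketch: AMV-region anomaly minus NH-mean anomaly."""
    return (anomalies(amv_region_temp, years, *base)
            - anomalies(nh_temp, years, *base))

# Synthetic illustration (K): a warming signal shared by both series plus
# a slow decline that exists only in the North Atlantic box.
years = np.arange(1900, 2021)
shared_warming = 0.01 * (years - 1900)
regional_decline = -0.003 * (years - 1900)
nh_temp = shared_warming
amv_region_temp = shared_warming + regional_decline

index = navi(amv_region_temp, nh_temp, years)
```

Here the shared warming cancels in the difference and only the regional decline survives. As noted in the comments, real warming that differs between the box and the hemisphere (land-ocean contrast, Arctic amplification) would not cancel this neatly.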

What did our results rely on?

There are three novelties that led to our conclusions. (1) We differentiate between forcing factors such as volcanoes and pollution aerosols with regard to their transient climate response (TCR). For example, anthropogenic aerosols are primarily emitted over NH continents, i.e. they have a faster TCR, which we explicitly account for in our analysis. (2) We use an updated aerosol emission dataset (CEDS, Hoesly et al., 2018, also used in CMIP6), which results in a substantially different temporal evolution of historic aerosol emissions compared to the older dataset (Lamarque et al. 2010). The effective aerosol forcing is based on the most recent estimate by Forest (2018). (3) The final change is related to the observational data. The HadISST2 (Kennedy et al. in prep) sea surface temperature (SST) dataset had never been used in conjunction with land data. We have combined HadISST2 with Cowtan/Way over land (using air temperature over sea ice) and filled the missing years after 2010 with OSTIA SSTs (because HadISST2 is still preliminary). In addition, it has been known for quite some time now that there is a bias in virtually all SST datasets during the Second World War (Cowtan et al., 2018; see also Kevin’s SkS post). We correct for that bias over the ocean (1942-1945), which, in conjunction with warmer HadISST2 SSTs before the 1930s, significantly reduces previous discrepancies related to the Early Warming. Lastly, initialising the model in 1500 A.D. ensures that the slow response to strong volcanic eruptions is sensibly accounted for (Figure 3), as this slow response has been shown to be important on centennial timescales (e.g. Gleckler et al. 2006).
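
The forcing-specific response and the long spin-up can be sketched with a generic two-timescale impulse-response model of the kind used in simple emulators such as FAIR. This is not the paper's code: the 2xCO2 forcing (3.7 W m-2), the time constants (4.1 and 239 years), the per-agent fast time constants and the volcanic pulse are illustrative assumptions, and the conversion of TCR and ECS into box sensitivities follows a FAIR-style formulation rather than anything stated in this post.

```python
import numpy as np

F2X = 3.7  # assumed forcing for doubled CO2 (W m-2)
YEARS_TO_DOUBLE = np.log(2.0) / np.log(1.01)  # ~69.7 years at 1 %/yr CO2 growth

def box_sensitivities(tcr, ecs, d):
    """Sensitivities q (K per W m-2) of a fast and a slow box chosen so the
    model reproduces the given TCR and ECS for time constants d."""
    k = 1.0 - (d / YEARS_TO_DOUBLE) * (1.0 - np.exp(-YEARS_TO_DOUBLE / d))
    return np.array([tcr - ecs * k[1], ecs * k[0] - tcr]) / (F2X * (k[0] - k[1]))

def two_box_response(forcing, tcr, ecs, d=(4.1, 239.0)):
    """Annual temperature response (K) to an annual forcing series (W m-2)."""
    d = np.asarray(d, dtype=float)
    q = box_sensitivities(tcr, ecs, d)
    decay = np.exp(-1.0 / d)
    boxes = np.zeros(2)
    out = np.empty(len(forcing))
    for i, f in enumerate(forcing):
        # each box relaxes towards its equilibrium q * f with its own e-folding time
        boxes = boxes * decay + q * f * (1.0 - decay)
        out[i] = boxes.sum()
    return out

def total_response(forcings, tcr, ecs, fast_times, slow_time=239.0):
    """Sum the responses to individual forcing agents, letting each agent use
    its own (hypothetical) fast time constant, e.g. shorter for NH aerosols."""
    return sum(two_box_response(f, tcr, ecs, d=(fast_times[name], slow_time))
               for name, f in forcings.items())

# Spin-up from 1500: a single large (made-up) volcanic pulse in 1815 still
# leaves a small cooling through the slow box by 1900.
years = np.arange(1500, 2021)
volcanic = np.where(years == 1815, -5.0, 0.0)
t_volc = two_box_response(volcanic, tcr=1.6, ecs=2.9)
print(f"response in 1900: {t_volc[years == 1900][0]:.4f} K")
```

Because the responses add linearly, forcing agents can be run separately and summed, which is one simple way to give aerosols a faster response than greenhouse gases.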

What about overfitting?

To address this issue, we would like to point out that not a single parameter depends on regression. TCR and ECS span a wide range of accepted values, and all we did was estimate TCR based on the best fit of the final response model result with observations. We concede that the fast response time and the effective aerosol forcing are difficult to pin down, given the wide range of published estimates. However, it is worth mentioning that the results are not very sensitive to variations in either parameter (see thin lines in Figure 1). Instead, the overall uncertainty is dominated by the TCR and GHG forcing uncertainty. The story is more complex for the NH/SH and land/ocean-only results, as we need to account for the different warming ratios. Guided by climate model and observational data, we introduce a novel method that objectively estimates the required TCR factors.
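
A minimal sketch of estimating TCR "by best fit" without regression: simulate the response for each TCR on a fixed grid, holding the TCR/ECS ratio fixed, and keep the value whose simulation matches the observations best. The fit criterion (RMSE of mean-removed anomalies), the ratio of 0.55 (the CMIP5-based value mentioned in the comments), the grid, the compact two-box model and the synthetic "observations" are all assumptions for illustration; the post does not specify them.

```python
import numpy as np

F2X = 3.7                                     # assumed 2xCO2 forcing (W m-2)
YEARS_TO_DOUBLE = np.log(2.0) / np.log(1.01)

def two_box_response(forcing, tcr, ecs, d=(4.1, 239.0)):
    """Compact two-timescale response model (illustrative parameters)."""
    d = np.asarray(d, dtype=float)
    k = 1.0 - (d / YEARS_TO_DOUBLE) * (1.0 - np.exp(-YEARS_TO_DOUBLE / d))
    q = np.array([tcr - ecs * k[1], ecs * k[0] - tcr]) / (F2X * (k[0] - k[1]))
    decay, boxes, out = np.exp(-1.0 / d), np.zeros(2), np.empty(len(forcing))
    for i, f in enumerate(forcing):
        boxes = boxes * decay + q * f * (1.0 - decay)
        out[i] = boxes.sum()
    return out

def best_tcr(forcing, observed, tcr_grid, tcr_ecs_ratio=0.55):
    """Return the grid TCR with the smallest RMSE, plus all RMSE values.
    Nothing is regressed; each candidate is simply simulated and scored."""
    obs_anom = observed - observed.mean()
    rmse = np.empty(len(tcr_grid))
    for j, tcr in enumerate(tcr_grid):
        sim = two_box_response(forcing, tcr, tcr / tcr_ecs_ratio)
        rmse[j] = np.sqrt(np.mean(((sim - sim.mean()) - obs_anom) ** 2))
    return tcr_grid[np.argmin(rmse)], rmse

# Synthetic test: "observations" generated with TCR = 1.6 K plus noise.
years = np.arange(1850, 2021)
forcing = 2.5 * ((years - 1850) / 170.0) ** 2
rng = np.random.default_rng(0)
observed = two_box_response(forcing, 1.6, 1.6 / 0.55) + rng.normal(0.0, 0.03, years.size)

tcr_grid = np.round(np.arange(1.0, 2.51, 0.05), 2)
tcr_hat, rmse = best_tcr(forcing, observed, tcr_grid)
print(f"best-fit TCR on the grid: {tcr_hat} K")
```

Picking the best grid point is still a choice made against the data, so the grid and the fit criterion deserve to be reported alongside the result.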

Conclusions: It was us.

The findings presented in our paper highlight that we are now able to explain almost all of the warming patterns since 1850, including the Early Warming period. We achieve this by separating different forcing factors, by including an updated aerosol dataset and by removing notable SST biases. We have avoided overfitting by virtue of a strict non-regression policy. We ask the different research communities to take these findings as food for thought, particularly with regard to the Early Warming. We firmly believe that it is time to rethink the role of the AMV and recommend using our newly introduced NAVI definition instead. This will also help us better understand contemporary AMOC changes and their relation to climate change, and perhaps provide guidance as to which climate models best approximate internal ocean variability on longer timescales.

References

- K. Haustein, F.E.L. Otto, V. Venema, P. Jacobs, K. Cowtan, Z. Hausfather, R.G. Way, B. White, A. Subramanian, and A.P. Schurer, "A Limited Role for Unforced Internal Variability in Twentieth-Century Warming", Journal of Climate, vol. 32, pp. 4893-4917, 2019. http://dx.doi.org/10.1175/JCLI-D-18-0555.1

- G.C. Hegerl, S. Brönnimann, A. Schurer, and T. Cowan, "The early 20th century warming: Anomalies, causes, and consequences", WIREs Climate Change, vol. 9, 2018. http://dx.doi.org/10.1002/wcc.522

- M.E. Mann, B.A. Steinman, and S.K. Miller, "On forced temperature changes, internal variability, and the AMO", Geophysical Research Letters, vol. 41, pp. 3211-3219, 2014. http://dx.doi.org/10.1002/2014GL059233

- A. Clement, K. Bellomo, L.N. Murphy, M.A. Cane, T. Mauritsen, G. Rädel, and B. Stevens, "The Atlantic Multidecadal Oscillation without a role for ocean circulation", Science, vol. 350, pp. 320-324, 2015. http://dx.doi.org/10.1126/science.aab3980

- M.B. Stolpe, I. Medhaug, and R. Knutti, "Contribution of Atlantic and Pacific Multidecadal Variability to Twentieth-Century Temperature Changes", Journal of Climate, vol. 30, pp. 6279-6295, 2017. http://dx.doi.org/10.1175/JCLI-D-16-0803.1

- M.G. Wyatt and J.A. Curry, "Role for Eurasian Arctic shelf sea ice in a secularly varying hemispheric climate signal during the 20th century", Climate Dynamics, vol. 42, pp. 2763-2782, 2014. http://dx.doi.org/10.1007/s00382-013-1950-2

- M. Wild, "Global dimming and brightening: A review", Journal of Geophysical Research: Atmospheres, vol. 114, 2009. http://dx.doi.org/10.1029/2008JD011470

- R.M. Hoesly, S.J. Smith, L. Feng, Z. Klimont, G. Janssens-Maenhout, T. Pitkanen, J.J. Seibert, L. Vu, R.J. Andres, R.M. Bolt, T.C. Bond, L. Dawidowski, N. Kholod, J. Kurokawa, M. Li, L. Liu, Z. Lu, M.C.P. Moura, P.R. O'Rourke, and Q. Zhang, "Historical (1750–2014) anthropogenic emissions of reactive gases and aerosols from the Community Emissions Data System (CEDS)", Geoscientific Model Development, vol. 11, pp. 369-408, 2018. http://dx.doi.org/10.5194/gmd-11-369-2018

- C.E. Forest, "Inferred Net Aerosol Forcing Based on Historical Climate Changes: a Review", Current Climate Change Reports, vol. 4, pp. 11-22, 2018. http://dx.doi.org/10.1007/s40641-018-0085-2

- K. Cowtan, R. Rohde, and Z. Hausfather, "Evaluating biases in sea surface temperature records using coastal weather stations", Quarterly Journal of the Royal Meteorological Society, vol. 144, pp. 670-681, 2018. http://dx.doi.org/10.1002/qj.3235

- P.J. Gleckler, K. AchutaRao, J.M. Gregory, B.D. Santer, K.E. Taylor, and T.M.L. Wigley, "Krakatoa lives: The effect of volcanic eruptions on ocean heat content and thermal expansion", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL026771

Nice work! Thanks for the write-up.

Some words missing:

“we were able to temperature evolution separately over land and ocean” =

“we were able to reproduce the temperature evolution separately over land and ocean” ?

Also, external links in the text seem to lead back to this post (not a big problem, since the DOI links work, but still).

Thanks for this study. I followed the discussion on the warming hiatus and the AMO and PDO. But since 2012 the warming has sped up again while the AMO was in its cooling mode. Since then I have suspected that the AMO didn’t play a strong role.

That you connect the internal forcing more with ENSO also makes sense to me when the ENSO index and the temperature increase are compared. There has always been a strong connection here, especially the 1997/98 El Niño with a temperature increase of 0.18 °C and the 2015/16 El Niño with 0.24 °C of warming during those years.

Another thing is marine heat waves (El Niño is for me actually also a kind of marine heat wave), which have been occurring for some 20 years now. Take the “blob” of 2013-2015 in the northeast Pacific, for example: an ocean region of some 9 million km² about 1.8-2.4 °C warmer than average. Or the Atlantic heat wave of 2017, at 1.7 °C above average. In 2018 there was one near New Zealand, 4 °C warmer over 4 million km².

It seems that marine heat waves, Arctic/mid-latitude/subtropical and tropical circulation changes, low-wind events and reduced upwelling could be another forced signal that is now emerging from the noise with the last 0.4 °C of warming. The main mechanism is lower wind speeds creating favorable conditions for marine heat waves. This feedback could have a big impact on the warming rate.

But most worrying of all is the role of sulfate emissions. I know of two global estimates of the amount of global cooling: one of up to 1 °C and the other of about 0.5-1.1 °C. This would mean that without air pollution we would have much more warming than the 1 °C we have now.

But nowadays sulfate emissions have reached a plateau and the masking effect will disappear. And when emissions start to decrease slowly, we will get another warming push.

When we look at the temperature increase in the GLOBAL Land-Ocean Temperature Index with the base period 1951-1980, the danger becomes obvious: 2011 0.58 °C, 2012 0.62 °C, 2013 0.65 °C, 2014 0.73 °C, 2015 0.87 °C and 2016 0.99 °C, which adds up to 0.4 °C of warming in 5 years! 2017 and 2018 were again a little cooler.

My prognosis from observations would be that 1.5 °C will be breached with the next strong El Niño, as soon as 2026 (the latest prognosis is a strong El Niño every 10 years, and the 2015/16 El Niño had a reduced heat discharge). If the next strong El Niño comes around 2030, we will jump past the 1.5 °C mark. If it comes in 2035, we will come close to 2 °C of warming.

Also important: on our way from 1 towards 2 °C, some important forced feedbacks will increase non-linearly: snow cover extent in the NH, Arctic sea ice in summer, but also sea ice decline in winter (by far the strongest feedback of all, going from -30 or -40 below zero to zero or above), which has been observed for 4 years. The reason here is that warm water accumulated in the lower layers of the Arctic Ocean is now coming to the surface. And lastly, an accelerating increase in the atmospheric methane concentration, with the additional warming from methane being 80 times more powerful than CO2 on a 20-year timescale.

My personal view is that the new generation of models is spot on and Earth is much more sensitive to an increase in emissions than we thought possible.

Sorry for my English and sluggish writing; it takes too much time to make it nice, but I hope you got the points ;)

The best

Jan

“never very convincing” is the understatement of the age! Standards of courtesy and honesty seem powerless in the face of determined opposition, ever more brazen.

Honest scientists can continue to support reality, but only reality itself (sadly, not good for children and other living things) will win against the endless armor of unskeptical “skeptics” who resist the slightest taint of true scientific skepticism.

Thanks @CM! Oupsi!

@Jan: Actually, we show that aerosol-induced cooling is currently only ~0.4°C (see 3rd figure in the CarbonBrief article). Higher aerosol sensitivity would be incompatible with the observed mid-century hiatus. Plus, current warming would be overestimated if transient sensitivity was higher than we report. The neat thing is that the temporal evolution of (warming) anthropogenic greenhouse gases and (cooling) aerosols is not a mirror image. Hence they can both be constrained fairly robustly now.

I follow Gavin’s Climate Model Projections Compared to Observations page as the definitive comparison. Looking at the histograms at the bottom of the page, the models are currently warming at a trend somewhere between 1.5x and 2x the observed trends. (1.7x per Santer 2014, which still looks about right.) My impression has been that the explanation for this has been unaccounted natural variability, such as AMO, on short to medium time scales.

So my question is: is this the natural variability issue that your model addresses/resolves? I.e., does your study narrow the “95% envelope of simulations”? Specifically, does your model give a better quantitative estimate of ECS, and what is that estimate?

Jan,

Yes, though most folks pretend we’re well below the 1.5C limit, perhaps 1C of warming according to thermometers plus maybe 1C of aerosol masking means that if you look out the side window you’ll get a glimpse of the big DANGER! 2C LIMIT! sign…

Al Bundy (really, man, can’t you come up with a better name?):

Aerosol cooling isn’t -1 C. In comment 4 Karsten gives -0.4 C. Before it was about -1/2 that of CO2’s warming, but the CO2 warming is getting larger so the ratio is dropping.

Thanks for this accompanying post to your paper. The introduced NAVI index (essentially the extratropical North Atlantic SST minus NH temperatures) is an almost brand-new index for NA variability. However, there is IMO some danger of conflating some effects of the observed warming: most of the land is located in the NH, and land warms faster than the sea surface, which is not an effect of the NA. Arctic amplification of any warming also has an impact on NH temperatures, and this is not an effect of the NA either. Subtracting the NH temperatures from the NA SST must therefore lead to a strong reduction of the NAVI index due to any warming, a reduction which is not the result of a declining NA index; see your cited Fig. 2. Did you try to avoid these non-NA effects on the NAVI index, for instance by taking the difference between extratropical NA SST and global SST, which would be a mixture of the established NA indexes derived from van Oldenborgh and Trenberth/Shea?

How much are you attributing to solar forcing for early 20th century warming vs. volcanic (reduced activity?)?

Very interesting. But regarding our fate as mankind in the near future (roughly the next thirty to a hundred years), it has already been clear for at least ten to twenty years that it is very bleak indeed, in the light of ever more overwhelming scientific results like these, especially when looking at what this means for ecological stability, food security and health. Unfortunately, this has so far had no real consequences at all for how we as a society manage our energy consumption. Business as extremely usual goes on and on behind a very thin facade of “green” slogans and symbolic gestures. The main response from the political and economic elites is ever more empty slogans and ignorance or outright censorship of the scientific warnings, since these contradict the cast-iron dogmas about the inevitability of ever-accelerating economic growth.

The openly ignorant opinion is just the tip of the silently ignorant “iceberg” of those determining our common future. They couldn’t care less about the science, except for their growing efforts to silence it by focusing media and political “attention” (if you can still speak of such a thing in any meaningful way regarding our current public sphere of chaotic nonsense) on almost anything but this which is most important.

looks in order.

Thanks for writing this up on RC!

I’m really interested to see where this idea goes in the literature in the future. It would be quite fabulous if historic attribution could be tightened up as this seems to promise!

Great stuff! Very comprehensive!

Thanks a lot for your answer. It’s nice that the effect of aerosols is slowly being better quantified. I overlooked the graph ;) I always wondered if it could really be that high. But even 0.4 °C is a huge masking effect. The next 0.5 °C of warming will show whether we are in deep trouble.

So I have a question: currently it is estimated that methane is responsible for about 29% of the climate forcing from greenhouse gases. This number is the result of the direct physical effect of all the methane molecules, I guess.

So I would like to know how much stronger a methane molecule is compared to a CO2 molecule. Over 100 years it is about 25 times stronger, over 20 years about 80 times stronger, but how much stronger is it on a 1-year timescale?

Just wonder how much of an effect the annual increase in methane concentration has on climate forcing we observe nowadays…

The best

Jan

Dr. Haustein or Dr. Jacobs

Do your results account for the ocean warming observed using the Argo system and reconstructed from various other old data? I am sorry if it is obvious that it does, but I am a layman and could easily have missed the point.

Glad to see that overfitting is addressed. Many in the RealClimate community believe this is a non-issue brought up by “climate deniers”. Maybe now some will pull their craniums out of the posteriors on this facet of modeling of years past.

Dan DaSilva,

I don’t think you understood the bit where they discuss overfitting and conclude that they’re not. Researchers are always wary of overfitting and this has nothing to do with the specious claims of the climate denial community. The non-issues along this vein that are brought up by the climate denial community aren’t ignored because climate scientists are unaware of the dangers of overfitting. They’re ignored because they have no merit. Your comment is especially ironic given Roy Spencer’s habit of overfitting in an effort to back up his dubious claims.

I have been reviewing Hoesly et al. (reference #8) for their organic carbon aerosol emissions data and find that they relied on a 2007 paper for this input that only had data through 2000. In the paper, Hoesly and team projected organic carbon increases from residential and waste sources from 2000 onward that amounted to a 25% increase over the total in that year.

I also note that the current PIK Potsdam RCP 3.0 series of organic carbon aerosol forcing for the CMIP6 shows a continuation of dominance of organic carbon as a cooling aerosol through 2100, even after SOx is reduced in the mitigation scenario.

1. While I have not asked Hoesly or her team about this, this projected increase does not seem to fit the field data that has been coming out of China since 2017 (Chen et al., https://doi.org/10.1016/j.scitotenv.2016.11.025).

2. The treatment of OC emissions from residential and waste combustion, typically from open-air burning, as a global forcing aerosol is inappropriate. The vast majority of this aerosol’s impact is local and contained by winter inversion zones.

Therefore, the assumption of massively increased emissions of organic carbon and its increased effect on the global temperature record since 2000, as well as the continuation of this forcing through 2100 in the RCPs is exaggerated, possibly by as much as a factor of 2.

This would be another indication of a higher TCR coupled with a higher (more negative) SOx forcing than is currently being modeled.

To clarify my question above. If you have a model that more accurately assesses the contribution of natural variation, doesn’t this imply that the model provides a more accurate estimate of TCR and ECS? I notice under Figure 1 that the best fit is to a TCR of 1.6K. Is 0.6 a reasonable estimate of the TCR/ECS ratio, yielding an ECS of 2.67?

The authors present their method as a significant step forward in separating natural from anthropogenic forcings. Are they also claiming it is a step forward in narrowing the range of the IPCC ECS estimate of 1.5 to 4.5, to a value more around 2.67?

David Appell: really, man, can’t you come up with a better name?

AE: You dis my hero Al Bundy, the Everyman Inventor? Sigh, now I have to go with second best…

I think the aerosol numbers are foggy :-) and I’d bet the truth is above .7C, given the Perennial Truth: it’s always worse than we think. A paper came out in January, “With this new method, Rosenfeld and his colleagues were able to more accurately calculate aerosols’ cooling effects on the Earth’s energy budget. And, they discovered that aerosols’ cooling effect is nearly twice higher than previously thought.”

https://www.sciencedaily.com/releases/2019/01/190122104611.htm

Dan DaSilva:

Climate model overfitting is a non-issue, Dan, as this post makes clear. We’re still waiting for you to pull your cranium out, you know.

@frankclimate (8):

We alluded to that problem in the paper: “Arguably, asymmetric land-ocean warming is a more mundane explanation for the colder NA region relative to NHem, as it is physically consistent with a transient warming scenario, but the slow pace of the NAVI decline suggests a contributing role for AMOC.”

But you are right, it would actually be better to have an even more advanced index definition. In fact, we did subtract NHem SSTs from the AMV region, and what we got is almost no trend. This isn’t surprising, as the AMV area (25-60N/7-75W) is so much larger than the AMOC region (40-60N/15-50W). If we use the AMOC region SSTs and subtract the NHem SSTs, we do get a slowdown similar to that in Fig. 2, as shown below (rhs): NAVI (AMV) vs NAVI (AMOC)

Note that there is a “dip” associated with North American and European aerosols during 1960-1990. Since the NAO projects strongly onto the AMOC, it is arguably an NAO response. To what degree the NAO and aerosol forcing (or any other forcing, for that matter) are interlinked is still subject to debate/uncertainty. Since this issue has been brought up a couple of times, we will try to get the modified NAVI index (AMOC region minus NHem SSTs) into the final version of the paper.
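
The modified index (AMOC-region SST minus NHem SST) comes down to two area-weighted box averages. A minimal sketch, assuming a regular latitude-longitude SST grid with longitudes from -180 to 180 degrees, NaN over land and cosine-of-latitude weights; the box bounds are the ones quoted in the reply, while the function names and the synthetic field are hypothetical. For a time series the calculation would be repeated for each month or year.

```python
import numpy as np

def box_mean(field, lats, lons, lat_range, lon_range):
    """Cosine-latitude weighted mean of a 2-D (lat, lon) field inside a
    lat/lon box, ignoring NaN cells such as land in an SST field."""
    lat_sel = (lats >= lat_range[0]) & (lats <= lat_range[1])
    lon_sel = (lons >= lon_range[0]) & (lons <= lon_range[1])
    sub = field[np.ix_(lat_sel, lon_sel)]
    weights = np.cos(np.deg2rad(lats[lat_sel]))[:, None] * np.ones((1, lon_sel.sum()))
    valid = ~np.isnan(sub)
    return np.sum(sub[valid] * weights[valid]) / np.sum(weights[valid])

def navi_amoc(sst, lats, lons):
    """Hypothetical modified NAVI: SST in the AMOC box (40-60N, 15-50W)
    minus the Northern Hemisphere mean SST."""
    amoc_box = box_mean(sst, lats, lons, (40.0, 60.0), (-50.0, -15.0))
    nhem = box_mean(sst, lats, lons, (0.0, 90.0), (-180.0, 180.0))
    return amoc_box - nhem

# Synthetic 1-degree anomaly field: uniform warming except a cooler AMOC box.
lats = np.arange(-89.5, 90.0, 1.0)
lons = np.arange(-179.5, 180.0, 1.0)
sst = np.ones((lats.size, lons.size))
in_box = ((lats >= 40) & (lats <= 60))[:, None] & ((lons >= -50) & (lons <= -15))[None, :]
sst[in_box] = 0.5
print(f"NAVI (AMOC) for this field: {navi_amoc(sst, lats, lons):.3f} K")
```

A cooler AMOC box relative to the rest of the hemisphere gives a negative index; with NaN land cells, the weights are simply renormalised over the valid ocean points.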

@James Cross (9): I haven’t split solar and volcanic activity. So only guesswork, but probably 50/50 (of the 50% that natural forcings contribute to the early warming)

@Jan (14): The global warming potential of CH4 over 10 years would be 108 (times stronger than CO2).

@Ken (16): We do not use ocean heat data in our analysis. We mainly focus on the transient climate response and don’t infer the equilibrium climate sensitivity (ECS).

@Jai (19): Thanks a lot for these additional comments. We are indeed aware of some recent research which suggests that Chinese aerosol emissions may have actually decreased more than thought. As to what the climate response to OC and SOx emissions really is, I agree that there’s a lot of remaining uncertainty. SOx emissions have gone down considerably over the last 30 years or so. As sensitive as they may be, their negative forcing is somewhat constrained by the observed cooling during the 1950-1980s. That cooling is not strong enough to be compatible with an outrageous SOx forcing. Hence if OC climate effects are indeed smaller than we think, TCR would presumably be lower rather than higher.

@MPassey (20): We argue that ECS cannot be constrained based on our analysis. While our prescribed TCR/ECS ratio of 0.55 (based on CMIP5) seems to work very well – associated with an ECS of 2.8K given our best TCR estimate is 1.57K – we cannot narrow down the IPCC uncertainty in a meaningful way. Our results do indicate that TCR is probably between 1.3 and 1.8K, hence ECS is between 2.3 and 3.3K, but then again the TCR/ECS ratio uncertainty needs to be added.
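
The arithmetic in this reply is simply ECS = TCR / (TCR/ECS ratio). A short sketch reproducing the quoted numbers; the ratio bounds of 0.5 and 0.6 used at the end are hypothetical values, chosen only to show how ratio uncertainty widens the range, and are not numbers from the paper.

```python
TCR_ECS_RATIO = 0.55  # CMIP5-based ratio quoted in the reply

def ecs_from_tcr(tcr, ratio=TCR_ECS_RATIO):
    """Equilibrium sensitivity (K) implied by a transient response (K)."""
    return tcr / ratio

best = ecs_from_tcr(1.57)                          # about 2.85 K
low, high = ecs_from_tcr(1.3), ecs_from_tcr(1.8)   # about 2.36 K and 3.27 K
# Hypothetical ratio range 0.5-0.6 to illustrate the extra spread:
wide_low, wide_high = ecs_from_tcr(1.3, 0.6), ecs_from_tcr(1.8, 0.5)  # about 2.17 K and 3.6 K
print(f"{best:.2f} K, {low:.2f}-{high:.2f} K, {wide_low:.2f}-{wide_high:.2f} K")
```

The same relation gives the 2.67 K in the earlier question (1.6 / 0.6).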

Jan at #2 laid down the show-stopper in climate variability: global dimming.

“But most worrying of all are the role of sulfate emissions. I now two global estimates on the amount of global cooling – one up to 1 °C global cooling and the other a cooling effect of about 0.5-1.1°C. This would mean that we would have without air pollution much more warming than the 1 °C we have now.”

John

@KarstenHaustein, thanks again for your answer, always wondered what the number would be.

In one study, the methane rise of 6.9 ppb is quantified in carbon as an annual rise of 25 million tonnes. Is it possible, as a rough calculation, to multiply this rise by 108 to get a rough figure for the forcing?

Because this would mean that the 25 million tonnes of annual methane increase have about the same impact as a rise in CO2 of 2.7 Gt of carbon (25 × 108), and that would be huge…

Because this would also mean that the concentration of methane in our atmosphere of about 1865 ppb would amount to a rough forcing of about 201 ppm of CO2 (1865 ppb × 108), but this number would be substantially higher than the forcing that is attributed to methane.

So I guess it’s not that simple. I just wonder how to get a feeling for what the annual increase in methane means in direct forcing.

The best

Jan

This strikes me as a major step forward.

But this is perplexing: Adding an El Niño signal, we virtually explain the entire observed record (Figure 1).

…

On the contrary, our findings confirm that the fraction of human-induced warming since the pre-industrial era is bascially all of it.

In the paper there is this:

"In our assessment of potential contributions from Atlantic and Pacific multidecadal variability, we demonstrate that with the exception of prolonged periods of El Niño or La Niña preponderance, there is little room for internal unforced ocean variability beyond subdecadal timescales, which is particularly true for the NA region."

What I am not finding in the paper is an explicit presentation of an El Niño signal.

Is the El Niño signal part of human-induced warming?

Another good step forward is this paper, linked at Judith Curry’s blog Climate Etc: D. Kim et al., "Inference related to common breaks in a multivariate system with joined segmented trends with applications to global and hemispheric temperatures", Journal of Econometrics (2019), https://doi.org/10.1016/j.jeconom.2019.05.008

Largely concordant with the Haustein et al paper (at least not discordant), and with a specific claim about the “hiatus”.

In your opinion, does the work of Haustein support the occurrence of the “hiatus”?

About the “hiatus” I found this:

"The positive residual after 2000 (also visible in the NHem residual in Fig. 7a) is perhaps more interesting as it relates to the infamously dubbed 'hiatus' period in the wake of the strong El Niño in 1997/98. While primarily caused by a clustering of La Niña events around 2010 (Kosaka and Xie 2013; England et al. 2014; Schurer et al. 2015; Dong and McPhaden 2017), upon closer inspection another feature stands out. There has been a succession of anomalously cold years between 2010-2013, which is exclusively linked with boreal winter. More precise, this period is linked with extremely cold Eurasian winters (Cohen et al. 2012) which may or may not have been assisted by forced atmospheric circulation changes in response to declining sea ice (Tang et al. 2013; Cohen et al. 2014; Overland 2016; Francis 2017; Hay et al. 2018). But other than that, SHem (Fig. 7c) and Ocean (Fig. 7f) residuals are inconspicuously smooth and only diverge before 1900 as outlined above already. Overall, our results support previous work that has shown that using updated external radiative forcing (Huber and Knutti 2014; Schmidt et al. 2014) and accounting for ENSO-related variability explains the so-called 'hiatus'."

(Accepted for publication in Journal of Climate. DOI 10.1175/JCLI-D-18-0555.1.)

I would infer that if the “so called hiatus” has been [explained], then it was indeed a real occurrence.

Did something cause the clustering of La Niña events?

“In order to address this issue, we would like to point out that not a single parameter depends on regression. TCR and ECS span a wide range of accepted values and all we did is to estimate TCR based on the best fit of the final response model result with observations.”

Your reported estimate resulted from an iterative procedure that used a published range of values in a grid, and was the result that produced the “best” fit. What exactly was the fit criterion? That this iterative procedure is not a computational linear or nonlinear regression hardly matters.
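To make the “fit criterion” question concrete, here is a toy sketch, not the procedure used by Haustein et al. It assumes the model’s forced response scales linearly with TCR, so choosing TCR amounts to choosing a scale factor on a reference model run. With a sum-of-squared-errors criterion, a brute-force grid search over that factor lands on the same value as the closed-form least-squares (regression-through-origin) slope. The model curve, noise level and “true” factor of 0.9 are all made-up illustrative values.

```python
import math
import random

def best_scale_grid(model, obs, grid):
    """Grid value s that minimises the sum of squared errors sum((obs - s*model)**2)."""
    return min(grid, key=lambda s: sum((o - s * m) ** 2 for m, o in zip(model, obs)))

def ols_slope_through_origin(model, obs):
    """Closed-form least-squares slope of obs regressed on model, no intercept."""
    return sum(m * o for m, o in zip(model, obs)) / sum(m * m for m in model)

# Hypothetical toy data: a response-model run for a reference TCR, and
# "observations" equal to 0.9 x that run plus white noise.
rng = random.Random(0)
years = range(1850, 2019)
model = [0.008 * (y - 1850) + 0.1 * math.sin((y - 1850) / 5.0) for y in years]
obs = [0.9 * m + rng.gauss(0.0, 0.1) for m in model]

grid = [i / 1000 for i in range(500, 1501)]  # candidate scale factors 0.5 ... 1.5
grid_fit = best_scale_grid(model, obs, grid)
ols_fit = ols_slope_through_origin(model, obs)
print(f"grid search: {grid_fit:.3f}   regression slope: {ols_fit:.4f}")
```

Because the squared-error criterion is a parabola in the scale factor, the grid minimum is simply the grid point nearest the regression slope; whether one calls this a regression or a calibration, the two routes are mathematically the same estimate.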

“I would infer that if the “so called hiatus” has been [explained], then it was indeed a real occurrence.”

Anyone trained in science and in reading scientific literature would infer differently. This is a statement that other factors are operating, not the so-called one. It’s essentially hiatus denial…a denial which is correct in this case since there is no evidence for a hiatus except incompetent statistical “reasoning”.

Matt Marler, substitute “fluctuation” for “hiatus” and I would agree. Scientists study both signal and noise. This is noise.

30: jgnfld: Anyone trained in science and in reading scientific literature would infer differently. This is a statement that other factors are operating, not the so-called one

If the “badly named” hiatus did not occur, can it be explained?

@32

Flip a coin 100 times. You will very (statistically) likely see 1 or more “statistically significant” (p <= .03) runs of 5 or more heads or tails.

Except these runs aren't statistically significant in the least, they are completely expectable.

In point of fact, this is so expectable that I (and, I'm sure, many others here) routinely use it as a Day 1 classroom demonstration of untrained statistical reasoning in Stats 101*.

There is an "explanation" for the runs, it's just that the explanation is we expect noise to produce such short term "events" (actually nonevents) like this.

The "hiatus" is explained in the same way. You see noise and think there must be some explanation. It's a well known cognitive illusion related to seeing actual shapes in clouds.

—–

*1. Split class in two.

2. Have students in one group flip actual coins 100 times and record the results.

3. Have students in the other group produce a "random" series of flips mentally.

4. Have students collect and shuffle the sheets.

5. Take sheets and separate them into piles of those containing "significant" runs of 5 or more in one pile and those not containing such runs into the other (put smartass papers with runs of 20 or more in pile #2).

Your sorting will recover which students flipped physical coins and which did not with a high degree of accuracy.

@32 (cont.)

While the problem can be worked out analytically, the following few lines of R code will allow you to quickly and easily explore this effect for various run lengths using a Monte Carlo approach.

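```r
# Monte Carlo estimate of how often a run of a given length (or longer)
# appears somewhere in 100 fair coin flips.
# NOTE: nnzero() is in package Matrix; sum(x > 0) would do the same job.
require(Matrix)

onerun = function(RunLength) {
  # NOTE: the rle() function encodes a series as run lengths
  Y = rle(sample(1:2, 100, replace = TRUE))
  # number of runs of at least RunLength in this series
  sum(Y$lengths >= RunLength)
}

# NOTE: replicate() runs the simulation n times;
# nnzero() counts the nonzero entries, i.e. series with at least one such run.
nnzero(replicate(100000, onerun(5)))  # should be ~97180
nnzero(replicate(100000, onerun(10))) # should be ~8710
```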

You will see you can expect to see a run of 5 or more heads or tails ~97% of the time.

Note that even the supposedly “highly significant” event of a run of 10 heads or tails in a row (p <= .001) is reasonably common somewhere within such a series as you EXPECT to see it ~9% of the time somewhere across a series of 100 flips.
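Since the problem can also be worked out analytically, here is a minimal sketch of one exact approach (a small dynamic program over the length of the current run), written in Python rather than R. It tracks the probability that no run of length k has appeared yet, split by the length of the run in progress; the function name is my own.

```python
def prob_run_at_least(n_flips: int, k: int) -> float:
    """Exact probability that n_flips fair coin flips contain a run of
    k or more identical faces (heads or tails)."""
    if n_flips < 1:
        return 0.0
    if k <= 1:
        return 1.0
    # alive[j]: probability that no run of length k has appeared yet and the
    # current run (of whichever face came up last) has length j + 1.
    alive = [0.0] * (k - 1)
    alive[0] = 1.0  # after the first flip, the current run has length 1
    for _ in range(n_flips - 1):
        nxt = [0.0] * (k - 1)
        nxt[0] = 0.5 * sum(alive)  # next flip differs: the run restarts at length 1
        for j in range(k - 2):
            nxt[j + 1] = 0.5 * alive[j]  # next flip matches: the run grows by 1
        # 0.5 * alive[k - 2] would reach length k; that mass is dropped (a run occurred)
        alive = nxt
    return 1.0 - sum(alive)

for n, k in [(100, 5), (100, 10)]:
    print(n, k, round(prob_run_at_least(n, k), 4))
```

The exact values should agree with the Monte Carlo counts above to within simulation noise.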

This well-known effect is one of the main reasons why cherry-picking is bad practice when looking at any series. Our brains are constructed to "see" patterns, even where there are none. Math is more reliable.

Additionally, there is a second problem with the "hiatus": Annual temp values are positively autocorrelated. This means that values near to prior values are more likely than a white noise coin flip model would suggest. This of course makes runs even more likely. I have yet to see anyone asserting there was a pause actually control for this effect. They seem to universally use a cherrypicked white noise model.
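A quick way to see the autocorrelation point is to compare runs of same-signed residuals (e.g. consecutive years above or below a trend line) under white noise and under AR(1) noise. This is a toy Monte Carlo sketch: the 40-year window, the run length of 6, and the lag-1 autocorrelation of 0.6 are hypothetical illustrative choices, not estimates from any temperature dataset.

```python
import math
import random

def longest_same_sign_run(series):
    """Length of the longest stretch of consecutive values with the same sign."""
    longest = current = 1
    for prev, cur in zip(series, series[1:]):
        current = current + 1 if (cur > 0) == (prev > 0) else 1
        longest = max(longest, current)
    return longest

def ar1_series(n, phi, rng):
    """Stationary AR(1) noise x[t] = phi * x[t-1] + e[t], with e ~ N(0, 1)."""
    x = [rng.gauss(0.0, 1.0 / math.sqrt(1.0 - phi ** 2))]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x

def prob_long_run(n_years=40, k=6, phi=0.0, trials=5000, seed=1):
    """Monte Carlo probability that n_years of residuals contain a same-sign run >= k."""
    rng = random.Random(seed)
    hits = sum(longest_same_sign_run(ar1_series(n_years, phi, rng)) >= k
               for _ in range(trials))
    return hits / trials

white = prob_long_run(phi=0.0)  # coin-flip-like residuals
red = prob_long_run(phi=0.6)    # hypothetical lag-1 autocorrelation of 0.6
print(f"white noise: {white:.2f}   AR(1), phi=0.6: {red:.2f}")
```

With positive autocorrelation the same-sign runs become far more common, so a white-noise (coin-flip) benchmark already understates how ordinary such stretches are.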

Karsten #24

While the 1950-1980 cooling is smaller in the global record, the Northern Hemisphere temperature dataset shows stronger cooling (the NH being where the aerosols had more impact). Please see the reference graph of SO2 from “Anthropogenic Sulfur Dioxide Emissions: 1850-2005” (Smith, S.J. et al.) and Northern Hemisphere GISS temperatures through 2012.

What stands out is that after SO2 emissions peaked in 1975, Northern Hemisphere warming was much greater than the global average, indicating a much greater positive forcing that had been balanced by the SO2 pulse.

Link to SO2 vs. NH temps graph here: https://forum.arctic-sea-ice.net/index.php/topic,1384.msg208483.html#msg208483

Follow up to post above.

Here is a comparison of NH SO2 and NH temperature against Southern Hemisphere SO2 and SH temperature.

The amount of Northern Hemisphere warming is roughly double that of the Southern Hemisphere, but in the 1990s the increase in SH SO2 starts to moderate Southern Hemisphere warming.

Link to SO2 NH and NH temp vs. SH SO2 and SH temp found here: https://forum.arctic-sea-ice.net/index.php/topic,1384.msg208486.html#msg208486

Marler said:

“Is the El Niño signal part of human-induced warming?”

You would think that a person like Marler who has been posting comments to Curry’s site for many years would have figured out that ENSO is a natural variation and is apparent in the proxy record going back centuries, well before any human-induced warming occurred.

33, jgnfld: The “hiatus” is explained in the same way. You see noise and think there must be some explanation.

It was the paper by Haustein et al that provided the explanation of which I wrote.

You misunderstood or evaded my question: taking the coin analogy, if the coin did not come up heads, does it make sense to explain how it came up heads?

37, Paul Pukite: You would think that a person like Marler who has been posting comments to Curry’s site for many years would have figured out that ENSO is a natural variation and is apparent in the proxy record going back centuries, well before any human-induced warming occurred.

My question was directed to the people who wrote this, and the other references to a signal in El Niño and La Niña: “While primarily caused by a clustering of La Niña events around 2010 (Kosaka and Xie 2013; England et al. 2014; Schurer et al. 2015; Dong and McPhaden 2017), upon closer inspection another feature stands out. There has been a succession of anomalously cold years between 2010-2013, which is exclusively linked with boreal winter.”

and

“we demonstrate that with the exception of prolonged periods of El Niño or La Niña preponderance,”

in

“A limited role for unforced internal variability in 20th century warming.”

If you are correct, they are dropping some of the natural variation from the natural variation, before concluding that natural variation played a limited role.

@38

It’s possible I’m wrong, but you appear to be looking for an explanation of a specific instantiation produced by a random (noise) process. But by definition noise has no observable/measurable causal/correlative physical reason within any particular study paradigm. If there were such it wouldn’t BE noise. It would be signal.

If a coin does not come up heads, yes, one may quite legitimately ask whether a random process is a sufficient explanation for that observation. It usually is so long as the coin is fair and the flipping is done sufficiently well that the inherent chaos of flipping is well modeled by a random process.

If I’m missing your point I apologize.

Marler said:

“If you are correct, they are dropping some of the natural variation from the natural variation, before concluding that natural variation played a limited role.”

Karsten Haustein, the author of this post, knows well that El Nino/La Nina is a natural variation. He is also aware of our model of ENSO, which involves a solution of Laplace’s tidal equations (i.e. a GCM formulation), correlates well with the data, and shows no AGW signal … as of yet.

@Matthew: No hiatus whatsoever. Just a “fluke” which has been explained in all possible detail. Re best-fit for TCR: We used simple linear regression between obs and model. No tuning. No calibration. Just based on the regression slope.

@Jai: That’s right. The NH (or Land for that matter) warming was accelerated from 1975 onwards due to global brightening. Especially over Eurasia (except South Asia).

In related news: I’ve just updated the response model with AR6 direct aerosol forcing and compared it to the updated version of Cowtan/Way using HadSST4. Turns out that I have to reduce the total effective aerosol forcing (by virtue of adjusting the remaining indirect aerosol forcing contribution down) even further than suggested in Forest (2018) to match the observed southern hemisphere ocean warming. An effective aerosol forcing of only -0.6W/m2 appears to be the most plausible scenario now. The remaining aerosol “warming” would only be ~0.3°C. TCR would be slightly reduced to 1.5K. If Benestad et al (2019) is correct, TCR would stay at around 1.6K. In contrast, I don’t think it would affect the aerosol forcing estimate. I need to stress that this result is purely based on model tuning of the aerosol forcing. There isn’t much work which suggests such low aerosol forcing apart from the recently critiqued work of Bjorn Stevens. In fact, AR6 proposes a considerable increase in the indirect forcing (as high as -0.9W/m2). Yet, our simple tuning exercise suggests that such a high aerosol forcing scenario is incompatible with observations. I for one tend to think that it is extremely unlikely to be as high as AR6 currently proposes (-1.1W/m2 total effective aerosol forcing).

42 Karsten Haustein: @Matthew: No hiatus whatsoever. Just a “fluke” which has been explained in all possible detail. Re best-fit for TCR: We used simple linear regression between obs and model. No tuning. No calibration. Just based on the regression slope.

I asked and you answered, so thank you. So you call “it” a “fluke” instead of a “hiatus”; that implies to me that “it” happened. Your explanation and quantification, which I called a “step forward” (as with the Kim et al paper I referenced), show that the “fluke” appeared as the particular result of processes that themselves had never been interrupted, only changed a bit during that interval. “It” was not a “hiatus” in the processes, but a “hiatus” in surface temperature increase.

How you conclude that “something based on a regression slope” is not a calibration or tuning is a mystery to me. That is how measurement instruments are calibrated, for instance HPLC for measuring the concentrations of blood constituents.

Let me introduce myself properly:

https://www.researchgate.net/profile/Matthew_Marler

I hope to read more of your work.

Matthew Marler,

Ferchrissake, dude. Have you never heard of fluctuations? Yes, there was a period of a few years where the temperature did not increase at the same breakneck speed that it has exhibited over the past 40 years. Because this occurred after a huge-ass El Nino, there were some charlatans and morons who made the mistake of thinking this had some relevance to the question of whether we are wrecking our climate with greenhouse gas emissions. It doesn’t.

Shall we call it a fluketuation?

@43

Calling an insignificant run a “fluke” implies random variation is a sufficient explanation. There is no “it” requiring any further explanation. That is the case here. NO one doing statistics properly by controlling for multiple comparisons and (or even or) autocorrelation has ever shown differently.

Calling an insignificant run, say of even 9 heads in a 100 coin flip experiment, a “tails hiatus” implies–to many if not most language users anyway–there is some “it” requiring an explanation. There isn’t. It’s expectable about 8% of the time. It requires no further explanation at all.

Most deniers wish to firmly imply that a “hiatus” in the surface temperature increase most definitely implies a hiatus in the processes of warming. Or that there is much doubt about them. That is the whole point of trumpeting said “hiatus” through the deniosphere for so many years now.

Not sure what your purposes are. You still seem to be thinking there is an “it” when you say “[the] processes…had never been interrupted, only changed a bit during that interval”. The point is there is no evidence there was any such change in any process at all. The processes, operating without even one bit of change, can easily throw this result out from time to time. That happens any and every time the observed residual errors are an order of magnitude greater than the slope of the trend. Sometimes God really does play dice with things. Or at least chaotic processes make it appear so, even in principle.

jgn, #45–

I agree with the thrust of that.

But some researchers are going to be curious about how that fluctuation occurred. Yes, it was statistically expectable, and thus has no evidentiary weight whatever (ex post facto, anyway) in terms of the actual trend. But some folks are going to be looking at it from a mechanistic point of view and wondering specifically what gives rise to this and other fluctuations. And it’s tough to say that that is somehow illegitimate–even though some *other* folks like to misuse such inquiry to muddy the waters on said warming trend.

After all, one can imagine someone investigating the mechanics of coin tosses, right? Say, with the aim of ensuring that coins used in athletic contests (on the outcomes of which considerable sums of money may be riding, in various ways) are designed to be as fair as they possibly can be?

@46

a. Looking for further signals within noise can be reasonable sometimes, true. But only up to a point, especially if the data are noisy in the first place. Coming from a stats background, I am not much bothered by the existence of an unexplained random error component. It does bother others, as I think we are seeing here (including possibly you?). However, it is not at all clear that a completely mechanistic explanation will ever be possible, even in principle, when chaotic variables are involved.

I am not physicist enough to judge how much mechanistic signal is left in the various temp series so I cannot comment on the legitimacy of doing so except to point out that it may or may not be and I don’t know which it is. I have seen discussions stating that model runs consistently produce “hiatuses” without building in any additional mechanistic variable to produce such sequences. That suggests to me that there may not be a whole lot of room left in which to find more signal.

b. There is a reasonably famous study of coin flips by Diaconis, Holmes, and Montgomery, 2004 https://statweb.stanford.edu/~susan/papers/headswithJ.pdf (a long press release version with less math is at https://news.stanford.edu/pr/2004/diaconis-69.html). They actually theorized and then measured a consistent bias of 51%-49% in favor of the side that is face up when the flip is performed. Additionally, they built a machine which could reliably deliver the same flips repeatedly by reducing noise all through the system (including the landing surface as well as the launcher, as I remember).

Diaconis, who is also a magician, even trained himself to produce a chosen coin flip result nearly 100% of the time by working to standardize his delivery! So yes, sometimes mechanistic explanations work…but note that he made every possible effort to reduce noise to near zero, which is something unlikely to happen in the much, much larger and much more coarsely measured dynamical system which is the Earth’s climate.

c. The Super Bowl coin flip history (https://www.docsports.com/super-bowl-coin-toss-history.html) shows a “significant” run of 5 heads from 2009-2013 (p <= .03). This is not surprising in the least, since the odds of seeing a run of 5 (heads or tails) in 53 tosses are ~84%, and even a run of 5 heads specifically is better than an even bet. Given that grass/artificial turf is a very chaotic surface, I would guess it is highly unlikely the referee could use Diaconis' technique.
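The Super Bowl odds can be checked with a quick Monte Carlo sketch like the one below, which estimates both the chance of a run of 5 of either face and of a run of 5 heads specifically in 53 fair tosses. It assumes perfectly fair, independent tosses; the trial count and seed are arbitrary.

```python
import random

def has_run(flips, k, face=None):
    """True if flips contains k or more identical consecutive results
    (restricted to runs of `face` if one is given)."""
    current, prev = 0, None
    for f in flips:
        current = current + 1 if f == prev else 1
        prev = f
        if current >= k and (face is None or f == face):
            return True
    return False

def estimate(n_tosses=53, k=5, face=None, trials=10000, seed=2019):
    """Monte Carlo probability of at least one qualifying run in n_tosses fair tosses."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        flips = [rng.choice("HT") for _ in range(n_tosses)]
        hits += has_run(flips, k, face)
    return hits / trials

either = estimate()
heads_only = estimate(face="H")
print(f"run of 5, either face: {either:.2f}   run of 5 heads: {heads_only:.2f}")
```

Note that the heads-only probability is not simply half of the either-face one, because a long series can contain both a heads run and a tails run.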

d. How much chaos and randomness are related is a neat point. This is discussed many places. One quickie starting place is section 5 "Randomness Without Chance" in the Stanford Encyclopedia of Philosophy entry "Chance versus Randomness" https://plato.stanford.edu/entries/chance-randomness/#ChanRandDete

Anyway, this is the best job I can do giving you an answer to your various points/question. Perhaps someone more knowledgeable can add more if needed.

44, Ray Ladbury: Have you never heard of fluctuations?

Golly, that’s a tough one.

Did fluctuations produce the poorly named “hiatus”? Sure. Haustein et al. and Kim et al. both showed how. It had previously been shown in Science Magazine that the hiatus could have been predicted, although it had not in fact been predicted.

Does that imply that the hiatus did not in fact occur? Of course not. Was it a “fluke”, or did it result from known processes?

Up next: given the accuracy of the Science Mag post-prediction and the two explanations of the hiatus, now that all the processes are better known, what’s the prediction (or model, conditional on the not yet observed aerosols) for the next 20 years? Will the badly named hiatus resume, or seem to resume, after the most recent el nino is fully resolved? I am hoping for more accurate predictions than the pre 2000 predictions of the poorly named hiatus.

FWIW, I have not claimed anything about the repeatability of the fluke, only that it happened, whatever you decide to name it.

45, jgnfld: Calling an insignificant run, say of even 9 heads in a 100 coin flip experiment, a “tails hiatus” implies–to many if not most language users anyway–there is some “it” requiring an explanation. There isn’t. It’s expectable about 8% of the time. It requires no further explanation at all.

The important difference from coin flipping is the claim that the “hiatus fluke” has been explained.

Nope. The claim is no other explanation is necessary in the absence of any relevant hypothesis or contradictory data.

How does one explain the “significant” run of heads in Super Bowl coin tosses for 5 years? It is possible some force caused the run, true. But a completely sufficient explanation given present knowledge is it was not even remotely a fluke but rather chaotic factors operating in a completely expectable way. If one wants to call that event a “tails hiatus”, well I guess one can. But it’s certainly not the way I would employ the terminology.

I would suggest that in order to study this scientifically one needs to provide and test at least 2 things:

1. What is the proposed hiatus producing mechanism?

2. Why was said mechanism apparently only activated during that time and not continuously? Were it always operating, one would expect such episodes to be more common than expected by chance alone.

Speculation is fine, but at some point one needs a mechanism.

Lastly, what does regression lead one to expect after a seriously high local max (2-3 s.d. units above the trend depending on which series is examined) occurs such as happened in 1998?

Another bit of regression/chance at work: When Martinez hit 4 HRs in one game a couple of years ago and batted .459 for the week it was a wonderful achievement for a truly great hitter. But he finished the season batting .303 w/ 45 HRs which was nowhere near that game or weekly rate.
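The regression question can be illustrated with a toy simulation: take a steady linear trend plus white-noise residuals, find every year whose residual is at least 2.5 standard deviations above the trend, and fit a straight line to the 11 years starting at that spike. The trend (0.018 °C/yr), noise level (0.1 °C) and window length are hypothetical round numbers chosen for illustration, not fitted to any dataset.

```python
import random

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

def slopes_after_spikes(trend=0.018, sd=0.1, threshold_sd=2.5, window=11,
                        n_years=200_000, seed=42):
    """Trend fitted over `window` years starting at each year whose residual is
    at least threshold_sd standard deviations above an unchanging linear trend."""
    rng = random.Random(seed)
    resid = [rng.gauss(0.0, sd) for _ in range(n_years)]
    xs = list(range(window))
    slopes = []
    for t in range(n_years - window):
        if resid[t] >= threshold_sd * sd:
            ys = [trend * k + resid[t + k] for k in xs]  # the underlying trend never changes
            slopes.append(ols_slope(xs, ys))
    return slopes

s = slopes_after_spikes()
mean_slope = sum(s) / len(s)
print(f"true trend: 0.018   mean fitted trend starting at a spike: {mean_slope:.4f}")
```

Even though the underlying trend never changes, windows that start on a big spike show a much flatter trend on average, simply because the following years regress back toward the trend line.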