“The greenhouse effect is here.”

– Jim Hansen, 23rd June 1988, Senate Testimony

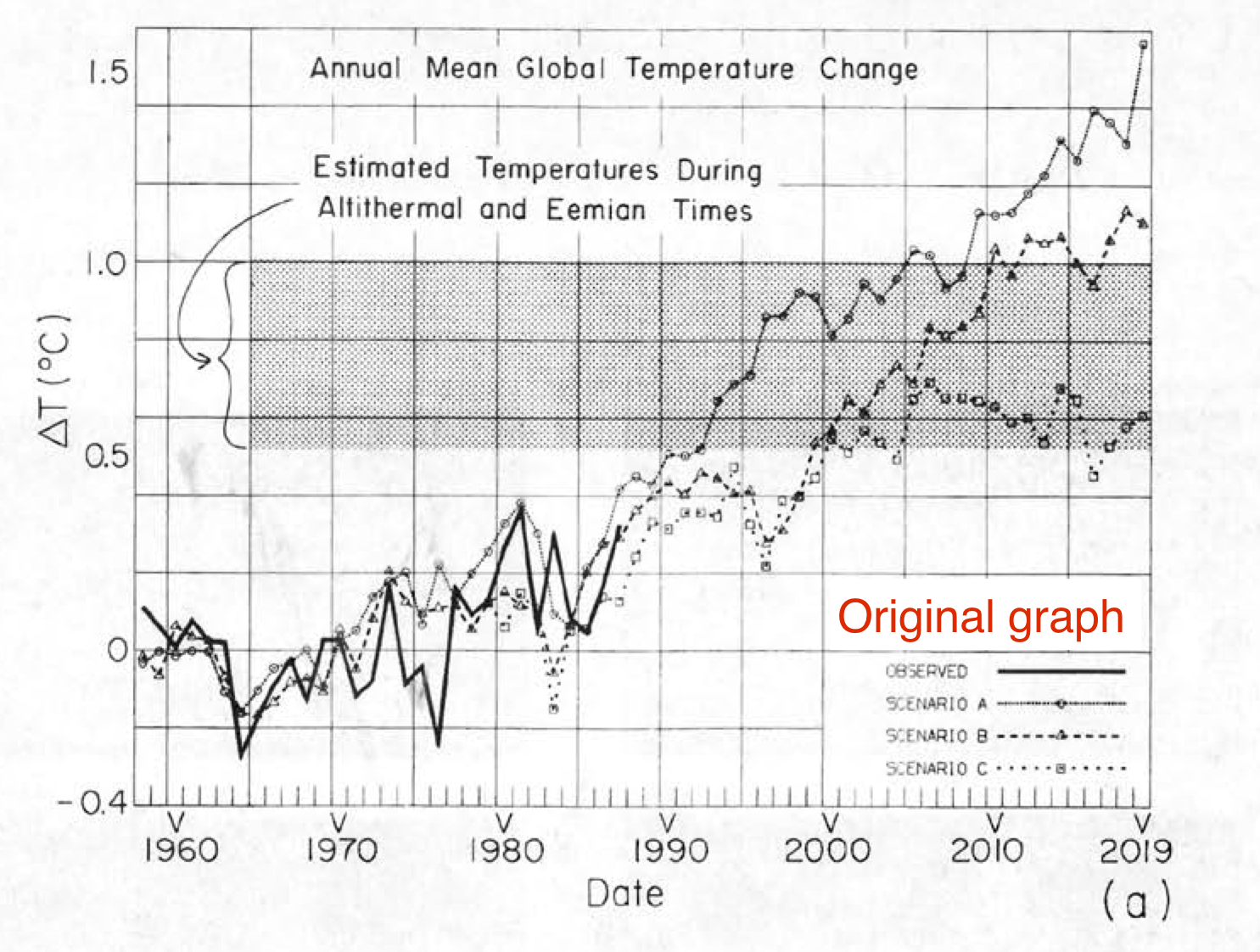

The first transient climate projections using GCMs are 30 years old this year, and they have stood up remarkably well.

We’ve looked at the skill in the Hansen et al. (1988) simulations before (back in 2008), and we said at the time that the simulations were skillful and that differences from observations would be clearer with a decade or two’s more data. Well, another decade has passed!

How should we go about assessing past projections? There have been updates to historical data (what we think really happened to concentrations, emissions etc.), none of the future scenarios (A, B, and C) were (of course) an exact match to what happened, and we now understand (and simulate) more of the complex drivers of change which were not included originally.

The easiest assessment is the crudest. What were the temperature trends predicted and what were the trends observed? The simulations were run in 1984 or so, and that seems a reasonable beginning date for a trend calculation through to the last full year available, 2017. The modeled changes were as follows:

- Scenario A: 0.33±0.03ºC/decade (95% CI)

- Scenario B: 0.28±0.03ºC/decade (95% CI)

- Scenario C: 0.16±0.03ºC/decade (95% CI)

The observed changes 1984-2017 are 0.19±0.03ºC/decade (GISTEMP), or 0.21±0.03ºC/decade (Cowtan and Way), lying between Scenario B and C, and notably smaller than Scenario A. Compared to 10 years ago, the uncertainties on the trends have halved, and so the different scenarios are more clearly distinguished. By this measure it is clear that the scenarios bracketed the reality (as they were designed to), but did not match it exactly. Can we say more by looking at the details of what was in the scenarios more specifically? Yes, we can.
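For readers who want to reproduce this kind of trend comparison, here is a minimal sketch of an ordinary least-squares trend with an approximate 95% interval. The function name `decadal_trend` is hypothetical, the series is synthetic (it is not GISTEMP or Cowtan and Way data), and the interval assumes independent residuals, which is a simplification; accounting for autocorrelation would widen it, and this is not necessarily how the uncertainties quoted above were computed.

```python
import math
import random


def decadal_trend(years, anomalies):
    """Return (trend, half_width) in degC/decade from an OLS fit.

    half_width is 1.96 standard errors of the slope, i.e. an approximate
    95% interval that assumes uncorrelated residuals (an assumption).
    """
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    slope = sxy / sxx
    # Sum of squared residuals around the fitted line.
    sse = sum(
        (y - mean_y - slope * (x - mean_x)) ** 2
        for x, y in zip(years, anomalies)
    )
    stderr = math.sqrt(sse / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * 1.96 * stderr


# Synthetic illustration only: a 0.2 degC/decade line plus Gaussian noise.
rng = random.Random(0)
years = list(range(1984, 2018))
synthetic = [0.02 * (y - 1984) + rng.gauss(0.0, 0.1) for y in years]

trend, half_width = decadal_trend(years, synthetic)
print(f"{trend:.2f} ± {half_width:.2f} degC/decade")
```

Swapping the synthetic list for real annual anomalies over 1984-2017 would give the corresponding observed or modeled trend under the same assumptions.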

This is what the inputs into the climate model were (CO2, N2O, CH4 and CFC amounts) compared to observations (through to 2014):

Estimates of CO2 growth in Scenarios A and B were quite good, but estimates of N2O and CH4 overshot what happened (estimates of global CH4 have been revised down since the 1980s). CFCs were similarly overestimated (except in scenario C which was surprisingly prescient!). Note that when scenarios were designed and started (in 1983), the Montreal Protocol had yet to be signed, and so anticipated growth in CFCs in Scenarios A and B was pessimistic. The additional CFC changes in Scenario A compared to Scenario B were intended to produce a maximum estimate of what other forcings (ozone pollution, other CFCs etc.) might have done.

But the model sees the net effect of all the trace gases (and whatever other effects are included, which in this case is mainly volcanoes). So what was the net forcing since 1984 in each scenario?

There are multiple ways of defining the forcings, and the exact value in any specific model is a function of the radiative transfer code and background climatology. Additionally, knowing exactly what the forcings in the real world have been is hard to do precisely. Nonetheless, these subtleties are small compared to the signal, and it’s clear that the forcings in Scenario A and B will have overshot the real world.

If we compare the H88 forcings since 1984 to an estimate of the total anthropogenic forcings calculated for the CMIP5 experiments (1984 through to 2012), the main conclusion is very clear – forcing in scenario A is almost a factor of two larger (and growing) than our best estimate of what happened, and scenario B overshoots by about 20-30%. By contrast, scenario C undershoots by about 40% (which gets worse over time). The slight differences that arise from the choice of forcing definition, from whether you take forcing efficacy into account, and from independent estimates of the effects of aerosols etc. are small. We can also ignore the natural forcings here (mostly volcanic), which have only a small effect over the longer term (Scenarios B and C had an “El Chichon”-like volcano go off in 1995).

The amount that scenario B overshoots the CMIP5 forcing is almost equal to the over-estimate of the CFC trends. Without that, it would have been spot on (the over-estimates of CH4 and N2O are balanced by missing anthropogenic forcings).

The model predictions were skillful

Predictive skill is defined as whether the model projection is better than you would have got assuming some reasonable null hypothesis. With respect to these projections, this was looked at by Hargreaves (2010) and can be updated here. The appropriate null hypothesis (which at the time would have been the most skillful over the historical record) would be a prediction of persistence of the 20 year mean, i.e. the 1964-1983 mean anomaly. Whether you look at the trends or annual mean data, this gives positive skill for all the model projections regardless of the observational dataset used, i.e. all scenarios gave better predictions than a forecast based on persistence.
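To make the idea of skill relative to a null forecast concrete, here is a small sketch using one common mean-squared-error form of a skill score. The exact formula used in Hargreaves (2010) may differ in detail, so treat the function `skill_score` and the numbers below as illustrative assumptions rather than the calculation behind the statement above. Any score of this kind is positive when the projection beats the null forecast and zero when it merely ties it.

```python
def skill_score(forecast, reference, observed):
    """Return 1 - MSE(forecast) / MSE(reference).

    Positive values mean the forecast beats the reference (null) forecast;
    1.0 is a perfect forecast and 0.0 is no better than the reference.
    """
    n = len(observed)
    mse_forecast = sum((f - o) ** 2 for f, o in zip(forecast, observed)) / n
    mse_reference = sum((r - o) ** 2 for r, o in zip(reference, observed)) / n
    return 1.0 - mse_forecast / mse_reference


# Made-up anomalies (degC) relative to a baseline mean, for illustration only.
observed = [0.1, 0.2, 0.3, 0.4]
projection = [0.15, 0.25, 0.35, 0.45]  # hypothetical model output
persistence = [0.0, 0.0, 0.0, 0.0]     # null forecast: baseline mean persists

print(skill_score(projection, persistence, observed))
```

The persistence list here plays the role of the 1964-1983 mean anomaly carried forward unchanged.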

What do these projections tell us about the real world?

Can we make an estimate of what the model would have done with the correct forcing? Yes. The trends don’t completely scale with the forcing but a reduction of 20-30% in the trends of Scenario B to match the estimated forcings from the real world would give a trend of 0.20-0.22ºC/decade – remarkably close to the observations. One might even ask how would the sensitivity of the model need to be changed to get the observed trend? The equilibrium climate sensitivity of the Hansen model was 4.2ºC for doubled CO2, and so you could infer that a model with a sensitivity of say, 3.6ºC, would likely have had a better match (assuming that the transient climate response scales with the equilibrium value which isn’t quite valid).
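The back-of-the-envelope scaling in that paragraph is easy to check. The sketch below takes the Scenario B trend of 0.28ºC/decade quoted earlier and reduces it by 20% and 30%, assuming the trend scales linearly with the forcing (which, as noted, is only approximately true). The helper name `scale_trend` is just for illustration.

```python
def scale_trend(trend, forcing_reduction):
    """Scale a trend linearly by a fractional reduction in forcing."""
    return trend * (1.0 - forcing_reduction)


scenario_b_trend = 0.28  # degC/decade, Scenario B trend quoted in the post

for reduction in (0.20, 0.30):
    scaled = scale_trend(scenario_b_trend, reduction)
    print(f"{reduction:.0%} lower forcing: {scaled:.2f} degC/decade")
```

The two results round to 0.22 and 0.20ºC/decade, which is the range quoted above.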

Hansen was correct to claim that greenhouse warming had been detected

In June 1988, at the Senate hearing linked above, Hansen stated clearly that he was 99% sure that we were already seeing the effects of anthropogenic global warming. This is a statement about the detection of climate change – had the predicted effect ‘come out of the noise’ of internal variability and other factors? And with what confidence?

In retrospect, we can examine this issue more carefully. By estimating the response we would see in the global means from just natural forcings, and including a measure of internal variability, we should be able to see when the global warming signal emerged.

The shading in the figure (showing results from the CMIP5 GISS ModelE2) is a 95% confidence interval around the “all natural forcings” simulations. From this it’s easy to see that temperatures in 1988 (and indeed, since about 1978) fall easily outside the uncertainty bands. 99% confidence is associated with data more than ~2.6 standard deviations outside of the expected range, and even if you think that the internal variability is underestimated in this figure (double it to be conservative), the temperatures in any year past 1985 are more than 3 s.d. above the “natural” expectation. That is surely enough clarity to retrospectively support Hansen’s claim.
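The link between a confidence level and a number of standard deviations can be checked with the Python standard library. This sketch assumes the “natural” variability is Gaussian and that the confidence level is two-sided; both are simplifying assumptions made for illustration, and the helper name `two_sided_z` is hypothetical.

```python
from statistics import NormalDist


def two_sided_z(confidence):
    """Number of standard deviations enclosing `confidence` of a Gaussian."""
    return NormalDist().inv_cdf(0.5 + confidence / 2.0)


def fraction_beyond(z):
    """Two-sided probability of falling more than z s.d. from the mean."""
    return 2.0 * (1.0 - NormalDist().cdf(z))


print(f"95% -> {two_sided_z(0.95):.2f} s.d.")
print(f"99% -> {two_sided_z(0.99):.2f} s.d.")
print(f"chance of exceeding 3 s.d.: {fraction_beyond(3.0):.4f}")
```

Under these assumptions the 99% level comes out at about 2.6 standard deviations, and a departure of more than 3 s.d. is rarer still.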

At the time, however, the claim was more controversial; modeling was in its early stages, estimates of internal variability and the relevant forcings were poorer, and so Hansen was going out on a little bit of a limb based on his understanding and insight into the problem. But he was right.

Misrepresentations and lies

Over the years, many people have misrepresented what was predicted and what could have been expected. Most (in)famously, Pat Michaels testified in Congress about climate changes and claimed that the predictions were wrong by 300% (!) – but his conclusion was drawn from a doctored graph (Cato Institute version) of the predictions where he erased the lower two scenarios:

Undoubtedly there will be claims this week that Scenario A was the most accurate projection of the forcings [Narrator: It was not]. Or they will show only the CO2 projection (and ignore the other factors). Similarly, someone will claim that the projections have been “falsified” because the temperature trends in Scenario B are statistically distinguishable from those in the real world. But this sleight of hand is trying to conflate a very specific set of hypotheses (the forcings combined with the model used) which no-one expects (or expected) to perfectly match reality, with the much more robust and valid prediction that the trajectory of greenhouse gases would lead to substantive warming by now – as indeed it has.

References

- J. Hansen, I. Fung, A. Lacis, D. Rind, S. Lebedeff, R. Ruedy, G. Russell, and P. Stone, "Global climate changes as forecast by Goddard Institute for Space Studies three‐dimensional model", Journal of Geophysical Research: Atmospheres, vol. 93, pp. 9341-9364, 1988. http://dx.doi.org/10.1029/JD093iD08p09341

- J.C. Hargreaves, "Skill and uncertainty in climate models", WIREs Climate Change, vol. 1, pp. 556-564, 2010. http://dx.doi.org/10.1002/wcc.58

The second graphic from below (Observed vs. Natural drivers, green line, red line) misses the last years for the natural drivers. It also lacks the El Nino effect, which is also a natural phenomenon.

[Response: It uses CMIP5 data (which only went to 2005), and the ENSO variability is supposed to be in the spread accounting for the internal variability. – gavin]

Nice video:

https://www.yaleclimateconnections.org/2018/06/judgment-on-hansens-88-climate-testimony-he-was-right/

Judgment on Hansen’s ’88 climate testimony: ‘He was right’ Climate scientists’ consensus: James Hansen ‘got it right’ in congressional global warming and human causation testimony 30 years ago this week. (Peter Sinclair, Wed June 20, 2018)

A nice lesson for all of us:

The ‘economic’ models projecting future emissions were wrong. Some real emissions (CO2) followed the A/B scenario, but others were more in line with C (CFC12).

However the climate model of Hansen was spot-on.

If there is one dominant factor in the uncertainty of climate projections, it’s our own behavior (as we have known for a long time).

PS: Scenario C was not a realistic ‘economic’ scenario but just an assumption that all human impact would stop after the year 2000 (the ultimate impact of the millennium bug??)

A very clear and informative summary of both the predictions of the recent past and the drivers and consequences of their impact that have resulted in our present climate. Given the apparently strong role that orbital drivers have played in the past 800,000 years, I would be interested in learning the predictions pertinent to the next 20,000 years given (1) that current drivers and their trajectories remain as they are now or predicted to be in the future (without any human attempts to alter them) and (2) that all drivers impacted by human activities are curtailed or at least not further enhanced.

As an aside, it also seems to me that as long as orbital drivers are changing continuously (over long time periods) it remains difficult to determine the equilibrium climate sensitivity (“S”) to carbon dioxide.

Nature Vol 448 30 August 2007

CORRESPONDENCE

Climate: Sawyer predicted rate of warming in 1972

SIR — Thirty-five years ago this week, Nature published a paper titled ‘Man-made carbon dioxide and the “greenhouse” effect’ by the eminent atmospheric scientist J. S. Sawyer (Nature 239, 23–26; 1972). In four pages Sawyer summarized what was known about the role of carbon dioxide in enhancing the natural greenhouse effect, and made a remarkable prediction of the warming expected at the end of the twentieth century. He concluded that the 25% increase in atmospheric carbon dioxide predicted to occur by 2000 corresponded to an increase of 0.6 °C in world temperature.

In fact the global surface temperature rose about 0.5 °C between the early 1970s and 2000. Considering that global temperatures had, if anything, been falling in the decades leading up to the early 1970s, Sawyer’s prediction of a reversal of this trend, and of the correct magnitude of the warming, is perhaps the most remarkable long-range forecast ever made.

Sawyer’s review built on the work of many other scientists, including John Tyndall’s in the nineteenth century (see, for example, J. Tyndall Philos. Mag. 22, 169–194 and 273–285; 1861) and Guy Callendar’s in the mid-twentieth (for example, G. S. Callendar, Weather 4, 310–314; 1949). But the anniversary of his paper is a reminder that, far from being a modern preoccupation, the effects of carbon dioxide on the global climate have been recognized for many decades.

Today, improved data, models and analyses allow discussion of possible changes in numerous meteorological variables aside from those Sawyer described. Hosting such discussions, the four volumes of the Intergovernmental Panel on Climate Change 2007 assessment run to several thousand pages, with more than 400 authors and about 2,500 reviewers. Despite huge efforts, and advances in the science, the scientific consensus on the amount of global warming expected from increasing atmospheric carbon dioxide concentrations has changed little from that in Sawyer’s time.

Neville Nicholls

School of Geography and Environmental Science,

Monash University, Victoria 3800, Australia

Remarkable, the agreement between what is measured and Hansen’s 1988 model is within 3 sigma (95% confidence level). This attests to the scientific quality of the model assumptions and underlying climate change physics and chemistry involved in the processes. Well done!

On cue, the Wall Street Journal provides the “alternative facts” for this anniversary, care of Pat Michaels and Ryan Maue of the Cato Institute. https://www.wsj.com/articles/thirty-years-on-how-well-do-global-warming-predictions-stand-up-1529623442?cx_testId=0&cx_testVariant=cx_1&cx_artPos=0#cxrecs_s

This summary is very helpful. I would be interested in reasons for the much slower increase in methane emissions.

Hansen is a giant, including in his activism. He recently used the F word in a public statement, expressing his frustration- and grief. Let’s hope that other scientists also begin to show emotions, or do whatever it takes to communicate our climate emergency to the general public. If Americans knew what you and Hansen do, our country would be much farther along in reducing emissions, including from land use. As the song said, the hour is getting late.

If I claimed to be an authority in a given field, and presented my information to a congressional panel, and in that presentation I provided 3 predictions of the future, an “if we change nothing” accelerating model, a “most plausible” continued growth model, and a “highly unlikely” third example of lower effect, and 30 years later 2/3 of my predictions were proven to be positively wrong, and the remaining 1/3 that came close I originally claimed to be the most unlikely, would my predictions really warrant a “skillful” praise from anyone with a shred of integrity? This article attempts to blow smoke up Hansen’s backside. The specifically chosen descriptions of his predictions are intended to wash away the fact that he was almost entirely wrong in his predictions, and tries to emphasize that one of his predictions came close, so that justifies the hysteria that we’ve enjoyed over these past 30 years.

The last line is probably the best example of this washing. The use of the “substantive” descriptor is supposed to bring up thoughts of accuracy. What it actually means is “it is real”. So the descriptor could still be used even if the increase was .00001 degrees. In this context, it is meaningless. It is used to sound like it’s a “substantial” warming, but it isn’t, so a close word is used to fake the implication.

When all is said and done, we have little to worry about and Hansen’s “skillfulness” is questionable at best.

[Response: Words actually have definitions. If the increase since 1984 had been only 0.00001 degrees, the projections would not have been skillful with respect to persistence. But please, go ahead, show us your calculation to show which projections have skill with whatever definition you prefer. – gavin]

Can you please comment on this analysis of the same claims by James H, in the Wall Street Journal, that came to the opposite conclusion? I teach information literacy, and would like to show students how to assess and critique the methods in both so they can come to their own conclusions:

Here is the link: https://www.wsj.com/articles/thirty-years-on-how-well-do-global-warming-predictions-stand-up-1529623442?mod=searchresults&page=1&pos=3&ns=prod/accounts-wsj

Ad 1. “[Response: It uses CMIP5 data (which only went to 2005), and the ENSO variability is supposed to be in the spread accounting for the internal variability. – gavin]”

WR: I cannot find the big 1998 El Nino in the spread as shown in the graphic. Besides this, the effects of El Nino events are important enough to give them their own line (like Volcanic, Solar and Orbital).

Isn’t this graphic misrepresenting (by its omissions) the factual situation up to now? Suggesting a much smaller role for all natural variations than there is in reality: known and already accepted natural variations (also the big recent 2015-2016 El Nino) plus the unknown and/or not yet accepted natural factors – which are also supposed to be represented in the spread as ‘uncertainty’.

I demand a line on the graph for La Niña.

I am a little confused about the final conclusion here. If you look at the ~2013ish observable temperature rises for 3 years, you see a drastic increase that was not modeled and looks like an anomaly, or at least deserves attention. In fact, if you remove those samples, it matches Scenario C very closely. The claim that “By contrast, scenario C undershoots by about 40% (which gets worse over time)” is questionable at best unless there is some explanation by the author for those data points and why that trend of data will keep it in line with Scenario B (prior to those data points, Scenario B overestimates it, while Scenario C is in line with the observables).

I am not saying there is not a reason for it nor that it is unexpected, it is just omitted in this presentation and is crucial to the conclusion.

#9 Tim:

There is such a thing as wisdom: knowledge plus good “instincts” or intuitions. Hansen understood climate science and he was right: human activity has caused Earth to warm significantly and we must reduce greenhouse emissions to avoid disruptive climate change.

Anyway. Whether he was right for the wrong reasons is irrelevant: he is right now. No informed person believes otherwise.

You think you know better. But no scientific institution or learned society agrees with you. Not in any branch of science or any place on the planet.

That means you are wrong. If you don’t understand that you are delusional.

Tim, #9–

Given that the three projections (not predictions) are mutually exclusive scenarios, it would be rather remarkable if more than one proved to be right.

And I’m afraid that the fact that I even have to point that out shows graphically that you are (or at least, can be) a rather silly fellow–so I’m really glad that you’re not claiming to be some sort of authority.

re:9. “When all is said and done, we have little to worry about and Hansen’s “skillfulness” is questionable at best.”

Oh yes we all know you know so much more than literally every professional climate society in the world and thousands of peer-reviewed scientists for over a century of climate change research. Yes you are the one that has refuted all the hard science. Because you have provided the data and analysis that show peer-review for 100 years is all wrong and you, yes you, are the one who are right. /s

(Gosh, what pure statistical and scientific arrogance and ignorance you spewed.)

James Hansen on June 18 was awarded one of eight Asian Nobels from the Tang Foundation in Taiwan. Comes with $1 million prize. Awards ceremony in Taipei on Sept 21. News not reported in USA or by Columbia PR office. Google for details

My comment?

Zeke Hausfather posted this fantastic graph via Twitter comparing temperatures vs. cumulative forcings for the scenarios and observed.

https://twitter.com/hausfath/status/1010240656004927491?s=12

#7 & 10 – The WSJ has a pay wall. I suspect others would like to see the whole article.

Hansen has also been vindicated with his prescriptions for GHG abatement. His ‘fee and dividend’ proposal is much like the revenue neutral carbon tax in Australia 2012-2014. Emissions declined in that period and since the repeal of the tax they have risen steadily. Many billions of $A were thrown at alternative approaches to emissions abatement with poor results.

It seems odd that climate activists praise Hansen but disdain his liking for nuclear power. We’re seeing the consequences of this as Germany struggles to phase out coal. Hansen seems to get it right more often than not.

And somewhere, a guy named “Tim” slapped his forehead and cried “D’oh!”

and either doubted his own wisdom, or that of someone he copied a comment from so he could rebunk it here at RC.

There’s a lot of wrong out there, and people do copy and paste that stuff in here like the cat proudly presenting a freshly-killed mouse.

“The Greenhouse Effect is here” says Jim Hansen, 23 June 1988.

The question you have to ask yourself …where was it before then?

Nick Stokes has indicated that a comparison to Ts might be most appropriate, because that’s what Hansen (apparently?) had available at the time. If you do that, you get above scenario B:

https://moyhu.blogspot.com/2018/06/hansens-1988-predictions-30-year.html

[Response: I don’t think that is right. The model projections are the true global mean in those simulations, and if we had worse estimates of the real global means previously, that is just too bad. If this had been a statistical model that had been trained on the (poorer) estimate, Nick might have had a point, but that is not how this was put together. An appropriate adjustment to the GISTEMP and Cowtan&Way estimates would be to make a correction for ocean SST standing in for ocean SAT in those indices – but that is small on these timescales. – gavin]

Jim Hansen certainly deserves a Muldoon for his good works but, silence gives consent, and for more than thirty years he has allowed the press, and TV, to ride roughshod over the error bars of what he published under peer review.

Gavin’s good faith notwithstanding, one still has a duty to remind him that all three networks jumped at the opportunity to publicize, celebrate, and downright hype the worst case scenario at the expense of the retrospectively OK Scenario C.

History happens, and as the tree falls, so must it lie.

[Response: What the media chooses to focus on is not really under the control of any scientists (though granted there are things we can do to influence it), and none of that has any relevance to the scientific assessment of any projections. The numbers are what they are. – gavin]

#23 Mack

It was here, although that is neither here nor there, something not clear to people who are not all there.

Tim #9

You called them predictions. They were projections, not predictions. They were projections of the climate’s response to three different emissions scenarios. He was not trying to predict emissions.

Mack, #23– Building.

Johnno @ 21 says:

While Dr. Hansen has done many things that make him deserving of respect and praise, it doesn’t automatically follow that every opinion he may have in areas outside his field of expertise is supported by the most up to date facts. In the case of nuclear power generation it boils down to a rapidly changing economic and technological playing field, where multiple factors are coming together in a perfect storm to make nuclear, in its present configuration, unprofitable and, frankly, obsolete! Granted, there are hints of some technological developments in compact modular reactors that may help nuclear rise again from the ashes but it remains to be seen.

https://climatenexus.org/climate-news-archive/nuclear-energy-us-expensive-source-competing-cheap-gas-renewables/

Furthermore, the implications of radically disruptive technologies for grid management, such as Tesla’s recently deployed ‘Giant Battery’ down in Australia serve to underscore this point.

https://electrek.co/2018/05/11/tesla-giant-battery-australia-reduced-grid-service-cost/

So the bottom line for nuclear power generation is that it is an obsolete and uneconomic technology that currently requires ever increasing government and taxpayer subsidies to survive in the marketplace. So while Dr. Hansen is certainly entitled to his opinion, like everyone else, he may just be unaware of some of the more recent writing on the wall that portends nuclear’s ultimate demise, for purely economic reasons.

Hank, #22–

At least, we may hope so…

Following the evaluations of climate forecasts is even more like watching paint dry than following the month-to-month variations in Arctic and Antarctic ice extent and ice mass. I look forward to the 40-year evaluations of Hansen’s 1988 prediction. Right now we have an “end-spurt” in the temperature record, like spurts in previous years, some of which evened out and some of which portended level shifts in the global mean temperature.

Gavin 25:

“The numbers are what they are. ”

Yes, Gavin, but we remain at risk of a disturbing reality, with two sets of facts arising from the same set of numbers, one for doing science, that reflects the numbers as published, and another metafactual set edited with a view towards driving public perceptions.

The core problem of the Climate Wars, the elision of science and advertising, very much resembles that of the electoral cycle – the reduction of politics to unsound sound bites and duelling cartoonists.

re Man #23

Where was the greenhouse effect?-

look at the moon, look at earth, the main difference is because of the greenhouse effect.

If Hansen et al. said in Earth’s Energy Imbalance: Confirmation and Implications, in 2005, that increasing ocean heat content implied the expectation of additional global warming of about 0.6C, how do papers like Leach and Millar still cling to the notion that 1.5C is still achievable?

Cox estimates equilibrium climate sensitivity at 2.8C. Since the atmospheric concentration of CO2 at the end of April 2018, was 410.31ppm compared to the 1750 reference atmospheric composition of 278 ppm, doesn’t that mean that we are already looking at (2.8 * 410.31/576) or 1.99C.

Royce estimates the thermal inertia time scale associated with global warming is ≈ 32 years. So aren’t we looking at 2C by mid-century?

Hansen 2011 inferred the planetary energy imbalance is 0.58±0.15 W/m². Since the surface of the planet is 510.1 million km² this amounts to 296 terawatts of heat.

Microsoft has announced it is testing the feasibility of situating and cooling its servers in the ocean depths. What they don’t acknowledge is the fact that the same devices that direct heat away from the central processing units of their servers, heat pipes, which move heat through the phase changes of the working fluid they contain, can also direct the entire heat load of global warming to a depth of 1,000 meters where, in the tropics, a portion of the heat can be converted in turbines to vital energy.

Heat relocation of this kind would extend the current 32-year grace period with global warming to 250 years, at which time, given that the diffusion rate of ocean heat is 1 centimeter a year, the heat can be recycled.

Just as putting data centers at the bottom of the ocean would produce a slight warming of the deeper water so does OTEC. Currently the surface is warming at a rate of 0.2°C per decade. Moving this heat to a depth of 1000 meters, given the diffusion rate, this heat would be distributed through the water column over 250 years and thus would neither be a serious problem to the environment nor would it deplete the OTEC resource.

Since most of the heat of warming (93%) is accumulating in the ocean we can relocate about 274 terawatts of this by converting 21 terawatts to electrical energy and the residual 253 terawatts will be available in 250 years to produce additional work.

In fact, the heat of warming can be recycled 13 times constantly providing one and a half times more energy than we are currently deriving from fossil fuels, 13 times longer than the entire 250-year span of the fossil fuel era.

@29 FM you’re not comparing apples and oranges. The big Tesla battery can do fast response frequency correction, a problem created by the amount of intermittent power on the grid. It cannot supply massive amounts of power for hours at a stretch replacing coal and gas fired generation.

South Australia also has one of the highest retail electricity prices at 47c per kWh. It also has a quarter of the world’s easily mined uranium.

http://minerals.statedevelopment.sa.gov.au/invest/mineral_commodities/uranium

Bizarrely nuclear power is prohibited in Australia. When batteries actually reduce stationary emissions we’ll know it is the way ahead.

But if we had built nuclear reactors to replace coal plants continuously since 1988 we would be much better off.

Fred Magyar @ 29 — World wide nuclear power has a role to play and many nuclear power plants are under construction. But this is the wrong thread for a discussion of this matter. If you care for further exchange of commentary there is the Forced Responses thread for such purposes.

Hansen & Ramanathan share Tang Prize worth $1.3 million:

Two Scholars Awarded Tang Prize for Sounding the Alarm on Climate Change and Impact of Air Pollution

AP Press Release

2018 Jun 18

https://www.apnews.com/5b52d57f2dce89943e348f3cb3b6bee9

Re: “Misrepresentations and lies. Over the years, many people have misrepresented what was predicted and what could have been expected. Most (in)famously, Pat Michaels testified in Congress about climate changes and claimed that the predictions were wrong by 300% (!) – but his conclusion was drawn from a doctored graph (Cato Institute version) of the predictions where he erased the lower two scenarios:”

People are still doing that, even after your blogpost was published. They have no shame when it comes to intentionally misrepresenting the facts. For example, Roger Tallbloke:

https://twitter.com/RogTallbloke/status/1011136109915623424

Tim in #9 whacked the hornet’s nest and the hornets are PISSED! :)

On the issue of weather and CC, here’s a new book y’all may like – top 100 weather events of the past 4.567 x 10^9 years. Also, embedded in this article is a video of many scientists stating that Hansen was right.

https://www.wunderground.com/cat6/Top-100-Weather-and-Climate-Moments-Last-4567-Billion-Years

I’d better check this one out too so that I have a balanced perspective:

https://www.amazon.com/Human-Caused-Global-Warming-Ball-ebook/dp/B01LP5K0XK/ref=pd_rhf_se_s_cp_1_7?_encoding=UTF8&pd_rd_i=B01LP5K0XK&pd_rd_r=X9B1ZJP12FGJW9NZR957&pd_rd_w=e7nbq&pd_rd_wg=cFFxB&psc=1&refRID=X9B1ZJP12FGJW9NZR957

“In the first four months of operations of the Hornsdale Power Reserve (the official name of the Tesla big battery, owned and operated by Neoen), the frequency ancillary services prices went down by 90 per cent...”

Frequency services are provided automatically by hydro or thermal turbines, whether nuclear or fossil fueled, but not by wind turbines or PV panels. At the moment, South Australia is getting 1% of its power from wind, 3% from solar, 82% from gas, and 14% from Victorian coal. The Tesla battery has enough storage to power the state’s grid for about three minutes of average demand.

https://www.electricitymap.org/?wind=false&solar=false&page=country&countryCode=AUS-SA&remote=true

“Thirty Years Of Failed Climate Predictions.” Courtesy of my favorite climate realist, Tony Heller:

https://youtu.be/J2u_TIWPupw

Sorry to (once again) rain on your parade, folks. Unlike some other climate skeptics one could name, Heller backs up every assertion with tangible evidence.

Wesley Dingman #4 You might like to look at https://www.nature.com/articles/ngeo1358 : Determining the natural length of the current interglacial P. C. Tzedakis, J. E. T. Channell, D. A. Hodell, H. F. Kleiven & L. C. Skinner. Nature Geoscience volume 5, pages 138–141 (2012). This argues that the current interglacial would terminate within the next 1500 years if (and only if) CO2 were to fall below 250 ppm, long term. This seems unlikely to happen any time soon. Loutre, M. F. & Berger, A. Future climatic changes: are we entering an exceptionally long interglacial? Climatic Change 46, 61–90 (2000) argued (in contrast to Tzedakis et al) that the current interglacial would have been exceptionally long anyway, based on the orbital configuration. Unless CO2 levels drop dramatically over the next several hundred years or there are other major interventions, a glaciation is very unlikely, and a “super interglacial” of unknown duration seems very likely. The future climate apparently hinges largely on human activities, with the orbital forcing variations too extended to change the direction for the next few thousand years.

Victor, #42–

“…my favorite climate realist, Tony Heller…”

AKA Steve ‘CO2 snow’ Goddard. Well, that explains quite a lot.

And as to his evidentiary record being called ‘tangible’, well, my experience with his writing is that he never gives you enough information to verify what he asserts (such as actual links to alleged NOAA data he’s claiming to critique). A curious thing, given that it’s all available online. So I’d say it’s about as ‘tangible’ as that CO2 snow he once claimed fell at the South Pole.

The denialists have lost their case merely by admitting that there is such a graph. Why? Because once you admit that this graph is what it is (it shows anthropogenic global warming), everything else is just a question of timing.

Unless and until you can specify the mechanism that will stop this, it’s going to continue.

So, instead of arguing about the one-point-in-time error — the vertical distance between (say) Scenario B and observed temperatures — read the error the other way — for any given temperature, look at the horizontal distance between those lines.

So, half a degree of warming? Hansen B predicted that for the year 2000. We actually saw that in the 1998 El Niño, but temperatures did not convincingly stay above that level until all the way to … 2006 or so.

So, for half-a-degree of warming, Hansen B was maybe six years too early.

Three quarters of a degree? Hansen B first predicted that for around 2008 or so. We didn’t actually see that until 2016. So he was eight years early on that one. Whoop-te-doo.

One degree above this graph’s baseline? Looks like Hansen B permanently stays above that level starting in 2016. And so if, in reality, we actually get that in (say) 2024 or so? That’s a minor detail.
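The "read the error the other way" arithmetic above can be sketched in a few lines of Python. This is only an illustration: the crossing years are the eyeballed ones quoted in this comment, the function names are hypothetical, and the short series at the end is made-up toy data, not Hansen's output or any observational record.

```python
from typing import Optional, Sequence


def first_sustained_crossing(
    years: Sequence[int], temps: Sequence[float], threshold: float
) -> Optional[int]:
    """First year from which temps stay at or above threshold to the end of the series."""
    crossing = None
    for year, temp in zip(years, temps):
        if temp >= threshold:
            if crossing is None:
                crossing = year  # candidate start of a sustained run
        else:
            crossing = None  # dipped back below, so discard the candidate
    return crossing


def timing_lag(
    years: Sequence[int],
    projected: Sequence[float],
    observed: Sequence[float],
    threshold: float,
) -> Optional[int]:
    """Years by which observations trail the projection in reaching threshold."""
    p = first_sustained_crossing(years, projected, threshold)
    o = first_sustained_crossing(years, observed, threshold)
    if p is None or o is None:
        return None
    return o - p


# Crossing years as eyeballed in the comment above: (Scenario B, observed).
quoted = {0.5: (2000, 2006), 0.75: (2008, 2016)}
lags = {level: obs - proj for level, (proj, obs) in quoted.items()}
print(lags)  # {0.5: 6, 0.75: 8}

# Toy series (invented for illustration only).
toy_years = [2000, 2001, 2002, 2003, 2004, 2005]
toy_projected = [0.3, 0.5, 0.6, 0.6, 0.7, 0.8]
toy_observed = [0.2, 0.5, 0.4, 0.5, 0.6, 0.6]
print(timing_lag(toy_years, toy_projected, toy_observed, 0.5))  # 2
```

Requiring the series to stay above the threshold, rather than just touch it once, mirrors the point about the 1998 El Niño: a single hot year does not count as having reached that level.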

Two degrees of warming? Four degrees? Once you admit this graph is real, until you specify the mechanism that will stop it, it’s no longer a question of IF it will happen. You’ve already admitted that it will. The only uncertainty is WHEN it will happen. Name a particular adverse consequence (e.g., “dust-bowlification” of the US Midwest) and we’re haggling over whether it’s your kids or grandkids that will have to deal with it.

Hansen’s main point was that, for the first time, the “signal” of global warming could be convincingly distinguished from the noise of natural variation. In hindsight, he was absolutely right about that. A corollary of his analysis is that this warming process will continue unless we act to stop it. And he made a pretty good estimate of how fast it would proceed.

Focusing on the fact that his Scenario B predictions are a little high (or in my view, a little early) really misses the point. There are only two points you need to take away from that graph. One, if you extend the time axis, the graph continues upward at roughly the same rate, for the next century or two at least. Two, the resulting temperatures eventually imply a very screwed up USA and world.

Given what a horrific job we have done so far in dealing with this, for some denialist to say that we actually have a decade (or two or three) longer before we hit temperature X, Y, or Z — that’s just rearranging the deck chairs on the Titanic. If that’s all they can bring to the table, then the denialists really don’t disagree with mainstream science at all.

Victor says:

“Sorry to (once again) rain on your parade, folks. Unlike some other climate skeptics one could name, Heller backs up every assertion with tangible evidence.”

Steve Goddard (aka Tony Heller) was kicked out as an administrator at Watts Up With That years ago for his rabid denial of Henry’s Law, which in the end embarrassed Watts. He’s also somewhat weak (I’m being polite) on a bunch of other bits of basic physics. It’s not surprising that Victor thinks he’s an authoritative source …

So Victor’s become a mature denialist now — he started off posting his own disbelief from simple incredulity (“can’t be happening”) then advanced toward pretend science (“anything but CO2” and “eyeball the chart”) and has now settled into mature denialist status, repeatedly rebunking old stuff as though it were something new and disconcerting. He’s citing his favorite sources, all of them prime wells of bunkum, and he’s been rebunking those guys’ old claims in his posts as though they were somehow newsworthy.

Congratulations, Victor, you’ve become consistently, repetitively boring. Everything you’ve posted you could have looked up and found had been long since addressed by many others. You’re now a mature copypaster of others’ misstatements.

“Unlike some other climate skeptics one could name, Heller backs up every assertion with tangible evidence.”

That would be the Heller who got an article on the Arctic published in a UK newspaper, which was then retracted because he made numerous mistakes – who got kicked off WUWT for making too many stupid mistakes (his pixel counting stuff is legendary), and who even after years of attempts at education by Nick Stokes still does not understand one iota about area weighting, that the US is not the world, and that you cannot just compare temperatures from many decades ago with current temperatures – measurement methods have changed, which must be corrected for. He makes all of these mistakes in the video you provide. Add the various misrepresentations, and there we go: another climate misinformer. Well, with the caveat that Heller might actually believe what he is saying.

Oh, Victor, you really don’t research your enthusiasms.

Tony Heller’s arithmetic was too wrong even for Watts to promote.

http://rankexploits.com/musings/2014/how-not-to-calculate-temperature/#comment-130003

You can look this stuff up. When you find something you’re sure supports your “anything but CO2” claims, do Google the statement.

That way you’ll know what’s bunk and what’s not, before you rebunk old stuff you’ve just discovered as though it were news.

#42 One wonders whether Victor has finally seen sense and is posting in parody, but (caveat Poe’s Law) it’s hard to tell. Steven Goddard/fake-name Heller the passing-off-website-name, bleating of fraud and posting trash on El Reg back in its dark, stupid days?

Back on topic, what do the authors think of this analysis? https://arstechnica.com/science/2018/06/barents-sea-seems-to-have-crossed-a-climate-tipping-point/