A recent story in the Guardian claims that new calculations reduce the uncertainty associated with global warming:

A revised calculation of how greenhouse gases drive up the planet’s temperature reduces the range of possible end-of-century outcomes by more than half, …

The story was based on a study recently published in Nature (Cox et al. 2018). However, I think its conclusions are premature.

The calculations in question involved both an over-simplification and a set of assumptions which limit their precision, if applied to Earth’s real climate system.

They provide a nice idealised and theoretical description, but they should not be interpreted as an accurate reflection of the real world.

There are nevertheless some interesting concepts presented in the analysis, such as the connection between climate sensitivity and the magnitude of natural variations.

Both are related to feedback mechanisms which can amplify or dampen initial changes, such as the connection between temperature and the albedo associated with sea-ice and snow. Temperature changes are also expected to affect atmospheric water vapour concentrations, which in turn affect the temperature through an increased greenhouse effect.

However, the magnitude of natural variations is usually associated with the transient climate sensitivity, and it is not entirely clear from the calculations presented in Cox et al. (2018) how the natural variability can provide a good estimate of the equilibrium climate sensitivity, other than using the “Hasselmann model” as a framework:

$$C \frac{dT}{dt} = Q - \lambda T \qquad (1)$$

Cox et al. assumed that the same feedback mechanisms are involved in both natural variations and climate change due to increased CO2. This means that we should expect a high climate sensitivity if there are pronounced natural variations.
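The link between sensitivity and variability can be illustrated with a minimal sketch. It assumes the one-box form $C\,dT/dt = Q - \lambda T$ with a white-noise forcing $Q$ and yearly Euler steps; the heat capacity, the noise amplitude and the two values of $\lambda$ are hypothetical and chosen only for illustration. It is not the calculation made by Cox et al.

```python
import random
import statistics

def simulate_hasselmann(lam, heat_capacity=8.0, forcing_sd=1.0, n_years=5000, seed=1):
    """Integrate C dT/dt = Q - lam*T with white-noise Q, using yearly Euler steps.

    All parameter values are illustrative assumptions, not fitted quantities.
    """
    rng = random.Random(seed)
    temperature = 0.0
    series = []
    for _ in range(n_years):
        forcing = rng.gauss(0.0, forcing_sd)
        temperature += (forcing - lam * temperature) / heat_capacity
        series.append(temperature)
    return series

# A small lambda means weak damping, i.e. a high climate sensitivity.
sd_high_sensitivity = statistics.pstdev(simulate_hasselmann(lam=0.8))
sd_low_sensitivity = statistics.pstdev(simulate_hasselmann(lam=1.6))

print(f"lambda = 0.8: sd(T) = {sd_high_sensitivity:.3f}")
print(f"lambda = 1.6: sd(T) = {sd_low_sensitivity:.3f}")
```

With the same forcing noise, the weakly damped run shows the larger temperature variability, which is the relationship the assumption relies on.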

But it is not that simple, as different feedback mechanisms are associated with different time scales. Some are expected to react rapidly, but others associated with the oceans and the carbon cycle may be more sluggish. There could also be tipping points, which would imply a high climate sensitivity.

The Hasselmann model is of course a gross simplification of the real climate system, and such a crude analytical framework implies low precision when the results are transferred to the real world.

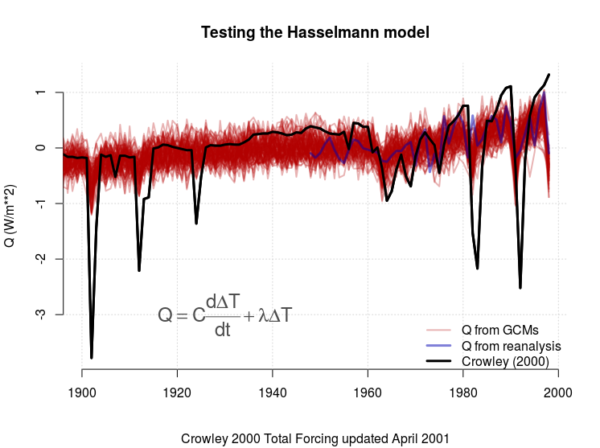

To demonstrate such lack of precision, we can make a “quick and dirty” evaluation of how well the Hasselmann model fits real data based on forcing from e.g. Crowley (2000) through an ordinary linear regression model.

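The regression model can be rewritten as $y = \beta_1 x_1 + \beta_2 x_2 + \eta$, where $y = Q$, $x_1 = dT/dt$, and $x_2 = T$. In addition, $\beta_1 = C$ and $\beta_2 = \lambda$ are the regression coefficients to be estimated, and $\eta$ is a constant noise term (more details in the R-script used to do this demonstration).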
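A regression of this kind can be sketched as follows. This is not the R-script mentioned above: it assumes the model $Q = C\,dT/dt + \lambda T + \mathrm{const}$, uses synthetic data generated from known (hypothetical) values of $C$ and $\lambda$, and solves the least-squares normal equations directly. The function names `solve_linear_system` and `fit_hasselmann` are made up for this illustration.

```python
import random

def solve_linear_system(matrix, rhs):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution

def fit_hasselmann(forcing, temperature):
    """Least-squares fit of Q = C*dT/dt + lam*T + const; returns (C, lam, const)."""
    rows = []
    for t in range(len(temperature) - 1):
        dTdt = temperature[t + 1] - temperature[t]  # forward difference, 1-year step
        rows.append((dTdt, temperature[t], 1.0))
    targets = forcing[: len(rows)]
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    moment = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(3)]
    return tuple(solve_linear_system(normal, moment))

# Synthetic data from known (hypothetical) parameters, so the fit can be checked.
rng = random.Random(42)
true_C, true_lam = 8.0, 1.1
temperature = [0.0]
true_forcing = []
for _ in range(2000):
    q = rng.gauss(0.0, 1.0)
    true_forcing.append(q)
    temperature.append(temperature[-1] + (q - true_lam * temperature[-1]) / true_C)
# Add noise to the forcing to mimic an imperfectly known forcing history.
noisy_forcing = [q + rng.gauss(0.0, 0.5) for q in true_forcing]

C_hat, lam_hat, const_hat = fit_hasselmann(noisy_forcing, temperature)
print(f"C = {C_hat:.2f}, lambda = {lam_hat:.2f}, const = {const_hat:.3f}")
```

In this idealised setting the fit recovers the parameters well, because the synthetic data obey the one-box model exactly. The scatter seen with real forcing and temperature data reflects how poorly that condition holds.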

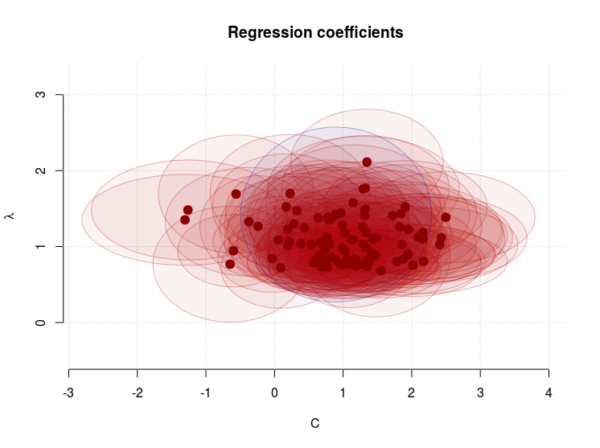

It is clear that the model fails for the dips in the forcing connected to volcanic eruptions (Figure 1). We also see a substantial scatter in both regression coefficients, $C$ and $\lambda$ (some values are even negative and hence unphysical; Figure 2).

The climate sensitivity is most closely associated with $\lambda$, for which the mean estimate was 1.11 $\mathrm{W\,m^{-2}\,K^{-1}}$, with a 5-95-percentile interval of 0.74-1.62 $\mathrm{W\,m^{-2}\,K^{-1}}$.

We can use these estimates in a naive attempt to calculate the temperature response for a stable climate with $dT/dt = 0$ and a doubled forcing associated with increased CO2.

It’s plain mathematics. I took a doubling of 1998 CO2-forcing of 2.43 $\mathrm{W\,m^{-2}}$ from Crowley (2000), and used the non-zero terms in the Hasselmann model, $T = Q/\lambda$.

The mean temperature response to a doubled CO2-forcing for GCMs was 2.36 K, with a 90% confidence interval of 1.5 – 3.3 K. The estimate from reanalysis was 1.71 K.
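The arithmetic behind these numbers can be checked with a short sketch. It assumes that the regression coefficient quoted above is $\lambda$ in W m⁻² K⁻¹, that the forcing is in W m⁻², and that the equilibrium response is simply the forcing divided by $\lambda$. The variable and function names are made up for this illustration.

```python
forcing_2xco2 = 2.43  # doubled-CO2 forcing quoted from Crowley (2000); units assumed W m^-2

# Regression estimates quoted in the text; units assumed W m^-2 K^-1.
lam_mean, lam_p05, lam_p95 = 1.11, 0.74, 1.62

def equilibrium_response(forcing, lam):
    """Temperature response when dT/dt = 0, i.e. T = Q / lambda."""
    return forcing / lam

response_at_mean = equilibrium_response(forcing_2xco2, lam_mean)
response_low = equilibrium_response(forcing_2xco2, lam_p95)   # strong damping, small response
response_high = equilibrium_response(forcing_2xco2, lam_p05)  # weak damping, large response

print(f"response at mean lambda: {response_at_mean:.2f} K")
print(f"range from the 5-95 percentiles of lambda: {response_low:.1f} to {response_high:.1f} K")
```

The two percentile values of $\lambda$ reproduce the quoted 1.5 – 3.3 K interval. The response at the mean $\lambda$ is somewhat lower than the quoted mean of 2.36 K, presumably because an average of per-model responses is not the same as the response at the average $\lambda$.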

The true equilibrium climate sensitivity for the climate models used in this demonstration is in the range 2.1 – 4.4 K, and the transient climate sensitivity is 1.2 – 2.6 K (IPCC AR5, Table 8.2).

This demonstration suggests that the Hasselmann model underestimates the climate sensitivity, and that the over-simplified framework on which it is based precludes high precision.

Another assumption made in the calculations was that the climate forcing Q resembles white noise after the removal of the long-term trends.

This too is questionable, as there are reasons to think the ocean uptake of heat varies at different time scales and may be influenced by ENSO, the Pacific Decadal Oscillation (PDO), and the Atlantic Multi-decadal Oscillation (AMO). The solar irradiance also has an 11-year cycle component and volcanic eruptions introduce spikes in the forcing (see Figure 1).
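One simple way to see why a periodic component conflicts with the white-noise assumption is to look at the autocorrelation, which should be close to zero at all non-zero lags for white noise. The sketch below uses an idealised 11-year sinusoid as a stand-in for the solar cycle; the series and the helper name `lag_autocorrelation` are assumptions for illustration, not real forcing data.

```python
import math

def lag_autocorrelation(series, lag):
    """Sample autocorrelation of a series at the given lag."""
    n = len(series)
    mean = sum(series) / n
    anomalies = [x - mean for x in series]
    numerator = sum(anomalies[t] * anomalies[t + lag] for t in range(n - lag))
    denominator = sum(a * a for a in anomalies)
    return numerator / denominator

# Idealised 11-year cycle sampled once per year over 110 years (ten full cycles).
solar_like_cycle = [math.sin(2.0 * math.pi * year / 11.0) for year in range(110)]

r1 = lag_autocorrelation(solar_like_cycle, 1)
r11 = lag_autocorrelation(solar_like_cycle, 11)
print(f"lag-1 autocorrelation:  {r1:.2f}")
print(f"lag-11 autocorrelation: {r11:.2f}")
```

Both values are far from zero, so any forcing with a noticeable cyclic part will carry memory from one year to the next.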

Cox et al.’s calculations were also based on another assumption somewhat related to different time scales for different feedback mechanisms: a constant “heat capacity” represented by C in the equation above.

The real-world “heat capacity” is probably not constant; I would expect it to change with temperature.

Since it reflects the capacity of the climate system to absorb heat, it may be influenced by the planetary albedo (sea-ice and snow) and ice-caps, which respond to temperature changes.

It’s more likely that C is a non-linear function of temperature, and in this case, the equation describing the Hasselmann model would look like:

$$C(T) \frac{dT}{dt} = Q - \lambda T \qquad (2)$$

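Cox et al.’s calculations of the equilibrium climate sensitivity used a key metric which was derived from the Hasselmann model and assumed a constant C: $\Psi = \sigma_T / \sqrt{-\ln \alpha_{1T}}$, where $\sigma_T$ is the standard deviation of the global mean temperature and $\alpha_{1T}$ is its one-year-lag autocorrelation. This key metric would be different if the heat capacity varied with temperature, which subsequently would affect the end-results.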
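The metric itself is easy to compute from a temperature series. The sketch below assumes the form $\Psi = \sigma_T / \sqrt{-\ln \alpha_{1T}}$ and applies it to a synthetic first-order autoregressive series with hypothetical parameters. It skips the windowed detrending that Cox et al. apply before computing the metric, and the function name `psi_metric` is made up for this illustration.

```python
import math
import random

def psi_metric(temperature):
    """Psi = sd(T) / sqrt(-ln(alpha_1)), with alpha_1 the lag-1 autocorrelation."""
    n = len(temperature)
    mean = sum(temperature) / n
    anomalies = [x - mean for x in temperature]
    variance = sum(a * a for a in anomalies) / n
    lag1 = sum(anomalies[t] * anomalies[t + 1] for t in range(n - 1)) / (n * variance)
    if not 0.0 < lag1 < 1.0:
        raise ValueError("Psi is only defined for 0 < alpha_1 < 1")
    return math.sqrt(variance) / math.sqrt(-math.log(lag1))

# Synthetic AR(1) series; phi and innovation_sd are illustrative assumptions.
rng = random.Random(7)
phi, innovation_sd = 0.6, 0.1
series = [0.0]
for _ in range(20000):
    series.append(phi * series[-1] + rng.gauss(0.0, innovation_sd))

psi_sample = psi_metric(series)
# Value expected for an AR(1) process with these parameters.
psi_theory = innovation_sd / math.sqrt((1.0 - phi**2) * -math.log(phi))
print(f"sample Psi = {psi_sample:.4f}, theoretical Psi = {psi_theory:.4f}")
```

Both ingredients, the variance and the autocorrelation, depend on how quickly the system damps anomalies, which is why a temperature-dependent heat capacity would change the value.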

I also have an issue with the confidence interval presented for the calculations, which was based on one standard deviation $\sigma$. The interval of $\pm\sigma$ represents a 66% probability, and can be illustrated with three numbers, of which two are “correct” and one is “wrong”: there is a 1/3 chance that I pick the “wrong” number if I were to randomly pick one of the three.

To be fair, the study also stated the 90% confidence interval, but it was not emphasised in the abstract nor in the press-coverage.
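The difference between the two intervals can be made concrete under the assumption of an exactly Gaussian distribution, which is an idealisation and not something the study guarantees. In that case one standard deviation on either side covers about 68% (close to the 66% “likely” convention), whereas 90% coverage needs about 1.645 standard deviations.

```python
import math

def gaussian_coverage(n_sigma):
    """Probability that a Gaussian variable lies within n_sigma of its mean."""
    return math.erf(n_sigma / math.sqrt(2.0))

one_sigma_coverage = gaussian_coverage(1.0)      # about 0.683
ninety_percent_check = gaussian_coverage(1.645)  # about 0.900

print(f"+/- 1 sigma covers     {one_sigma_coverage:.1%}")
print(f"+/- 1.645 sigma covers {ninety_percent_check:.1%}")
```

Under this assumption the 90% interval is roughly 1.6 times wider than the one-standard-deviation interval.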

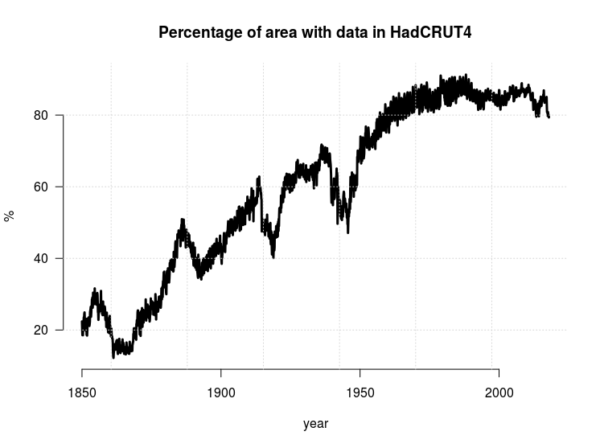

One thing that was not clear was whether the analysis, which involved both observed temperatures from the HadCRUT4 dataset and global climate models, took into account the fact that the observations do not cover 100% of Earth’s surface (see RC post ‘Mind the Gap!’).

A spatial mask would be appropriate to ensure that the climate model simulations provide data for only those regions where observations exist. Moreover, it would have to change over time because the thermometer observations have covered a larger fraction of Earth’s area with time (see Figure 3).
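A minimal sketch of such masking is given below. It assumes a regular latitude-longitude grid with area weights proportional to the cosine of latitude, and uses a toy anomaly field in which high latitudes warm more. The grid, the field values, the mask and the function name `masked_global_mean` are all hypothetical; a time-varying mask would apply the same function with a different mask for each time step.

```python
import math

def masked_global_mean(field, latitudes, mask):
    """Area-weighted mean over the grid cells where mask is True.

    field[i][j] is the value at latitude index i and longitude index j;
    each cell is weighted by cos(latitude).
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for row, lat, mask_row in zip(field, latitudes, mask):
        weight = math.cos(math.radians(lat))
        for value, observed in zip(row, mask_row):
            if observed:
                weighted_sum += weight * value
                weight_total += weight
    if weight_total == 0.0:
        raise ValueError("mask excludes every grid cell")
    return weighted_sum / weight_total

latitudes = [-75.0, -45.0, -15.0, 15.0, 45.0, 75.0]
# Toy anomalies (K): stronger warming towards the poles, four longitudes per row.
field = [[2.0] * 4, [1.0] * 4, [0.5] * 4, [0.5] * 4, [1.0] * 4, [2.0] * 4]

full_mask = [[True] * 4 for _ in latitudes]
# Pretend there are no observations poleward of 60 degrees.
sparse_mask = [[abs(lat) < 60.0] * 4 for lat in latitudes]

full_mean = masked_global_mean(field, latitudes, full_mask)
masked_mean = masked_global_mean(field, latitudes, sparse_mask)
print(f"full coverage: {full_mean:.3f} K, masked: {masked_mean:.3f} K")
```

In this toy case the masked mean is lower than the full mean because the unobserved regions warm the most, so a model compared with observations should be sampled through the same mask.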

An increase in data coverage will affect the estimated variance and one-year autocorrelation $\alpha_{1T}$ associated with the global mean temperature, which also should influence the metric $\Psi$.

My last issue with the calculations is that the traditional definition of climate sensitivity only takes into account changes in the temperature. However, there is also a possibility that climate change involves a change in the hydrological cycle. I have explained this possibility in a review of the greenhouse effect (Benestad, 2017), and this possibility would add another term to the equation describing the Hasselmann model.

I nevertheless think the study is interesting and it is impressive that the results are so similar to previously published results. However, I do not think the results are associated with the stated precision because of the assumptions and the simplifications involved. Hence, I disagree with the following statement presented in the Guardian:

These scientists have produced a more accurate estimate of how the planet will respond to increasing CO2 levels

References

- P.M. Cox, C. Huntingford, and M.S. Williamson, "Emergent constraint on equilibrium climate sensitivity from global temperature variability", Nature, vol. 553, pp. 319-322, 2018. http://dx.doi.org/10.1038/nature25450

- T.J. Crowley, "Causes of Climate Change Over the Past 1000 Years", Science, vol. 289, pp. 270-277, 2000. http://dx.doi.org/10.1126/science.289.5477.270

- R.E. Benestad, "A mental picture of the greenhouse effect", Theoretical and Applied Climatology, vol. 128, pp. 679-688, 2017. http://dx.doi.org/10.1007/s00704-016-1732-y

“The claim of reduced uncertainty for equilibrium climate sensitivity is premature” This is what many climate skeptics have been saying for years and they have been called deniers for their efforts.

How does it feel to be a denier? Probably feels like you are doing science and you do not care what others less informed may say. Welcome to the camp of “the deniers”, those for whom the science is not settled.

[Response: I don’t see your logic here. -rasmus]

Sorry, you lost me at equation 1 through not defining the symbols. I can guess at some but I’m not sure; e.g., is Q an amount of energy or an energy flow (power)? I’d normally expect it to be an amount of energy but think it’s the net in- or outflow here.

[Response: Yes, Q is the forcing, C is Earth’s “heat capacity”, $\lambda$ is a measure for the change to the temperature response due to feedback mechanisms. -rasmus]

Anyway, a rather fundamental question about something I recently realised I wasn’t sure of: is ECS > TCS or could it be less? In other words, after the immediate increase in temperature caused by a doubling of CO₂ over 70 years, would the temperature necessarily continue to increase a certain (large or small) amount or could the more slowly acting feedbacks cause it to drop back in the longer term?

[Response: ECS is usually considered greater than TCS because it takes time for the system to stabilise. Good question about different feedbacks and timescales. I don’t know the answer. -rasmus]

There’s the complication that if CO₂ stays level the amount of human-created aerosols would presumably reduce. Does that affect the answer?

[Response: Yes, probably. -rasmus]

Rasmus: Peter Cox kindly pointed me to the extended data, which show a comparison where the models are masked to match the HadCRUT4 observations:

https://www.nature.com/articles/nature25450/figures/6

The other question I had is whether using blended rather than air temperatures would make a difference, which I am interested in exploring with them. I don’t have good intuition as to whether this will affect the results, but I don’t expect it to be a major issue.

Rasmus – your response to Ed D at #2 appears to suggest that there is no satisfactory prediction of the behaviour of the feedbacks post ECS being achieved after a doubling of CO2. Is this correct, or am I mis-reading the text ?

[Response: An equilibrium would also suggest that the action of the feedbacks has stabilised, so I think a more appropriate question is whether there are any slow feedback mechanisms that slowly kick in before the state of equilibrium to moderate the end-effect. This of course is in an ideal and theoretical setting. There are some long simulations with global climate models, but I don’t know if there have been any studies dedicated to answering your question. -rasmus]

If it is correct, then the term Equilibrium Climate Sensitivity would surely be a misnomer, in that no predictable equilibrium is achieved.

Short of the exhaustion of both ice & snow stocks and of the various carbon stocks that are vulnerable to outgassing as a consequence of raised SAT (by which point H. Sapiens is of course long gone), I’ve yet to see a cogent explanation of quite why the feedbacks should stop their acceleration and instead reduce their outputs towards zero simply because the anthro-GHG outputs raising SAT are someday halted. Surely any such deceleration of the feedbacks would require a substantial global cooling to have occurred?

Perhaps you could explain ?

Regards,

Lewis

[Response: I don’t know of any feedbacks that should stop their acceleration and instead reduce their output in the longer term. I hope I didn’t give the impression that this was the case. -rasmus]

#1 Response to response from rasmus, no logic seen:

Maybe you are so deep in the science that this makes no logic to you. I agree in part, logic is not the highest concern in these matters.

[Response: Another thing is the bad habit of putting labels on each other. It’s better to discuss the results, the analysis, and the methods. -rasmus]

Dan DaSilva #1, #4,

It would be helpful if you could reference your own equations on this subject, since I for one have missed them if they were presented here.

So far, this just seems to be the normal course of scientific discourse…someone publishes, and others either agree, or present alternative methods to calculate the result.

Where is the “denial”?

Presumably Cox et al. emphasised the 1-sigma uncertainty range since the IPCC report climate sensitivity range of 1.5-4.5 °C is a 66% likely range.

However, the two ranges are not comparable. Cox et al. provide a statistical uncertainty range for a single study, ignoring structural uncertainty and systematic biases resulting from their choice of model and method. The IPCC range, on the other hand, encompasses the overall uncertainty across a very large number of studies, using different methods all with their own potential biases and problems (e.g., resulting from biases in proxy data used as constraints on past temperature changes, etc.). There are a number of single studies on climate sensitivity that have statistical uncertainties as small as Cox et al., yet different best estimates, some higher than the classic 3 °C, some lower.

Thus, the robust information here is not that this latest study in itself reduces uncertainty. It is that for the past four decades, studies of climate sensitivity with all kinds of different methods keep clustering around the best estimate of 3 °C, making us ever more certain that the real climate sensitivity is indeed close to that value.

I have a question that relates to the middle question and reply at #2 from Ed Davies.

Would extreme weather events be less probable after equilibrium is reached, even though the mean temperature would be higher?

I realize that “extreme” is a moving target, but I have always assumed that the redistribution of energy during the transition phase would be more erratic/intense than the extremes of the new, equilibrium, climate regime.

Answers would be appreciated; I am not invested in my guess.

[Response: Extremes are often considered as the “tails” of the statistical distribution or the probability density function (pdf). I tried to explain that in a previous post. A new equilibrium due to changed forcing implies a shift in the statistical distribution, and hence the occurrence of extremes. We assume that natural variability will still be present in an equilibrium climatic state. -rasmus]

The original heading for the Guardian article (usually one of the few papers in the UK to give AGW decent coverage) stated that following the new research the more extreme temperature increase predictions are ‘not credible’.

Which is nonsense. At the most it would make them less likely. The Guardian has recently changed editor so hoping they don’t let their science reporting slip.

Excellent post! The main limitation of the study is the time scale issue. The Hasselmann model has a single time scale which is controlled by the heat capacity C which can be visualised as corresponding to the depth of a single well-mixed ocean box which damps the temperature fluctuations. The real ocean damps temperature fluctuations on multiple time scales differently. The example with the volcano driven variability shows this nicely.

A simple diffusive ocean model would be more appropriate as has been shown by many studies of the mixing of tracers or of anthropogenic carbon dioxide in the ocean. Indeed, Jim Hansen used such a diffusive mixing for representing ocean heat uptake in several of his seminal papers. The response of a diffusive ocean model to white noise forcing will be different than in the Hasselmann 1-box model and would thus yield a different relationship between the observed temperature fluctuations and the equilibrium climate sensitivity.

Other feedbacks in the climate system also operate on longer time scales, e.g. permafrost carbon. Clearly on interannual and decadal time scales this feedback plays no role, but might be different on a 50-100yr time scale, which is the time scale IPCC implicitly addressed with the 1.5-4.5°C range.

That the article in question was deemed headline-worthy by The Guardian is significant. Culture is part of the climate system: e.g., scientific thought interacts with mass and elite beliefs and through them, potentially, with emissions. One might define a new term, at least a soft or analogical one: “science sensitivity,” the tendency of increasing global temperature to decrease future warming through scientific understanding leading to cultural and behavioral change. Journalism is a major path in this feedback but, alas, a weak one: even honest media like the Guardian favor, as in this case, tales of the unexpected or apparently anomalous, science bites dog. (If the Nature paper’s climate sensitivity range had matched that of most prior research, I doubt it would have made the news.) “News” is thus a highpass filter for science (and everything else). That which changes slowly or not at all, being persistent or structural rather than spiky and “new,” tends not to get through. So we have the phenomenon that many people who consume an endless diet of news (even the non-fakest) never gain much substantive structural understanding of climate (or much else).

“Science sensitivity” is therefore low. The most important part of the science signal, the part that isn’t changing or novel every day or week, gets attenuated by news media.

Another way of saying it: Although we are seeing less outright false balance in climate coverage than a decade or two ago, bias against mainstream science understanding persists in the relatively subtle form of selective reporting of eyebrow-raising claims, which strengthen the impression that scientists are always changing their story, in which case, shrug. Our narrative-making powers are too often confounded by an endless shriek of high-frequency news noise.

What would help, but which news media are structurally unlikely to deliver, are regular headlines more like this:

“10,566 Papers Across Many Fields Confirm Climate Change in 2017: Scientists Pursue Questions, but Core Narrative Is More Fruitful than Ever.”

But from an editor’s point of view, there’s no _news_ there . . .

DDS 1: “The claim of reduced uncertainty for equilibrium climate sensitivity is premature” This is what many climate skeptics have been saying for years and they have been called deniers for their efforts.

How does it feel to be a denier? Probably feels like you are doing science and you do not care what others less informed may say. Welcome to the camp of “the deniers”, those for whom the science is not settled.

BPL: Doesn’t follow. Deniers are insisting that climate sensitivity has to be low. Scientists are saying that it’s uncertain. Do you understand the difference?

Dan DaSilva, I am trying to parse your odd comment. It seems that you are trying to construct a strawman, i.e. that scientists are saying the estimate of climate sensitivity is settled whereas “skeptics” claim it is not. However, where is the claim that you are making? The IPCC reports extensively on ECS estimates and puts it between 1.5 and 4.5 with the median at around 3. Whether you regard that as “settled” or not depends on your point of view. If you knew for certain that sensitivity was 4.5, then that would require a stronger policy response than if you knew for certain that it was 1.5.

However, the new study is presenting evidence to narrow that range (though the median is still around 3). As with any study, before it is accepted, scientists need to be assured it is valid. Clearly not. However, this is not “denial”. It is examination of the methodology and assumptions. By contrast, climate “skeptics” are usually of the “I don’t like the policy options, therefore climate science is wrong” or “not what my tribe believes” kind. Instead of publishing credible critiques of climate science, they jump to misrepresentation, accusations of fraud, conspiracy theories, strawman arguments, anecdotes, cherry picking and outright denial of the evidence. A very big difference. See any of those in Rasmus’s article? Compare that with a “skeptic” blog.

Thanks Rasmus, informative even with my poor math background.

David Spratt has an interesting take on the denial aspects of the Cox paper:

http://www.climatecodered.org/2018/01/new-study-on-climate-sensitivity-not.html

And what about Proistosescu et al

http://advances.sciencemag.org/content/3/7/e1602821

“They provide a nice idealised and theoretical description, but they should not be interpreted as an accurate reflection of the real world.”

Thank you, thank you, thank you.

This was exactly my impression of the study from careful reading of the articles on it. Too bad the main message from most media coverage was so distorted!

Reading some comments reassures me I need not apologise for ignorance. The Cox et al paper claims more precision estimating the ECS than the IPCC, but it seems the accuracy is little altered. The best guess goes from 3C to 2.8C. Hardly reassuring, and less so now other scientists have expressed concern over the methodology.

In any case what we actually do is more important than picking a number. We aren’t going to have enough time to do what we need to do whatever the value is, because most people see no urgency whatsoever, and it will take some time for them to die off.

Especially Trump. His doctor said he might live to 200.

Mr Rahmstorf

I can point you to 75 papers that give the sensitivity as a lot less than 3C

http://notrickszone.com/50-papers-low-sensitivity/#sthash.8K6XmGUz.Qg4obgfY.dpbs

Mr Scadden

I read a SKEPTIC BLOG THAT PUBLISHES REAL SCIENCE

https://wattsupwiththat.com/2018/01/22/claim-climate-sensitivity-narrowed-to-2-8c/

Some basic background info would surely help here:

https://www.gfdl.noaa.gov/transient-and-equilibrium-climate-sensitivity/

Except that the ‘government shutdown’ has pulled all noaa sites from the web, how ridiculous is that? Trump has gone off the deep end, hasn’t he. . . Regardless, I copied this some time ago, so here’s the key point:

“The transient climate response, or TCR, is traditionally defined in terms of a particular calculation with a climate model: starting in equilibrium, increase CO_2 at a rate of 1% per year until the concentration has doubled (about 70 years). The amount of warming around the time of doubling is referred to as the TCR. If the CO_2 is then held fixed at this value, the climate will continue to warm slowly until it reaches TEQ”

I’m still unclear on the timeframe to reach TEQ in this (highly simplified) scenario – even if humans eliminate fossil fuels in 7 years, even if the 1% per year rate is exceeded, what are they really saying here? That permafrost won’t keep outgassing if humans quit fossil fuels 70 years from now? Why is that credible?

So this is really just a benchmark measure of the climate models – and there are so many other factors in the real world, such as carbon feedbacks (permafrost, shallow marine methane, etc.), human use of fossil fuels over the next 50 years, etc., let alone random events like massive volcano emissions (Pinatubo upset many projections, but provided a useful test of things like water vapor feedback), etc.

Hi, please can you elaborate on why you chose the word ‘premature’ in the article’s title?

For people who get their opinions from the Daily Malice, this is what peer reviews are all about. Forensic exploration of scientific hypotheses. I don’t understand it either, but in the end I do know this kind of discussion leads to sound consensus and greater confidence in the conclusions finally agreed

It is regrettable that the author of this piece did not bother to speak to the authors of the work that they are critiquing. Likewise, the journalist of the Guardian article did not speak to any of us. There is really no excuse for that when we make ourselves available to discuss the work (in my case on twitter). If you really want to know what we did, rather than guessing, we would be happy to write an explanation for you. All you need to do is ask.

[Response: Very much appreciated! On the other hand, a discussion like this is very valuable, and I’m not the only person who had issues with the paper. If the paper was unclear or there are parts that are misunderstood, it’s even better to have such a discussion. We may not end up with the same view, but I can live with that. -rasmus]

I appreciate the response to my comment #8; as often happens I was perhaps too brief and unclear with my question:

A. We are now in a period of transition, where the energy in the climate system is increasing. (Rising temperature.)

B. If and when we stop messing with the planet, there will eventually be a new equilibrium with energy in = energy out. (Stable, but higher than pre-industrial, temperature.)

The comparison I’m looking for is between a few hundred years in A and a few hundred years in B.

This is a question about the physics of the system, if anyone can give an educated projection. What will the probability distribution of extreme “weather” look like in each case?

In other words, is the rate of change in A a factor in creating more intense storms, or destabilization of ice, or other effects we are concerned with?

Dan DaSilva,

Thanks for the comic relief. We can always count on you for a laugh!

Zebra,

Tamino has looked at extreme temperature and precipitation events to some extent and found that it looks as if the distribution mainly shifts toward the right with the width remaining about the same.

Nonequilibrium is not something physics does particularly well. Near equilibrium, probably a little better, and that is probably closer to what we have.

Gilman at #11 — can’t really blame the Guardian when the commentary in Nature itself announced “A compelling analysis suggests that we can rule out high estimates of this sensitivity.”

A minor point, but the Guardian article linked to here isn’t written by Guardian staff. It’s a wire story written by the news agency Agence France-Presse (AFP).

Hi Folks

I fully expect people to be sceptical about our study (as it promises a lot for apparently so little), but unfortunately some of the views above are based on a critique which misrepresents what we actually did. I would therefore like to correct some misconceptions so that people can at least be sceptical about our work for the right reasons…:-).

1) We use an Emergent Constraint (EC) approach, which means that we use GCMs to determine the relationship between variability and ECS. The Hasselmann model is merely used to inform our search for the most appropriate metric of variability.

2) We do not assume the Hasselmann model is a good representation of the historical warming. Of course it isn’t and we say that very clearly in our paper: “The constant heat capacity C in this model is a simplification that is known to be a poor representation of ocean heat uptake on longer timescales. However, we find that it still offers very useful guidance about global temperature variability on shorter timescales”.

3) We reduce the impact of long-timescales on our EC by linearly-detrending in a 55 year window (though other window-widths work almost as well), and then calculating a metric of variability (PSI) that is independent of the heat capacity. This will work so long as the effective heat capacity is near constant within the window, and that holds so long as the window separates the fast (~5 years) and slow (a few hundred years) timescales of complex climate models (see for example Geoffroy et al., 2013).

4) We do not ignore slow feedbacks. These are included in the ECS values diagnosed from the complex climate models. However, variability is a constraint on ECS most likely because the uncertainty in ECS across current climate models is dominated by fast feedbacks (notably clouds), and these affect the variability. Obviously, slow feedbacks would not be seen in short-term variability, but these are not the main cause of uncertainty in ECS.

5) As Kevin Cowtan rightly says, we did check that the coverage of the observational data in HadCRUT4 did not affect our emergent constraint. We also checked that using different observational datasets (NOAA, Berkeley, GISTEMP) gave similar results (results shown in Extended Data).

If you still have questions please do not hesitate to get back to me. We are attempting to do objective climate science, rather than to support a particular advocacy position, so direct communication would be greatly appreciated.

Thanks

Peter Cox

Thanks for the clarification Peter – my comment on the time scales was wrong: The Hasselmann model is used in their study only as a smart theoretical underpinning of the relationship between the temperature fluctuations and the ECS. A more sophisticated stochastic model with more time scale modes would also lead to a more or less linear relationship – as the ESMs used in the study do.

This leaves the question open why the study of Brown and Caldeira in Nature of December 2017 came to an opposite conclusion, also by constraining the CMIP5 ESM model simulations ensemble, albeit with other metrics.

@Peter Cox,

From the abstract: ”ECS is defined as the global mean warming that would occur if the atmospheric carbon dioxide (CO2) concentration were instantly doubled and the climate were then brought to equilibrium with that new level of CO2.”

Why was the 70-year gradual increase to double the CO2 omitted? It would seem that if you wanted to examine this using GCMs and temperature variability, you’d want to run the more realistic scenario of a gradual 1% increase in CO2 per year followed by adjustment to equilibrium. The claim that this doesn’t make any difference, i.e. instantaneous doubling vs. a slow increase to doubling, that’s hard to understand.

Second, in terms of the Paris Agreements, as the abstract states: ”The possibility of a value of ECS towards the upper end of this range reduces the feasibility of avoiding 2 degrees Celsius of global warming, as required by the Paris Agreement.”

Clearly the ECS isn’t the only factor, it might not even be the main factor. The overall carbon dioxide and methane and nitrous oxide and aerosol content of the atmosphere over the next 80 years is what matters, so it seems that carbon cycle models are needed (with the major uncertainty there being future human behavior, followed by permafrost and shallow marine responses). This is a complex problem – for example, given that roughly 50% of fossil fuel CO2 emissions are absorbed by the ocean and land, what happens if we stop all fossil fuel combustion? Does atmospheric CO2 then start to decline as a result of these absorption processes, or are those sinks just taking up the excess, or will the warm Arctic permafrost emissions rule this out?

In terms of advocacy, this is tricky; people in the fossil fuel sector will doubtless claim that lower climate sensitivity means rapid reductions in fossil fuel use are not necessary, so they can go on with their plans for more gas and oil development without breaching the Paris Agreements, etc. This isn’t a criticism of the study, just a comment on the public relations industry (which doesn’t see science results as anything other than talking points that may or may not serve their agenda).

@zebra

I think the extreme weather factor is all about the increasing lower-tropospheric water vapor content, which plays out in storms as a latent heat issue. Some have claimed that since the poles warm faster than the equator, the pole-to-equator temperature differential will decline, but that claim ignores seasonality as well as the very large temperature differential from pole to equator; a 6C warming at the poles and a 3C warming at the equator would have little effect on the overall temperature differences; so warmer wetter air mixing with cold dry Arctic air means more severe and unstable midlatitude weather, regardless of whether we are in a transient or an equilibrium position. And of course, other factors being constant, more subtropical water vapor means more energy for hurricanes too.

Zebra @23

“In other words, is the rate of change in A a factor in creating more intense storms, or destabilization of ice, or other effects we are concerned with?”

It’s hard to see why it would be. More heat energy = more intense storms. This seems like an adequate explanation.

22 & 27 Peter Cox. Was very easy to see that was coming.

If you really want to know what we did, rather than guessing, we would be happy to write an explanation for you. All you need to do is ask. — If you still have questions please do not hesitate to get back to me…. direct communication would be greatly appreciated.

Communications Experts have recently coined the term “MA Rodger Syndrome” to describe this dynamic.

The simple solution is called Active Listening: Clearly, listening is a skill that we can all benefit from improving. By becoming a better listener, you will improve your productivity, as well as your ability to influence, persuade and negotiate.

What’s more, you’ll avoid (unnecessary) conflict and misunderstandings and not come across as a “Mr. Know It All” so often.

https://www.mindtools.com/CommSkll/ActiveListening.htm

eg “Our personal filters, assumptions, judgments, and beliefs can distort what we hear. As a listener, your role is to understand what is being said.”

This may require you to reflect what [ YOU THINK / IMAGINE ] is being said and ask [ CLEAR OPEN-ENDED ] questions to check for correct understanding [ BEFORE engaging in any criticism/critique of what you ONLY BELIEVE was said/intended.]

That’s known as Known Science btw.

Which proves that shooting from the hip and shooting one’s mouth off doesn’t help one bit. :-)

What would also help is applying the following 3 key principles:

1) Maintain or enhance Self-esteem

2) Listen and respond with Empathy

3) Ask for help in solving the problem.

Easy to say. Easy to do.

Spencer at #25: I don’t blame the Guardian particularly, but want to draw attention to the overwhelming tendency of media to report delta, real or apparent — a systematic bias that, however understandable, contributes (I suggest) to public misperception of science. If the sentence in Nature had read “A compelling analysis suggests existing range of estimates for climate sensitivity is spot on,” I doubt the study would have been reported as news.

Even peer-reviewed journals can suffer from novelty bias (see, e.g., https://www.sciencenews.org/blog/science-public/journal-bias-novelty-preferred-which-can-be-bad ). In popular science reporting, novelty bias is practically the point. One aspect of this is single-paper headlining (case in point), though single papers rarely shift fields. Surely this sort of thing may distort communication of science to the public — and thus society’s material response to climate change.

Only 1 in 10 Americans feel well-informed about climate change (http://environment.yale.edu/climate-communication-OFF/files/ClimateChangeKnowledge2010.pdf). Much of the rest of the world isn’t hugely better off. It’s worth probing the why.

Alan “I read a SKEPTIC BLOG THAT PUBLISHES REAL SCIENCE”

I believe that must be a typo. Shouldn’t it be “I read a CONSPIRACY THEORY BLOG THAT PUBLISHES NONSENSE ONLY FOR PEOPLE WITHOUT CRITICAL THINKING SKILLS”? Like CO2 Snow in Antarctica? El Nino warming the ocean? The numerous “we’re cooling” nonsense? Give us a break.

One can also assume you haven’t read the “75 papers with low sensitivity” (nor has the compiler, apparently).

Alan, you don’t have to work very hard at WUWT to find “misrepresentation, accusations of fraud, conspiracy theories, strawman arguments, anecdotes, cherry picking and outright denial of the evidence” in just about any article. Try it some time.

On blending: I’ve now repeated the Psi calculation using blended air/sea rather than air temperatures from the models. The effect is insignificant. While there may be issues to be addressed, blending is not one of them.

Ray Ladbury and Racetrack Playa,

Thanks, you have helped me to clarify the question (always the hardest part).

Going back to Ed Davies and Rasmus’ replies at #2: We know that there are feedbacks and system responses (e.g. ocean convection and advection of energy) that operate on various time scales.

Ray, let’s plot the distribution on the vertical axis for some metric related to RP’s water vapor content (isn’t there something called “hurricane energy” or the like?), and create a time series.

My conjecture– and yes, I realize that it is only a conjecture– is that such a variable may exhibit excursions, in response to the delta forcing, that overshoot its ultimate equilibrium values, as a result of the responses and feedbacks mentioned above.

If we think about all of the variables that can possibly impact humans, it may be that the bumpier part of the ride is going to happen during the transition, even if the mean temperature never exceeds the future equilibrium value.

#6 zebra

“Denial” is in the mind. It needs only emotion.

Dan DaSilva: ““Denial” is in the mind. It needs only emotion.”

Wait. Nobody say anything. Let’s see if he gets it.

Or, Dan, would you like to come in again?

Dan (@37): I couldn’t agree more that climate denial is largely a product of emotion. It’s certainly not based on science…

As a retired French engineer (not a climatologist…), I have some naive questions that puzzle me (or rather… make me feel uncomfortable!). Many thanks in advance for comments!

– I understand that the 2018 forcing, (with feedbacks related to more than 400 ppm CO2), may be close to 2 W /m2 (assuming “central values only”… i. e. disregarding, in most of this msg, the various confidence intervals.)

– ~10 -15 years ago, this central value was rather 1,6 W /m2 (as the sum of 2,8 W /m2 of positive feedbacks and 1,2 W /m2 negative ones, according to AR4-WG1, I believe.) This implies an energy flow over 800 TW (according to Earth surface: ~509 .10**12 m2.)

– Without forgetting a significant interannual variability in OHC increase… the average value, 10-15 years ago, was close to 8 ZJ per year, that is ~250 TW on average.

Since this OHC increase is considered to be more than 90% of the “heating rate”… (according to AR5-WG1), it seems to me that there is a “gap” (over 500 TW), of “non heating”… energy flux ! (?)

– I understand that less than 10 TW may be explained by ice melting… In order to “lodge” somewhere… this “puzzling 500 TW gap”… I do not see any other “spot” than the ~40 PW of latent heat flux that maintains the water cycle…

– Should I assume that this item of Earth energy flux balance may have increased by ~1,2% during the second half of the 20th century ? (For instance, with a shortening of ~1,2% of the ~9,5 days average “life time of water vapour” – or “temps de residence”… in French – that may be inferred from 20th century hydrographic data ?)

– If these considerations are correct, (I guess they aren’t !), what may be expected about the variability of this “share”, (over 60%), of “non heating”… energy flux ?
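The arithmetic in the questions above can be checked with a short sketch. It only reproduces the unit conversions using the central values quoted in the comment (1,6 W/m2, 509 x 10^12 m2, 8 ZJ per year, 40 PW, 1,2%). The function and variable names are illustrative, and the 365.25-day year is an assumption made here.

```python
# Back-of-envelope check of the figures quoted in the comment above.
# All default inputs are the comment's own central values, not independent data.
EARTH_SURFACE_M2 = 509e12               # surface area quoted in the comment
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # assumed length of a year
TERA = 1e12


def energy_budget(forcing_w_m2=1.6, ohc_zj_per_year=8.0,
                  latent_flux_pw=40.0, latent_change=0.012):
    """Convert the quoted values to terawatts and return them in a dict."""
    forcing_tw = forcing_w_m2 * EARTH_SURFACE_M2 / TERA
    ohc_tw = ohc_zj_per_year * 1e21 / SECONDS_PER_YEAR / TERA
    gap_tw = forcing_tw - ohc_tw
    return {
        "forcing_tw": forcing_tw,        # forcing times surface area
        "ohc_tw": ohc_tw,                # ocean heat content increase as a power
        "gap_tw": gap_tw,                # the "gap" the comment asks about
        "gap_share": gap_tw / forcing_tw,
        "latent_change_tw": latent_flux_pw * 1e15 * latent_change / TERA,
    }


if __name__ == "__main__":
    for name, value in energy_budget().items():
        print(f"{name}: {value:.3g}")
```

With these inputs the sketch gives roughly 814 TW for the forcing term, about 254 TW for the OHC increase, a gap of about 561 TW (close to 69% of the total), and 480 TW for a 1,2% change in a 40 PW latent heat flux. It confirms the conversions only. It says nothing about whether a forcing figure can be compared directly with a heating rate, which is the point sidd raises further down the thread.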

Another set of questions related to climate sensitivity:

========================================

– the responses to the seasonal forcing (~10 W /m2 in the Southern Hemisphere, and twice as much in the NH), are both very close to the value of 0,57 °C / W /m2.

Of course, this “annual sensitivity”… should not be considered as a kind of proxy for the one related to a forcing… indeed 10 times smaller… but “on going”; and steadily increasing: about ~0,3 W /m2 every 10 years; (for the present status of atm. CO2 concentration.)

However, the corresponding increase in surface T (global average), of 0,17°C /decade, also leads to less than 0,6 °C / W /m2.
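As a quick check of the ratio quoted above, here is a minimal sketch that divides the decadal warming by the decadal forcing increase. The function name is hypothetical and the two inputs are the commenter's figures.

```python
def sensitivity_per_forcing(delta_t_c, delta_f_w_m2):
    """Temperature change per unit forcing, in degrees C per (W/m2)."""
    return delta_t_c / delta_f_w_m2


if __name__ == "__main__":
    # 0.17 C per decade against 0.3 W/m2 per decade, as quoted in the comment
    print(round(sensitivity_per_forcing(0.17, 0.3), 2))
```

The ratio comes out near 0.57 °C per W/m2, which matches the "less than 0,6" stated in the comment.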

– Historically, transient climate sensitivity (TCS) has been evaluated thanks to satellite measurement series of Earth Radiative Balance (e g “ERBS” time series, followed by “CERES”, or even the French “SCARAB” on a Russian Meteor).

When OHC increase became available, it yielded new values of TCS which, by the way, are lower than those derived from ERB series.

– If, (as I believe), measurements of OHC increase are more accurate (or less inaccurate…), than those of ERB, should we consider limiting the upper range of values for climate sensitivity ?

Thank you Prof. Cox for your comments. I reread your paper in their light and understood it better.

You write:

“The variance of the net radiative forcing is approximately equal to the variance of the top-of-the-atmosphere flux …”

How strong is the evidence for the approximation ?

sidd

Thomas, #31:

Very true–all except for the very last three words.

Not to be a Debbie Downer, but in fact it’s quite difficult to do. For one thing, what “[ YOU THINK / IMAGINE ] is being said” is very often, from one’s own point of view, so obviously and transparently “[ REALLY WHAT ] is being said” that the recommended reflection process is completely short circuited as (apparently) unnecessary. For example, I’m sure that Killian really thinks that people are lying when he accuses them of doing so. And that assessment is typically pre-conscious; it happens BEFORE rational questioning can kick in. So you have to train yourself to ‘pause’ before reacting, to allow reflection its chance. It can be done, but it is emphatically not easy.

For another, the problem is compounded by our own emotional responses. True, the ‘maintenance of Self-esteem’ helps with that, but the recommended ‘maintenance’ is a highly non-trivial problem, and comes without a user’s manual–or rather, with a plethora of partially contradictory ones, all of which are unauthorized by the manufacturer. That’s exacerbated by a notable lack of model uniformity… For example, I react angrily when someone tells me I’m lying, or ascribes motives to me that are not mine, or perhaps which I do not wish to acknowledge as mine.

For a third, speech (and writing) is full of emotional content even (or sometimes perhaps especially) when we think we are being the most purely rational. Zingers are always tempting, and the desire to ‘win’ is powerful. Thus, even while counseling rational tranquility and Zen-like detachment, one may smuggle in a little dig, such as “Communications Experts have recently coined the term “MA Rodger Syndrome” to describe this dynamic.” Call it the Thomas Syndrome–or maybe the Kevin McKinney Syndrome.

All which is not to say Thomas’s advice about Active Listening isn’t good. It is. It just isn’t as easy as all that.

Kinda like carbon mitigation.

Thx for insightful genuine comments K McK. Smarter than the avg bear.

Do remember to include chicken or egg (what came first) and context because they are important Natural Variations. :)

How about this brutally simplified calculation for a lower bound of equilibrium temperature sensitivity:

– there seems to be a consensus that transient t.s. < equilibrium t.s.

– today, the trend line is at +1 C (see Columbia graph)

– CO2 is at 410, which is 1.46 * 280

– rise is logarithmic, log(base2) of 1.46 = 0.55

– 1/0.55 = 1.8

– therefore, a lower bound for ETS is 1.8 C

Would it be possible to do a similar estimate for an upper bound of ETS? Could that be deduced from temperature not rising faster?
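The steps in this simplified estimate can be written out as a short sketch. It follows the comment's own assumptions (1 C of warming so far, 410 ppm against 280 ppm, a logarithmic response to CO2 alone). The function name and defaults are illustrative only.

```python
import math


def lower_bound_sensitivity(warming_c=1.0, co2_now_ppm=410.0,
                            co2_preindustrial_ppm=280.0):
    """Observed warming divided by the number of CO2 doublings so far."""
    doublings = math.log2(co2_now_ppm / co2_preindustrial_ppm)
    return warming_c / doublings


if __name__ == "__main__":
    print(f"{lower_bound_sensitivity():.2f} C per doubling")
```

Without the intermediate rounding used in the comment, the result is about 1.82 C per doubling, consistent with the 1.8 C quoted.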

Re: earth energy imbalance

Incognitoto wrote:

“I understand that the 2018 forcing, (with feedbacks related to more than 400 ppm CO2), may be close to 2 W /m2 ”

My understanding is that the imbalance is under 1 watt/m^2 . Perhaps a citation for 2 watt/m^2 would help ?

sidd

Sidd, #45–

That was then, and of course this is now.

Here’s a quick ref., based on AR5 Chapter 8.

http://climate.envsci.rutgers.edu/climdyn2013/IPCC/IPCC_WGI12-RadiativeForcing.pdf

See slide 5.

Thomas, #45–

[Fist bump]

“Do remember to include chicken or egg (what came first) and context because they are important…”

Sorry, I always have trouble with those two. Memory issues, I expect.

Re: radiative imbalance

Does anyone have the answer to Mr. Incognitoto’s question ? Where is the heat going if not the ocean ?

sidd

OK I have a question.

Peter Cox says in his response about the “Hasselmann model” and the issues raised by Rasmus about it:

1) We use an Emergent Constraint (EC) approach, which means that we use GCMs to determine the relationship between variability and ECS. The Hasselmann model is merely used to inform our search for the most appropriate metric of variability.

2) We do not assume the Hasselmann model is a good representation of the historical warming. Of course it isn’t and we say that very clearly in our paper: “The constant heat capacity C in this model is a simplification that is known to be a poor representation of ocean heat uptake on longer timescales. However, we find that it still offers very useful guidance about global temperature variability on shorter timescales”.
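For readers who want to see what the idealised model does, here is a minimal sketch. It assumes the usual one-box form C dT/dt = Q - λT with white-noise forcing Q, since equation (1) is not reproduced in this thread, and it assumes the variability metric takes the form Ψ = σ_T / sqrt(-ln α_1), where α_1 is the lag-1 autocorrelation. Both are my reading of Cox et al. (2018), not something stated here. The parameter values are arbitrary illustrations, not values from the paper or from any GCM.

```python
import math
import random


def simulate_hasselmann(lam, heat_capacity, sigma_q, n_steps, dt=1.0, seed=0):
    """Forward-Euler integration of C dT/dt = Q - lam*T with white-noise Q."""
    rng = random.Random(seed)
    temperature = 0.0
    series = []
    for _ in range(n_steps):
        forcing = rng.gauss(0.0, sigma_q)   # white-noise forcing (assumption)
        temperature += dt / heat_capacity * (forcing - lam * temperature)
        series.append(temperature)
    return series


def std_and_lag1(series):
    """Sample standard deviation and lag-1 autocorrelation of a series."""
    n = len(series)
    mean = sum(series) / n
    anomalies = [x - mean for x in series]
    variance = sum(a * a for a in anomalies) / n
    covariance = sum(a * b for a, b in zip(anomalies, anomalies[1:])) / n
    return math.sqrt(variance), covariance / variance


def psi(series):
    """Assumed metric: sigma_T / sqrt(-ln(alpha_1)); needs alpha_1 in (0, 1)."""
    sigma_t, alpha_1 = std_and_lag1(series)
    return sigma_t / math.sqrt(-math.log(alpha_1))


if __name__ == "__main__":
    # Hypothetical values: lam in W/m2/K, heat capacity in W yr/m2/K, dt in years.
    for lam in (1.0, 2.0):
        run = simulate_hasselmann(lam, 8.0, 0.5, 100_000, seed=1)
        print(f"lam={lam}: psi={psi(run):.3f}")
```

In this toy setting, doubling λ (halving the sensitivity) roughly halves Ψ, which is the link between variability and sensitivity that the model suggests. The sketch holds C and λ constant and therefore cannot say whether that link survives in the real climate system.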

—

Rasmus says in intro that: “It was based on a study recently published in Nature (Cox et al. 2018), however, I think its conclusions are premature.

The calculations in question involved both an over-simplification and a set of assumptions which limit their precision, if applied to Earth’s real climate system.

They provide a nice idealised and theoretical description, but they should not be interpreted as an accurate reflection of the real world.”

—

I had asked in another thread whether this “paper” was more theoretical or practical, and so welcome the discussion.

—

So Rasmus also says: “This demonstration suggests that the Hasselmann model underestimates the climate sensitivity and the over-simplified framework on which it is based precludes high precision.”

—

But Cox says several other things as well, which Rasmus has not yet addressed, mentioned, agreed or disagreed with.

So where are ‘we’ as readers now?

Does Cox properly address Rasmus’ concerns?

Does Rasmus still hold that “its conclusions are premature”?

If so then why does what Cox said above pts 1-5 make no difference?

Does Cox question the calcs provided by Rasmus above?

Are they appropriate and if not, then why not?

Who is more right?

Are the two protagonists talking about the same things in the same way or are they perhaps talking past each other? I have no idea – above my pay grade.

But I am on the edge of my seat waiting to find out ….

[Response: Thanks for those questions, Thomas. I don’t think that Cox addressed my concerns adequately, and I still think the conclusions of the paper are premature. In the METHODS description of the paper, it is stated that they hypothesize that the Hasselmann model is a “reasonable approximation to the short-term variations”. Then by assuming that the forcing term “[…] can be approximated by white noise”, they use the mathematical equation (1) describing the Hasselmann model to come up with the solution […] and a ratio of […] and […] being […]. I think this is a very crude approach, due to the brutal simplification and several assumptions/approximations which do not really hold. Hence, the calculations cannot give a high precision that reflects the real world.

I also had a number of other points, which Cox did not respond to. And by the way (my fault), the term […] may also be sensitive to temperature (e.g. snow-albedo feedback), and probably should be […] as well. If the terms […] and […] are no longer constant, but functions of temperature, then that changes the mathematics and the derivations of the solutions. Perhaps they can be assumed to be roughly constant, but that would require an extra evaluation. We should not assume that the climate sensitivity is constant either, unless there are studies suggesting it is. On the other hand, such simple models and analysis are beautiful and useful for discussing topics like these. It’s just that they should not be misinterpreted for something they are not. -rasmus]

Thomas @31, on his comments on communication: I agree totally, but it’s also sometimes a simple thing, where people write quick comments due to time pressure, and they lack clarity, or we misinterpret what people say. It sometimes leads to unfortunate personal feuds.