Global climate models (GCMs) are designed to simulate Earth’s climate over the entire planet, but their heavy computational demands limit their spatial resolution, and hence their ability to describe local details. There is a nice TED talk by Gavin that explains how climate models work.

We need to apply downscaling to derive the local details. Downscaling may be done through empirical-statistical downscaling (ESD) or with regional climate models (RCMs) that use a much finer grid. Both take the crude (low-resolution) solution provided by the GCMs and add finer topographical details (boundary conditions) to calculate more detailed information. However, do more details translate into a better representation of the world?
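To make the ESD idea concrete, here is a minimal sketch of its simplest form, assuming a single linear relationship between one large-scale predictor (e.g. a regional temperature anomaly from a GCM) and one local predictand (a station temperature). Real ESD usually involves several predictors, such as principal components of large-scale fields, and careful cross-validation. The data are synthetic, and the functions `fit_esd` and `downscale` are hypothetical names used only for illustration.

```python
import random

def fit_esd(predictor, predictand):
    """Ordinary least-squares fit of predictand = a + b * predictor."""
    n = len(predictor)
    mx = sum(predictor) / n
    my = sum(predictand) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(predictor, predictand))
    sxx = sum((x - mx) ** 2 for x in predictor)
    b = sxy / sxx
    a = my - b * mx
    return a, b

def downscale(coef, gcm_predictor):
    """Apply the calibrated statistical relationship to (hypothetical) GCM output."""
    a, b = coef
    return [a + b * x for x in gcm_predictor]

# Synthetic calibration data: a large-scale anomaly (predictor) and a station
# temperature that depends on it plus local noise (assumed values 2.0 and 1.5).
rng = random.Random(42)
large_scale = [rng.gauss(0.0, 1.0) for _ in range(500)]
station = [2.0 + 1.5 * x + rng.gauss(0.0, 0.5) for x in large_scale]

coef = fit_esd(large_scale, station)
# A hypothetical GCM scenario that is 1 degree warmer at the large scale.
future = downscale(coef, [x + 1.0 for x in large_scale])
print("intercept, slope:", [round(c, 2) for c in coef])
```

The local response to the large-scale warming is set by the fitted slope, which is where the local information (here, a station that warms 1.5 times as fast as the region) enters.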

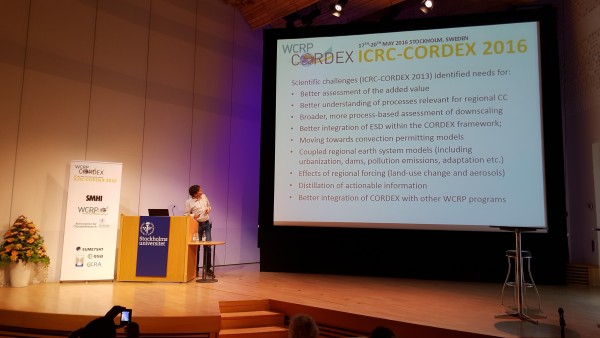

The question of “added value” was an important topic at the International Conference on Regional Climate hosted by CORDEX of the World Climate Research Programme (WCRP). The take-home message was mixed on whether RCMs provide a better description of local climatic conditions than the coarser GCMs.

RCMs can add details such as the influence of lakes, sea breezes, mountain ranges, and sharper weather fronts. However, the systematic differences between RCM results and observations are not necessarily smaller than those for GCMs.

There is a distinction between an improved climatology (mainly because topographic details influence rainfall) and higher skill in forecasting change, a distinction discussed in a previous post.

Global warming implies large-scale changes as well as local consequences. The local effects are moderated by fixed geographical conditions. It is through downscaling that this information is added to the equation. The added value of the extra efforts to downscale GCM results depends on how you want to make use of the results.

The discussion during the conference left me with a thought: why do we not see more useful information coming out of our efforts? An absence of added value is surprising if one considers downscaling as a matter of adding information to climate model results. Surprising results open up new research and call for explanations of what is going on. But added value depends on the context and on which question is being asked, and this question is often not made explicit.

There are also complicating matters such as the varying effects that arise when one combines different RCMs with different GCMs (known as the “GCM/RCM matrix”) or whether you use ESD rather than RCMs.

I think that we perhaps struggle with some misconceptions in our discourse on added value. Even if RCMs cannot provide high-resolution climate information, that does not imply that downscaling is impossible or that it is futile to predict local climate conditions.

There are many strategies for deriving local (high-resolution/detailed) climate information in addition to RCM and ESD.

Weather statistics are often predictable even when individual weather events are not, and climate can be regarded as weather statistics. Combining data with a number of statistical analyses is a good start, and historical trends provide some information. It is also useful to formulate good and clear research questions.
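The point that statistics can be predictable while the weather itself is not can be illustrated with a toy weather generator. This is a sketch under made-up assumptions (30% wet days, exponentially distributed wet-day amounts with a 5 mm mean, no persistence from day to day); `simulate_precip` and `wet_day_stats` are hypothetical helpers, not an established tool. Two independent ten-year "realisations" of the same climate disagree from day to day, yet their statistics are nearly identical.

```python
import random

def simulate_precip(n_days, p_wet, mean_amount, rng):
    """Synthetic daily precipitation (mm): each day is wet with probability
    p_wet, and wet-day amounts are exponential with mean mean_amount."""
    return [rng.expovariate(1.0 / mean_amount) if rng.random() < p_wet else 0.0
            for _ in range(n_days)]

def wet_day_stats(series):
    """Return (wet-day frequency, mean wet-day amount)."""
    wet = [x for x in series if x > 0.0]
    return len(wet) / len(series), sum(wet) / len(wet)

# Two independent "weather" realisations of the same ten-year climate.
run_a = simulate_precip(3650, 0.3, 5.0, random.Random(1))
run_b = simulate_precip(3650, 0.3, 5.0, random.Random(2))

# Day by day, the wet/dry states agree only about as often as chance gives
# (0.3**2 + 0.7**2 = 0.58), i.e. the daily weather is not predictable ...
same_state = sum((a > 0.0) == (b > 0.0) for a, b in zip(run_a, run_b)) / 3650
# ... yet the statistics of the two runs (the "climate") almost coincide.
print("days with same wet/dry state:", round(same_state, 2))
print("run A stats:", [round(v, 2) for v in wet_day_stats(run_a)])
print("run B stats:", [round(v, 2) for v in wet_day_stats(run_b)])
```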

I don't think it's wrong to say that statistics is a core issue in climatology, but climate research still has some way to go in terms of applying state-of-the-art methods.

I have had very rewarding discussions with statisticians from NCAR, Exeter, UCL, and Computing Norway, and looking at a problem from a statistical viewpoint often gives a new angle. It may also offer a new direction when progress goes in circles.

There are, for instance, still missing perspectives on extremes: present work includes a set of indices and return-value analysis, but excludes record-breaking event statistics (Benestad, 2008) and event-count statistics (Poisson processes).
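Both perspectives rest on simple null hypotheses. If the values in a series are independent and identically distributed (no trend), the k-th value is a new record with probability 1/k, so the expected number of records in n values is the harmonic number H_n = 1 + 1/2 + ... + 1/n; a warming trend shows up as more records than that. Event counts, assuming events occur independently at a constant mean rate λ, follow a Poisson distribution. The sketch below, assuming continuous data without ties, checks the record expectation by simulation and includes the Poisson probability. It illustrates the basic idea only and is not a reproduction of the analysis in Benestad (2008); the function names are hypothetical.

```python
import math
import random

def count_records(series):
    """Number of record-breaking values (strictly above all earlier values);
    the first value counts as a record by convention."""
    records = 0
    current_max = -math.inf
    for x in series:
        if x > current_max:
            records += 1
            current_max = x
    return records

def expected_records(n):
    """Expected number of records in n iid values: the harmonic number H_n."""
    return sum(1.0 / k for k in range(1, n + 1))

def poisson_pmf(k, lam):
    """Probability of exactly k events when events occur at mean rate lam."""
    return lam ** k * math.exp(-lam) / math.factorial(k)

# Monte Carlo check of H_n with 2000 synthetic 50-year iid series.
rng = random.Random(0)
n_years, n_trials = 50, 2000
mean_records = sum(
    count_records([rng.gauss(0.0, 1.0) for _ in range(n_years)])
    for _ in range(n_trials)
) / n_trials
print("expected:", round(expected_records(n_years), 3), "simulated:", round(mean_records, 3))
```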

Another important issue is to appreciate the profound meaning of random samples and sample size. This also matters for extreme events, which always involve small statistical samples (by definition, they lie in the tails of the distribution); we should therefore expect patchy and noisy maps due to random sampling fluctuations.
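A synthetic "map" in which every grid cell has exactly the same climate shows how much patchiness sampling alone can produce. In this sketch each cell draws ten years of independent daily values from the same standard normal distribution, and we count the days above the true 99th percentile. The expected count is 36.5 everywhere, with a binomial standard deviation of about 6, yet the map is far from uniform. The grid size, record length and threshold are arbitrary illustrative choices, and the cells are assumed independent (real neighbouring cells are spatially correlated, which makes the patches larger); `exceedance_map` is a hypothetical helper.

```python
import random
from statistics import NormalDist, mean, pstdev

def exceedance_map(n_rows, n_cols, n_days, threshold, rng):
    """Count days above `threshold` in each grid cell, where every cell draws
    independent daily values from the SAME standard normal distribution."""
    return [[sum(rng.gauss(0.0, 1.0) > threshold for _ in range(n_days))
             for _ in range(n_cols)]
            for _ in range(n_rows)]

rng = random.Random(3)
threshold = NormalDist().inv_cdf(0.99)  # true exceedance probability is 1%
n_days = 3650                           # ten years of synthetic daily data
grid = exceedance_map(5, 8, n_days, threshold, rng)
for row in grid:
    print(" ".join(f"{c:3d}" for c in row))

counts = [c for row in grid for c in row]
print("expected:", 0.01 * n_days, "mean:", mean(counts),
      "spread (sd):", round(pstdev(counts), 1))
```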

Patchy maps were (of course) presented for extreme values at the conference, but we can extract more information from such analyses than simply concluding that extremes are very geographically heterogeneous. Such maps reminded me of a classical mistake whereby statistics from samples of different sizes are compared, such as the zonal means still found in recent IPCC assessment reports (Benestad, 2005).
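Why sample size matters for such comparisons can be seen in a generic sketch (not the zonal-mean analysis of Benestad, 2005): the largest value in a sample grows with the sample size even when every sample comes from the same distribution. Comparing maxima, or other extreme statistics, from regions or periods with different numbers of data points can therefore suggest differences that are purely an artefact of sample size. The standard normal distribution and the sample sizes are assumptions chosen for illustration, and `mean_sample_max` is a hypothetical helper.

```python
import random
from statistics import mean

def mean_sample_max(sample_size, n_trials, rng):
    """Average of the largest value over many samples of a given size, all
    drawn from the same standard normal distribution."""
    return mean(max(rng.gauss(0.0, 1.0) for _ in range(sample_size))
                for _ in range(n_trials))

rng = random.Random(7)
for size in (10, 100, 1000):
    # Same underlying distribution; only the sample size changes.
    print(size, round(mean_sample_max(size, 1000, rng), 2))
```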

There were a number of interesting questions raised, such as “What is information?” Information is not the same as data, and we know that observations and models often represent different things. Rain gauges sample an area of less than 1 m², phenomena producing precipitation often have scales of square kilometres, and most RCMs predict the area average over 100 km². This has implications for model evaluation.
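A minimal sketch of this scale mismatch, assuming Gaussian anomalies and a single made-up correlation ρ = 0.5 between every pair of sub-grid cells: if each cell is a shared component plus an independent local component, the variance of the mean over N cells is ρ + (1 − ρ)/N. For N = 100 the grid-box average then has a standard deviation of about 0.71 times that of a single point, so a "gauge" and a "grid box" are not expected to show the same extremes. Real precipitation is intermittent and skewed, so the numbers are only indicative; `point_and_area` is a hypothetical helper.

```python
import math
import random
from statistics import pstdev

def point_and_area(n_cells, rho, rng):
    """One time step: return (value at a single point, mean over n_cells).
    Each cell = shared component + independent local component, so any two
    cells have correlation rho (an assumed value)."""
    shared = rng.gauss(0.0, 1.0)
    cells = [math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
             for _ in range(n_cells)]
    return cells[0], sum(cells) / n_cells

rng = random.Random(11)
samples = [point_and_area(100, 0.5, rng) for _ in range(5000)]
points = [p for p, _ in samples]
areas = [a for _, a in samples]
print("point sd:", round(pstdev(points), 2), "area-mean sd:", round(pstdev(areas), 2))
print("theory:", 1.0, round(math.sqrt(0.5 + 0.5 / 100), 2))
```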

When it comes to model performance, a concept known as “bias correction” was debated. It is still controversial and has been described as a way to get the “right answer for the wrong reason”. If it is not well understood, it may increase the risk of maladaptation through overconfidence in the corrected results.
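For readers unfamiliar with the technique, one common form of bias correction is empirical quantile mapping, sketched below; the debate at the conference was not necessarily about this specific method. Each model value is replaced by the observed value at the same non-exceedance probability in a calibration period. The sketch also shows why the "right answer for the wrong reason" criticism arises: the corrected distribution matches the observations by construction, whether or not the model's underlying processes are right. The data are synthetic, the helper names are hypothetical, and values outside the calibration range are simply clamped to the observed extremes, which is one of the method's known weak points.

```python
import bisect
import random
from statistics import mean, pstdev

def empirical_quantile(sorted_values, p):
    """Linearly interpolated quantile of an already sorted list, 0 <= p <= 1."""
    pos = p * (len(sorted_values) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac

def quantile_map(model_hist, obs, model_values):
    """Empirical quantile mapping: map each model value to the observed value
    at the same non-exceedance probability in the calibration period."""
    mh = sorted(model_hist)
    ob = sorted(obs)
    corrected = []
    for x in model_values:
        p = bisect.bisect_left(mh, x) / len(mh)  # empirical CDF of the model
        corrected.append(empirical_quantile(ob, p))
    return corrected

rng = random.Random(5)
obs = [rng.gauss(10.0, 2.0) for _ in range(2000)]         # "observed" data
model_hist = [rng.gauss(12.0, 3.0) for _ in range(2000)]  # biased model output
corrected = quantile_map(model_hist, obs, model_hist)
print("mean model/corrected/obs:",
      round(mean(model_hist), 2), round(mean(corrected), 2), round(mean(obs), 2))
```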

Related issues included ethics, as well as a term that seemed to invoke a range of different interpretations: “distillation”. My understanding of this concept is the process of extracting the essential climate information needed for a specific purpose; however, such terms are not ideal when they are non-descriptive.

Another such term is “climate services”, although there have been some good efforts to explain it, e.g. putting climate services in farmers' hands.

Much of the discussion during the conference was from the perspective of providing information to decision-makers, but it might be useful to ask “How do they make use of weather/climate information in decision-making? What information have they used before? What are the consequences of a given outcome?” In many cases, a useful framing may be in terms of risk management and co-production of knowledge.

How the information is used cannot be ignored if we are going to answer the question of whether RCMs bring added value. However, it is not the task of CORDEX to act as a climate service or to become too involved with the user community.

Added value may be associated both with a science question and with how the information is used to aid decisions, and the WCRP has formulated a number of “grand challenges”. These “grand challenges” are fairly general, and we need “sharper” questions and hypotheses that can be subjected to scientific tests. Some experiments have been formulated within CORDEX, but at the moment they are only a first step and do not really address the question of added value.

On the other hand, added value is not limited to science questions, and CORDEX is not just about specific science questions; it should also be topic-driven (e.g. developing downscaling methodology) to support the evolution of the research community and its capacity.

Future activities under CORDEX may be organised as “Flagship Pilot Studies” (FPS) for scientists who want an official “endorsement” and more coordination of their work. CORDEX may also benefit from closer involvement with hydrology and statistics.