In a new paper in Science Express, Karl et al. describe the impacts of two significant updates to the NOAA NCEI (née NCDC) global temperature series. The two updates are: 1) the adoption of ERSST v4 for the ocean temperatures (which incorporates a number of corrections for biases associated with different measurement methods), and 2) the use of the larger International Surface Temperature Initiative (ISTI) weather station database instead of GHCN. This kind of update happens all the time as datasets expand through data-recovery efforts and increasing digitization, and as biases in the raw measurements are better understood. However, this update is going to be bigger news than normal because of the claim that the ‘hiatus’ is no more. To understand why this is perhaps less dramatic than it might seem, it’s worth stepping back for a little context…

Global temperature anomaly estimates are a product, not a measurement

The first thing to remember is that an estimate of how much warmer one year is than another in the global mean is just that: an estimate. We do not have direct measurements of the global mean anomaly. Rather, we have a large database of raw measurements at individual locations over a long period of time, but with an uneven spatial distribution, many missing data points, and a large number of non-climatic biases varying in time and space. Converting that into a useful time-varying global mean requires a statistical model, a good understanding of the data problems, and enough redundancy to characterise the uncertainties. Fortunately, there have been multiple approaches to this in recent years (GISTEMP, HadCRUT4, Cowtan & Way, Berkeley Earth, and NOAA NCEI), all of which give basically the same picture.

Composite of multiple estimates of the global temperature anomaly from Skeptical Science.

Once this is understood, it’s easy to see why there will be updates to the historical estimates over time: the raw measurement dataset used can be expanded, biases can be better understood and characterised, and the basic statistical methods for stitching it all together can be improved. Generally speaking, these changes are minor and don’t affect the big picture.

Ocean temperature corrections are necessary and reduce the global warming trend

The saga of ocean surface temperature measurements is complicated, but you can get a good sense of the issues by reviewing some of the discussion that followed the Thompson et al. (2008) paper, for instance “Of buckets and blogs” and “Revisiting historical ocean surface temperatures”. The basic problem is that the method for measuring sea surface temperature has changed over time and differs across ships, and this needs to be corrected for.

The new NOAA NCEI data is only slightly different from the previous version

The sum total of the improvements discussed in the Science paper is actually small. There is some variation around the 1940s because of the ‘bucket’ corrections, and a slight increase in the trend over the most recent decade:

Figure 2 from Karl et al (2015), showing the impact of the new data and corrections. A) New and old estimates, B) the impact of all corrections on the new estimate.

The second panel is useful, demonstrating that the net impact of all corrections to the raw measurements is to reduce the overall trend.

The ‘hiatus’ is so fragile that even those small changes make it disappear

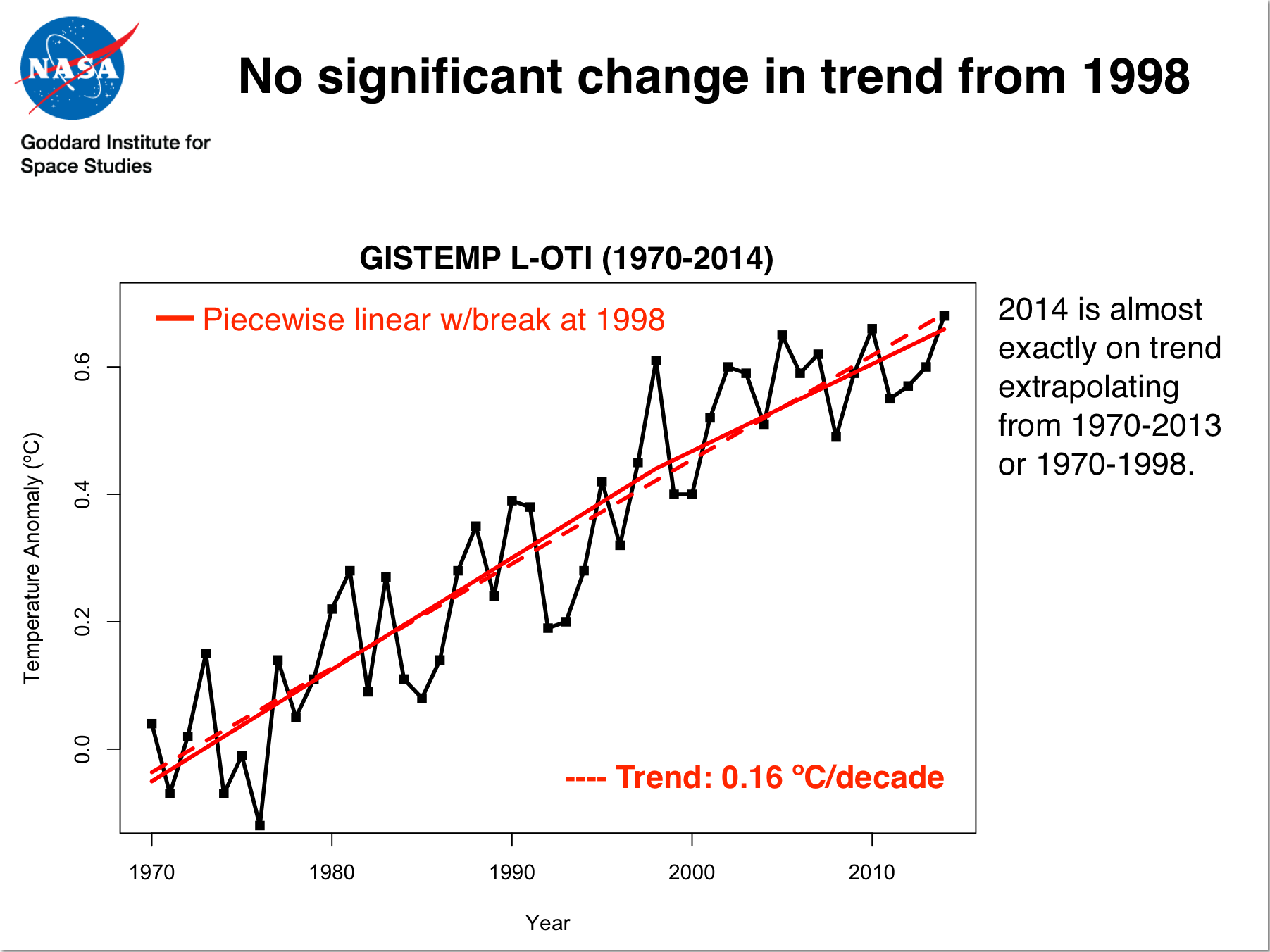

The ‘selling point’ of the paper is that with the updates to data and corrections, the trend over the recent decade or so is now significantly positive. This is true, but in many ways irrelevant. This is because there are two very distinct questions that are jumbled together in many discussions of the ‘hiatus’: the first is whether there is any evidence of a change in the long-term underlying trend, and the second is how to attribute short-term variations. That these are different is illustrated by the figure I made earlier this year:

As should be clear, there is no evidence of any significant change in trend post-1997. Nonetheless, if you just look at the 1998-2014 trend and ignore the error bars, it is lower, chiefly as a function of the pattern of ENSO/PDO variability. With the NOAA updates, the recent trend goes from 0.06±0.07 ºC/decade to 0.11±0.07 ºC/decade, becoming ‘significant’ at the 95% level. However, this is very much an example of where changes in (statistical) significance are not that (practically) significant. Using either record in the same analysis as shown in the last figure would give the same result: there is no practical or statistical evidence that there has been a change in the underlying long-term trend (see Tamino’s post on this as well). The real conclusion is that this criterion for a ‘hiatus’ is simply not a robust measure of anything.
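To make the ‘significance threshold’ point concrete, here is a minimal Python sketch of an ordinary least-squares trend with an approximate 95% (±2σ) interval. It assumes independent, white-noise residuals; published trend uncertainties usually also account for autocorrelation, which this sketch does not, so it is illustrative only. The function names `ols_trend` and `is_significant` are hypothetical.

```python
import math

def ols_trend(years, temps):
    """Return (slope, two_sigma) in degC per decade for an ordinary
    least-squares fit, assuming independent (white-noise) residuals."""
    n = len(years)
    if n < 3:
        raise ValueError("need at least 3 points")
    t_mean = sum(years) / n
    y_mean = sum(temps) / n
    sxx = sum((t - t_mean) ** 2 for t in years)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(years, temps))
    slope = sxy / sxx                      # degC per year
    intercept = y_mean - slope * t_mean
    residuals = [y - (intercept + slope * t) for t, y in zip(years, temps)]
    sigma2 = sum(r * r for r in residuals) / (n - 2)   # residual variance
    se = math.sqrt(sigma2 / sxx)           # standard error of the slope
    return slope * 10, 2 * se * 10         # per decade, ~95% half-width

def is_significant(slope, two_sigma):
    """'Significant' here just means the ~95% interval excludes zero."""
    return abs(slope) > two_sigma

# The two 1998-2014 estimates quoted above:
print(is_significant(0.06, 0.07))  # False: interval spans zero
print(is_significant(0.11, 0.07))  # True: interval excludes zero
```

The two intervals, [-0.01, 0.13] and [0.04, 0.18] ºC/decade, overlap substantially, yet only one of them clears zero; the binary label flips while the estimates themselves barely differ.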

Model-observation comparisons are not greatly affected by this update

I’ve been remiss in updating these comparisons (see 2012, 2011, and 2010), but this is a good opportunity to do so. First, I show how the CMIP3 model-data comparisons are faring. This is a clean continuation to what I’ve shown before:

It is clear that temperatures are well within the expected range, regardless of the NCDC/NCEI version. Note that the model range encompasses all of the simulated internal variability as well as an increasing spread over time which is a function of model structural uncertainty. These model simulations were performed in 2004 or so, using forcings that were extrapolated from 2000.

More recently (around 2011), a wider group of modelling centers, with more models and more up-to-date ones, performed the CMIP5 simulations. The basic picture is similar, whether over the 1950-to-present range or looking more closely at the last 17 years:

The current temperatures are well within the model envelope. However, some colleagues and I recently looked closely at how well the CMIP5 simulation design has held up (Schmidt et al., 2014) and found two significant issues: first, the cooling associated with volcanic emissions was underestimated post-2000 in these runs, and second, solar forcing in recent years has been lower than was anticipated. While these are small effects, we estimated that had the CMIP5 simulations got them right, it would have had a noticeable effect on the ensemble. We illustrate that using the dashed lines post-1990. If this is valid (and I think it is), the observations sit well within the modified envelope, regardless of which product you favour.

The contrary-sphere really doesn’t like it when talking points are challenged

The harrumphing from the usual quarters has already started. The Cato Institute sent out a pre-rebuttal even before the paper was published, replete with a litany of poorly argued points and illogical non-sequiturs. From the more excitable elements, one can expect a chorus of claims that raw data is being inappropriately manipulated. The fact that the corrections for non-climatic effects reduce the trend will not be mentioned. Nor will there be any actual alternative analysis demonstrating that alternative methods to dealing with known and accepted biases give a substantially different answer (because they don’t).

The ‘hiatus’ is no more?

Part of the problem here is simply semantic. What do people even mean by a ‘hiatus’, ‘pause’ or ‘slowdown’? As discussed above, if by ‘hiatus’ or ‘pause’ people mean a change to the long-term trends, then the evidence for this has always been weak (see also this comment by Mike). If people use ‘slowdown’ to simply point to a short-term linear trend that is lower than the long-term trend, then this is still there in the early part of the last decade and is likely related to an interdecadal period (through at least 2012) of more La Niña-like conditions and stronger trade winds in the Pacific, with greater burial of heat beneath the ocean surface.

So while not as dead as the proverbial parrot, the search for dramatic explanations of some anomalous lack of warming is mostly over. As is common in science, anomalies (departures from expectations) are interesting and motivating. Once identified, they lead to a reexamination of all the elements. In this case, a host of small issues has been identified (and, in truth, there are always small issues in any complex field) involving the fidelity of the observations (the spatial coverage, the corrections for known biases), the fidelity of the models (issues with the forcings, examinations of the variability in ocean vertical transports, etc.), and the coherence of the model-data comparisons. Dealing with those varied but small issues has basically led to the evaporation of the original anomaly. This happens often in science: most anomalies don’t lead to a radical overhaul of the dominant paradigms. Thus I predict that while contrarians will continue to bleat about this topic, scientific effort on it will slow, because what remains to be explained is now basically well within the bounds of what might be expected.

References

- T.R. Karl, A. Arguez, B. Huang, J.H. Lawrimore, J.R. McMahon, M.J. Menne, T.C. Peterson, R.S. Vose, and H. Zhang, "Possible artifacts of data biases in the recent global surface warming hiatus", Science, vol. 348, pp. 1469-1472, 2015. http://dx.doi.org/10.1126/science.aaa5632

- B. Huang, V.F. Banzon, E. Freeman, J. Lawrimore, W. Liu, T.C. Peterson, T.M. Smith, P.W. Thorne, S.D. Woodruff, and H. Zhang, "Extended Reconstructed Sea Surface Temperature Version 4 (ERSST.v4). Part I: Upgrades and Intercomparisons", Journal of Climate, vol. 28, pp. 911-930, 2015. http://dx.doi.org/10.1175/JCLI-D-14-00006.1

- D.W.J. Thompson, J.J. Kennedy, J.M. Wallace, and P.D. Jones, "A large discontinuity in the mid-twentieth century in observed global-mean surface temperature", Nature, vol. 453, pp. 646-649, 2008. http://dx.doi.org/10.1038/nature06982

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

Joe- I don’t view the observational (temperature) uncertainty as the limiting factor in deriving sensible TCR/ECS estimates from the observational record. Contributions from internal variability, uncertain forcings, and the construction of a model relating sensitivity to, e.g., near-surface T change and ocean heat uptake are likely far more limiting. As outlined in the Ringberg report, a lot of the observation-based estimates are likely biased low simply because they assume that surface temperature evolves linearly in response to a given radiative nudge on the system.

Whether or not a ‘hiatus’ exists seems mostly semantic, with some dependence on your favorite dataset. From a climate dynamics standpoint, though, it’s really an effort to take a change of order 0.1C and attribute fractions of it to a bunch of different factors that conspired to produce it. That could mean invoking your favorite three- and four-letter acronyms to describe some state of the atmosphere-ocean, with some forcing uncertainty issues thrown in, but there’s little compelling evidence of any big implication for sensitivity.

Hey Joe Duarte @44

As you hint at, using anomalies completely removes constant biases. Going back to my example, replace the numbers with symbols (e.g. Bbuoy, Bship) and do the maths: it turns out you only need the difference Bbuoy - Bship to make the correction properly.
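The offset argument can be checked numerically. The sketch below is a toy model, not the ERSST or HadSST procedure: it assumes a hypothetical true warming of 0.01 °C/yr, a fleet that shifts linearly from all ships to all buoys over 20 years, and constant biases chosen so that the ship-minus-buoy offset is the 0.12 °C quoted in this thread. The changing instrument mix alone drags the trend down, and adding only the bias difference to the buoy readings recovers the true trend.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den

# Hypothetical toy setup (not real data).
YEARS = list(range(20))
TRUTH = [0.01 * y for y in YEARS]        # true SST warming, degC per year
SHIP_FRAC = [1 - y / 19 for y in YEARS]  # ships fully replaced by buoys
B_SHIP, B_BUOY = 0.12, 0.0               # constant instrument biases, degC

def blended_record(buoy_adjustment):
    """Observed mean: ship and buoy readings weighted by their share,
    with a constant adjustment added to every buoy reading."""
    return [t + f * B_SHIP + (1 - f) * (B_BUOY + buoy_adjustment)
            for t, f in zip(TRUTH, SHIP_FRAC)]

raw = blended_record(0.0)
corrected = blended_record(B_SHIP - B_BUOY)  # only the difference is needed

print(f"true trend:      {ols_slope(YEARS, TRUTH):.4f} degC/yr")
print(f"raw trend:       {ols_slope(YEARS, raw):.4f} degC/yr")
print(f"corrected trend: {ols_slope(YEARS, corrected):.4f} degC/yr")
```

In this toy setup the raw trend comes out well below the true one. The corrected record equals the truth plus the constant B_SHIP, and that constant disappears as soon as anomalies are taken relative to a baseline period.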

Your next point is a good one that’s filled plenty of pages in the studies. ERSST did it globally and HadSST basin-by-basin, with the same result (0.12 C), suggesting little effect. The 20-year satellite record matches HadSST3 very well (Merchant et al., 2012, Figure 14), and in Figure 9 of Huang et al. (2014) the variations in the HadSST-ERSST difference are about 0.02 C since 1980. It looks like satellite infrared sensors, HadSST3 and ERSST4 are all pretty close over the past couple of decades, and the satellites support the newer series over the older ones.

The biggest disagreement is before the satellite period where ERSST has some surprising jumps around the 1940s. HadSST looks more believable there, but luckily if you’re talking “hiatus” or “slowdown” that doesn’t really matter.

I’ve never seen any sensible statistical evidence for a “slowdown” or “hiatus”. Every time someone claims one they have to (a) ignore statistical uncertainty or (b) allow magical “jumps” in the temperature record just before their chosen “slowdown” period. These “jumps” are usually hidden by removing data from before the “slowdown” or by not showing the longer-term trend.

I find the standard physical model-comparison approach to be the clearest for me. We have the physical models and their uncertainty, and we have the observations. If observations fall outside the model uncertainty then we must find out why. Even though surface observations still fall within the model range, they were getting toward the lower end and that led to interesting work like Kosaka & Xie (2013) and England et al. (2014).

Merchant et al. (2012): http://onlinelibrary.wiley.com/doi/10.1029/2012JC008400/pdf

@35 Buck:

Look at the second graph in figure 2. The corrections clearly make the past WARMER, not cooler, which directly refutes your assumption.

James, it’s just not fair to refute Buck with an obvious fact like that.

Referring to the comparison of T anomaly and model since 1950:

First, I am a little puzzled by the y-axis. Heat that melts ice is added to the system but does not change temperature, so any comparison of temperature to heat added will show less temperature rise than expected if ice has melted. Is there any correction that accounts for surface heat rather than surface T by considering the volume of melted ice? Or is this a small effect?

Perhaps related to that: the data match the best model to about 1995. Since then the data seems to track about 1/2 the error band below the best model. Is there an explanation for that? Or is it just chance?

Thank you

James, Jim,

Sorry I was not precise. The corrections have made the period just before the notorious hiatus (~1980 to ~2000) colder and the pause period warmer. Also worth noting: if the ‘hiatus’ is so fragile that even those small changes make it disappear, so is the warming signal. ;)

Karl Denninger, in his market-ticker blog is upset:

When You Don’t Get The Answer You Want, Lie

It’s time to close NOAA, unfortunately.

https://market-ticker.org/akcs-www?post=230227

@Buck…

Nice verbal statement. Statistically completely false. The amount of “correction” required to disappear the warming signal is far, far in excess of that which has, in fact, disappeared the “hiatus”.

The “hiatus” has always been an “effect” which requires ignoring the bias introduced by cherrypicking. By any correct use of accepted statistical inference, it never was shown to be probable. Warming is far more robust and does not require such an invalid procedure.
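The cherry-picking point can be illustrated with a small simulation. This is a sketch under stated assumptions: a hypothetical constant trend of 0.017 °C/yr plus independent Gaussian noise with a standard deviation of 0.1 °C, both chosen only for illustration. Real year-to-year variability is autocorrelated (ENSO, for instance), which would widen the spread further. Even though the underlying trend never changes, the lowest of all the 15-year window trends typically sits well below it.

```python
import random

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den

def simulate(n_years=60, trend=0.017, noise_sd=0.1, seed=1):
    """Hypothetical record: a constant linear trend plus white noise."""
    rng = random.Random(seed)
    years = list(range(n_years))
    temps = [trend * y + rng.gauss(0.0, noise_sd) for y in years]
    return years, temps

def window_trends(years, temps, width):
    """OLS trend in every contiguous window of `width` years."""
    return [ols_slope(years[i:i + width], temps[i:i + width])
            for i in range(len(years) - width + 1)]

years, temps = simulate()
short = window_trends(years, temps, 15)
print(f"full-record trend:   {ols_slope(years, temps):.4f} degC/yr")
print(f"lowest 15-yr trend:  {min(short):.4f} degC/yr")
print(f"highest 15-yr trend: {max(short):.4f} degC/yr")
```

Picking the minimum after looking at all the windows is exactly the selection step that ordinary significance tests do not account for.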

dhogaza, what’s with the snarkiness?

Buck, the ‘hiatus’ is not just fragile: there is no evidence, and never has been, that it ever existed in the first place.

Tamino demonstrates this quite clearly here:

https://tamino.wordpress.com/2015/04/30/slowdown-skeptic

and here:

https://tamino.wordpress.com/2015/01/20/its-the-trend-stupid-3/

That the noise of natural variability can temporarily be strong enough to make the underlying warming signal seem to “disappear” for short periods is nothing new. Sulphate aerosols from a large volcanic eruption can do so, such as Pinatubo in 1991-93. A series of La Ninas combined with the absence of a strong El Nino can do so, such as over recent years. Or, an exceptionally strong El Nino can greatly exceed the trend, as with 1997-98. But only for a short time in all those cases. Eventually we will revert to the underlying trend caused by earth’s radiative imbalance, which has been there all along.

Chris J @ 55:

Are you referring to the bottom figure? If so, then comparisons are temperature-to-temperature. Somewhere around 2% of heat has gone into melting ice according to this:

http://www.carbonbrief.org/blog/2013/03/worlds-oceans-are-getting-warmer-faster/

which sounds about right. Models do simulate ice melt and the heat that goes there, but it’s very small compared with the oceans. And the oceans appear to explain why the observed temperatures have fallen below the model median expectations.

In particular, if you look at models where changes in the Pacific match what actually happened and add in global warming, then you match the observed changes pretty well. Notably, the trade winds over the Pacific reached record-breaking strength recently, which helps stir heat into the oceans. If this is a natural cycle rather than a response to global warming (and I’d guess that it is, but I haven’t seen attribution work on this yet), then when it reverses we expect temperatures to shoot up. In that analysis there’s also a limit to how long the trades can slow down warming: they need to keep getting stronger to keep offsetting the extra greenhouse warming.

We can’t rule out that the models just predict too much global warming, but there doesn’t appear to be any solid evidence for that yet. Some relevant papers:

Risbey et al.:

http://www.nature.com/nclimate/journal/v4/n9/full/nclimate2310.html

Kosaka & Xie:

http://www.nature.com/nature/journal/v501/n7467/full/nature12534.html

England et al.:

http://www.nature.com/nclimate/journal/v4/n3/abs/nclimate2106.html

Meehl et al.:

http://www.nature.com/nclimate/journal/v1/n7/abs/nclimate1229.html

Foster & Rahmstorf:

http://iopscience.iop.org/1748-9326/6/4/044022

jgnfld, what time periods are you talking about? The hiatus has been going on (or not going on) for about 15 to 20 years, depending on the data set. The earth has been warming since the last ice age. Warming caused by CO2 emissions has been going on for maybe 50 to 75 years, right?

Martin Cohen:

Sigh. An all-too-predictable reaction from an AGW-denier and Tea Party founder.

but what can scientists do? Deniers gonna deny.

“The ‘selling point’ of the paper is that with the updates to data and corrections, the trend over the recent decade or so is now significantly positive.”

Not really. According to the paper, it’s only significant with a p-value of 0.10. That’s another way of saying not statistically significant.

Now, does that mean there is no such thing as global warming and the hiatus is real? No. It means the dataset is too noisy to find trends over 17 years. But please don’t misrepresent to the general public (most of whom have never taken a statistics class) what the data can and cannot tell you. It’s only going to backfire and further erode the public’s trust in science.

Sorry, Buck, but all three of the assertions in your reply to jgnfld are flat out wrong.

First, the hiatus is nothing more than spurious pattern recognition based on too short a time span. Fortunately there is a branch of mathematics that is specifically designed to test the validity of perceived patterns. It’s called statistics. I suggest you read the two posts by Tamino, a statistician with expertise in noisy time series analysis, which I pointed you to.

Second, the warming following the end of the last glaciation peaked 8000 to 6000 years ago at what is known as the Holocene Climate Maximum (or Optimum in some sources). The long term trend in earth’s mean temperature has been downward ever since, with natural excursions above and below the trend, of course. That is until recently.

Which brings us to third, warming caused by fossil fuel CO2 emissions began around 265 years ago with the start of the coal-fueled industrial revolution in England. Although small at first it continuously picked up speed since then such that roughly half of all our fossil carbon CO2 has been emitted to the atmosphere since 1950.

As they say, you are entitled to your own opinion, but you are not entitled to make up your own facts.

#62

When the May figure for GISTemp comes out (presumably) next week, the “hiatus” will most likely be no more. GISTemp will most likely show “statistically significant” global warming since October 1998.

Willie Soon is presenting his latest travesty of the temperature record online, live from the Heartland Institute’s latest powwow in DC

http://www.yaleclimateconnections.org/2015/06/new-noaa-reports-shows-no-recent-warming-slowdown-or-pause/

@61 Mark R,

Thank you for your answer. Speaking as a tourist, it seems that what is best modelled is “extra heat in” (i.e. forcings), with greenhouse gases being one of the forcings. To me the natural unit is integrated energy, i.e. compare the integrated forcing with the total climate-relevant heat change: C_p·ΔT (sea water) + H_melting × (mass of ice melted) + C_p·ΔT (air) + C_p·ΔT (ground) + …

Because you plot T(air) vs. time, you are in fact doing the opposite of what you are sometimes accused of: the plots may understate the size of the effect.
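The bookkeeping described in this comment can be sketched as follows. This is a minimal Python version, assuming rounded textbook constants (latent heat of fusion of ice about 3.34×10^5 J/kg, specific heat of liquid water about 4186 J/(kg K)); the function names are hypothetical, and a real budget would need ocean heat content by depth, seawater's somewhat lower heat capacity, and so on. It shows why melting matters per kilogram: melting 1 kg of ice absorbs about as much energy as warming 1 kg of water by roughly 80 K, with no temperature change at all.

```python
# Rounded textbook constants (approximate).
LATENT_HEAT_FUSION_ICE = 3.34e5  # J/kg needed to melt ice at 0 degC
CP_WATER = 4186.0                # J/(kg K), fresh liquid water (~3990 for seawater)

def sensible_heat(mass_kg, cp, delta_t):
    """Energy (J) to warm mass_kg of a material with specific heat cp by delta_t."""
    return mass_kg * cp * delta_t

def latent_heat_melt(mass_ice_kg):
    """Energy (J) absorbed by melting ice, with no change in temperature."""
    return mass_ice_kg * LATENT_HEAT_FUSION_ICE

def total_heat(reservoirs, ice_melted_kg):
    """Sensible heat summed over (mass_kg, cp, delta_t) reservoirs
    (ocean, air, ground, ...) plus the latent heat of melted ice."""
    sensible = sum(sensible_heat(m, cp, dt) for m, cp, dt in reservoirs)
    return sensible + latent_heat_melt(ice_melted_kg)

# Melting 1 kg of ice compared with warming 1 kg of water by 1 K.
ratio = latent_heat_melt(1.0) / sensible_heat(1.0, CP_WATER, 1.0)
print(f"melting 1 kg of ice ~ warming 1 kg of water by {ratio:.0f} K")
```

Whether this term matters globally is a question of how much mass melts relative to the ocean's mass, which is what the reply below addresses.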

#67–One of the more amusing iterations, IMO.

If the SST record were not in need of improvement, the Navy and the Admiralty would not have kicked their old sample-collection buckets overboard a generation ago.

Doesn’t the observation that “The ‘hiatus’ is so fragile that even those small changes make it disappear” work in reverse, such that “The ‘warming’ is so fragile that even small changes make it appear”? My understanding of this type of statistical sampling is that we try to make a sample represent a true population, and we estimate all the errors in the standard deviation, including random and systematic errors. My concern would be whether the error bars shifted in the updated dataset, and why. If the old dataset is outside the error range, it seems there is an issue with estimating error, and if it’s not, then what changed? The only acceptable, non-controversial shift would be a change in mean that is within the old error bars, with new error bars that are smaller and also within the older range. Any shift in variance outside the original range raises more fundamental concerns about how error and bias are estimated. Just as with pre-election polls that adjust their raw sample data to account for known “likely voter” biases in a number of areas, we expect the final result to fall within the boundaries of the poll. Results outside the boundaries (or changes in polling methodology that shift a poll outside previous boundaries) indicate that the sampling and adjustments have larger errors than previously thought. If the old data said something like 10 +/- 2C and the new data say 10.1 +/- 1.5C, that is within the old error bars and should represent an improvement. If it says 10.1 +/- 2.5C, it’s outside the old range and indicates that the previous range estimate was incorrect. Shouldn’t the real focus be on the range, not the mean?

#72 Tim Beatty,

Here’s what I don’t understand. What difference does it make?

I’m serious; can you tell me what the question is that you are trying to answer? If the mean is off a bit, or the error range is off a bit, what would be your new understanding of the effects of CO2 on radiant energy transfer in Earth’s climate system?

Would everything be OK with continuing to burn fossil fuels until they are all gone? Would we say, well, don’t bother to ramp up renewables for a few more decades, because…?

This really has gotten silly, and on both ‘sides’.

by Jay Lawrimore, Chief, Data Set Branch, Center for Weather and Climate, NOAA’s National Centers for Environmental Information at NOAA.

“With the improvements to the land and ocean data sets and the addition of two more years of data, NCEI scientists found that there has been no hiatus in the global rate of warming. This finding is consistent with the expected effect of increasing greenhouse gas concentrations and with other observed evidence of a changing climate such as reductions in Arctic sea ice extent, melting permafrost, rising sea levels, and increases in heavy downpours and heat waves.

“Underestimating the rate of warming

“This work highlights the importance of data stewardship and continuously striving to improve the accuracy and consistency of temperature data sets.

“While these improvements in the land and ocean temperature record reveal a rate of warming greater than previously documented, we also found that our computed trends likely continue to underestimate the true rate of warming.

This is due at least in part to a lack of surface temperature observations in large parts of the Arctic where warming is occurring most rapidly.

“Preliminary calculations of global temperature trends using estimates of temperatures in the Arctic indicate greater rates of warming than the 1998-2014 trend of 0.19F per decade reported in this study. Future data set development efforts will include a focus on further improvements to the temperature record in this area of the world.”

https://theconversation.com/improved-data-set-shows-no-global-warming-hiatus-42807

Whether one says, “the hiatus is fragile,” or one says, “the warming is fragile,” the real lesson here is that over short periods, internal variability can obscure the long-term trend. Answer: don’t look at short periods. On timescales of 30 years or longer, the climate is screaming, “We’re warming!”

It is not that short-term variation isn’t interesting. It is. That is the real reason why climate scientists have been looking at the so-called hiatus. It is not because they have deep doubts about forcing due to anthropogenic CO2.

#72, Tim–https://www.realclimate.org/index.php/archives/2015/06/noaa-temperature-record-updates-and-the-hiatus/comment-page-2/?wpmp_tp=1#sthash.TS1QGFLW.dpuf

Short answer: No.

Long answer: No, because the ‘hiatus’ is largely the product of a cherry-picked interval that unduly restricts sample size. The warming signal, by contrast, emerges from a much larger data set and accordingly offers much greater statistical significance.

There is no ‘symmetry’ between the two.

[Response: Indeed. The attempt to prove the existence of global warming with only 15 years of data would be at least fragile. -stefan]

#72 CMIP5 models are touted as subdecadal in accuracy, so a multi-decadal sample set should be sufficient. But my question can be shortened to: “What are the estimated errors on measurements, and how much did they change with the new release?” We have error bars associated with the models, and we seem to think that having a measurement mean within the model error bars is acceptable. I don’t believe that is the case, and I don’t think it’s statistically viable to change the means of the measurements without acknowledging a change in the error window of the measurements. Measurements are a sampling, usually on a grid, and each grid cell is going to have sampling error. It is not enough merely to republish measured means that are within the error window of the model; the error in the measurements also needs to be accounted for. This is fairly obvious with statements like “sparsely covered arctic grids.” Including arctic measurements increases the measurement error spread, and it is the measurement error window that is important here, not the mean. If we are now weighting the arctic more heavily, the measurement error is also now a larger component. Where is that error represented in the measured data set, and how does it overlap with the model error? Weighting data to increase the representation of areas that have more error is generally a giant red flag. How do you know whether you are amplifying random error or systematic bias by increasing the weights of sparsely covered grid cells?

#8 How does Karl et al. have a smaller error bar than HadCRUT4 when they overweight a higher-error area such as the arctic? From that it appears they regress only over time and do not account for individual grid-cell error. Do they treat grid error as a time-invariant bias that therefore regresses away as the interval increases? I would think that would be incorrect. It seems to me that the measurement error on the left side of the graph (the long interval) should have a variance that reflects the residual error in each grid cell. Hence the Cowtan and Way error bars, for example, should be larger than HadCRUT4’s, since they weighted a region with larger error more heavily. These all seem to have the same regressed error, which indicates to me it is only time-based error, not time-and-location error. This would be easy to see if the measurement error of the tropics were compared to the measurement error of the arctic. Over equivalent time periods, the arctic should have higher error.
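The arithmetic behind this question can be sketched in Python. Assuming independent errors in each grid cell (a simplification; real products such as HadCRUT4 also carry coverage and bias uncertainties, and infilled estimates have correlated errors), the standard error of a weighted mean is sqrt(Σ w_i² σ_i²) / Σ w_i. The regional values, weights and error sizes below are hypothetical. Note that this captures only the sampling error of the cells that are used; leaving a fast-warming region out altogether trades that error for a coverage bias, which is a separate term.

```python
import math

def weighted_mean_and_se(values, weights, sigmas):
    """Weighted mean of grid-cell anomalies and its standard error,
    assuming each cell's error is independent with standard deviation sigma."""
    w_sum = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / w_sum
    variance = sum((w * s) ** 2 for w, s in zip(weights, sigmas)) / w_sum ** 2
    return mean, math.sqrt(variance)

# Hypothetical: one well-sampled region and one sparsely sampled region.
sigmas = [0.05, 0.30]  # per-region error, degC (illustrative)
values = [0.40, 1.20]  # per-region anomaly, degC (illustrative)
for weights in ([0.9, 0.1], [0.7, 0.3]):
    mean, se = weighted_mean_and_se(values, weights, sigmas)
    print(f"weights {weights}: mean {mean:.3f}, standard error {se:.3f}")
```

Giving the noisier region more weight does widen the sampling error here, but it also moves the mean, which is why the trade-off is usually framed as sampling error versus coverage bias rather than sampling error alone.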

#73 The difference isn’t in the radiative effects of CO2 or other GHGs. Those are well understood. The fundamental question is: if CO2 rises rather continuously and the energy budget is unbalanced, where is the heat? Many explanations have been put forth, including deep ocean heating, ozone chemistry, volcanoes reducing incident energy, trade winds and, of course, Cowtan and Way along with this latest data set, which shows the energy imbalance is still tracking CO2. It matters because if there are other features that affect the radiative balance, we need to understand and model them accurately. The significance is substantial: if it’s really deep ocean heating, sea level rise is more of a problem. If it’s a dataset issue with the rate of warming, it is not so disastrous, and the consequences and solutions can be drastically different. It’s very important to understand the sources and sinks of energy if we are to place it in proper perspective, because a 300-year problem is not the same as a 30-year problem.

+KR “And if as in this situation offsets between co-located instrument systems are shown to be consistent (0.12°C), a global correction is the only reasonable approach.”

How was it decided that the ship-based measurements are more accurate than the buoy measurements?

> Martin Smith

you’re asking the question answered above.

Martin Smith: “How was it decided that the ship-based measurements are more accurate than the buoy measurements?”

You are making the incorrect assumption that choosing one implies that it is “more accurate”.

The ship-based measurements are more accurate at measuring the temperature where the ship-based estimates sample the temperature. The buoy measurements are more accurate at measuring the temperature where the buoys sample the temperature.

The two are different. You can’t mix them arbitrarily and expect to look at trends. You need to decide how different they are, and shift them to match where you have overlap. It does not matter whether you shift one up or the other down. The choice does not affect the resulting trend.
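A minimal sketch of this point, using a hypothetical record in which ships measure the first ten years and buoys the next ten, with a constant 0.12 °C ship-minus-buoy offset assumed to be known from an overlap period. Shifting the buoys up or the ships down changes only the absolute level of the merged series, not its trend, whereas splicing without any adjustment leaves a spurious step.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den

YEARS = list(range(20))
TRUTH = [0.01 * y for y in YEARS]       # hypothetical true warming, degC/yr
OFFSET = 0.12                           # ship minus buoy, degC (from overlap)
ship = [t + OFFSET for t in TRUTH[:10]] # ships read warm in the early years
buoy = TRUTH[10:]                       # buoys in the later years

buoys_up = ship + [b + OFFSET for b in buoy]
ships_down = [s - OFFSET for s in ship] + buoy
unadjusted = ship + buoy

for name, series in [("buoys shifted up", buoys_up),
                     ("ships shifted down", ships_down),
                     ("no adjustment", unadjusted)]:
    print(f"{name:20s} trend {ols_slope(YEARS, series):.4f} degC/yr")
```

The first two lines print the same trend; only the unadjusted splice differs, because the ship-to-buoy transition shows up as an artificial cooling step.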

Others: please note that there is a user mwsmith12 over at Skeptical Science asking much the same questions. I’m not sure if he’s really interested in understanding the answer.

“The attempt to prove the existence of global warming with only 15 years of data would be at least fragile.”

– See more at: https://www.realclimate.org/index.php/archives/2015/06/noaa-temperature-record-updates-and-the-hiatus/comment-page-2/#comment-632617

Yes, Guy Callendar had data from 1880-1934 when he wrote “The Artificial Production of Carbon Dioxide and its Effect on Temperature”, published in 1938:

http://onlinelibrary.wiley.com/doi/10.1002/qj.49706427503/epdf

Scholar James Fleming writes flatly that “Callendar established the CO2 theory of climate change in its recognizably modern form.”

Callendar’s story:

http://hubpages.com/hub/Global-Warming-Science-And-The-Wars

> “If the idea is that the slowdown is over too short a period, a Gambler’s Fallacy type of thing, then the requisite period should be defined up front. Twenty years? 25? 30? Whatever it is, it should be defined up front based on valid constraints so that people can stop arguing in circles over the duration issue.”

The time period is an attribute of the dataset. It depends on the level of noise in the data. So to define the number up front would be to misunderstand how statistical analysis works. We don’t pick a number, the data has the property based on the amount of noise in it. It is different for different datasets, but for Global Mean Surface Temperature (GMST) it turns out to be about a 30-year period centered on the point at which you are trying to determine what the rate of change in GMST is.
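How the required period follows from the noise can be sketched under one simple assumption: independent (white) year-to-year noise. For n equally spaced annual values the standard error of an OLS trend is σ·sqrt(12 / (n(n² − 1))), so it shrinks roughly as n^(-3/2). The trend and noise values below are hypothetical. With these inputs the white-noise threshold comes out well short of 30 years; real GMST variability is autocorrelated, which inflates the uncertainty, so this sketch understates the period actually needed and is not a substitute for the figure quoted above.

```python
import math

def trend_se(n_years, noise_sd):
    """Standard error (degC per year) of an OLS trend fitted to n_years
    equally spaced annual values with independent noise of SD noise_sd."""
    # For t = 1..n, sum((t - t_mean)**2) = n * (n**2 - 1) / 12.
    return noise_sd * math.sqrt(12.0 / (n_years * (n_years ** 2 - 1)))

def min_years_to_detect(trend, noise_sd, max_years=200):
    """Shortest record whose trend exceeds twice its white-noise standard error."""
    for n in range(3, max_years + 1):
        if trend > 2 * trend_se(n, noise_sd):
            return n
    return None  # not detectable within max_years

# Hypothetical inputs: 0.017 degC/yr trend, 0.1 degC white noise.
for n in (10, 15, 20, 30):
    print(f"{n:2d} years: 2-sigma = {2 * trend_se(n, 0.1):.4f} degC/yr")
print("minimum record length:", min_years_to_detect(0.017, 0.1))
```

The point the sketch makes is the commenter's: the required length is an output of the noise level and the trend size, not a number chosen in advance.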

Re. “How was it decided that the ship-based measurements are more accurate than the buoy measurements?”

Probably the Grand Weather Hoax Wizard (my guess is Jim Hansen) decided this in his Fortress of Climatude far north in the Arctic and sent out the word to all of his lockstep minions that this was how it was going to work.