The New York Times, 12 December 2027: After 12 years of debate and negotiation, kicked off in Paris in 2015, world leaders have finally agreed to ditch the goal of limiting global warming to below 2 °C. Instead, they have agreed to the new goal of limiting global ocean heat content to 10²⁴ Joules. The decision was widely welcomed by the science and policy communities as a great step forward. “In the past, the 2 °C goal has allowed some governments to pretend that they are taking serious action to mitigate global warming, when in reality they have achieved almost nothing. I’m sure that this can’t happen again with the new 10²⁴ Joules goal”, said David Victor, a professor of international relations who originally proposed this change back in 2014. And an unnamed senior EU negotiator commented: “Perhaps I shouldn’t say this, but some heads of state had trouble understanding the implications of the 2 °C target; sometimes they even accidentally talked of limiting global warming to 2%. I’m glad that we now have those 10²⁴ Joules which are much easier to grasp for policy makers and the public.”

This fictitious newspaper item is of course absurd and will never become reality, because ocean heat content is unsuited as a climate policy target. Here are three main reasons why.

1. Ocean heat content is extremely unresponsive to policy.

While the increase in global temperature could indeed be stopped within decades by reducing emissions, ocean heat content will continue to increase for at least a thousand years after we have reached zero emissions. Ocean heat content is one of the most inert components of the climate system, second only to the huge ice sheets on Greenland and Antarctica (hopefully, at least, unless the latter turn out to be more unstable than we think).
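A toy sketch of this inertia, assuming a single well-mixed deep-ocean box coupled to a surface whose warming has been stopped and then held fixed. The deep heat capacity (100 W yr m⁻² K⁻¹) and surface-to-deep exchange coefficient (0.7 W m⁻² K⁻¹) are illustrative round numbers assumed here, not values from the post; a real ocean with many slowly ventilated layers is more complicated than one box.

```python
def deep_ocean_fraction(years, c_deep=100.0, gamma=0.7):
    """Fraction of its eventual heat gain that a single deep-ocean box has
    reached after `years` of fixed surface warming.

    c_deep: deep-box heat capacity in W yr m^-2 K^-1 (assumed, illustrative)
    gamma:  surface-to-deep exchange coefficient in W m^-2 K^-1 (assumed)
    Uses explicit Euler steps of one year. The result is a ratio, so it does
    not depend on how large the (fixed) surface warming is.
    """
    t_surface = 1.0  # fixed surface warming in K (arbitrary, cancels out)
    t_deep = 0.0     # deep-box warming in K
    for _ in range(years):
        flux = gamma * (t_surface - t_deep)  # W m^-2 flowing into the deep box
        t_deep += flux * 1.0 / c_deep        # one-year step: dT = flux * dt / C
    return t_deep / t_surface


for horizon in (10, 100, 300, 1000):
    print(f"after {horizon:4d} years: {deep_ocean_fraction(horizon):.0%} of eventual deep heat gain")
```

In this toy setup the deep box is only about halfway to its final heat content after a century, although the surface temperature has not changed at all during that time.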

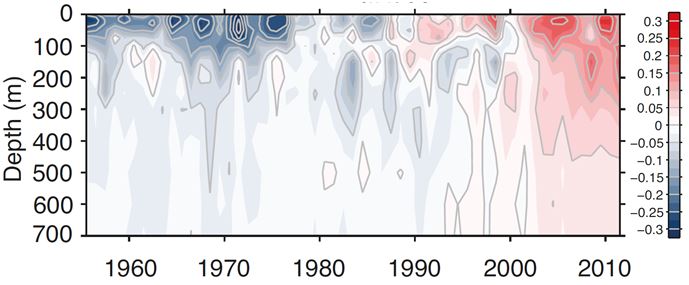

Figure 1. Ocean heat content in the surface layer (top panel, various data sets) and the mid-depth (700-2000 m) and deep ocean (bottom panel), from the IPCC AR5 (Fig. 3.2 – see caption there for details). Note that uncertainties are larger than for global mean temperature, the data don’t go as far back (1850 for global mean temperature) and data from the deep ocean are particularly sparse, so that only a trend line is shown.

2. Ocean heat content has no direct relation to any impacts.

Ocean heat content has increased by about 2.5 × 10²³ Joules since 1970 (IPCC AR5). What would be the impact of that? The answer is: it depends. If this heat were evenly distributed over the entire global ocean, water temperatures would have warmed on average by less than 0.05 °C (global ocean mass 1.4 × 10²¹ kg, heat capacity 4 J/gK). This tiny warming would have essentially zero impact. The only reason why ocean heat uptake does have an impact is the fact that it is highly concentrated at the surface, where the warming is therefore noticeable (see Fig. 1). Thus in terms of impacts the problem is surface warming – which is described much better by actually measuring surface temperatures rather than total ocean heat content. Surface warming has no simple relation to total heat uptake because that link is affected by ocean circulation and mixing changes. (By the way, neither has sea-level rise due to thermal expansion, because the thermal expansion coefficient is several times larger for warm surface waters than for the cold deep waters – again it is warming in the surface layers that counts, while the total ocean heat content tells us little about the amount of sea-level rise.)
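The averaging step can be checked in a few lines of Python, using the ocean mass and heat capacity quoted in the paragraph (4 J/gK written as 4000 J/(kg K)). Like the text, it assumes the heat is spread perfectly evenly over the whole ocean.

```python
OCEAN_MASS_KG = 1.4e21              # global ocean mass, as quoted above
HEAT_CAPACITY_J_PER_KG_K = 4000.0   # ~4 J/(g K), as quoted above


def mean_ocean_warming_k(heat_j, mass_kg=OCEAN_MASS_KG,
                         heat_capacity=HEAT_CAPACITY_J_PER_KG_K):
    """Warming if `heat_j` joules were spread evenly over the whole ocean."""
    return heat_j / (mass_kg * heat_capacity)


print(f"{mean_ocean_warming_k(2.5e23):.3f} K")  # about 0.045 K, i.e. below 0.05 °C
```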

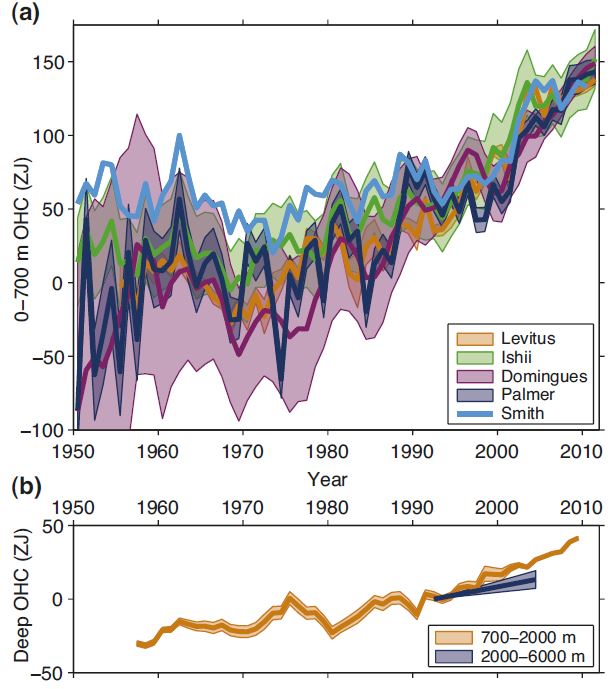

Figure 2. Temperature anomaly in °C as a function of ocean depth and time since 1955. (Source: Fig. 3.1 of the IPCC AR5.)

3. Ocean heat content is difficult to measure.

The reason is that you have to measure tiny temperature changes over a huge volume, rather than much larger changes just over a surface. Ocean heat content estimates have gone through a number of revisions because of instrument calibration issues and the like. If we were systematically off by just 0.05 °C throughout the oceans due to some instrument drift, the error would be larger than the entire ocean heat uptake since 1970. If the surface measurements were off by 0.05 °C, this would be a negligible correction compared to the 0.7 °C surface warming observed since 1950.
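The comparison can be made explicit as relative errors. A minimal sketch, reusing the numbers given in the text (ocean mass 1.4 × 10²¹ kg, 4000 J/(kg K), 2.5 × 10²³ J of uptake since 1970, 0.7 °C of surface warming since 1950) and assuming the bias is uniform over the whole ocean volume:

```python
OCEAN_MASS_KG = 1.4e21
HEAT_CAPACITY_J_PER_KG_K = 4000.0
OHC_GAIN_SINCE_1970_J = 2.5e23
SURFACE_WARMING_SINCE_1950_K = 0.7


def relative_errors(bias_k):
    """Return (OHC error / OHC signal, surface error / surface signal)
    for a uniform instrument bias of `bias_k` kelvin."""
    ohc_error_j = bias_k * OCEAN_MASS_KG * HEAT_CAPACITY_J_PER_KG_K
    return (ohc_error_j / OHC_GAIN_SINCE_1970_J,
            bias_k / SURFACE_WARMING_SINCE_1950_K)


ohc_rel, surface_rel = relative_errors(0.05)
print(f"OHC: {ohc_rel:.0%} of the signal, surface: {surface_rel:.0%} of the signal")
```

The same 0.05 °C bias exceeds the whole heat content signal but is well under a tenth of the surface signal.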

Two basic ocean physics facts

Let us compare the ocean to a pot of water on the stove in order to understand (i) that heat content is an integral quantity and (ii) the response time of the ocean.

Imagine you’ve recently turned on the stove. The heat content of the water in the pot will increase over time with a constant setting of the stove (note that zero emissions correspond to a constant setting – emitting more greenhouse gases turns up the heat). How much heat is in the water thus depends mainly on the past history (how long the stove has been on) rather than its current setting (i.e. on whether you’ve recently turned the element up a bit). That is why it is an integral quantity – it integrates the heating rate over time. You can tell from the units: heat content is measured in Joules, heating rate in Watts which is Joules per second, i.e. per unit of time.
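In code, “integrating the heating rate” is just a running sum of watts times seconds. A minimal sketch (the function name and the default one-year step are illustrative choices, not from the post) showing that the heat content keeps rising at a constant setting, and still rises after the setting is turned down:

```python
from itertools import accumulate

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def heat_content_j(heating_rates_w, dt_s=SECONDS_PER_YEAR):
    """Cumulative heat content (J) after each step, given the heating
    rate (W) during each step: W x s = J, summed over time."""
    return list(accumulate(rate * dt_s for rate in heating_rates_w))


# Constant setting, then turned down by half: the content never decreases.
print(heat_content_j([2.0, 2.0, 2.0, 1.0, 1.0], dt_s=1.0))  # [2.0, 4.0, 6.0, 7.0, 8.0]
```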

The water in the pot heats up much faster than the global ocean. The water in the pot may typically be ~10 cm deep and heated at a rate of 1500 watts or so from below. But the ocean is on average 3700 meters deep (and thus has a huge heat capacity) and is heated at a low power input of the order of ~1 watt per square meter of surface area. Also, it is heated from above, and it is not well mixed but highly stratified. Warm water floats on top, which hinders the penetration of heat into the ocean. Water in parts of the deep ocean has been there for more than a millennium since last exposed to the surface. Therefore it will take the ocean thousands of years to fully catch up with the surface warming we have already caused. That is why limiting ocean heat content to 10²⁴ Joules is not possible even if we stop global warming right now – even though this amount is four times the amount of heating already caused since 1970. Ocean heat content simply does not respond on policy-relevant time scales.
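A back-of-envelope comparison of the two response times, assuming each body of water were fully mixed: the time to warm by 1 K is depth × density × heat capacity ÷ heating per unit area. The pot's 20 cm base diameter and the seawater density of 1025 kg/m³ are assumptions added for illustration, and the result is only an order-of-magnitude comparison.

```python
import math

SECONDS_PER_YEAR = 3.156e7
HEAT_CAPACITY_J_PER_KG_K = 4000.0


def seconds_to_warm_1k(depth_m, density_kg_m3, heating_w_per_m2):
    """Time for a fully mixed water column to warm by 1 K."""
    return depth_m * density_kg_m3 * HEAT_CAPACITY_J_PER_KG_K / heating_w_per_m2


pot_area_m2 = math.pi * 0.10 ** 2                    # assumed 20 cm diameter pot
pot_s = seconds_to_warm_1k(0.10, 1000.0, 1500.0 / pot_area_m2)
ocean_s = seconds_to_warm_1k(3700.0, 1025.0, 1.0)    # ~1 W per m^2, from the text

print(f"pot: ~{pot_s:.0f} s per K; ocean: ~{ocean_s / SECONDS_PER_YEAR:.0f} years per K")
```

That is seconds for the pot versus centuries for the ocean, a difference of roughly a factor of a billion.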

If you turn your setting on the stove higher or lower, what you immediately change is the rate of heating – the wattage. So would limiting the rate of ocean heat uptake be a suitable policy target? At least it would be responsive to policy at a relevant time scale, like surface temperature. But here reasons two and three come into play. The rate of heat uptake has even less connection to any impacts than the heat content itself. And the time series of this rate is extremely noisy.
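Why a rate is noisier than the quantity it is derived from can be shown with a simple signal-to-noise estimate. Assume, as an idealization rather than a property of the real data set, that each annual heat content value carries an independent error σ on top of a steady trend b per year. The change over n years then has signal b·n and noise σ√2:

```python
import math


def change_snr(trend_per_year, sigma, years):
    """Signal-to-noise ratio of the difference between two annual values
    `years` apart, each with independent random error `sigma`."""
    return trend_per_year * years / (sigma * math.sqrt(2))


# Hypothetical units: trend of 1 per year, error of 2 per annual value.
one_year = change_snr(1.0, 2.0, 1)      # the annual uptake "rate"
forty_years = change_snr(1.0, 2.0, 40)  # the accumulated change
print(f"1-year SNR: {one_year:.2f}, 40-year SNR: {forty_years:.2f}")
```

Under these assumptions the one-year difference has a fortieth of the signal-to-noise ratio of the forty-year change. Real errors are partly correlated in time, so this is only indicative.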

Charles Saxon in the New Yorker on the impacts of deep ocean heat content on society.

So why do Victor and Kennel propose to use deep ocean heat content as a policy target?

In a recent interview, David Victor has explained why he wants to “ditch the 2 °C warming goal”, as the title of his Nature commentary with Charles Kennel reads:

There are some other indicators that look much more promising. One of them is ocean heat content.

The reason that Victor and Kennel gave for preferring ocean heat content over a global mean surface temperature target is this:

Because energy stored in the deep oceans will be released over decades or centuries, ocean heat content is a good proxy for the long-term risk to future generations and planetary-scale ecology.

I criticized this because the deep ocean will not release any heat in the next thousand years but rather continue to absorb heat. In his response at Dot Earth, Victor replied that I had “plucked this sentence out of context”. However, in their article there simply is no context that would explain how “energy stored in the deep oceans will be released over decades or centuries” or how this would make it “a good proxy for the long-term risk”. This statement is plainly wrong, and Victor would have been more credible had he simply admitted that. Victor further argues there that “the data suggest [OHC] is a more responsive measure” than surface temperature, but what he means by that, given the huge thermal inertia of the oceans, beats me.

My impression is this. Victor and Kennel appear to have been taken in by the rather overblown debate on the so-called ‘hiatus’, not realizing that this is just about minor short-term variability in surface temperature and has no bearing on the 2 °C limit whatsoever. In this context they may have read the argument that ocean heat content continues to increase despite the ‘hiatus’ – which is a valid argument to show that there still is a radiative disequilibrium and the planet is still soaking up heat. But it does not make ocean heat content a good policy target. The lack of response to short-term wiggles like the so-called ‘hiatus’ points at the fact that ocean heat content is very inert, which is also what makes it unresponsive to climate policy and hence a bad policy target. So my impression is that they have not thought this through.

I agree with the criteria that a metric for a policy goal needs to be (a) related to impacts we care about (otherwise why would you want to limit it) and (b) something that can be influenced by policy. A more technical third requirement is that it must be something we can measure well enough, with well-established data sets going far enough back in time to understand baseline variability.

But it seems clear to me that global mean surface temperature is the one metric that best meets these requirements. It is the one climate variable most clearly linked to radiative forcing, through the planetary energy budget equation. The nearly linear relation of cumulative emissions and global temperature allows one to read the remaining CO2 emissions budget off a graph (Fig. SPM.10 of the AR5 Summary for Policy Makers) once the global temperature target is agreed. Most impacts scale with global temperature, and how a whole variety of climate risks – from declining harvests to the risk of crossing the threshold for irreversible Greenland ice sheet loss – depends on temperature has been thoroughly investigated over the past decades. And finally we have four data sets of global temperature in close agreement (up to ~0.1 °C), natural variability on the relevant time scales is small (also ~0.1 °C) compared to the 2 °C limit, and models reproduce the global temperature evolution over the past 150 years quite well when driven by the known forcings.
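Reading the budget off such a graph amounts to inverting a straight line. A minimal sketch, assuming warming is exactly proportional to cumulative CO2 emissions, with a transient response per 1000 PgC (TCRE) that you supply. The numbers in the usage line are hypothetical placeholders, not AR5 values, and non-CO2 forcing is ignored.

```python
def remaining_budget_pgc(target_warming_c, current_warming_c, tcre_c_per_1000pgc):
    """Remaining cumulative CO2 emissions (PgC) before `target_warming_c`
    is reached, if warming rises linearly with cumulative emissions."""
    if tcre_c_per_1000pgc <= 0:
        raise ValueError("TCRE must be positive")
    headroom_c = target_warming_c - current_warming_c
    return max(headroom_c, 0.0) / tcre_c_per_1000pgc * 1000.0


# Hypothetical inputs: 2 °C target, 0.9 °C already realized, TCRE 1.6 °C per 1000 PgC.
print(f"{remaining_budget_pgc(2.0, 0.9, 1.6):.0f} PgC")
```

The clamp at zero says the budget is exhausted once the target is passed; a larger TCRE shrinks the budget proportionally.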

I find the arguments made by Victor and Kennel highly self-contradictory. They find global mean temperature too variable to use as a policy target (that is the thrust of their “hiatus” argument) – but they propose much noisier indicators like an index of extreme events. They think global surface temperature is affected by “all kinds of factors” – and propose ocean heat content, which is determined by the history of surface temperature. Or they propose the surface area in which conditions stray by three standard deviations from the local and seasonal mean temperature, which is a straight function of global surface temperature with some noise added. They argue surface temperature is something which can’t directly be influenced by policy – and propose deep ocean heat content, where this is a hundred times worse. They say limiting warming to 2 °C is “effectively unachievable” – and then say “it’s not going to be enough to stop warming at 2 degrees”. I simply cannot see a logically coherent argument in all this.

My long-time friend and colleague Martin Visbeck launching an Argo float in the Pacific.

The bottom line

Should we monitor heat storage in the global ocean? By all means, yes! The observational oceanography community has long been making heroic efforts in this difficult area, not least by getting the Argo system off the ground (or rather into the water). That is no small achievement, which has revolutionized observational physical oceanography for the upper 2,000 meters of the world ocean. Currently Deep Argo is under development to cover depths up to 6,000 meters (see e.g. the News & Views piece by Johnson and Lyman just published in Nature). These efforts need and deserve secure long-term funding.

Is ocean heat storage a good target for climate policy, to replace the 2 °C limit? Certainly not! I’ve outlined the reasons above.

[p.s. I am grateful that David Victor has apologized to me for likening the fact that I introduced him as a “political scientist” in my previous post (which is in fact how he is described in the intro to his interview) to “methods of the far right”. This matter is now settled and forgotten, with no hard feelings.]

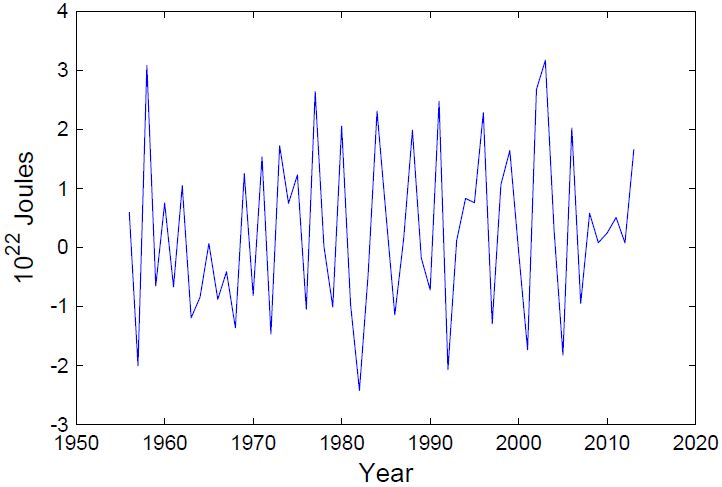

Update 21 October: I thank David Victor and Charles Kennel for responding to this article below. Answering this again would turn the discussion in circles – I’ve made my points and I think our readers now have a good basis to form their own opinion. Just one piece of additional data that might be informative, since Victor and Kennel below suggest using the rate of heat content change for a well-measured portion of the ocean, rather than absolute heat content. Below I plotted the annual heat uptake of the upper 700 meters. (I already made this plot last week; it is the basis of my saying above that this measure is very noisy – the data can be downloaded from NOAA.)
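For readers who want to reproduce this kind of plot, the annual uptake is the year-to-year difference of annual-mean heat content. A minimal sketch that also expresses it per square meter of Earth's surface; the area of 5.1 × 10¹⁴ m² is an added assumption, the NOAA file format is not assumed (the input is just a list of joules), and the example numbers are made up:

```python
EARTH_SURFACE_M2 = 5.1e14
SECONDS_PER_YEAR = 3.156e7


def annual_uptake_w_per_m2(annual_ohc_j):
    """Year-to-year heat content change, converted to W per m^2 of
    Earth's surface. Returns one value fewer than the input."""
    return [(later - earlier) / (EARTH_SURFACE_M2 * SECONDS_PER_YEAR)
            for earlier, later in zip(annual_ohc_j, annual_ohc_j[1:])]


made_up_series_j = [0.0, 1.0e22, 0.8e22, 2.1e22]  # illustrative numbers, not data
print([round(x, 2) for x in annual_uptake_w_per_m2(made_up_series_j)])
```

Differencing is part of what makes such a series noisy: an error in any single year's value enters two neighbouring differences, with opposite signs.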

I fully agree with the author of this article. Not only is the idea of using the ocean temperature as a target flawed from the point of view of a “hiatus” that never actually existed, but it smacks of another “long view” technique the fossil fuel industry can use to claim they can continue to burn massive amounts of fossil fuels without “undue” deleterious effects on our climate and biosphere.

I have two questions for climate scientists. The first one is about ocean temperatures with particular regard to heat exchange at the surface. It is a fact that the surface of two thirds of the planet is ocean and that part (on the average) is getting warmer.

Have the models incorporated the effect of salinity on ocean surface vapor pressure changes? It is a fact that fresh water has a higher specific heat than salt water. However, salt water, because of the molecular adhesive forces of the sodium and chloride ions, requires more energy, despite having a lower specific heat, to release (evaporate) H2O and heat into the atmosphere. Then there is the effect of more fresh water coming off of glaciers reducing salinity in some ocean surface areas which, in theory, would increase the heat storage capacity in the surface layers even while the evaporation rate is facilitated.

I ask all this because it seems logical that, if there is empirical evidence that the surface layers of the ocean are warming at a faster rate than the atmosphere, a punctuated equilibrium phenomenon could be building up where, at a certain point, a combination of reduced salinity and increased temperature could cause a large amount of heat exchange from the oceans into the atmosphere, thereby exacerbating atmospheric heating.

And all this is above and beyond the deleterious effects of decreased pH, due to greenhouse gases, on the thousands of species of marine organisms with CaCO3 shells.

My second question is, considering there are billions of internal combustion engines running 24/7 on the surface of our planet and in constant and intimate contact with the atmosphere, do the models that predict the rate of atmospheric warming from greenhouse gases also include the infrared radiation from all those internal combustion engines?

The greenhouse gas pollution comes from engines that are only 18 to 22% efficient, except for the large turbines run by utilities that can reach up to 60%. My point is that nearly 80% of the energy produced by internal combustion engines is converted to heat that immediately enters the atmospheric mix. Is this not considered statistically significant in light of the massive amount of IR heat per square meter that we have arriving on the planet from the sun?

If an estimate of that heat load and a scientific study to measure and quantify it has not been done, it should be done. We have alternatives. If it could be proven scientifically that the IR contribution by the internal combustion engines is deleterious to the biosphere, it would boost the transition to non-thermal mechanical energy applications like solar powered electric vehicles or at least batteries charged exclusively by solar power for said vehicles.

About 157,000 vehicles are manufactured each day on this planet. Why should we allow our vehicles to get only 20% mechanical energy and release 80% heat into the atmosphere when an electric motor is at least 70% efficient?

We need scientists to speak loudly and clearly that fossil fuels are deleterious to the biosphere. The status quo is not healthy or sustainable. You know that. Can you help to end the fossil fuel age? It’s high time we stopped digging our own graves on behalf of profit over planet.

for A.G. Gelbert:

LMGTFY; this search: heat from power climate

Found: http://carboncycle2.lbl.gov/resources/experts-corner/fossil-fuel-combusion-heat-vs-greenhouse-gas-heat.html

which answers your second question:
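A rough cross-check of the waste-heat question, assuming global primary energy use of about 18 TW (a round figure assumed here) and, as an upper bound, that all of it ends up as heat. Spread over Earth's surface (5.1 × 10¹⁴ m², also assumed), this comes to a few hundredths of a watt per square meter, compared with the heating of the order of 1 W/m² mentioned in the post.

```python
EARTH_SURFACE_M2 = 5.1e14


def global_mean_flux_w_per_m2(power_w):
    """Spread a global power (W) evenly over Earth's surface."""
    return power_w / EARTH_SURFACE_M2


waste_heat = global_mean_flux_w_per_m2(18e12)  # assumed ~18 TW, all becoming heat
print(f"direct heating: {waste_heat:.3f} W/m^2 vs ~1 W/m^2 heating rate cited in the post")
```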

#44 Roger

Well, it is another matter of discussion what state variables are easier, or more robust, or more reliable to obtain, but your claim was that Stefan gave an incorrect description of the underlying physics.

Somehow you seem to use exactly the same physics in your argument, which I would regard as good news in the end.

All the best,

Marcus

#51 (A.G. Gelbert)

“…if there is empirical evidence that the surface layers of the ocean are warming at a faster rate than the atmosphere…”

I think the case is rather the reverse. I used the woodfortrees site to plot sea surface temps (hadsst, in red) versus global surface temps (hadcrut4, in green), adding, for good measure, the UAH lower tropospheric record. As you’ll see if you click on the link below, the first two track very tightly over most of the record–not surprising, since most of the globe is ocean–but the global temps diverge over the last couple of decades, warming more quickly than the ocean. (The UAH atmosphere data, too, seems to warm more quickly.)

http://www.woodfortrees.org/plot/hadsst3gl/mean:13/plot/hadcrut4gl/mean:13/plot/uah/offset:0.15/mean:25

Here’s a ‘closeup’ of the period since satellite observations began in 1979:

http://www.woodfortrees.org/plot/hadsst3gl/mean:13/from:1979/plot/hadcrut4gl/mean:13/from:1979/plot/uah/offset:0.15/mean:25/from:1979

Lastly, the linear trends of the data shown in the last graph:

http://www.woodfortrees.org/plot/hadsst3gl/mean:13/from:1979/trend/plot/hadcrut4gl/mean:13/from:1979/trend/plot/uah/offset:0.15/mean:25/from:1979/trend
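The woodfortrees recipe in these links (mean:13 for a 13-month running mean, trend for a linear fit) can be approximated in plain Python. This is an assumed re-implementation for illustration, not woodfortrees' own code; in particular the alignment of the smoothed points in time may differ, which shifts the curve but not its fitted slope.

```python
def running_mean(values, window=13):
    """Simple moving average over `window` consecutive samples, returned only
    where a full window is available (woodfortrees' alignment may differ)."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


months = list(range(36))
series = [0.01 * m for m in months]  # toy series, not HadSST/HadCRUT data
smoothed = running_mean(series)
print(ols_slope(months[:len(smoothed)], smoothed))  # trend per month of the smoothed series
```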

A.G. Gelbert, you may also be interested in work like this:

http://www.ocean-sci.net/9/183/2013/os-9-183-2013.pdf

It is mostly concerned with improving the accuracy of satellite measurements of sea surface salinity, but you can see the complex pattern of freshening (and its reverse–the subtropical areas of the Atlantic are getting saltier, particularly in the NH, as are some other areas to lesser extents) observed over the global ocean. (Fig. 1 in the link.)

A. G. Gelbert 51, “green house gas pollution comes from engines that are only 18 to 22% efficient”

Fission or fusion production of electrical energy is not that much more efficient. About 2/3 of the heat produced to generate power would end up back in the oceans, exacerbating the problem.

Since the essence of global warming is ocean warming, it seems to me there is no answer without the conversion of some of this heat to work and the removal of as much as possible of the rest to the safety of the deep oceans.

Re #50: If this is your basis for not using OHC changes to monitor global warming, i.e.

“The only metric we have that goes back far enough for policy purposes, which is what is being discussed here, is the surface temperature and precipitation records.”

you are missing policy-relevant studies such as

Llovel et al 2014: Deep-ocean contribution to sea level and energy budget not detectable over the past decade. Nature Climate Change. http://www.nature.com/nclimate/journal/vaop/ncurrent/full/nclimate2387.html

and

Levitus et al 2012: World ocean heat content and thermosteric sea level change (0–2000 m), 1955–2010. Geophysical Research Letters. http://onlinelibrary.wiley.com/doi/10.1029/2012GL051106/abstract

We discuss what can be interpreted from such studies in our post

An alternative metric to assess global warming. http://judithcurry.com/2014/04/28/an-alternative-metric-to-assess-global-warming/

Stefan,

There is higher thermal expansion of sea water at great pressure than near atmospheric pressure. For example, at 2C and 6000 meters, the coefficient of thermal expansion is about 60% of that at the surface at 25C. (http://www.esr.org/documents/hpeters/siedlerpeters86.pdf) So substantial warming of very deep water, were this to occur, would contribute quite a lot to sea level rise.

If it will take a millennium for the effects of the current climate situation to dissipate due to OHC, can precisely the same argument not be made about the OHC changes due to the LIA, and in fact any other period, extreme or otherwise? If the inertia is strong, we cannot be confident that any climate effects we are seeing now are not largely just artifacts of earlier climate events echoing through time.

On the other hand, if we are confident that previous OHC changes have not (via their inertia) been responsible for current climate changes, then clearly high-frequency changes can occur without being swamped by the earlier OHC changes, and we can be confident that the inertia-induced OHC changes will, again, not make much difference to the high-frequency climate at that point in the future. They are therefore not something to be concerned about.

#57

Now some, not Eli to be sure, might think this is the same Levitus paper where somebunny, not #57 to be sure, posted a comment about “The Overstatement of Certainty In the Levitus et al 2012 paper”.

Just sayin.

> A.G. Gelbert

> … it is a fact …

Can you cite that? I tried to check the claim and found

http://www.newton.dep.anl.gov/askasci/phy00/phy00618.htm

Same question for the following statement about sodium and chlorine — cite please. I couldn’t find that anywhere else.

> A.G. G …

Note that at that link there’s a basis to make both statements, as Ali Khounsary of Argonne National Laboratory points out, but these are detectable differences, not big ones.

Here are some numbers on the second point: http://van.physics.illinois.edu/qa/listing.php?id=1457

Gavin, thank you for your response to my comment at #10.

In response to modelling of the oceans you say-

“All current coupled climate models include wavelength-dependent penetration of SW into the ocean and some even include the impacts of varying Chlorophyll on that penetration. Rather than being a fundamental flaw, it is in fact a very minor difference”

I would say the differences between SW opacity and translucency are not minor but major, critically so, and that climate models cannot possibly be modelling this correctly. The simplest check is the 255K base assumption for “surface without atmosphere”. The oceans are –

– IR opaque

– SW translucent

– Internally convecting

– Intermittently illuminated

– Have effective (not apparent) IR emissivity lower than SW absorptivity.

For these conditions simple empirical experiment shows that the 255K base assumption is in error by around 80C for the oceans. Globally this would mean “surface without atmosphere” should be around 312K not 255K.

This may seem an incredible claim, but errors of exactly this magnitude occurred between SB modelling for the Lunar regolith and empirical measurement from the Diviner mission. Even advanced 3D GCMs are showing surface temps being raised from around 255K to 288K by our radiative atmosphere not lowered from 312K to 288K. The parametrisations in the models for the true greybody properties of the oceans therefore cannot be correct.

[Response: You are totally confused as to where the 255 K comes from (surface temp in the absence of atmosphere but same albedo – nothing to do with ocean processes at all), and the difference btw the moon and Earth is mostly associated with heat capacity and is easily understandable. All of the issues you quote are actually included in ocean models, and the results are in complete contradiction with your claim. In any case, this is totally off-topic. – gavin]
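The 255 K figure in the response is the radiative-balance temperature of a planet with Earth's albedo but no atmosphere: absorbed sunlight S(1 − a)/4 equals emitted σT⁴. A minimal sketch, assuming S ≈ 1361 W/m² and a ≈ 0.3 (round values supplied here, not taken from the thread):

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def effective_temperature_k(solar_constant=1361.0, albedo=0.3):
    """Temperature at which a blackbody sphere emits what it absorbs;
    the factor 4 is the ratio of sphere area to intercepted disc area."""
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


print(f"{effective_temperature_k():.0f} K")  # about 255 K
```

No ocean property enters this calculation, which is the point of the response.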

Hank, given that the vapor pressure of fresh water is higher than that of salt water, the fresh water will cool faster since there will be more evaporation. Other than that, it is like the Mpemba effect: you need to tightly define the conditions to determine the outcome.
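How large is that vapor-pressure difference? An idealized Raoult's-law estimate, treating the roughly 35 g of salt per kg of seawater (a typical value assumed here) as fully dissociated NaCl, gives a reduction of only about 2%:

```python
WATER_G_PER_MOL = 18.015
NACL_G_PER_MOL = 58.44


def vapor_pressure_ratio(salt_g_per_kg):
    """Ideal Raoult's-law estimate of p(salt water) / p(fresh water),
    treating all dissolved salt as NaCl split into two ions."""
    water_mol = (1000.0 - salt_g_per_kg) / WATER_G_PER_MOL
    ion_mol = 2.0 * salt_g_per_kg / NACL_G_PER_MOL
    return water_mol / (water_mol + ion_mol)


print(f"{1 - vapor_pressure_ratio(35.0):.1%} lower than fresh water")
```

Real seawater is not an ideal solution, so treat this as an order-of-magnitude figure.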

Stefan:

Bob Tisdale is already trying to invert the sense of your post, though it seems unlikely that Anthony Watts will tolerate much by way of response.

Roger,

In the Judith Curry post that you mention in #57 I think you have a minor error and also do something unnecessary. Your basic model is

Delta Q = Delta F – Delta T/lambda

where Delta Q, you claim, is the radiative imbalance. This isn’t strictly correct. Delta Q is actually the change in system heat uptake rate over the time interval considered (i.e., it is the rate today minus the rate at the beginning). In your calculation you consider the period 1955-2010 which, I assume, is because this is the period for which we have OHC data. You then seem to calculate Delta Q using the change in OHC over this period divided by the time interval. This isn’t strictly correct, as it should have been the rate in 2010 minus the rate in 1955. Of course, we can’t really calculate an instantaneous rate, given the variability in the data, but we can at least estimate it if we consider a suitable time interval. Also, since you assumed it was in equilibrium in 1955 (probably not quite correct) the error was probably reasonably small.

On the other hand, you actually don’t need to consider only the 1955-2010 time interval. You can do what others (Otto et al., Lewis & Curry) have done, which is to consider the full instrumental temperature period (i.e., mid 1800s to now). The longer the time period, the more likely it is that you can average out any influence from internal variability. To use this longer baseline, all you need is some estimate for the system heat uptake rate at the beginning. Otto et al. used 0.08 W/m^2 and Lewis & Curry used 0.15 W/m^2. I think this may be why you got some slightly surprising results when you carried out your analysis.
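The effect of the starting heat-uptake rate can be seen by solving ΔQ = ΔF − ΔT/λ for λ, with ΔQ taken as the end rate minus the start rate, as described above. A minimal sketch: the ΔT, ΔF and end-rate values in the usage lines are hypothetical placeholders, while 0.08 and 0.15 W/m² are the starting rates quoted for Otto et al. and Lewis & Curry.

```python
def sensitivity_parameter(delta_t_k, delta_f_w_m2, q_end_w_m2, q_start_w_m2=0.0):
    """Solve dQ = dF - dT/lambda for lambda (K per W m^-2), with dQ = q_end - q_start."""
    delta_q = q_end_w_m2 - q_start_w_m2
    if delta_f_w_m2 <= delta_q:
        raise ValueError("forcing change must exceed the change in heat uptake")
    return delta_t_k / (delta_f_w_m2 - delta_q)


# Hypothetical changes: dT = 0.75 K, dF = 1.95 W/m^2, end uptake 0.65 W/m^2.
for q_start in (0.0, 0.08, 0.15):
    lam = sensitivity_parameter(0.75, 1.95, 0.65, q_start)
    print(f"start rate {q_start:.2f} W/m^2 -> lambda {lam:.3f} K per W/m^2")
```

Assuming equilibrium at the start (q_start = 0) overstates ΔQ and therefore λ; the larger the true starting rate, the smaller the inferred λ.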

#65 Russell, if you don’t mind, could you give a 200-word summary of what they even have in mind? I am getting a headache from the first paragraphs.

Your stomach seems better than mine

Russell # 65,

You may be surprised what Watts tolerates. He has a very open moderation policy. The shameful censorship of RC and SKS is in stark contrast.

Watts hates my guts. I am an empiricist and hard sceptic, not a lukewarmer. Yet Watts (a fervent lukewarmer) still allows my comments (typically after a 24-hour delay) at his site. I may not agree with his “lukewarm” position, but I cannot accuse him of inappropriate censorship.

Russell, you are free to comment there. Just don’t disguise your name or vilify others.

ATTP, however, if you estimate the heat uptake rate from the global warming or SST warming, you might as well use 2C as your goal, not the OHC which you are inferring from it.

Given the incongruity of his comments here, I thought to gain a better understanding of where Roger A. Pielke Sr. is coming from by examining his link @57. Just as …and Then There’s Physics @66 has described, this link is to a posting on the planet Climateetcia. It is entitled An alternative metric to assess global warming by Roger A. Pielke Sr., Richard T. McNider, and John Christy. It is an interesting title as the ‘alternative’ discussed is pretty much the same as the original.

The genesis of the work is an objection to the equation ΔQ = ΔF – ΔT/ λ as “the actual implementation of the equation can be difficult” because of ΔT, a quantity which “has been shown to have issues with its accurate quantification” apparently.

To overcome this difficulty, a new equation is proposed.

This equation is then used for the period 1950/55–2010/11 (I’m not sure what happened to all that ‘annual averaging’ mind) using Levitus+10% for the imbalance, AR5 SPM for both the forcing and for ΔT and finally a value for the strength of climate feedback per ºC from Soden et al (2008) (which Pielke et al. manage to mis-attribute). (The astute observer will note the continued presence of that pesky ΔT in this ‘alternative’ metric.)

Thus, with all variables quantified, the equation can be used to compare measured and calculated values. This apparently shows a “discrepancy”, although not a guaranteed “discrepancy” due to uncertainties. To address this situation, the “next step” needs current time (2014) estimates and the “apparent discrepancy” also needs a “higher level of attention.”

I would guess the questions posed by Roger A. Pielke Sr. @5 derive from all this “need”. However, I remain unclear as to the purpose of this ‘alternative’ metric. Perhaps it will be used to achieve a guaranteed “discrepancy.” Or perhaps, because the ‘alternative’ is the same as the original and λ=1/ECS, this upside-down topsy-turvy analysis will allow us to obtain that long-elusive derivation of an accurate ECS. Or perhaps not.

“and Then There’s Physics” – Re# 66,

the equation you refer to is 1.1 in

National Research Council, 2005: Radiative forcing of climate change: Expanding the concept and addressing uncertainties. Committee on Radiative Forcing Effects on Climate Change, Climate Research Committee, Board on Atmospheric Sciences and Climate, Division on Earth and Life Studies, The National Academies Press, Washington, D.C., 208 pp.

http://www.nap.edu/openbook.php?record_id=11175&page=21

You wrote

“you claim, is the radiative imbalance. This isn’t strictly correct. Delta Q is actually the change in system heat uptake rate over the time interval considered (i.e., it is the rate today minus the rate at the beginning).”

This is exactly my point. We can use the change in system heat uptake over a time interval to diagnose the average TOA radiative imbalance over this time period. Since there are other heat sources/sinks besides the ocean (e.g. sea ice melt, permafrost melt, ground heating, troposphere etc), it is not all of it, but we have a reasonably good idea of the fraction that involves the oceans.
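As a worked sketch of that diagnosis, assume the ocean takes up a fraction f ≈ 0.9 of the added energy (an assumed round share), take A as Earth's surface area (≈ 5.1 × 10¹⁴ m²), and apply it to the 2.5 × 10²³ J ocean gain since 1970 quoted in the post, accrued over roughly 40 years (≈ 1.26 × 10⁹ s):

$$
\overline{N} \approx \frac{\Delta H_{\mathrm{ocean}}}{f\,A\,\Delta t}
= \frac{2.5\times10^{23}\ \mathrm{J}}{0.9 \times 5.1\times10^{14}\ \mathrm{m^2} \times 1.26\times10^{9}\ \mathrm{s}}
\approx 0.43\ \mathrm{W\,m^{-2}}
$$

This is an average over the interval, not the imbalance at its end.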

This is an approach that Jim Hansen used in his response to John Christy and I in

http://pielkeclimatesci.files.wordpress.com/2009/09/1116592hansen.pdf

where he wrote

“The Willis et al. measured heat storage of 0.62 W/m2 refers to the decadal mean for the upper 750 m of the ocean. Our simulated 1993-2003 heat storage rate was 0.6 W/m2 in the upper 750 m of the ocean. The decadal mean planetary energy imbalance, 0.75 W/m2, includes heat storage in the deeper ocean and energy used to melt ice and warm the air and land. 0.85 W/m2 is the imbalance at the end of the decade.”

Now I have some questions for you:

1. What is the best estimate (2014) of the current global averaged radiative imbalance?

2. How do you estimate this?

3. What is the current (2014) radiative forcing from added CO2 concentrations (above the pre-industrial concentrations)? We do not need the difference between these two time periods, which the IPCC presents, but the current forcing given that some of the added CO2 has been adjusted to by the warming up to this time.

As to our results in our post http://judithcurry.com/2014/04/28/an-alternative-metric-to-assess-global-warming/, I would welcome your analysis using the approach we recommend.

Roger Sr.

Steve Fitzpatrick 58, “substantial warming of very deep water, were this to occur, would contribute quite a lot to sea level rise.”

Steve, I believe what is being assumed is a redistribution of the surface heat to the deep ocean, and since, as you point out, the thermal expansion coefficient is smaller there (at 1000 meters and 4C it is about half its value for tropical surface water at 25C), it is clear sea level rise would be less.

MARodger – Unfortunately, you miss the point. The term “alternative” means that instead of using an equation such as 1.1 in

National Research Council, 2005: Radiative forcing of climate change: Expanding the concept and addressing uncertainties. Committee on Radiative Forcing Effects on Climate Change, Climate Research Committee, Board on Atmospheric Sciences and Climate, Division on Earth and Life Studies, The National Academies Press, Washington, D.C., 208 pp.

where a global average temperature anomaly is used directly, we can bypass that need in order to assess the global average TOA radiative imbalance. We do not need the 2C threshold as the primary metric to diagnose global warming. There is a more accurate measure.

One of the benefits of letting the oceans do the time and space integration for us is that there is no time lag, unlike the global average temperature anomaly, which takes time to respond to a radiative imbalance. There is no unresolved heating as long as we can accurately estimate all of the Joules added to the climate system.

Moreover, rather than directly using satellite and other measures of changes in radiative fluxes over time to diagnose the global averaged radiative imbalance, we can use the time-space integration of heating provided by the oceans.

Now that we have improved monitoring of ocean heat content (from Argo and satellites), we should move beyond the global average surface temperature anomaly as the primary metric to communicate global warming to policymakers. Certainly as climate scientists, we should be using the ocean heat content changes as a primary metric to assess global warming both in the real world and in the climate models.

Roger Sr.

Regarding the comment @73.

The equation 1-1 referred to within National Research Council, 2005 (on p19) is dH/dt = f – T’/λ, where T’ is the change in surface temperature which is, goodness, just like the quantity taken from the AR5 SPM and “directly” used within that “alternative.” I’m not sure how such use is supposed to “bypass that need.”

Concerning the bulk of the comment @73, I have already given my opinion on it @42 above. (I note @42 that my description of how I calculated trends from 30 month periods of Levitus OHC data using linear regressions was very poorly worded.)

Roger,

Thanks for the response.

This is exactly my point. We can use the change in system heat uptake over a time interval to diagnose the average TOA radiative imbalance over this time period. Since there are other heat sources/sinks besides the ocean (e.g. sea ice melt, permafrost melt, ground heating, troposphere etc), it is not all of it, but we have a reasonably good idea of the fraction that involves the oceans.

I agree with this, but I think you’re missing my point. The average rate of change over some time interval is not the same as the current value. Something we would probably like to know is the current TOA imbalance (i.e., how much we are out of equilibrium now), rather than the average for the last 60 years. Obviously we can’t determine it instantaneously, but using a suitable time interval (a decade, maybe) we can, and indeed do. Of course, if the radiative imbalance has been constant, then the average and the instantaneous values would be the same, but this would not be true as we approach equilibrium.

As to your questions :

1. What is the best estimate (2014) of the current global averaged radiative imbalance?

2. How do you estimate this?

I’ll answer 1 and 2 together. If you consider the latest OHC estimates, then it has increased by about 10^23 Joules in the last 10 years. If I average that over the whole globe and assume (as I think you did) that this is associated with 90% of the energy increase, then I get an average for the last decade of 0.7 W/m^2. I realise this isn’t, strictly speaking, the value for 2014. I’m simply suggesting that if we want to estimate the value today, we’d want to use as short an interval as possible, rather than a very long time interval.
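The arithmetic in the answer above can be checked with a short script. This is a minimal sketch, not the commenter's own calculation: it assumes a mean Earth radius of 6.371e6 m, a 365.25-day year, and (as stated in the comment) that the ocean accounts for 90% of the added energy. The function name `ohc_to_imbalance` is hypothetical.

```python
import math

EARTH_RADIUS_M = 6.371e6                # mean Earth radius (assumed value)
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year in seconds

def ohc_to_imbalance(delta_ohc_joules, years, ocean_fraction=0.9):
    """Convert an ocean heat content change into a mean planetary imbalance (W/m^2).

    The ocean's share of the energy gain is scaled up by 1/ocean_fraction to get
    the total, then spread over the whole surface of the Earth and the whole interval.
    """
    total_energy = delta_ohc_joules / ocean_fraction
    area = 4.0 * math.pi * EARTH_RADIUS_M ** 2
    return total_energy / (years * SECONDS_PER_YEAR * area)

print(round(ohc_to_imbalance(1e23, 10), 2))  # 0.69
```

With `ocean_fraction=1.0` (ocean only, no allowance for ice, land and air) the same input gives roughly 0.62 W/m^2, which shows how much the result leans on the 90% assumption.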

3. What is the current (2014) radiative forcing from added CO2 concentrations (above the pre-industrial concentrations)? We do not need the difference between these two time periods, which the IPCC presents, but the current forcing given that some of the added CO2 has been adjusted to by the warming up to this time.

I’m not sure I quite get what you’re asking here. Typically a radiative forcing is defined as some change, rather than as some value at an instant in time. So, for example, the change in anthropogenic forcings relative to 1750 is about 2 Wm-2. If the TOA imbalance today is around 0.7Wm-2 then that suggests that the Planck response plus other feedbacks (plus solar and volcanoes) is 0.7 Wm-2 less than the change in anthropogenic forcings. Maybe you could rephrase this, as I’m not sure I’m getting what you’re asking here.

As to our results in our post http://judithcurry.com/2014/04/28/an-alternative-metric-to-assess-global-warming/, I would welcome your analysis using the approach we recommend.

I think the method is quite useful but – as I said above – I think how you determine Delta Q is wrong. Your method is really just a variant of the Otto et al./Lewis & Curry energy balance method. The main difference seems to be that they use the OHC data to estimate the change in system heat uptake rate and use model estimated changes in forcings to estimate the TCR and ECS. You seem to have used model estimates for the feedbacks and the changes in forcings to estimate the change in system heat uptake rate. From Otto et al. (and Lewis & Curry) we could conclude that the feedbacks seem (at the moment) smaller than the climate models suggest. This appears to be roughly the same as you get since you conclude that using the model feedbacks you get a higher radiative imbalance than the OHC data suggests. As I said, I think these methods are quite useful, but am not quite sure what you’re concluding from this. Given the uncertainties, these simple estimates are not inconsistent with results from GCMs.
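For readers unfamiliar with the Otto et al./Lewis & Curry energy balance method, here is a rough sketch of its two headline relations. Rearranging Delta Q = Delta F – Delta T/lambda gives lambda = Delta T/(Delta F – Delta Q), and multiplying by the forcing from doubled CO2 gives ECS; TCR drops the heat-uptake term. The value of `F_2X` (3.7 W/m^2) is an assumption here, and the inputs simply combine round numbers quoted elsewhere in this thread (about 0.85C of warming, about 2 W/m^2 of forcing change, a Delta Q of 0.65 W/m^2). They are illustrative, not a published estimate, and the function name is hypothetical.

```python
F_2X = 3.7  # assumed radiative forcing for a doubling of CO2, W/m^2

def energy_budget_estimates(delta_t, delta_f, delta_q, f_2x=F_2X):
    """Return (TCR, ECS) in K from the energy-budget relations.

    delta_t: change in global mean surface temperature (K)
    delta_f: change in radiative forcing (W/m^2)
    delta_q: change in system heat uptake rate (W/m^2), not the end-of-period imbalance
    """
    if delta_f <= delta_q:
        raise ValueError("ECS is undefined unless delta_f > delta_q")
    tcr = f_2x * delta_t / delta_f              # transient response: ignores heat uptake
    ecs = f_2x * delta_t / (delta_f - delta_q)  # equilibrium: only the 'realised' forcing
    return tcr, ecs

tcr, ecs = energy_budget_estimates(0.85, 2.0, 0.65)
print(f"TCR ~ {tcr:.2f} K, ECS ~ {ecs:.2f} K")
```

Note that substituting the end-of-period imbalance for Delta Q, when the starting rate was not zero, shrinks the denominator and inflates the ECS estimate, which is why the definition of Delta Q matters here.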

“We do not need the 2C threshold as the primary metric to diagnose global warming.”

Huh? It’s not a metric for diagnosis of anything; it’s a policy benchmark to (hopefully) avoid the worst damage, as discussed above. Let’s not lose track of the fact that this thread isn’t about attribution, it’s about mitigation policy calibration.

#72, Jim Baird,

What I was addressing was this sentence from the post:

“By the way, neither has sea-level rise due to thermal expansion, because the thermal expansion coefficient is several times larger for warm surface waters than for the cold deep waters”

Which is not really accurate. The expansion coefficient for cold deep water (e.g. 6000 meters, 2C) is not several times smaller than for warm surface water (say 25C); the surface expansion coefficient at 25C is only about 50% greater than at 6000 meters and 2C. Warming at great depth does not cause as much expansion as similar warming at the surface, of course, but the difference is not as large as you might think if you ignore the influence of pressure on the coefficient. The same quantity of heat accumulated at any depth in the ocean will cause comparable thermal expansion; yes, somewhat less at great depth, but not by a large factor.
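The last point follows from a simple heat budget: a given amount of heat Q raises sea level by alpha*Q/(rho*c_p*A), whatever volume of water shares it, so the expansion per joule at two depths differs mainly through alpha (rho*c_p varies comparatively little). A minimal sketch; the density, heat capacity, ocean area and both expansion coefficients are assumed, illustrative values rather than measurements, and the function name is hypothetical.

```python
RHO_SEAWATER = 1025.0    # kg/m^3, assumed constant with depth
CP_SEAWATER = 3990.0     # J/(kg K), assumed constant with depth
OCEAN_AREA_M2 = 3.6e14   # approximate global ocean surface area (assumed)

def steric_rise_m(heat_joules, alpha_per_k, rho=RHO_SEAWATER, cp=CP_SEAWATER,
                  area=OCEAN_AREA_M2):
    """Sea level rise (m) from adding heat to water with expansion coefficient alpha.

    Heat Q warms a water volume V by dT = Q / (rho * V * cp); its volume grows by
    alpha * V * dT = alpha * Q / (rho * cp), independent of V. Spreading that
    extra volume over the ocean area gives the rise.
    """
    return alpha_per_k * heat_joules / (rho * cp * area)

# Hypothetical coefficients chosen only to mirror the ~50% difference cited above
alpha_surface = 3.0e-4   # 1/K, warm surface water (illustrative)
alpha_deep = 2.0e-4      # 1/K, cold, high-pressure deep water (illustrative)

q = 1e23  # J, the same heat added at either depth
ratio = steric_rise_m(q, alpha_surface) / steric_rise_m(q, alpha_deep)
print(f"surface/deep expansion ratio: {ratio:.2f}")
```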

and Then There’s Physics – We actually are remarkably close in our views.

On #1 and #2 we are in agreement.

On #3, in terms of the definition of radiative forcing, in the NRC (2005) report it is given that

“Radiative forcing is reported in the climate change scientific literature as a change in energy flux at the tropopause, calculated in units of watts per square meter (W m−2)”

Also, it is written that this forcing is a

“perturbation .. due to a change in concentration of the radiatively active gases, a change in solar radiation reaching the Earth, or changes in surface albedo. “

A change in energy flux can be referred to as an “energy flux divergence”. The time scale is not defined, but while we may average over time to obtain more robust estimates, it is a differential quantity. Indeed, the term “perturbation” is used. Thus, there is a current (2014) radiative flux divergence, although one would need a model or other reanalysis approach to obtain it.

I agree this is not an easy task, which is why, presumably, the IPCC presents as a change since 1750. In the previous IPCC assessment they actually labeled their figure as the radiative fluxes in 2005 (they said otherwise in a footnote). That confusing labeling was corrected in the most recent report, but the IPCC assessment is silent on the current fluxes.

On #4, what I have concluded is that the model predictions are not properly simulating all of the radiative feedbacks. We estimated in a way that was different from Lewis and Curry, but come up with a similar conclusion. I agree that the climate modeled values may still be within the observational uncertainty, but suggest this is an analysis approach that should be used in the future for model evaluation.

With the diagnostic of ocean heat changes in order to obtain robust estimates of the global average TOA radiative imbalance, there is more solid scientific basis to communicate global warming than just the global average surface temperature anomaly. Ocean heat content change assessments provide an excellent tool to assist in policy making.

Roger Sr.

[Response: “Perturbations” have to be defined with respect to a base case. There is no such thing as a radiative forcing without this. Given the large anthropogenic impact of the industrial revolution, estimating forcing with respect to 1750 makes sense. You can of course estimate forcing with respect to any time you like, so if you want such a number, please specify the base period you would support. – gavin]

I still don’t understand Roger’s insistence on engaging in metric-comparison. Of course, everything being discussed in this post is important for scientists to understand. You can’t diagnose the state of the climate system in a comprehensive way without both surface temperature and ocean heat content changes, just like we also want to understand humidity, albedo, ocean acidity, precipitation, snow cover, etc. It strikes me as very odd to say that one of these variables is inherently “better” than another.

Regarding which of these is “best” to talk to policymakers about, it’s probably worth asking this to policymakers in a given context, rather than just assuming what they want to hear about. Making the leap of OHC being a “3-D” field or a measure of TOA radiative imbalance to being the metric policymakers want to hear about doesn’t follow in any coherent way.

I for one am not very interested in the radiative imbalance for the sake of the radiative imbalance, but because ocean heat uptake has offset a portion of the current radiative forcing and as a result the planet is cooler than it otherwise would be without this uptake. How future global warming is expressed (in surface temperature) is determined by future ocean heat uptake and its spatial structure.

As others have pointed out though, Roger keeps confusing a diagnostic with a benchmark that people are willing to accept given the non-zero future carbon emissions. Stefan has made a compelling case that surface temperature is useful in this respect, largely because of its straightforward link to cumulative carbon emissions and because of how well many things scale with global temperature (including impacts).

Konrad writes :

I may not agree with his ‘lukewarm” position, but I cannot accuse him of inappropriate censorship.

Russell, you are free to comment there. Just don’t disguise your name or vilify others.

This is counterfactual – unless you are one of his censors.

I think the primary metric should be CO2 equivalent PPM. It directly links to emissions while everything else is results. Why 280, 350, whatever? That is when you talk about your favorite secondary metric.

Gavin – You wrote “Perturbations” have to be defined with respect to a base case. It seems you and I have slightly different views on this.

Please refer to

Ellis et al. 1978: The annual variation in the global heat balance of the Earth. J. Geophys. Res., 83, 1958-1962. http://pielkeclimatesci.files.wordpress.com/2010/12/ellis-et-al-jgr-1978.pdf

See their figure 4. They present the monthly net radiative flux at the top of the atmosphere. The monthly value they provide could be presented as a perturbation from the annual mean.

Their values have undoubtedly been updated. However, using their format, I am asking for the part of such a net flux that is due to CO2 over and beyond the pre-industrial level given that some of the increase has been compensated for by warming (and thus a larger outgoing long wave flux).

I also would like to see the GISS model values for the current global annual average radiative imbalance based on the model projections.

Roger,

Gavin can probably deal with this better than I can, but I’m not really getting what you’re asking. Even the figure from Ellis et al. (1978) is still relative to some baseline. I’m assuming it’s relative to an annual average, which is presumably 0 W/m^2.

I don’t understand what you mean by this

I am asking for the part of such a net flux that is due to CO2 over and beyond the pre-industrial level given that some of the increase has been compensated for by warming (and thus a larger outgoing long wave flux).

Consider some base period (call it 1750) when we are in equilibrium. Since that time we’ve increased atmospheric CO2 which has produced a net change in anthropogenic radiative forcing of about 2 W/m^2 (okay, there are uncertainties, but let’s ignore that for now). The temperature has also risen and produced a negative feedback (Planck response). There have also been other feedbacks due to that temperature rise; some positive (water vapour), some negative (lapse rate feedback), some a bit uncertain (clouds). Overall, the change in anthropogenic forcing plus the Planck response, plus the other feedbacks have resulted in a state in which we have a TOA imbalance today of about 0.7 W/m^2. I don’t know how else to describe the process or how you can determine some part of this that is due to CO2.
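The budget described in that comment can be written as N = Delta F + R, where N is the TOA imbalance and R is the combined Planck and feedback response. A minimal sketch using the comment's round numbers (2 W/m^2 forcing change, 0.7 W/m^2 imbalance); the 0.85 K of warming is borrowed from elsewhere in the thread, and the 1750 versus 1850 baseline mismatch is ignored. The function names are hypothetical and the result is illustrative only.

```python
def net_radiative_response(imbalance, forcing_change):
    """Net radiative response R (W/m^2) implied by N = dF + R, i.e. R = N - dF.

    R lumps together the Planck response and all other feedbacks (plus anything
    not included in dF, such as solar or volcanic terms).
    """
    return imbalance - forcing_change

def effective_feedback_parameter(imbalance, forcing_change, delta_t):
    """Net response per kelvin of surface warming (W/m^2/K); negative means stabilising."""
    return net_radiative_response(imbalance, forcing_change) / delta_t

r = net_radiative_response(0.7, 2.0)
lam = effective_feedback_parameter(0.7, 2.0, 0.85)
print(f"R = {r:.1f} W/m^2, effective feedback = {lam:.2f} W/m^2/K")
```

The sketch only splits the imbalance into "forcing" and "everything that responded to warming"; it cannot by itself say what part of today's imbalance is "due to CO2", which is the point being made in the comment.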

Kevin McKinney wrote: “Let’s not lose track of the fact that this thread isn’t about attribution, it’s about mitigation policy calibration.”

Any discussion of mitigation policy with deniers inevitably retreats towards discussion of whether warming can be attributed to anthropogenic forcing at all, and then towards whether warming is occurring at all.

Gavin – To make sure my perspective is clear.

The global average radiative imbalance = global average radiative forcings + global average radiative feedbacks (1)

This is true over any time period of assessment.

What we need (in my view), given that we can diagnose the global average radiative imbalance from ocean heat changes, are the components that make up the forcings and feedbacks. The Wielicki et al 2013 paper provides useful information on the component parts of the feedbacks. However, the forcing components are not clear. These certainly are not the differences from 1750 since there has been some adjustment over time from the feedbacks.

I am requesting the components for the forcing including from CO2 and the other greenhouse gases (and the other terms) that comprise equation (1).

Roger Sr.

[Response: That only makes sense as a perturbation from a state when the climate was in quasi-equilibrium – and since that probably hasn’t been the case since the pre-industrial, the forcing terms you are looking for are exactly what IPCC provided (i.e. relative to 1750 conditions). – gavin]

green house gas pollution comes from engines that are only 18 to 22% efficient

Actually, it does not matter how efficient a heat engine is in creating useful work: energy is conserved, and whether you do useful work or not, that enthalpy of formation is delivered to the local environment. The crux of global warming is that there is a nightly relief valve freeing that energy into deep space, which some of the reaction byproducts are interfering with. For instance, we do very little work with the solar irradiance, and that is by far the greatest energy input into the planetary system. Whether we do useful work with it or not, that energy has to be released for the system to maintain an equilibrium temperature state. Efficiency is, by and large, a microscopically small problem if the reaction byproducts of the energy conversion process are greenhouse gas neutral.

What is being discussed is a policy goal. Policy goals need to be simply stated, understandable by the policymakers and the general public. Ocean heat content, TOA radiative flux and the other suggestions here are not. Even CO2 mixing ratio has no immediate feedback into daily life.

There is settled agreement that 3C or higher would be a disaster, 2C is PERHAPS bearable and 1C would be ok, but have some consequences we might not like.

The attempt to do away with the 2C target is simply a Gish gallop.

Eli,

“There is settled agreement that 3C or higher would be a disaster, 2C is PERHAPS bearable and 1C would be ok, but have some consequences we might not like.”

I assume those are relative to pre-industrial temperatures (say, 1850). If so, then I do wonder about the consequences of 1C, considering that the current warming is perhaps 0.85C since 1850. I mean, there should be some pretty obvious negative effects already visible. Can you point out the negatives you expect/observe for 1C and 2C above pre-industrial temperatures?

Gavin – you wrote

[Response: That only makes sense as a perturbation from a state when the climate was in quasi-equilibrium – and since that probably hasn’t been the case since the pre-industrial, the forcing terms you are looking for are exactly what IPCC provided (i.e. relative to 1750 conditions). – gavin]

Thank you for clarifying. We disagree on this. I hope others on this and other weblogs address if they agree with you that the current 2014 radiative forcing is the same as the difference between 1750 and now. Also, if they agree with you that equation (1) only applies in a quasi-equilibrium climate system.

Roger Sr.

Can you point out the negatives you expect/observe for 1C and 2C above pre-industrial temperatures?

Irreversible sea level rises and ocean acidification for starters. Once that heat gets into the deep ocean, it is not going to radiate back into space for quite a while. It’s good that we have these kinds of thermal sinks and buffers, but they are not going to last forever. We’re headed for the Eocene.

Roger,

I agree with Gavin and is essentially the point I was trying to make in my first comment. Your equation 1 is

Delta Q = Delta F – Delta T/lambda

You seem to assume that Delta Q is the radiative imbalance. As Gavin points out, this is only true if you start from an equilibrium state. More generally it is the change in system heat uptake rate (i.e., the difference between the radiative imbalance at the end and at the beginning). You can read the first paragraph in Section 2 of Lewis & Curry for example. Otto et al. describe it similarly.

Also, if they agree with you that equation (1) only applies in a quasi-equilibrium climate system.

I don’t think this is what Gavin said. Delta Q is only the radiative imbalance, if you’re considering a system that starts in equilibrium.

Actually, I should probably add that in the equation

Delta Q = Delta F – Delta T/lambda

all the Delta terms are changes. Delta Q being the change in system heat uptake rate, Delta F being the change in radiative forcing and Delta T being the change in temperature. All of these have to be changes over the same time interval. Delta Q will only be the same as the radiative imbalance at the final time interval if it is zero (in equilibrium) at the beginning. That’s why I don’t understand what you mean by the current 2014 radiative forcing. It’s possible for there to be a net radiative imbalance at any instant in time (difference between incoming and outgoing flux), but I don’t understand how one can define a radiative forcing at some instant in time.

Roger A. Pielke Sr. @85

I regret having to point out an error for a second time but it is now directly made on this thread and it is of some significance. So it bears repeating and in some detail.

You attribute Wielicki et al. (2013) as “provid[ing] useful information on the component parts of the [global average radiative] feedbacks.” This is incorrect. It is certainly a mis-attribution. I believe it is also a misunderstanding of the nature of the data being ‘provided’.

It is correct to say that Figure 1, of that paper (entitled ‘Achieving Climate Change Absolute Accuracy in Orbit’ and complete with an impressive list of 50 authors,) presents values and maps of the geographical distribution for “…means of decadal feedback for temperature, water vapor, surface albedo, and clouds.” But that Figure 1 is reproduced from elsewhere. And to be clear, this data is not the outcome of any ‘accurate orbital’ data-gathering. The CLARREO mission which is the subject of Wielicki et al. (2013) has “a launch readiness date of no earlier than 2023.” It thus would be a surprise if Figure 1 did present such highly-prized data from existing satellites when the paper is describing why such data is “critical for assessing changes in the Earth system and climate model predictive capabilities” and how necessary data will be provided by CLARREO.

As already pointed out @70 above, the data presented in that Figure 1, indeed the figure itself, originates as Figure 8 of Soden et al. (2008) ‘Quantifying Climate Feedbacks Using Radiative Kernels’. That Figure 8 is described as “Multimodel ensemble-mean maps of the temperature, water vapor, albedo, and cloud feedback computed using climate response patterns from the IPCC AR4 models and the GFDL radiative kernels.” The data is the output of computer models and thus somewhat different in nature from satellite-measured data.

The true nature of this graphic is made clear in Wielicki et al. (2013). However I believe its true nature is far from clear in the mind of Roger A. Pielke Sr.

Eli at #66. Yes, I agree. I’m certainly not arguing for OHC as a target. I’m actually trying to point out that Roger doesn’t appear to be using his own equation correctly.

Steve (#88),

“An important change is the emergence of a category of summertime extremely hot outliers, more than three standard deviations (3°) warmer than the climatology of the 1951-1980 base period. This hot extreme, which covered much less than 1% of Earth’s surface during the base period, now typically covers about 10% of the land area. It follows that we can state, with a high degree of confidence, that extreme anomalies such as those in Texas and Oklahoma in 2011 and Moscow in 2010 were a consequence of global warming because their likelihood in the absence of global warming was exceedingly small.” http://pubs.giss.nasa.gov/abs/ha00610m.html

So, increased mortality and crop loss from heatwaves are a set of pretty obvious negative effects already visible.
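The “much less than 1%” figure for the base period in the quote is roughly what a normal distribution implies. A minimal sketch, assuming summer anomalies are normally distributed about the 1951-1980 climatology: the fraction of area beyond +3 standard deviations is then about 0.13%, so a typical coverage of about 10% is an increase of well over an order of magnitude. The function name is hypothetical.

```python
import math

def fraction_beyond(k_sigma):
    """Fraction of a normal distribution lying more than k_sigma standard deviations above the mean."""
    return 0.5 * math.erfc(k_sigma / math.sqrt(2.0))

base_period = fraction_beyond(3.0)  # expected area coverage if the climate were unchanged
recent = 0.10                       # "about 10% of the land area", from the quote
print(f"3-sigma coverage: {base_period:.2%}, increase: about {recent / base_period:.0f}x")
```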

and Then There’s Physics

You wrote

“ Delta Q being the change in system heat uptake rate, Delta F being the change in radiative forcing and Delta T being the change in temperature. All of these have to be changes over the same time interval. Delta Q will only be the same as the radiative imbalance at the final time interval if it is zero (in equilibrium) at the beginning.”

Why do you conclude Delta Q is the same as the radiative imbalance only if it is zero (in equilibrium) at the beginning? Since you agree that Delta Q is the change in system heat uptake rate over a time interval, which we can obtain from ocean heat storage changes over this period, what else does it represent in your view?

You are claiming that heat storage changes over any arbitrary time slice cannot be used to diagnose the global averaged radiative imbalance over this time period since some radiative imbalance is missed. Where is the heating from this missed imbalance?

Roger Sr.

MARodger – I am sorry but I am missing your point. We used the Wielicki et al. (2013) paper only because they provided a convenient set of information to estimate the global averaged radiative feedbacks. We do not claim these values are from ‘accurate orbital’ data-gathering. Nor are they the final word, as you noted.

Since you feel that other better current estimates of the global average radiative feedbacks are available (from models and/or observations), please provide them and we can then use in the analysis framework we provide.

This thread (with the other comments) has been quite informative already, as I am finding out, much to my surprise, that the current global average radiative forcing is accepted to be the difference between now and 1750. This indicates an even poorer performance of the multi-decadal global climate model projections when we compare with the global averaged radiative imbalance and our estimate of the feedbacks that we have used so far.

Roger Sr.

Chris Dudley – To support your report on the occurrence of more heat waves, see also our paper

Gill, E.C., T.N. Chase, R.A. Pielke Sr, and K. Wolter, 2013: Northern Hemisphere summer temperature and specific humidity anomalies from two reanalyses. J. Geophys. Res., 118, 1–9, DOI: 10.1002/jgrd.50635. Copyright (2010) American Geophysical Union. http://pielkeclimatesci.files.wordpress.com/2013/08/r-341.pdf

Our conclusion reads in part

“We find that: (1) areas of the NHEXT, including the southwestern tip of Greenland, experienced a summer heat wave during 2012 that was almost as extreme in spatial extent and magnitude as the Russian heat wave of 2010, (2) there is an increasing trend in summer heat waves and positive specific humidity anomalies and a decreasing trend in summer cold waves and negative specific humidity anomalies..”

I agree that this is a valuable, societally and environmentally critical metric to assess long term climate trends. It is, in my view, more useful than the 2C criterion.

Roger Sr.

Roger,

Why do you conclude Delta Q is the same as the radiative imbalance only if it is zero (in equilibrium) at the beginning? Since you agree that Delta Q is the change in system heat uptake rate over a time interval, which we can obtain from ocean heat storage changes over this period, what else does it represent in your view?

Let me try and clarify. I agree that Delta Q is the change in system heat uptake rate over the time interval. That I don’t dispute. I also agree that you can get it from the OHC. However, this is maybe where we differ. In my view, the correct way is to use the OHC to determine the system heat uptake rate at the end of the interval (i.e., 2000 – 2010, for example) and at the beginning (1955 – 1965, for example). Therefore Delta Q only matches the radiative imbalance at the end of the time interval if the radiative imbalance happens to be zero at the beginning. Also, it’s not correct to determine Delta Q using the total change in OHC divided by the total time interval, since that is the average system heat uptake rate, not the change in system heat uptake rate.

If you consider Otto et al. (who use the same basic approach as you suggest) their calculation uses a base interval of 1860-1879 and then consider different recent decades (1970s, 1980s, 1990s, 2000s). For the 2000s, they estimate a system heat uptake rate of 0.73 W/m^2. For the base interval of 1860-1879 they assume a value of 0.08 W/m^2. Therefore the Delta Q in their calculation is 0.73 – 0.08 = 0.65 W/m^2 (see Table S1, for example).
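The distinction being drawn here can be made concrete. The first part reproduces the Otto et al. subtraction quoted above; the second uses a purely hypothetical uptake-rate history (rising linearly from 0.2 to 0.8 W/m^2 over 50 years, not real data) to show that the average rate over an interval, the change in rate, and the end-of-interval imbalance are three different numbers. The helper `delta_q` is a hypothetical name.

```python
def delta_q(rate_end, rate_start):
    """Change in system heat uptake rate (W/m^2) between two periods."""
    return rate_end - rate_start

# Otto et al. values as quoted above: 2000s vs. the 1860-1879 base period
print(round(delta_q(0.73, 0.08), 2))  # 0.65

# Hypothetical uptake-rate history: linear rise from 0.2 to 0.8 W/m^2 over 50 years
years = 50
rates = [0.2 + 0.6 * t / years for t in range(years + 1)]

# Time-mean rate; for this linear profile the mean of the samples equals
# total heat gained / elapsed time, i.e. what total OHC change / interval gives
average_rate = sum(rates) / len(rates)
change_in_rate = delta_q(rates[-1], rates[0])
final_imbalance = rates[-1]

print(round(average_rate, 2), round(change_in_rate, 2), round(final_imbalance, 2))
```

Here the average rate is 0.5 W/m^2, Delta Q is 0.6 W/m^2 and the end-of-interval imbalance is 0.8 W/m^2; only if the starting rate were zero would Delta Q and the final imbalance coincide.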

I should add that I’ve written a post about this in which I do suggest that your interpretation is wrong. If you think I mis-represent you in any way, feel free to point that out, either here or there.

Eli Rabett wrote: “There is settled agreement that 3C or higher would be a disaster, 2C is PERHAPS bearable and 1C would be ok, but have some consequences we might not like.”

It is self-evident that the warming that has already occurred is already having consequences that we don’t like, and has already ensured worse consequences that we will like a lot less.

There is already a steady stream of “disasters” that are at least in part directly attributable to anthropogenic CO2 emissions.

The mitigation policy goal is straightforward: zero emissions, as soon as possible.