I have written a number of times about the procedure used to attribute recent climate change (here in 2010, in 2012 (about the AR4 statement), and again in 2013 after AR5 was released). For people who want a summary of what the attribution problem is, how we think about the human contributions and why the IPCC reaches the conclusions it does, read those posts instead of this one.

The bottom line is that multiple studies indicate with very strong confidence that human activity is the dominant component in the warming of the last 50 to 60 years, and that our best estimates are that pretty much all of the rise is anthropogenic.

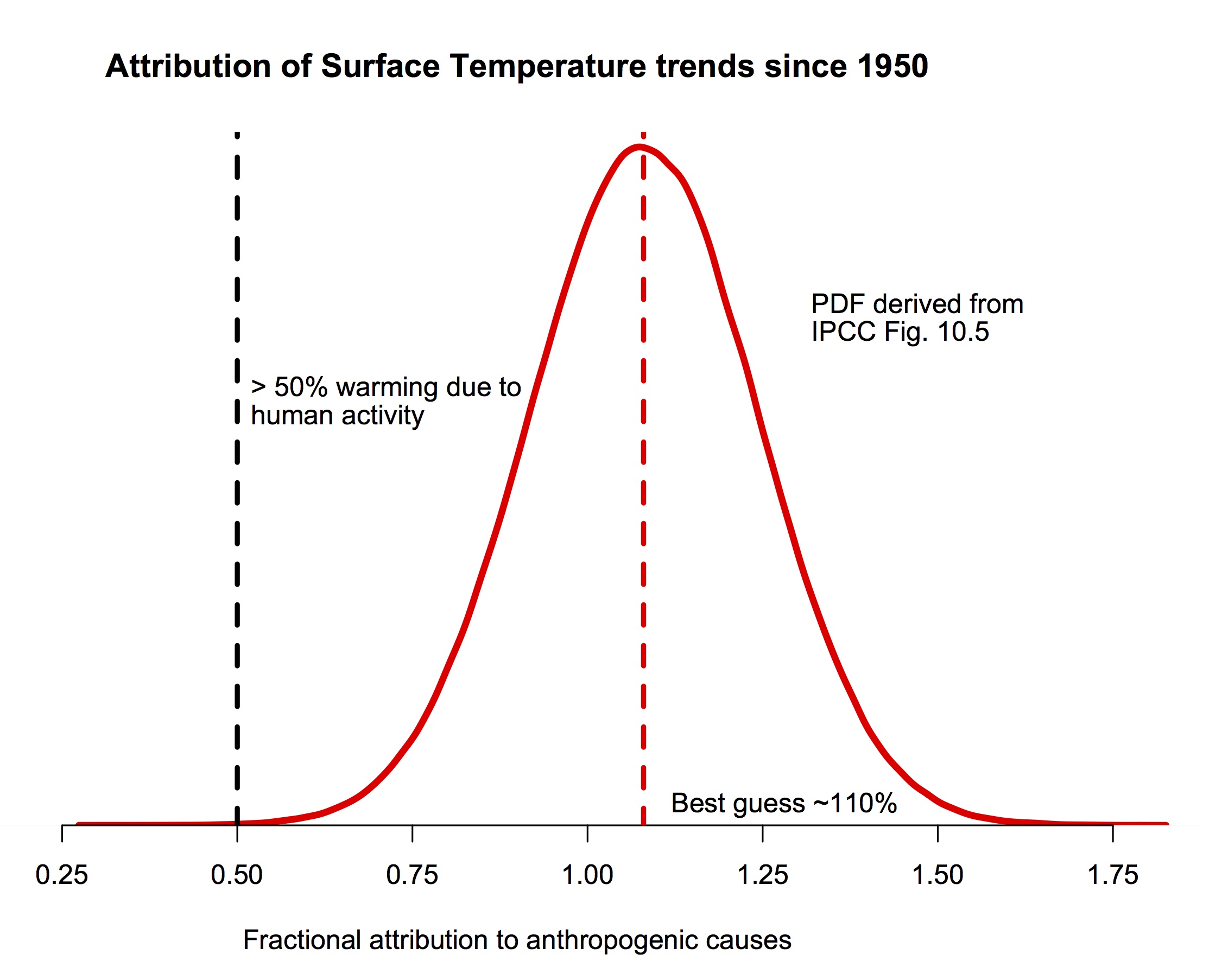

The probability density function for the fraction of warming attributable to human activity (derived from Fig. 10.5 in IPCC AR5). The bulk of the probability is far to the right of the “50%” line, and the peak is around 110%.

If you are still here, I should be clear that this post is focused on a specific claim Judith Curry has recently blogged about supporting a “50-50” attribution (i.e. that trends since the middle of the 20th Century are 50% human-caused, and 50% natural, a position that would center her pdf at 0.5 in the figure above). She also commented on her puzzlement about why other scientists don’t agree with her. Reading over her arguments in detail, I find very little to recommend them, and perhaps the reasoning for this will be interesting for readers. So, here follows a line-by-line commentary on her recent post. Please excuse the length.

Starting from the top… (note, quotes from Judith Curry’s blog are blockquoted).

Pick one:

a) Warming since 1950 is predominantly (more than 50%) caused by humans.

b) Warming since 1950 is predominantly caused by natural processes.

When faced with a choice between a) and b), I respond: ‘I can’t choose, since I think the most likely split between natural and anthropogenic causes to recent global warming is about 50-50’. Gavin thinks I’m ‘making things up’, so I promised yet another post on this topic.

This is not a good start. The statements that ended up in the IPCC SPMs are descriptions of what was found in the main chapters and in the papers they were assessing, not questions that were independently thought about and then answered. Thus while this dichotomy might represent Judith’s problem right now, it has nothing to do with what IPCC concluded. In addition, in framing this as a binary choice, it gives implicit (but invalid) support to the idea that each choice is equally likely. That this is invalid reasoning should be obvious by simply replacing 50% with any other value and noting that the half/half argument could be made independent of any data.

For background and context, see my previous 4 part series Overconfidence in the IPCC’s detection and attribution.

Framing

The IPCC’s AR5 attribution statement:

It is extremely likely that more than half of the observed increase in global average surface temperature from 1951 to 2010 was caused by the anthropogenic increase in greenhouse gas concentrations and other anthropogenic forcings together. The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

I’ve remarked on the ‘most’ (previous incarnation of ‘more than half’, equivalent in meaning) in my Uncertainty Monster paper:

Further, the attribution statement itself is at best imprecise and at worst ambiguous: what does “most” mean – 51% or 99%?

Whether it is 51% or 99% would seem to make a rather big difference regarding the policy response. It’s time for climate scientists to refine this range.

I am arguing here that the ‘choice’ regarding attribution shouldn’t be binary, and there should not be a break at 50%; rather we should consider the following terciles for the net anthropogenic contribution to warming since 1950:

- >66%

- 33-66%

- <33%

JC note: I removed the bounds at 100% and 0% as per a comment from Bart Verheggen.

Hence 50-50 refers to the tercile 33-66% (as the midpoint)

Here Judith makes the same mistake that I commented on in my 2012 post – assuming that a statement about where the bulk of the pdf lies is a statement about where its mean is and that it must be cut off at some value (whether it is 99% or 100%). Neither of those things follows. I will gloss over the completely unnecessary confusion of the meaning of the word ‘most’ (again thoroughly discussed in 2012). I will also not get into policy implications since the question itself is purely a scientific one.

The division into terciles for the analysis is not a problem though, and the weight of the pdf in each tercile can easily be calculated. Translating the top figure, the likelihood of the attribution of the 1950+ trend to anthropogenic forcings falling in each tercile (from lowest to highest) is 2×10⁻⁴%, 0.4% and 99.5% respectively.
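To make the tercile calculation concrete, here is a minimal sketch that integrates a Gaussian pdf over the three ranges. The mean and standard deviation used below are assumed, illustrative values rather than numbers read off the IPCC figure, so the output will not reproduce the percentages above exactly.

```python
import math

def normal_cdf(x, mean, sd):
    """Cumulative distribution function of a Gaussian."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))

def tercile_weights(mean, sd):
    """Probability mass below 33%, between 33% and 66%, and above 66%."""
    low = normal_cdf(1.0 / 3.0, mean, sd)
    mid = normal_cdf(2.0 / 3.0, mean, sd) - low
    high = 1.0 - low - mid
    return low, mid, high

# Assumed illustrative values (not taken from the IPCC figure):
# attributable fraction centred on 1.1 with a standard deviation of 0.17.
low, mid, high = tercile_weights(mean=1.1, sd=0.17)
print(f"<33%: {low:.2e}  33-66%: {mid:.2e}  >66%: {high:.4f}")
```

The point of the sketch is only that once the pdf is specified, the weight in each tercile follows mechanically. Nothing about the choice of bins changes where the probability sits.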

Note: I am referring only to a period of overall warming, so by definition the cooling argument is eliminated. Further, I am referring to the NET anthropogenic effect (greenhouse gases + aerosols + etc). I am looking to compare the relative magnitudes of net anthropogenic contribution with net natural contributions.

The two IPCC statements discussed attribution to greenhouse gases (in AR4) and to all anthropogenic forcings (in AR5) (the subtleties involved there are discussed in the 2013 post). I don’t know what she refers to as the ‘cooling argument’, since it is clear that the temperatures have indeed warmed since 1950 (the period referred to in the IPCC statements). It is worth pointing out that there can be no assumption that natural contributions must be positive – indeed for any random time period of any length, one would expect natural contributions to be cooling half the time.

Further, by global warming I refer explicitly to the historical record of global average surface temperatures. Other data sets such as ocean heat content, sea ice extent, whatever, are not sufficiently mature or long-range (see Climate data records: maturity matrix). Further, the surface temperature is most relevant to climate change impacts, since humans and land ecosystems live on the surface. I acknowledge that temperature variations can vary over the earth’s surface, and that heat can be stored/released by vertical processes in the atmosphere and ocean. But the key issue of societal relevance (not to mention the focus of IPCC detection and attribution arguments) is the realization of this heat on the Earth’s surface.

Fine with this.

IPCC

Before getting into my 50-50 argument, a brief review of the IPCC perspective on detection and attribution. For detection, see my post Overconfidence in IPCC’s detection and attribution. Part I.

Let me clarify the distinction between detection and attribution, as used by the IPCC. Detection refers to change above and beyond natural internal variability. Once a change is detected, attribution attempts to identify external drivers of the change.

The reasoning process used by the IPCC in assessing confidence in its attribution statement is described by this statement from the AR4:

“The approaches used in detection and attribution research described above cannot fully account for all uncertainties, and thus ultimately expert judgement is required to give a calibrated assessment of whether a specific cause is responsible for a given climate change. The assessment approach used in this chapter is to consider results from multiple studies using a variety of observational data sets, models, forcings and analysis techniques. The assessment based on these results typically takes into account the number of studies, the extent to which there is consensus among studies on the significance of detection results, the extent to which there is consensus on the consistency between the observed change and the change expected from forcing, the degree of consistency with other types of evidence, the extent to which known uncertainties are accounted for in and between studies, and whether there might be other physically plausible explanations for the given climate change. Having determined a particular likelihood assessment, this was then further downweighted to take into account any remaining uncertainties, such as, for example, structural uncertainties or a limited exploration of possible forcing histories of uncertain forcings. The overall assessment also considers whether several independent lines of evidence strengthen a result.” (IPCC AR4)

I won’t make a judgment here as to how ‘expert judgment’ and subjective ‘down weighting’ is different from ‘making things up’

Is expert judgement about the structural uncertainties in a statistical procedure associated with various assumptions that need to be made different from ‘making things up’? Actually, yes – it is.

AR5 Chapter 10 has a more extensive discussion on the philosophy and methodology of detection and attribution, but the general idea has not really changed from AR4.

In my previous post (related to the AR4), I asked the question: what was the original likelihood assessment from which this apparently minimal downweighting occurred? The AR5 provides an answer:

The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

So, I interpret this as saying that the IPCC’s best estimate is that 100% of the warming since 1950 is attributable to humans, and they then down weight this to ‘more than half’ to account for various uncertainties. And then assign an ‘extremely likely’ confidence level to all this.

Making things up, anyone?

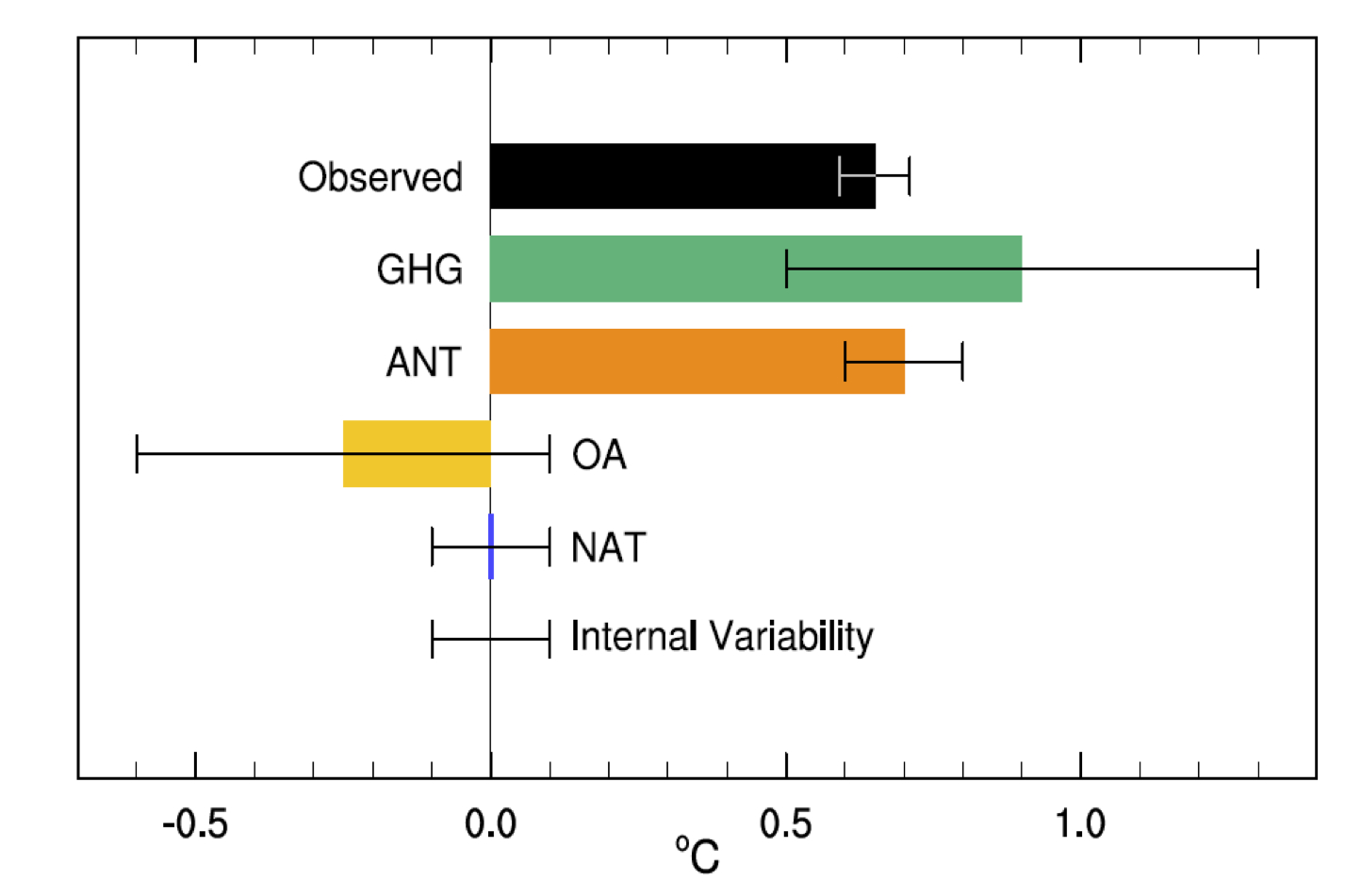

This is very confused. The basis of the AR5 calculation is summarised in figure 10.5:

The best estimate of the warming due to anthropogenic forcings (ANT) is the orange bar (noting the 1σ uncertainties). Reading off the graph, it is 0.7±0.2ºC (5-95%) with the observed warming 0.65±0.06ºC (5-95%). The attribution then follows as having a mean of ~110%, with a 5-95% range of 80–130%. This easily justifies the IPCC claims of having a mean near 100%, and a very low likelihood of the attribution being less than 50% (p < 0.0001!). Note there is no ‘downweighting’ of any argument here – both statements are true given the numerical distribution. However, there must be some expert judgement to assess what potential structural errors might exist in the procedure. For instance, the assumption that fingerprint patterns are linearly additive, or uncertainties in the pattern because of deficiencies in the forcings or models etc. In the absence of any reason to think that the attribution procedure is biased (and Judith offers none), structural uncertainties will only serve to expand the spread. Note that one would need to expand the uncertainties by a factor of 3 in both directions to contradict the first part of the IPCC statement. That seems unlikely in the absence of any demonstration of some huge missing factors.
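As a rough illustration of how an attributable fraction can be formed from two uncertain quantities, the sketch below samples the anthropogenic warming and the observed warming and takes their ratio. Treating both as independent Gaussians is an assumption made here for simplicity, not the procedure used in AR5, so the resulting range should only be expected to be in the same neighbourhood as the numbers quoted above.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

Z90 = 1.645  # half-width of a 5-95% Gaussian interval, in standard deviations

def sample_fraction(n=100_000):
    """Draw samples of ANT / observed warming, assuming independent Gaussians."""
    ant_sd = 0.2 / Z90    # from 0.7 +/- 0.2 (5-95%)
    obs_sd = 0.06 / Z90   # from 0.65 +/- 0.06 (5-95%)
    samples = []
    for _ in range(n):
        ant = random.gauss(0.7, ant_sd)
        obs = random.gauss(0.65, obs_sd)
        samples.append(ant / obs)
    return samples

fractions = sorted(sample_fraction())
mean_fraction = statistics.fmean(fractions)
p05 = fractions[int(0.05 * len(fractions))]
p95 = fractions[int(0.95 * len(fractions))]
print(f"mean {mean_fraction:.2f}, 5-95% range {p05:.2f} to {p95:.2f}")
```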

I’ve just reread Overconfidence in IPCC’s detection and attribution. Part IV, I recommend that anyone who seriously wants to understand this should read this previous post. It explains why I think the AR5 detection and attribution reasoning is flawed.

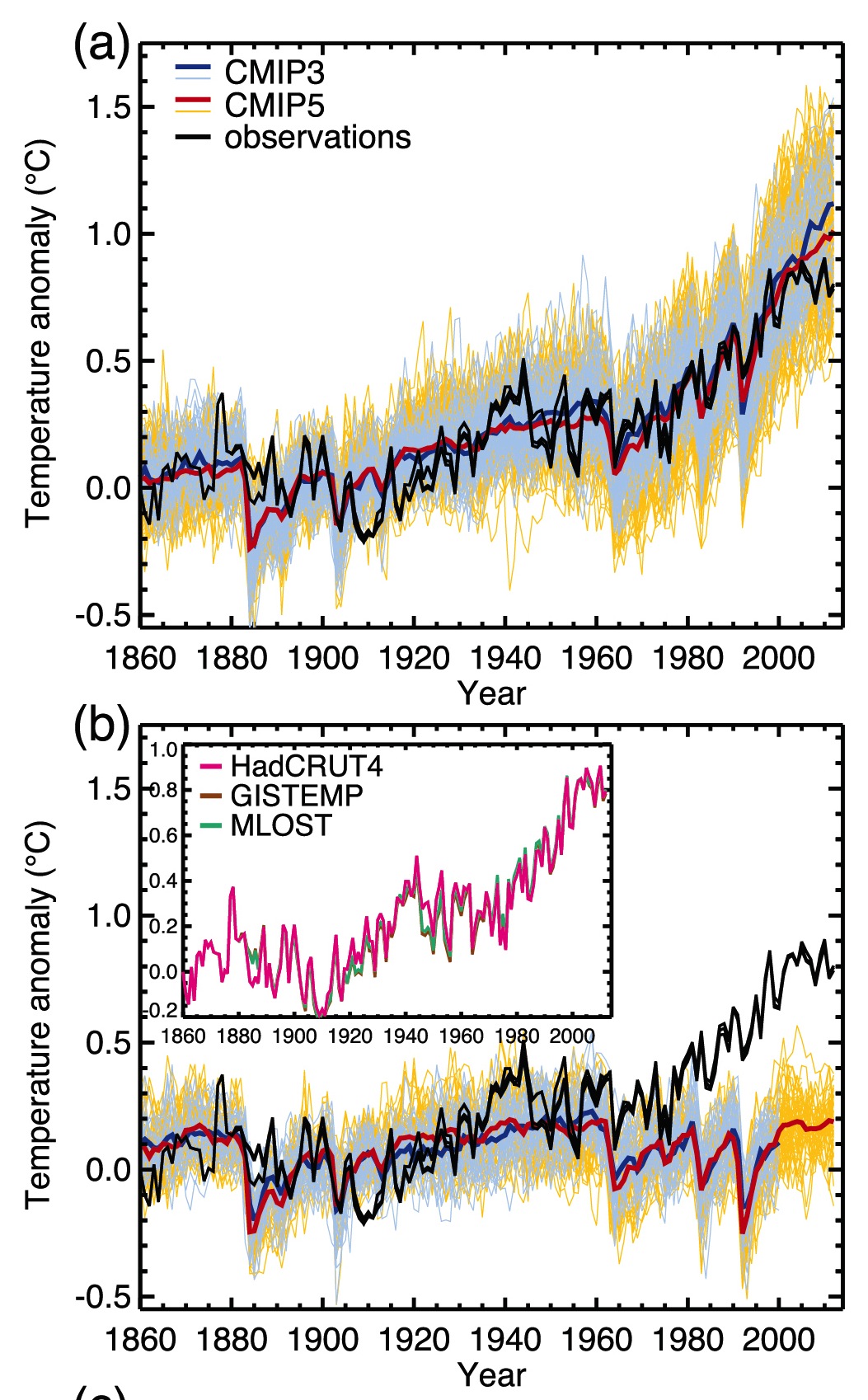

Of particular relevance to the 50-50 argument, the IPCC has failed to convincingly demonstrate ‘detection.’ Because historical records aren’t long enough and paleo reconstructions are not reliable, the climate models ‘detect’ AGW by comparing natural forcing simulations with anthropogenically forced simulations. When the spectra of the variability of the unforced simulations is compared with the observed spectra of variability, the AR4 simulations show insufficient variability at 40-100 yrs, whereas AR5 simulations show reasonable variability. The IPCC then regards the divergence between unforced and anthropogenically forced simulations after ~1980 as the heart of their detection and attribution argument. See Figure 10.1 from AR5 WGI (a) is with natural and anthropogenic forcing; (b) is without anthropogenic forcing:

This is also confused. “Detection” is (like attribution) a model-based exercise, starting from the idea that one can estimate the result of a counterfactual: what would the temperature have done in the absence of the drivers compared to what it would do if they were included? GCM results show clearly that the expected anthropogenic signal would start to be detectable (“come out of the noise”) sometime after 1980 (for reference, Hansen’s public statement to that effect was in 1988). There is no obvious discrepancy in spectra between the CMIP5 models and the observations, and so I am unclear why Judith finds the detection step lacking. It is interesting to note that given the variability in the models, the anthropogenic signal is now more than 5σ over what would have been expected naturally (and if it’s good enough for the Higgs Boson….).

Note in particular that the models fail to simulate the observed warming between 1910 and 1940.

Here Judith is (I think) referring to the mismatch between the ensemble mean (red) and the observations (black) in that period. But the red line is simply an estimate of the forced trends, so the correct reading of the graph would be that the models do not support an argument suggesting that all of the 1910-1940 excursion is forced (contingent on the forcing datasets that were used), which is what was stated in AR5. However, the observations are well within the spread of the models and so could easily be within the range of the forced trend + simulated internal variability. A quick analysis (a proper attribution study is more involved than this) gives an observed trend over 1910-1940 as 0.13 to 0.15ºC/decade (depending on the dataset, with ±0.03ºC (5-95%) uncertainty in the OLS), while the spread in my collation of the historical CMIP5 models is 0.07±0.07ºC/decade (5-95%). Specifically, 8 model runs out of 131 have trends over that period greater than 0.13ºC/decade – suggesting that one might see this magnitude of excursion 5-10% of the time. For reference, the GHG related trend in the GISS models over that period is about 0.06ºC/decade. However, the uncertainties in the forcings for that period are larger than in recent decades (in particular for the solar and aerosol-related emissions) and so the forced trend (0.07ºC/decade) could have been different in reality. And since we don’t have good ocean heat content data, nor any satellite observations, or any measurements of stratospheric temperatures to help distinguish potential errors in the forcing from internal variability, it is inevitable that there will be more uncertainty in the attribution for that period than for more recent periods.
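The kind of quick check described above can be sketched in a few lines: an ordinary least squares trend for a series, and the fraction of ensemble members whose trend exceeds a threshold. The function names and the synthetic series are hypothetical and purely for illustration; the actual model and observational data are not reproduced here.

```python
def ols_trend_per_decade(years, temps):
    """Ordinary least squares slope of temps against years, in degrees per decade."""
    n = len(years)
    mean_year = sum(years) / n
    mean_temp = sum(temps) / n
    cov = sum((y - mean_year) * (t - mean_temp) for y, t in zip(years, temps))
    var = sum((y - mean_year) ** 2 for y in years)
    return 10.0 * cov / var

def exceedance_fraction(trends, threshold):
    """Fraction of ensemble members whose trend is greater than the threshold."""
    return sum(t > threshold for t in trends) / len(trends)

# Synthetic check: a noise-free series warming at 0.014 degrees per year.
years = list(range(1910, 1941))
temps = [0.014 * (y - 1910) for y in years]
print(round(ols_trend_per_decade(years, temps), 3))

# The count quoted in the text: 8 of 131 runs above 0.13 degrees per decade.
print(f"{8 / 131:.1%}")
```

Eight runs out of 131 is about 6%, which is where the “5-10% of the time” statement comes from.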

The glaring flaw in their logic is this. If you are trying to attribute warming over a short period, e.g. since 1980, detection requires that you explicitly consider the phasing of multidecadal natural internal variability during that period (e.g. AMO, PDO), not just the spectra over a long time period. Attribution arguments of late 20th century warming have failed to pass the detection threshold which requires accounting for the phasing of the AMO and PDO. It is typically argued that these oscillations go up and down, in net they are a wash. Maybe, but they are NOT a wash when you are considering a period of the order, or shorter than, the multidecadal time scales associated with these oscillations.

Watch the pea under the thimble here. The IPCC statements were from a relatively long period (i.e. 1950 to 2005/2010). Judith jumps to assessing shorter trends (i.e. from 1980) and shorter periods obviously have the potential to have a higher component of internal variability. The whole point about looking at longer periods is that internal oscillations have a smaller contribution. Since she is arguing that the AMO/PDO have potentially multi-decadal periods, then she should be supportive of using multi-decadal periods (i.e. 50, 60 years or more) for the attribution.

Further, in the presence of multidecadal oscillations with a nominal 60-80 yr time scale, convincing attribution requires that you can attribute the variability for more than one 60-80 yr period, preferably back to the mid 19th century. Not being able to address the attribution of change in the early 20th century to my mind precludes any highly confident attribution of change in the late 20th century.

This isn’t quite right. Our expectation (from basic theory and models) is that the second half of the 20th C is when anthropogenic effects really took off. Restricting attribution to 120-160 yr trends seems too constraining – though there is no problem in looking at that too. However, Judith is actually assuming what remains to be determined. What is the evidence that all 60-80yr variability is natural? Variations in forcings (in particular aerosols, and maybe solar) can easily project onto this timescale and so any separation of forced vs. internal variability is really difficult based on statistical arguments alone (see also Mann et al, 2014). Indeed, it is the attribution exercise that helps you conclude what the magnitude of any internal oscillations might be. Note that if we were only looking at the global mean temperature, there would be quite a lot of wiggle room for different contributions. Looking deeper into different variables and spatial patterns is what allows for a more precise result.

The 50-50 argument

There are multiple lines of evidence supporting the 50-50 (middle tercile) attribution argument. Here are the major ones, to my mind.

Sensitivity

The 100% anthropogenic attribution from climate models is derived from climate models that have an average equilibrium climate sensitivity (ECS) around 3C. One of the major findings from AR5 WG1 was the divergence in ECS determined via climate models versus observations. This divergence led the AR5 to lower the likely bound on ECS to 1.5C (with ECS very unlikely to be below 1C).

Judith’s argument misstates how forcing fingerprints from GCMs are used in attribution studies. Notably, they are scaled to get the best fit to the observations (along with the other terms). If the models all had sensitivities of either 1ºC or 6ºC, the attribution to anthropogenic changes would be the same as long as the pattern of change was robust. What would change would be the scaling – less than one would imply a better fit with a lower sensitivity (or smaller forcing), and vice versa (see figure 10.4).
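A toy version of the scaling step may help here. In this sketch a single model fingerprint is fitted to the observations by least squares, and then the same fingerprint with double the amplitude (standing in for a more sensitive model) is fitted again. All numbers are hypothetical, and real attribution studies fit several patterns at once and account for internal variability, so this is only a one-pattern illustration of the idea.

```python
def scaling_factor(pattern, observations):
    """Least-squares scaling beta that minimises sum((obs - beta * pattern)^2)."""
    num = sum(p * o for p, o in zip(pattern, observations))
    den = sum(p * p for p in pattern)
    return num / den

def attributed_change(pattern, observations):
    """Return beta and the scaled fingerprint assigned to this forcing."""
    beta = scaling_factor(pattern, observations)
    return beta, [beta * p for p in pattern]

# Hypothetical numbers for illustration only.
observations = [0.05, 0.12, 0.28, 0.41, 0.66]
fingerprint = [0.0, 0.15, 0.30, 0.45, 0.60]       # model response pattern
hot_fingerprint = [2.0 * p for p in fingerprint]   # same pattern, double the amplitude

beta, attributed = attributed_change(fingerprint, observations)
beta_hot, attributed_hot = attributed_change(hot_fingerprint, observations)
print(beta, beta_hot)
```

Doubling the amplitude of the pattern halves the scaling factor and leaves the attributed change untouched, which is why the attribution does not depend on the sensitivity of the models that supplied the pattern.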

She also misstates how ECS is constrained – all constraints come from observations (whether from long-term paleo-climate observations, transient observations over the 20th Century or observations of emergent properties that correlate to sensitivity) combined with some sort of model. The divergence in AR5 was between constraints based on the transient observations using simplified energy balance models (EBM), and everything else. Subsequent work (for instance by Drew Shindell) has shown that the simplified EBMs are missing important transient effects associated with aerosols, and so the divergence is very likely less than AR5 assessed.

Nic Lewis at Climate Dialogue summarizes the observational evidence for ECS between 1.5 and 2C, with transient climate response (TCR) around 1.3C.

Nic Lewis has a comment at BishopHill on this:

The press release for the new study states: “Rapid warming in the last two and a half decades of the 20th century, they proposed in an earlier study, was roughly half due to global warming and half to the natural Atlantic Ocean cycle that kept more heat near the surface.” If only half the warming over 1976-2000 (linear trend 0.18°C/decade) was indeed anthropogenic, and the IPCC AR5 best estimate of the change in anthropogenic forcing over that period (linear trend 0.33Wm-2/decade) is accurate, then the transient climate response (TCR) would be little over 1°C. That is probably going too far, but the 1.3-1.4°C estimate in my and Marcel Crok’s report A Sensitive Matter is certainly supported by Chen and Tung’s findings.

Since the CMIP5 models used by the IPCC on average adequately reproduce observed global warming in the last two and a half decades of the 20th century without any contribution from multidecadal ocean variability, it follows that those models (whose mean TCR is slightly over 1.8°C) must be substantially too sensitive.

BTW, the longer term anthropogenic warming trends (50, 75 and 100 year) to 2011, after removing the solar, ENSO, volcanic and AMO signals given in Fig. 5 B of Tung’s earlier study (freely accessible via the link), of respectively 0.083, 0.078 and 0.068°C/decade also support low TCR values (varying from 0.91°C to 1.37°C), upon dividing by the linear trends exhibited by the IPCC AR5 best estimate time series for anthropogenic forcing. My own work gives TCR estimates towards the upper end of that range, still far below the average for CMIP5 models.
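For readers who want to follow the arithmetic in the quoted comment, the “little over 1°C” figure can be reproduced as below. The forcing for doubled CO2, $F_{2\times}$, is not given in the quote; the value of about 3.7 W/m² used here is an assumption (it is the commonly used figure), and half of the 0.18°C/decade trend is 0.09°C/decade.

$$
\mathrm{TCR} \approx F_{2\times}\,\frac{\Delta T/\Delta t}{\Delta F/\Delta t}
= 3.7\ \mathrm{W\,m^{-2}} \times \frac{0.09\ ^{\circ}\mathrm{C/decade}}{0.33\ \mathrm{W\,m^{-2}/decade}}
\approx 1.0\ ^{\circ}\mathrm{C}
$$

This only checks the internal consistency of the quoted numbers; it says nothing about whether the premise (that half the 1976-2000 trend was natural) is correct.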

If true climate sensitivity is only 50-65% of the magnitude that is being simulated by climate models, then it is not unreasonable to infer that attribution of late 20th century warming is not 100% caused by anthropogenic factors, and attribution to anthropogenic forcing is in the middle tercile (50-50).

The IPCC’s attribution statement does not seem logically consistent with the uncertainty in climate sensitivity.

This is related to a paper by Tung and Zhou (2013). Note that the attribution statement has again shifted to the last 25 years of the 20th Century (1976-2000). There are a couple of major problems with this argument though. First of all, Tung and Zhou assumed that all multi-decadal variability was associated with the Atlantic Multi-decadal Oscillation (AMO) and did not assess whether anthropogenic forcings could project onto this variability. It is circular reasoning to then use this paper to conclude that all multi-decadal variability is associated with the AMO.

The second problem is more serious. Lewis’ argument up until now has been that the best fit to the transient evolution over the 20th Century is with a relatively small sensitivity and small aerosol forcing (as opposed to a larger sensitivity and larger opposing aerosol forcing). However, in both these cases the attribution of the long-term trend to the combined anthropogenic effects is actually the same (near 100%). Indeed, one valid criticism of the recent papers on transient constraints is precisely that the simple models used do not have sufficient decadal variability!

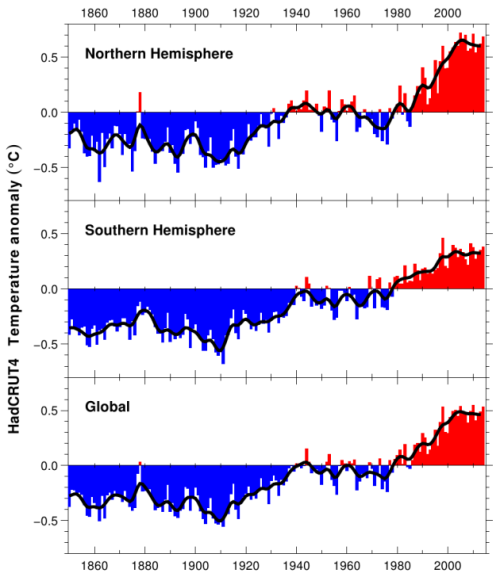

Climate variability since 1900

From HadCRUT4:

The IPCC does not have a convincing explanation for:

- warming from 1910-1940

- cooling from 1940-1975

- hiatus from 1998 to present

The IPCC purports to have a highly confident explanation for the warming since 1950, but it was only during the period 1976-2000 when the global surface temperatures actually increased.

The absence of convincing attribution of periods other than 1976-present to anthropogenic forcing leaves natural climate variability as the cause – some combination of solar (including solar indirect effects), uncertain volcanic forcing, natural internal (intrinsic variability) and possible unknown unknowns.

This point is not an argument for any particular attribution level. As is well known, using an argument of total ignorance to assume that the choice between two arbitrary alternatives must be 50/50 is a fallacy.

Attribution for any particular period follows exactly the same methodology as any other. What IPCC chooses to highlight is of course up to the authors, but there is nothing preventing an assessment of any of these periods. In general, the shorter the time period, the greater potential for internal variability, or (equivalently) the larger the forced signal needs to be in order to be detected. For instance, Pinatubo was a big rapid signal so that was detectable even in just a few years of data.

I gave a basic attribution for the 1910-1940 period above. The 1940-1975 average trend in the CMIP5 ensemble is -0.01ºC/decade (range -0.2 to 0.1ºC/decade), compared to -0.003 to -0.03ºC/decade in the observations, and is therefore a reasonable fit. The GHG driven trends for this period are ~0.1ºC/decade, implying that there is a roughly opposite forcing coming from aerosols and volcanoes in the ensemble. The situation post-1998 is a little different because of the CMIP5 design, and ongoing reevaluations of recent forcings (Schmidt et al, 2014; Huber and Knutti, 2014). Better information about ocean heat content is also available to help there, but this is still a work in progress and is a great example of why it is harder to attribute changes over small time periods.

In the GCMs, the importance of internal variability to the trend decreases as a function of time. For 30 year trends, internal variations can have a ±0.12ºC/decade or so impact on trends, for 60 year trends, closer to ±0.08ºC/decade. For an expected anthropogenic trend of around 0.2ºC/decade, the signal will be clearer over the longer term. Thus cutting the analysis down to ever-shorter periods increases the challenges and one can end up simply cherry picking the noise instead of seeing the signal.
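The dependence on record length can be illustrated with synthetic noise. The sketch below generates many realisations of AR(1) “internal variability” with no forced signal at all and measures how widely the fitted trends scatter for 30 and 60 year windows. The AR(1) form and its parameters are assumptions chosen for illustration; GCM variability has a different spectrum, so the numbers are not expected to match the GCM values quoted above.

```python
import random
import statistics

def ar1_series(n, phi, sigma, rng):
    """Generate n years of AR(1) noise: x[t] = phi * x[t-1] + white noise."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, sigma)
        out.append(x)
    return out

def trend_per_decade(series):
    """OLS slope of an annual series, expressed per decade."""
    n = len(series)
    t_mean = (n - 1) / 2.0
    y_mean = sum(series) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    var = sum((t - t_mean) ** 2 for t in range(n))
    return 10.0 * cov / var

def trend_spread(n_years, n_runs=2000, phi=0.6, sigma=0.1, seed=1):
    """Standard deviation of noise-only trends across many synthetic runs."""
    rng = random.Random(seed)
    trends = [trend_per_decade(ar1_series(n_years, phi, sigma, rng))
              for _ in range(n_runs)]
    return statistics.pstdev(trends)

spread_30 = trend_spread(30)
spread_60 = trend_spread(60)
print(f"30-year spread: {spread_30:.3f}, 60-year spread: {spread_60:.3f} (deg C/decade)")
```

Whatever noise model is used, the qualitative result is the same: the longer the window, the smaller the trend that noise alone can produce, and the easier it is to see a forced signal of a given size.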

A key issue in attribution studies is to provide an answer to the question: When did anthropogenic global warming begin? As per the IPCC’s own analyses, significant warming didn’t begin until 1950. Just the Facts has a good post on this When did anthropogenic global warming begin?

I disagree as to whether this is a “key” issue for attribution studies, but as to when anthropogenic warming began, the answer is actually quite simple – when we started altering the atmosphere and land surface at climatically relevant scales. For the CO2 increase from deforestation this goes back millennia, for fossil fuel CO2, since the invention of the steam engine at least. In both cases there was a big uptick in the 18th Century. Perhaps that isn’t what Judith is getting at though. If she means when was it easily detectable, I discussed that above and the answer is sometime in the early 1980s.

The temperature record since 1900 is often characterized as a staircase, with periods of warming sequentially followed by periods of stasis/cooling. The stadium wave and Chen and Tung papers, among others, are consistent with the idea that the multidecadal oscillations, when superimposed on an overall warming trend, can account for the overall staircase pattern.

Nobody has any problems with the idea that multi-decadal internal variability might be important. The problem with many studies on this topic is the assumption that all multi-decadal variability is internal. This is very much an open question.

Let’s consider the 21st century hiatus. The continued forcing from CO2 over this period is substantial, not to mention ‘warming in the pipeline’ from late 20th century increase in CO2. To counter the expected warming from current forcing and the pipeline requires natural variability to effectively be of the same magnitude as the anthropogenic forcing. This is the rationale that Tung used to justify his 50-50 attribution (see also Tung and Zhou). The natural variability contribution may not be solely due to internal/intrinsic variability, and there is much speculation related to solar activity. There are also arguments related to aerosol forcing, which I personally find unconvincing (the topic of a future post).

Shorter time-periods are noisier. There are more possible influences of an appropriate magnitude and, for the recent period, continued (and very frustrating) uncertainties in aerosol effects. This has very little to do with the attribution for longer-time periods though (since change of forcing is much larger and impacts of internal variability smaller).

The IPCC notes overall warming since 1880. In particular, the period 1910-1940 is a period of warming that is comparable in duration and magnitude to the warming 1976-2000. Any anthropogenic forcing of that warming is very small (see Figure 10.1 above). The timing of the early 20th century warming is consistent with the AMO/PDO (e.g. the stadium wave; also noted by Tung and Zhou). The big unanswered question is: Why is the period 1940-1970 significantly warmer than say 1880-1910? Is it the sun? Is it a longer period ocean oscillation? Could the same processes causing the early 20th century warming be contributing to the late 20th century warming?

If we were just looking at 30 year periods in isolation, it’s inevitable that there will be these ambiguities because data quality degrades quickly back in time. But that is exactly why IPCC looks at longer periods.

Not only don’t we know the answer to these questions, but no one even seems to be asking them!

This is simply not true.

Attribution

I am arguing that climate models are not fit for the purpose of detection and attribution of climate change on decadal to multidecadal timescales. Figure 10.1 speaks for itself in this regard (see figure 11.25 for a zoom in on the recent hiatus). By ‘fit for purpose’, I am prepared to settle for getting an answer that falls in the right tercile.

Given the results above it would require a huge source of error to move the bulk of that probability anywhere else other than the right tercile.

The main relevant deficiencies of climate models are:

- climate sensitivity that appears to be too high, probably associated with problems in the fast thermodynamic feedbacks (water vapor, lapse rate, clouds)

- failure to simulate the correct network of multidecadal oscillations and their correct phasing

- substantial uncertainties in aerosol indirect effects

- unknown and uncertain solar indirect effects

The sensitivity argument is irrelevant (given that it isn’t zero of course). Simulation of the exact phasing of multi-decadal internal oscillations in a free-running GCM is impossible so that is a tough bar to reach! There are indeed uncertainties in aerosol forcing (not just the indirect effects) and, especially in the earlier part of the 20th Century, uncertainties in solar trends and impacts. Indeed, there is even uncertainty in volcanic forcing. However, none of these issues really affect the attribution argument because a) differences in magnitude of forcing over time are assessed by way of the scaling factors in the attribution process, and b) errors in the spatial pattern will end up in the residuals, which are not large enough to change the overall assessment.

Nonetheless, it is worth thinking about what impact plausible variations in the aerosol or solar effects could have. Given that we are talking about the net anthropogenic effect, the playing off of negative aerosol forcing and climate sensitivity within bounds actually has very little effect on the attribution, so that isn’t particularly relevant. A much bigger role for solar would have an impact, but the trend would need to be about 5 times stronger over the relevant period to change the IPCC statement and I am not aware of any evidence to support this (and much that doesn’t).

So, how to sort this out and do a more realistic job of detecting climate change and attributing it to natural variability versus anthropogenic forcing? Observationally based methods and simple models have been underutilized in this regard. Of great importance is to consider uncertainties in external forcing in context of attribution uncertainties.

It is inconsistent to talk in one breath about the importance of aerosol indirect effects and solar indirect effects and then state that ‘simple models’ are going to do the trick. Both of these issues relate to microphysical effects and atmospheric chemistry – neither of which are accounted for in simple models.

The logic of reasoning about climate uncertainty, is not at all straightforward, as discussed in my paper Reasoning about climate uncertainty.

So, am I ‘making things up’? Seems to me that I am applying straightforward logic. Which IMO has been disturbingly absent in attribution arguments, that use climate models that aren’t fit for purpose, use circular reasoning in detection, fail to assess the impact of forcing uncertainties on the attribution, and are heavily spiced by expert judgment and subjective downweighting.

My reading of the evidence suggests clearly that the IPCC conclusions are an accurate assessment of the issue. I have tried to follow the proposed logic of Judith’s points here, but unfortunately each one of these arguments is either based on a misunderstanding, an unfamiliarity with what is actually being done or is a red herring associated with shorter-term variability. If Judith is interested in why her arguments are not convincing to others, perhaps this can give her some clues.

References

- M.E. Mann, B.A. Steinman, and S.K. Miller, "On forced temperature changes, internal variability, and the AMO", Geophysical Research Letters, vol. 41, pp. 3211-3219, 2014. http://dx.doi.org/10.1002/2014GL059233

- K. Tung and J. Zhou, "Using data to attribute episodes of warming and cooling in instrumental records", Proceedings of the National Academy of Sciences, vol. 110, pp. 2058-2063, 2013. http://dx.doi.org/10.1073/pnas.1212471110

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

- M. Huber and R. Knutti, "Natural variability, radiative forcing and climate response in the recent hiatus reconciled", Nature Geoscience, vol. 7, pp. 651-656, 2014. http://dx.doi.org/10.1038/ngeo2228

[Response: Nothing like the actuality – more like 5 W/m2 absolute level uncertainties. – gavin]

While this is clearly so – it is clearly not relevant to changes in TOA flux. I did explicitly discuss the difference between absolutes and anomalies.

‘This paper highlights how the emerging record of satellite observations from the Earth Observation System (EOS) and A-Train constellation are advancing our ability to more completely document and understand the underlying processes associated with variations in the Earth’s top-of-atmosphere (TOA) radiation budget. Large-scale TOA radiation changes during the past decade are observed to be within 0.5 Wm-2 per decade based upon comparisons between Clouds and the Earth’s Radiant Energy System (CERES) instruments aboard Terra and Aqua and other instruments…

[Response: This statement is simply not true as you are interpreting it. The CERES fluxes do not have that level of absolute precision. The EBAF product has been tuned to have a ~0.8 W/m2 imbalance to match estimates of the heat imbalance from ocean heat content estimates and modeling. From the Allan poster you cite below: “CERES cannot measure the absolute net radiation to sufficient accuracy for quantifying the magnitude of the net radiation imbalance.” – gavin]

With the availability of multiple years of data from new and improved passive instruments launched as part of the Earth Observing System (EOS) and active instruments belonging to the A-Train constellation (L’Ecuyer and Jiang 2010), a more complete observational record of ERB variations and the underlying processes is now possible. For the first time, simultaneous global observations of the ERB and a multitude of cloud, aerosol, and surface properties and atmospheric state data are available with a high degree of precision.’

http://meteora.ucsd.edu/~jnorris/reprints/Loeb_et_al_ISSI_Surv_Geophys_2012.pdf

When we are looking at changes in the system – anomalies provide information as to the source of change.

[Response: Only if you know the baseline. – gavin]

‘Satellite measurements from CERES have provided a stable record of changes in the radiation balance since 2000 (e.g. see Fig. 4).

CERES cannot measure the absolute net radiation to sufficient accuracy for quantifying the magnitude of the net radiation imbalance…

From this summary of recent work – http://www.nceo.ac.uk/posters/2011_1climate_Richard_ALLAN_reading.pdf

A net radiative flux anomalies uncertainty of ±0.31 Wm-2 was estimated.

[Response: This is derived from the OHC changes. – gavin]

This is fair comment – and I am sure I made a similar comment yesterday – although the routine and disconcerting disappearance of comments into some spam box or other – only to reappear – makes it difficult to be absolutely sure.

The Allan et al poster – http://www.nceo.ac.uk/posters/2011_1climate_Richard_ALLAN_reading.pdf – and the paper it is based on – shows consistency between ocean heat and SORCE and CERES – even without an absolute value for radiative imbalance.

[Response: You have no idea what you are talking about. CERES products *use* SORCE data as the incoming solar flux. – gavin]

It is not that difficult – if the net flux anomalies trend is positive the planet is warming by definition. Last decade the decrease in flux out exceeded the decline in flux in from the Sun – giving some warming.

[Response: The CERES net flux data are not absolutely calibrated, therefore can average basically any number between -5 and 5 W/m2. ]

The cause of that warming was a decrease in reflected shortwave.

https://watertechbyrie.files.wordpress.com/2014/06/ceres-bams-2008-with-trend-lines1.gif.

As of 2014 the net trend is effectively zero.

http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_net_flux-all-sky_march-2000toapril-2014.png

148 MARodger: I hope during that interval he learns a bit about words and how they mean something on their own, and that when you use words you should select them so as to match the meaning you are wishing to convey.

One of the topics on my perpetually growing reading list will be the changing energetics of the changing hydrological cycle. I hope that by next year there is more quantitative information. Next on my reading list will be the book referenced above on thermodynamics of clouds.

Rob Ellison – ‘It is not that difficult – if the net flux anomalies trend is positive the planet is warming by definition.’

If the absolute net flux remained negative throughout but with an upward trend the planet would be cooling, just at a gradually slower rate. A positive net flux in absolute numbers is what’s needed for the planet to be warming. Trend would just tend to indicate a change in the rate of warming/cooling.
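The distinction between the trend and the absolute level can be made concrete with a small Python sketch. The flux series below is purely hypothetical (it is not CERES data), and the Earth surface area of 5.1e14 m² is an approximate value assumed for the illustration.

```python
SECONDS_PER_YEAR = 365.25 * 86400
EARTH_AREA_M2 = 5.1e14  # approximate surface area of the Earth (assumed)

def heat_content_change_zj(net_flux_wm2_by_year):
    """Cumulative heat content change (ZJ) from annual-mean absolute net TOA flux."""
    total = 0.0
    series = []
    for flux in net_flux_wm2_by_year:
        total += flux * EARTH_AREA_M2 * SECONDS_PER_YEAR / 1e21
        series.append(total)
    return series

# Hypothetical case: net flux climbs from -1.0 to -0.1 W/m2 over ten years,
# i.e. a positive trend in a quantity that stays negative throughout.
fluxes = [-1.0 + 0.1 * i for i in range(10)]
heat = heat_content_change_zj(fluxes)

for year, (flux, total) in enumerate(zip(fluxes, heat)):
    print(f"year {year}: net flux {flux:+.1f} W/m2, cumulative change {total:+.1f} ZJ")
```

The cumulative heat content falls every year even though the flux anomaly trend is upward; only the rate of loss slows. Shifting the whole series by an unknown constant offset, which is what an uncalibrated absolute level amounts to, could turn the same trend into steady heat gain.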

Looking at models, the one member per model CMIP5 mean at Climate Explorer gives a negative TOA flux (rsdt-(rsut+rlut)) trend for the 2000-2009 period and a probably insignificant positive trend for 2000-2014.

[Response: This statement is simply not true as you are interpreting it. The CERES fluxes do not have that level of absolute precision. The EBAF product has been tuned to have a ~0.8 W/m2 imbalance to match estimates of the heat imbalance from ocean heat content estimates and modeling. From the Allan poster you cite below: “CERES cannot measure the absolute net radiation to sufficient accuracy for quantifying the magnitude of the net radiation imbalance.” – gavin]

I did quote the statement from Allan et al – and mentioned the problem several times. Put this aside – one product reports in absolute – if uncertain – terms and one as anomalies. There is no direct comparison – and it is the calibration problem that is insurmountable – as I believe I said.

CERES and SORCE are different products of course – so I don’t understand your other point that I don’t understand what I am talking about because CERES uses SORCE?

d(W&H)/dt = energy in – energy out : all in Joules/s

W&H is work and heat.

An increase in ocean heat would suggest that the radiative imbalance is positive (i.e. d(W&H)/dt>0).

e.g. http://watertechbyrie.files.wordpress.com/2014/06/vonschuckmannampltroan2011-fig5pg_zpsee63b772.jpg

What contributes to that is the change in incoming energy measured by SORCE – relatively minor at the surface but negative last decade at least in the Argo period.

http://lasp.colorado.edu/data/sorce/total_solar_irradiance_plots/images/tim_level3_tsi_24hour_640x480.png

So that leaves changes in outgoing energy to explain the warming – simples. Absolute radiative imbalance is not required – and it is the precision of radiative changes that is significant.

http://watertechbyrie.files.wordpress.com/2014/06/ceres-bams-2008-with-trend-lines1.gif

How is this not obvious?

@4 Sep 2014 at 4:25 PM:

You mean d(W&H)/dt = power in – power out, right? Or do you mean Δ(W&H) = energy in – energy out?

Rob Ellison,

I have to say I have no idea at this point what it is you believe is obvious. As an attempt to draw out what you’re actually saying, I’ll refer back to a quote from one of your first comments:

‘A steric sea level rise of 0.2mm +/-0.8mm/year?

Which can be found at – http://www.tos.org/oceanography/archive/24-2_leuliette.html

Which has the merit of being consistent with CERES net.

http://watertechbyrie.files.wordpress.com/2014/06/ceres_ebaf-toa_ed2-8_anom_toa_net_flux-all-sky_march-2000toapril-2014.png ‘

————————

If you understand the point about error in the absolute flux from CERES why do you believe it shows consistency with a steric SLR of 0.2mm/yr? Steric SLR will (mostly, aside from the halosteric component) reflect the absolute TOA imbalance, not the trend in the TOA imbalance. Technically it could be considered consistent, but so could a steric trend of 2mm/yr. The uncertainty in the absolute flux makes such a statement meaningless.

Marler said:

Is that the Curry book on clouds? I took a look at that text and commented on Curry’s site in the link below, in particular concerning the author’s boneheaded misapplication of Bose-Einstein statistics to condensation and nucleation of water:

http://judithcurry.com/2014/09/04/thermodynamics-kinetics-and-microphysics-of-clouds/#comment-624613

The nitpicking ankle-biting defenders such as Marler came out in droves but they were eventually not able to defend her claims. Amazingly she had no relevant citations for her Bose-Einstein assertion!

Anyone notice the huge contradiction between an author (Curry) that declares that all of climate science is bound by uncertainty, yet in Curry’s own research, the physics is stated by assertion, with zero uncertainty implied? How convenient….

‘d(W&H)/dt = energy in – energy out : all in Joules/s

You mean d(W&H)/dt = power in – power out, right? Or do you mean Δ(W&H) = energy in – energy out?’

A Joule/s is obviously a Watt. A Watt.s is obviously a Joule. The units are W.s/s = J/s. It seems more satisfactory to talk energy but there is no essential difference.

Paul S confuses the lack of absolutes with trend – which are changes in radiant flux in a period.

‘The overall slight rise (relative heating) of global total net flux at TOA between the 1980’s and 1990’s is confirmed in the tropics by the ERBS measurements and exceeds the estimated climate forcing changes (greenhouse gases and aerosols) for this period. The most obvious explanation is the associated changes in cloudiness during this period.’ http://isccp.giss.nasa.gov/projects/browse_fc.html

An increasing trend in net flux is a relative warming by definition. If ocean heat is increasing there is presumably an absolute increase in warming and we can look at SW and IR – as well as TSI – to see how the system is changing.

As far as the webbly is concerned – no one is suggesting that bosons or fermions play any role in real world droplet nucleation rates in a supersaturated atmosphere. The suggestion from the text is that nucleation rates obey Boltzmann statistics generally but in certain conditions of high surfactant loads and low temperatures the governing rates have Bose-Einstein statistics.

Without knowing more about the derivation and the assumptions – it is impossible to say anything sensible. Nor is this a significant point in the microphysics of clouds. It is merely on webbly’s part tedious, repetitive and misguided nitpicking for no obvious sensible or rational purpose.

[Response: Pot, meet kettle. -gavin]

> Bose-Einstein condensation

I wondered if that was just a misnomer, a bad translation of a Russian original, but I gather the book uses the wrong math in the text? So it appears from your comment: “These particles don’t have integer spin, despite what you say in the book.”

Is that the Russian author’s work? Was that in any of the “jointly published 23 journal articles” mentioned in the intro, where a reviewer or subsequent citing author could have picked it up? Or does the “new research that has previously not been published” describe the Bose-Einstein condensation idea? Has anyone subsequently shown any consequence of that assumption in citing papers?

The intro makes it sound like that book overthrows 20th Century cloud physics.

To uncover Bose-Einstein statistics in particles with mass outside of a laboratory environment (e.g. supercooled Helium) is certainly novel. At least I have never run into it.

Curry and co-author include no citation to previous work, nor is there any experimental evidence to back their claim up.

Their claim is extraordinary and if true they should think about submitting their theory to a prestigious physics journal such as Physical Review Letters.

Hank,

Water particles do have integer spin, I got that part wrong. But what did not pass the sanity check for me was that it is very rare for mass particle systems to exhibit properties where the full Bose-Einstein statistics come into play. Usually exp(E/kT) is much larger than 1, which means one just sticks to applying Maxwell-Boltzmann statistics, but then they assert the other extreme can occur as well. It is hard to believe that cloud droplet nucleation covers that range in activation energy, E, from much greater than kT to much lower than kT.
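The point about exp(E/kT) can be checked numerically. The sketch below compares the textbook Bose-Einstein mean occupancy with the Maxwell-Boltzmann factor as a function of x = E/kT. Taking the chemical potential as zero is an assumption made purely for illustration, and nothing here is taken from the book under discussion.

```python
import math

def bose_einstein(x):
    """Bose-Einstein mean occupancy 1/(exp(x) - 1), with x = E/kT (zero chemical potential assumed)."""
    return 1.0 / math.expm1(x)

def maxwell_boltzmann(x):
    """Maxwell-Boltzmann factor exp(-x), with x = E/kT."""
    return math.exp(-x)

for x in (0.1, 1.0, 5.0, 10.0):
    ratio = bose_einstein(x) / maxwell_boltzmann(x)
    print(f"E/kT = {x:5.1f}: BE/MB ratio = {ratio:.4f}")
```

The ratio is 1/(1 - exp(-E/kT)), so it tends to 1 once E is several times kT and the two distributions become indistinguishable. They only part company when E is comparable to or smaller than kT.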

And no citations to back any of this up.

Following on from my whittering @150, a quick(ish) trudge through the crowd of Curry’s Uncertainty Elves before pointing out the way to work round them.

Perhaps it is best to kick off with Curry’s March 2014 APS presentation. The slides prepared for her presentation (The actual slides used and presentation transcript are here.) conclude with very strong unambiguous accusations against the APS 2007 AGW statement. (No sign of Uncertainty Elves here!!) “Such statements don’t meet the norms of responsible advocacy.” Also “Institutionalizing consensus can slow down scientific progress and pervert the self-correcting mechanisms of science.” Within the slides, the basis for such accusations is apparently Curry’s “View emphasizing natural variation” which is apparently contrary to the finding of IPCC AR5 (and that is also her “existential threat to the mainstream theory”).

Central within this emphasis of natural variation (although not exclusively so and perhaps not even principally so within the presentation transcript) is the role of multi-decadal modes of natural internal variation which include AMO & PDO which Curry for some reason asserts “are superimposed on the anthropogenic warming trend, and should be included in attribution studies and future projections.” There is also Synchronised Coupled Climate Shifts that are “hypothesised” and Wyatt’s Stadium Wave Hypothesis with its quasi-periodic 50-80 year tempo. WSWH is described as providing a “plausible explanation for the hiatus in warming” and perhaps the others are seen in the same vein. Their role in the 20th century temperature wobble (which peaked in 1940) is not evident from Curry’s presentation but talk elsewhere (eg her 50:50 blog) strongly implies a global climate role for Big Natural Oscillation that is as important as AGW’s role through the second half of the 20th century.

However, beyond curve-fitting and the argument that AMO PDO SCCS WSWH could in some way explain (apparently) the ‘hiatus’ and that they therefore also predict a continuing ‘hiatus’ (which does continue still), there is no explanation to drive away the crowd of Uncertainty Elves that continues to gather. Why and how are AMO PDO SCCS and WSWH the favoured plausible set of ‘hiatus’ explanations?

Indeed this queer quartet are not the only such explanations provided by Curry. A separate explanation (given a whole slide of its own in the prepared set) is ENSO as argued by Kosaka & Xie (2013), a thesis which concurs with the MLR work of Foster & Rahmstorf (2011). These works consider the well-established impact of ENSO on global temperature, but ENSO is apparently dismissed by Curry as a candidate explanation for the “hiatus”. And this ignorance is perhaps the start-point for debunking her Big Natural Oscillation (BNO) hypothesis.

Curry apparently considers that singly or in some combination, AMO PDO SCCS and WSWH contributed big time to the 20th century temperature wobble (WSWH apparently taking pride of place), thus taking the role of BNO.

Yet ENSO also waggles global temperature and if you plot out a multi-year average of ENSO (MYA-ENSO) you get a wobble identical in timing to PDO (see graph here). Now, PDO is a major element in the WSWH thesis, while prior to WSWH it was Curry’s favourite for BNO. Yet the sole argument for PDO as BNO (its phase shifts) also supports MYA-ENSO as BNO. MYA-ENSO shifts at exactly the same time so is just as convincing as PDO, and additionally MYA-ENSO actually has a physical basis. Indeed, Curry’s presentation says “ENSO doesn’t just produce interannual variability, but also variability on decadal-plus timescales,” that is as MYA-ENSO.

The problem Curry has with MYA-ENSO is that, unlike AMO PDO SCCS or WSWH, ENSO’s impact can be quantified and it can be shown to be far too weak to act as Curry’s BNO, to power those past “multidecadal swings of the global temperature.” So why is PDO any different to ENSO? Or why AMO, SCCS or WSWH?

But to shake off that throng of Uncertainty Elves, it is worth asking a slightly different question – If ENSO is not strong enough to be Curry’s BNO, how big does BNO actually need to be?

To be continued…

MA Rodger

Excellent analysis. Look forward to your next installment, if it hasn’t been posted as I write this.

well, the warming from 1910 to 1940 Looks exactly the same, as from 1970 to 2000.

The first one must be mostly natural.

The second warming Period could be some where anthropogenic.

If you show the IPCC Temperature Line from 1900 to 2010 without some greenhouse forcing, this shows almost a flat line with a width of +/-0,1K.

A stable climate for more than 100y and in this Funny Glamour some one here believe.

LOL

Continuing from my blather @163

If ENSO is far too weedy to act as Curry’s Big Natural Oscillation (BNO) (as demonstrated in Zhou & Tung 2013 Fig1a), how big does BNO actually need to be? (Note that Zhou & Tung fail to ask this or any other question before embarking on their little curve-fitting expedition.)

A fair description of something representing Curry’s BNO would presumably have an amplitude half the size of the 1950-to-date global temperature rise. The average ΔT between the first and last half-decades of this period using an average of HadCRUT(C&W), GISS & NCDC yields a rise of 0.62°C so BNO will be 0.31°C peak-to-peak (ie +/- 0.155°C). As a reality check, this is almost the full size of the average detrended wobble from these three temperature records (0.33°C). A sinusoidal profile and a period of 60 years can also be fairly assumed with peaks occurring in, say, AD1940 and AD2000 (de-trended it’s more exactly 1941 & 2004).
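For readers who want to play with these numbers, the assumed oscillation can be written down in a few lines of Python. The function and constant names are made up for this sketch; the values (0.62°C rise, 60-year period, peak in 2000) are the ones stated above.

```python
import math

RISE_SINCE_1950_K = 0.62              # average rise from the three records, as quoted above
PEAK_TO_PEAK_K = RISE_SINCE_1950_K / 2   # a "50-50" split gives BNO half of the rise
AMPLITUDE_K = PEAK_TO_PEAK_K / 2      # +/- 0.155 K about equilibrium
PERIOD_YEARS = 60.0
PEAK_YEAR = 2000.0

def bno_anomaly(year):
    """Temperature anomaly (K) of the assumed sinusoidal BNO in a given year."""
    return AMPLITUDE_K * math.cos(2 * math.pi * (year - PEAK_YEAR) / PERIOD_YEARS)

for year in (1940, 1955, 1970, 1985, 2000, 2015):
    print(f"{year}: {bno_anomaly(year):+.3f} K")
```

With these choices the oscillation peaks in 1940 and 2000, bottoms out in 1970, and crosses equilibrium in 1955, 1985 and 2015, which is the timing used in the rest of this comment.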

It is also fair to adopt ECS=1.5°C as Curry appears to strongly advocate the notion that worldwide AGW mitigation is not an urgent requirement. (An ECS=2.0°C puts us six decades away from generating forcings capable of dangerous warming (+2.0°C) from CO2 forcing alone, assuming present CO2 emissions were to remain constant (when the rate has actually been continually increasing). Yet a significant reduction of CO2 emissions is a multi-decade task. Thus to fail to support urgent AGW mitigation, such support that Curry derides by saying they “don’t meet the norms of responsible advocacy,” can only mean ECS is effectively considered to be significantly lower than 2.0°C.)

BNO can be visualised as operating in a number of different theoretical modes. One such mode is that heat from the climate is stored in some form away from (and thus not interacting with) the general climate system. Energy would accumulate in storage during the cooling/cool periods of BNO and then this store would be expended both to realise and also to maintain the warm periods. Let us style this ‘storage’ version of BNO as BNO(S).

To achieve such a cycle, BNO(S) must at a minimum warm the surface and atmosphere of the planet by a total of 0.31°C during 1970-99, which would require more than perhaps 20 ZJ during the warming phase, equally divided between the first half and the last half of this 1970-99 period. (This 20 ZJ is a guesstimate and assumed to be a low estimate: two decades’ worth of recent warming (~0.155°C/decade) forced by say 0.6Wm^-2 with 10% warming the atmosphere and immediate surface. Higher estimates would alter the timing of the overall storage requirement, not the size of the storage requirement.)
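The 20 ZJ guesstimate can be reproduced with a short calculation. The Earth surface area of 5.1e14 m² is an assumed round value that does not appear in the comment; the other inputs (0.6 W/m², two decades, 10%) are the ones quoted.

```python
SECONDS_PER_YEAR = 365.25 * 86400
EARTH_AREA_M2 = 5.1e14        # approximate surface area of the Earth (assumed)

forcing_wm2 = 0.6             # imbalance quoted in the comment
years = 20                    # "two decades-worth of recent warming"
surface_fraction = 0.10       # share going into the atmosphere and immediate surface

total_energy_zj = forcing_wm2 * EARTH_AREA_M2 * years * SECONDS_PER_YEAR / 1e21
warming_energy_zj = surface_fraction * total_energy_zj

print(f"total energy over {years} years: {total_energy_zj:.0f} ZJ")
print(f"share warming surface and atmosphere: {warming_energy_zj:.1f} ZJ")
```

Under these assumptions the surface and atmosphere share comes out a little under 20 ZJ, consistent with the rounded figure used above.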

And having achieved these +/-0.155°C changes in temperature, BNO(S) will require further energy fluxes. When temperature is above the equilibrium level, BNO(S) will require additional surface energy to maintain the global temperature, and when temperature is below the equilibrium level it will need to store energy away. The average temperature above equilibrium during the 30 year warm phase will be +2/π x 0.155°C, which with ECS=1.5°C results in BNO(S) requiring 120ZJ from storage to maintain the 1985-2014 warm phase and the accumulating storage of an identical amount during the cool phases 1955-84 and 2015-44.
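One way to arrive at a figure close to 120 ZJ is sketched below. It assumes a forcing of 3.7 W/m² per CO2 doubling and an Earth surface area of 5.1e14 m²; neither number is stated in the comment, so this is a plausible reconstruction of the arithmetic and may not be the exact route taken.

```python
import math

SECONDS_PER_YEAR = 365.25 * 86400
EARTH_AREA_M2 = 5.1e14       # approximate surface area of the Earth (assumed)
FORCING_2XCO2_WM2 = 3.7      # forcing per CO2 doubling (assumed, not in the comment)

ecs_k = 1.5                  # equilibrium climate sensitivity adopted above
amplitude_k = 0.155          # BNO amplitude about equilibrium
warm_phase_years = 30

# Extra outgoing radiation per kelvin of warming implied by the chosen ECS.
feedback_wm2_per_k = FORCING_2XCO2_WM2 / ecs_k

# Mean of a half sine wave is 2/pi times its amplitude.
mean_anomaly_k = 2 / math.pi * amplitude_k
mean_flux_wm2 = feedback_wm2_per_k * mean_anomaly_k

energy_zj = mean_flux_wm2 * EARTH_AREA_M2 * warm_phase_years * SECONDS_PER_YEAR / 1e21

print(f"mean anomaly in warm phase: {mean_anomaly_k:.3f} K")
print(f"mean flux needed:           {mean_flux_wm2:.2f} W/m2")
print(f"energy over warm phase:     {energy_zj:.0f} ZJ")
```

With these inputs the result lands within a few percent of 120 ZJ. A lower assumed sensitivity raises the figure, because more radiation escapes per degree of excess warmth.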

Thus overall BNO(S) will require 1970-84 additional storage of +50 ZJ, then 1985-1999 removal of -70 ZJ from storage and 2000-14 removal of -50 ZJ from storage. Storage would accumulate +70ZJ 2015-2029, completing the cycle.
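The quarter-cycle figures can be reproduced by combining the two energy terms already introduced: 120 ZJ per 30-year phase to hold temperature away from equilibrium, and 20 ZJ to warm the surface and atmosphere. The bookkeeping below is one reading that reproduces the quoted numbers; the sign convention (positive means energy going into storage) and the even split across quarter cycles are assumptions of this sketch.

```python
MAINTENANCE_PER_PHASE_ZJ = 120   # energy per 30-year warm or cool phase
WARMING_ENERGY_ZJ = 20           # energy to warm surface/atmosphere over 1970-99

# Each 15-year quarter cycle: is temperature below or above equilibrium,
# and is it warming or cooling?
QUARTERS = [
    ("1970-84", "below", "warming"),
    ("1985-99", "above", "warming"),
    ("2000-14", "above", "cooling"),
    ("2015-29", "below", "cooling"),
]

def storage_change_zj(level, tendency):
    """Net energy added to storage (ZJ) during one quarter cycle."""
    # Below equilibrium the surface radiates less, so the surplus is stored;
    # above equilibrium the extra loss is met from storage.
    maintain = MAINTENANCE_PER_PHASE_ZJ / 2 if level == "below" else -MAINTENANCE_PER_PHASE_ZJ / 2
    # Warming draws heat out of storage; cooling returns it.
    heat = -WARMING_ENERGY_ZJ / 2 if tendency == "warming" else WARMING_ENERGY_ZJ / 2
    return maintain + heat

schedule = [(label, storage_change_zj(level, tendency)) for label, level, tendency in QUARTERS]
for label, change in schedule:
    print(f"{label}: {change:+.0f} ZJ")
```

The four quarters sum to zero, as they must for a closed cycle, and the largest swing in the store between its high and low points is 120 ZJ.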

Are such levels of storage feasible given our knowledge of planet Earth?

One place where such energy could be stored is within the oceans. In her APS presentation Curry presents Figure 1 of Balmaseda et al (2013) (Paper PDF and Fig 1 web-graph) as her favourite record of OHC. This graphic suggests the following changes in OHC 0-2000m over the various time periods of the proposed BNO(S) cycle, with the final 5 years-to-2014 values taken from Levitus 0-2000m.

ΔOHC ignoring volcanic cooling episodes.

1958-69 -30(+/-25) ZJ

1970-84 +40(+/-10) ZJ

1985-99 +50 ZJ

2000-14 +200 ZJ

ΔOHC subtracting volcanic cooling episodes.

1958-69 0(+/-25) ZJ

1970-84 +70(+/-10) ZJ

1985-99 +100 ZJ

2000-14 +200 ZJ

Energy flows into storage required by BNO(S)

1955-69 ?+70 ZJ

1970-84 +50 ZJ

1985-99 -70 ZJ

2000-14 -50 ZJ

It can be plainly seen that the ΔOHC 0-2000m record cannot sensibly be attributed to any BNO(S) storage. For BNO(S) alone in its last quarter cycle 2000-14 (for which we have the best OHC data) the ΔOHC 0-2000m record is 4x larger and of opposite sign (contradicting the assumption of BNO=AGW by suggesting AGW is 5x bigger in magnitude than BNO(S) for this period, if BNO(S) did exist using 0-2000m ocean storage).
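The "4x" and "5x" figures follow directly from the 2000-14 rows of the lists above. A minimal check, using only those two quoted values (the variable names are invented for the sketch):

```python
observed_ohc_change_zj = 200      # ΔOHC 0-2000m, 2000-14, from the lists above
bno_storage_change_zj = -50       # flow into storage required by BNO(S), 2000-14

# How much larger is the observed change than the BNO(S) requirement?
size_ratio = abs(observed_ohc_change_zj) / abs(bno_storage_change_zj)
opposite_sign = (observed_ohc_change_zj > 0) != (bno_storage_change_zj > 0)

# If BNO(S) really drew 50 ZJ out of the 0-2000m ocean, something else must
# have supplied the observed gain plus that withdrawal.
implied_other_zj = observed_ohc_change_zj - bno_storage_change_zj
implied_ratio = implied_other_zj / abs(bno_storage_change_zj)

print(f"observed change is {size_ratio:.0f}x the BNO(S) requirement, opposite sign: {opposite_sign}")
print(f"implied non-BNO contribution: {implied_other_zj} ZJ, {implied_ratio:.0f}x BNO(S)")
```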

Any storage changes of the required size below 2000m would surely provide a detectable reduction in SLR measurements (contributing -15mm 1970-99, +7.5mm 2000-2014, +0.05mmyr^-2 1985-2014). Further, studies addressing global OHC below 2000m (reviewed in IPCC AR5 Section 3.2.4) find not a sign of net cooling of the size required by BNO(S).

Thus, assuming there has been no decimal point slippage or some such within the above proof, it can be concluded that BNO(S) cannot operate by way of thermal storage within the oceans.

Of course, there are other means by which BNO(S) could store 120 ZJ. These will be considered next….

You miss Judith’s comment on the climate model’s inability to track early 1900s warming and 1945 onwards cooling.

If the models, with ALL the information can not track, then how can you conceive they could track future temps?

The simple answer is they can not. You should admit this, and from that point you can begin to be honest in your assessment.

I think you know this already. I read a trite kind of viewpoint from you, which said the models are trying to go from the very small, to the very large effects, and that’s hard to do. So you know it, but you have to use your tricks and games because you think you are right, with your huge ego.

But planet earth is talking, and it says “YOU ARE WRONG.”

Maybe you can help me with something I’ve never understood. Why on Earth would you think that the failure of the models would benefit your side of the argument?

Independent of all the models, we know with 100% certainty that CO2 is a greenhouse gas.

Independent of all the models, we know that adding CO2 to the atmosphere warms the planet.

Independent of all the models, we know that the planet is about 33 degrees warmer solely as a result of the greenhouse effect.

You contend that the models lack skill for hindcasting the warming due in the early portion of the last century and the lack of warming during the 1945-1975 period. Perhaps you are unaware of the progress that has been made in attribution during these periods to a combination of lower volcanism, increased insolation and increased greenhouse warming in the former period and to increased aerosols from fossil fuel burning in the latter. (That would be expected if you get your climate news from Aunt Judy.)

However, even if you were correct, the failures in the models argue for HIGHER climate sensitivity rather than lower. How on Earth do you come to the conclusion that this bolsters the argument of you and other smug, complacent ignoramuses favoring inaction?

Here’s a clue, Ed. Science doesn’t work like that. If you want to show we are wrong, then come up with a better model–one that explains current and past trends and allows all of us to give a big sigh of relief. Feel free to tell this to Aunt Judy, as she appears to have forgotten how science works, as well.

The planet and all of science is talking, and it says “You are an imbecile.”

“But planet earth is talking, and it says “YOU ARE WRONG.””

Er, no, it’s not.

“Why on Earth would you think that the failure of the models would benefit your side of the argument?”

You don’t know what I think. Here it is. CO2 is a greenhouse gas. That was an AHA moment in the understanding of earth’s climate system. Add more heat, as to a pot, and it gets hot. But in my view earth’s climate system is extraordinarily complicated, with non-linear interactions.

As a child, when scientists were puzzling out ice age beginnings, the idea was that extra heat could cause it. The idea was that the arctic did not freeze over, leading to more water uptake, and massive snows that decreased albedo. It’s all possible.

Regarding the inflammatory parts of my post, I’m frankly tired of activists turning off air-conditioners to trick people. I’m tired of “Hide the decline.” I’m tired of every weather event being tied to global warming. Too much snow? Global warming. Too little snow? Global warming.

The only difference between you and me is I don’t know. But neither do you.

So after all that with OHC @166, where to look for the energy store to power Judith Curry’s BNO(S)?

The Earth’s core is quite an energetic place that could easily hide 120ZJ but the problem is that it is rather well insulated which dampens fluctuations out from its cooling process. That cooling process is also rather small.

Now measuring the heat content of the Earth’s core to spot the 120ZJ storage would be a bit of an ask. The radiogenic and primordial heat pool totals approximately 12,600,000,000ZJ. So it’s better to compare the peak +/-6ZJ pa energy flow required by BNO(S) with the actual measured flow through the crust from the core which according to Davies & Davies (2010) (PDF) totals only 1.47ZJ pa with zero waggles. Indeed, it is such a steady flow of heat coming out that some have used borehole temperature gradients to try to produce a surface temperature record over past centuries (eg Huang et al 2000 or Beltrami et al 2011). There simply cannot then be a BNO(S) powered from this route.
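The comparison between the peak flow BNO(S) needs and the measured geothermal flow can be sketched as follows. As before, the 3.7 W/m² per CO2 doubling and the 5.1e14 m² surface area are assumptions introduced for the illustration; the 1.47 ZJ per year and the 0.155°C amplitude are the values quoted in these comments.

```python
SECONDS_PER_YEAR = 365.25 * 86400
EARTH_AREA_M2 = 5.1e14         # approximate surface area of the Earth (assumed)
FORCING_2XCO2_WM2 = 3.7        # forcing per CO2 doubling (assumed)

ecs_k = 1.5
amplitude_k = 0.155
geothermal_zj_per_year = 1.47  # crustal heat flow quoted from Davies & Davies (2010)

# Peak flux needed to hold temperature 0.155 K away from equilibrium.
peak_flux_wm2 = FORCING_2XCO2_WM2 / ecs_k * amplitude_k
peak_flow_zj_per_year = peak_flux_wm2 * EARTH_AREA_M2 * SECONDS_PER_YEAR / 1e21

# The quoted geothermal flow expressed as a power and as a mean surface flux.
geothermal_tw = geothermal_zj_per_year * 1e21 / SECONDS_PER_YEAR / 1e12
geothermal_flux_wm2 = geothermal_tw * 1e12 / EARTH_AREA_M2

print(f"peak BNO(S) flow: {peak_flow_zj_per_year:.1f} ZJ/yr ({peak_flux_wm2:.2f} W/m2)")
print(f"geothermal flow:  {geothermal_zj_per_year} ZJ/yr ({geothermal_tw:.0f} TW, {geothermal_flux_wm2:.2f} W/m2)")
print(f"ratio:            {peak_flow_zj_per_year / geothermal_zj_per_year:.1f}")
```

On these numbers the required peak flow is roughly four times the entire steady heat flow out of the Earth, and it would have to reverse sign every 30 years.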

Latent heat allows energy storage without temperature change. But freezing ice to release 120ZJ to power the last 30 years of BNO(S) would lower sea level by a rather noticeable one metre if it were land ice being frozen. Of course, sea ice wouldn’t have that difficulty but there is only 5,000 cu km of summer sea ice left, it having been melting away for a few decades now when BNO(S) requires not melting but the freezing of 360,000 cu km.

And the other sort of latent heat, a decrease in atmospheric water vapour is also the stuff of fantasy requiring a change of 50,000 cu km when the atmosphere only contains (and only can contain) ~13,000 cu km without crazy temperature increases.
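Both latent-heat estimates can be checked with standard physical constants. The latent heats, water density and ocean area below are approximate textbook values assumed for this sketch, and volumes are expressed as liquid-water equivalent.

```python
ENERGY_J = 120e21                  # the 120 ZJ store required by BNO(S)

LATENT_HEAT_FUSION_J_PER_KG = 3.34e5        # water/ice, approximate
LATENT_HEAT_VAPORISATION_J_PER_KG = 2.5e6   # water/vapour, approximate
WATER_DENSITY_KG_PER_M3 = 1000.0
OCEAN_AREA_M2 = 3.61e14                     # approximate global ocean area

# Freezing: mass of water that must freeze to release the energy.
ice_mass_kg = ENERGY_J / LATENT_HEAT_FUSION_J_PER_KG
ice_volume_km3 = ice_mass_kg / WATER_DENSITY_KG_PER_M3 / 1e9
sea_level_drop_m = ice_mass_kg / WATER_DENSITY_KG_PER_M3 / OCEAN_AREA_M2

# Condensation: mass of vapour that must condense to release the same energy.
vapour_mass_kg = ENERGY_J / LATENT_HEAT_VAPORISATION_J_PER_KG
vapour_volume_km3 = vapour_mass_kg / WATER_DENSITY_KG_PER_M3 / 1e9

print(f"water to freeze:   {ice_volume_km3:,.0f} cu km")
print(f"sea level drop:    {sea_level_drop_m:.2f} m if frozen onto land")
print(f"vapour to condense: {vapour_volume_km3:,.0f} cu km of liquid water")
```

These reproduce the roughly 360,000 cu km, one metre and 50,000 cu km figures quoted in the two paragraphs above.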

Estimates of kinetic energy and available potential energy within the oceans yield values far too small for our needs with variability even smaller. So where else to look?

Chemical energy might work well to provide a multi-decadal storage cycle with biology the converting mechanism except again there is no evident cycling of such a storage capacity to fit the bill. That such cycling should be plain to see is suggested by human deforestation which leaves a pretty noticeable scar across the globe but yields just 0.08ZJ pa (along with a whole lot of emitted CO2) and no sign of it being a full multi-decadal cycle either.

Variations in the speed of the earth’s spin in the form of length of day may fire the imaginations of curve-fitters with graphs like this from Dickey et al (2011) matching Length of Day against global temperature shorn of AGW. But the actual changes in spin, while having the sort of ups and downs being sought for BNO(S) only provide energy storage for 3% of BNO(S) energy requirements and most of that is forced by non-climatic effects.

So I’m running out of ideas here. I don’t know. Speculating, perhaps Huang (2005) could have missed some of those exotic phase shifts that deep high-pressure water experiences. Perhaps the electrical or magnetic effects Curry mentioned and was quizzed about in her APS presentation (p158 before Christy & Lindzen dived in and changed the subject to cosmic rays and clouds) are what hold the key to the missing heat storage. And it should be an encouragement that the 120ZJ store is so elusive. When it is found, if it is ever found, fat chance, with its 60 year cycling, its very rarity will make it more credible.

In the meantime the BNO(S) hypothesis has gained the attributes of what in Probability Theory is technically called a dead duck. (Thus it may also be possible to class it a Black Anatidae.) It would be useful to examine which assumptions need to be changed to breathe a little life into BNO(S) but then by doing so we would transform it from the BNO(S) of Judith Curry into something different.

So it is more appropriate to first consider other theoretical modes of BNO to see if one of them maybe can hold just a little shred of credibility….

ed barbar wrote: “I’m tired of every weather event being tied to global warming.”

You’d better move to another planet, then.

Because THIS planet is globally warmed. Which means that ALL the weather on this planet, all the time, is affected by global warming in some way.

> Curry … APS presentation

also discussed at http://rabett.blogspot.com/2014/02/like-lambs-to-slaughter.html

From the transcript, starting at 159:

Ed Barbar:

Ed, where do you get the idea that anyone is turning off air-conditioners to trick people? What makes you think “hide the decline” is anything but an AGW-denier dog whistle? You may call yourself a skeptic, but it appears you’ve un-skeptically taken to heart what you’ve read on denier blogs.

You may not know, but that doesn’t mean nobody does. A genuine skeptic recognizes that on complex topics like climate, there may be actual experts who know more than he does, and that some experts are more credible than others. If you’re sincerely interested in the scientific case for AGW, you can’t really do better than to start with this free 36-page booklet published jointly by the US National Academy of Sciences and the Royal Society of the UK: Climate Change: Evidence and Causes.

It’s written for educated laypersons, and represents the combined expertise of two of the world’s most respected scientific societies. That’s not to say the NAS and the RS can’t be mistaken, but for anyone who isn’t himself an expert in climate science, they’re a better bet than some guy on a blog. If you don’t trust them, you have no reason to trust anyone else either.

Ed Barbar: “You don’t know what I think.”

Well, whose fault is that, Ed? Anyway, thanks for telling us what you are tired of. Want to know what I’m tired of? I’m tired of scientific illiterates pretending to understand complicated physical systems and throwing around words like “complexity” and “nonlinear” as if they were talismans to put the fear of dragons into us.

So, basically, you (and Aunt Judy) are saying, “Oh, woe is me. It’s all too complicated.” Well, I’m sorry it’s too complicated for you to understand, but if it’s all the same to you, the real scientists would like to get on with the process of understanding climate. You see, they aren’t scared by complexity or nonlinearity. They know how to deal with it. Now, maybe they’ll be wrong, but they aren’t afraid of that either, because they know their colleagues will correct them.

There are worse things than being wrong, Ed. Bullshitting is one of them. Bullshit is uncorrectable. It lives forever in its same worse than useless form. It remains “not even wrong” forever.

So, you are tired of the theatre. Fine. Read the damned science. It’s unequivocal

[edit]

Mal, Ed’s blown up the one rather famous occasion when “turning off the air conditioners” happened. The story has grown in the rebunking. You can look it up:

And to correct that, rather than “turning off” the Senator simply overloaded the air conditioning; this is the exact quote:

You know how this tool works:

MARogers, methinks you’re looking too hard.

The first question to ask when looking for a BNO is:

“Why have these only started oscillating in the last century or so? Where is the recording of BNO’s for the last thousand years”

Because like the hockey stick or not, it’s a fact that temperature variations today far exceed those of recent history. If one truly wants to posit BNO’s for today’s oscillations one really has to show where they were the last few millennia.

MA Rodger and Hank Roberts mention the APS transcript:

Curry really wants to be the first should her crazy theory of Bose-Einstein statistics on cloud nucleation pan out. This is what her co-author said on her blog.

That’s not science, it’s more like buying lottery tickets.

Thanks Hank, it’s hard to keep up with proliferating AGW-denier memes (“No matter how cynical you become, it’s never enough to keep up.” -Lily Tomlin). Many of them may have some kernel of reality at their center, with layer upon layer of distortion accreted around them.

Unfortunately, one suspects that a 2×4 upside the head would only provoke response in kind from determined deniers.

David Miller @178.

While the hockey stick is very strong evidence as you describe (and as I also described on a different thread recently), I would consider it a step too far to argue that it is compelling evidence for AGW on its own. It is compelling, but that’s because there is a whole lot of inter-supporting evidence of which the hockey stick is but a small yet important part.

As for my whitterings here, seeking extra evidence, for or against, is always useful.

So you can look forward to my next installment which considers if there are signs of what I term BNO(R), a radiative-powered BNO. (I note the text editor is having fun with interpreting (R.).)

I am hoping my thought experiments here are of greater merit than the “expert presentation” of Dr Curry at the APS which certainly operates at a level of technical intricacy that is beyond my abilities to grasp.

The following extract (p134) is an exemplar of that.

And on the subject of the hockey stick and what it demonstrates, be advised that the ever-incisive analysis of Dr Curry when applied to Mann et al 1998 led her to be “misled” by all that ‘hide-the-decline’ nonsense.

Also no sign of any change in her 2011 view of the scientific implications. That was that once the ‘decline’ and sampling issues are factored in, Dr Curry and her Uncertainty Elves on Climateetcia believe we should question whether global millennial temperature reconstructions in fact “make any sense at all.” Myself, I’d reckon it would cost the lives of a lot of her Uncertainty Elves defending such a position and assume Dr Curry is of the opinion she won’t have to.

Does this complicated argument boil down to “the anthropogenic increase in CO2 would increase temperatures more than measured so other forcings and natural variation must be negative”? Is that it?

[Response: No. If that was the case, the scaling on the model ANT pattern would be substantially less than one. The other forcings would have unphysical scalings if the sign was wrong in the forcing datasets. – gavin]

Having dismissed the possibility of Judith Curry’s BNO existing as BNO(S) above @171, another theoretical method for BNO is now considered.

BNO could result from radiative forcing created somehow by internal variation within the climate system. Would such radiative forcing be evident given what we know about planet Earth? Can a radiative version of BNO exist?

Let us term this hypothesis BNO(R). (Note that a BNO(R)-like waggle may be the result of external forcing, but if some necessary evidence is absent for BNO(R) it cannot exist whatever the cause.)

.

The Earth’s climate is a large system and it takes time to react to forcings. Thus a short-sharp 0.31°C wobble in global temperature requires a lot more force than a slower 0.31°C wobble. One consequence of this reaction time provides BNO(R) with a potential signature. If the length of BNO(R) cycle shortens while the amplitude of the forcing remains constant, it will result in a smaller amplitude of the temperature response, and if the cycle lengthens it will increase in amplitude of the temperature wobble.
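The period dependence described above can be sketched with the simplest possible stand-in: a single-time-constant ("one-box") response, for which the amplitude of the response to a sinusoidal forcing falls as the period shortens. This is an illustrative assumption, not the spreadsheet used in this comment. The function name `gain` and the constant `TAU` are hypothetical, and `TAU` is chosen only to give 50% of equilibrium after 10 years (a single exponential then reaches 87.5% at 30 years, not the 75% assumed in these comments, so the numbers are indicative at best).

```python
import math

def gain(period_yr, tau_yr):
    """Fraction of the equilibrium response reached by a one-box system
    (dT/dt = (F - T) / tau) driven by a sine-wave forcing of the given period."""
    omega = 2 * math.pi / period_yr
    return 1 / math.sqrt(1 + (omega * tau_yr) ** 2)

# Assumed time constant: a single exponential that reaches 50% after 10 years.
TAU = 10 / math.log(2)

for period in (30, 60, 120):
    # Shorter cycles leave less time to respond, so the gain is smaller.
    print(period, round(gain(period, TAU), 2))
```

For a fixed forcing amplitude, the temperature wobble scales with this gain, so halving the cycle length shrinks the wobble and doubling it enlarges the wobble.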

(The following back-of-the-envelope calculations make the assumption that 50% of equilibrium is completed within 10 years and 75% of equilibrium completed within 30 years.)

For a 60-year cycle, if the forcing appeared very quickly, effectively as a step change, this sudden appearance would give the climate all of the 30-year half-cycle of BNO(R) to react, 30-years to create the 0.31°C change in temperature. At this point the forcing is quickly removed to restore the original temperature over the following 30 years to thus complete the oscillation. If equilibrium temperature were actually achieved, the forcing would require to be 0.76 Wm^-2 for ECS=1.5°C but for BNO(R) at 75% equilibrium over 30 years, the forcing will need to be larger, perhaps ~50% larger due to the 60-year period being too short to achieve equilibrium. Thus BNO(R) could then be created by a square wave forcing of perhaps ~1.15Wm^-2 peak-to-peak.
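The arithmetic behind those two figures can be checked in a few lines. The sketch assumes a forcing of 3.7 Wm^-2 per doubling of CO2, a commonly used value that is not stated in this comment, and takes the "~50% larger" allowance at face value. The names `F_2X` and `equilibrium_forcing` are hypothetical.

```python
F_2X = 3.7  # W/m^2 per CO2 doubling: assumed standard value, not given in the comment

def equilibrium_forcing(delta_t, ecs, f_2x=F_2X):
    """Forcing (W/m^2) needed to produce delta_t (deg C) at full equilibrium,
    for an equilibrium climate sensitivity ecs (deg C per CO2 doubling)."""
    return delta_t * f_2x / ecs

eq = equilibrium_forcing(0.31, 1.5)   # forcing if equilibrium were reached
square_wave = 1.5 * eq                # the ~50% allowance for incomplete equilibrium
print(f"{eq:.2f} {square_wave:.2f}")  # prints "0.76 1.15"
```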

If the forcing is applied more gently (and withdrawn more gently) in the manner of a sine wave, the time available for the climate to react shrinks further and the forcing when fully applied will require to be larger. Resorting to a spreadsheet, a BNO(R) driven by a 60-year oscillating forcing would appear to require an oscillating forcing of perhaps 1.8Wm^-2 peak-to-peak.

Because of its slow change from warming to cooling (and vice versa) and because equilibrium is not achieved, there will be a lag between the cycle of forcing and the cycle of warming. This results from the cooling forcing having to cancel out the remaining un-equilibrated warming before it can begin its cooling effect. Such a lag for a sine wave would perhaps be as long as 8 years.
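A lag of this kind can be seen in a minimal time-stepping sketch. It assumes a one-box response with a single time constant (set so that 50% of equilibrium is reached in 10 years) driven by a unit sine-wave forcing; it is not the spreadsheet referred to here and will not reproduce its figures exactly. The function name `simulate_lag` and all parameter values are hypothetical.

```python
import math

def simulate_lag(period_yr=60.0, tau_yr=10 / math.log(2), dt=0.01, n_cycles=10):
    """Forward-Euler integration of dT/dt = (F(t) - T) / tau, with
    F(t) = sin(2*pi*t/period). Returns (lag in years of the temperature peak
    behind the forcing peak, peak temperature) measured over the final cycle."""
    omega = 2 * math.pi / period_yr
    steps = int(n_cycles * period_yr / dt)
    start_last = (n_cycles - 1) * period_yr   # earlier cycles let the transient decay
    temp = 0.0
    peak_temp, peak_time = -math.inf, 0.0
    for i in range(steps):
        t = i * dt
        forcing = math.sin(omega * t)
        temp += dt * (forcing - temp) / tau_yr  # temp now applies at time t + dt
        if t >= start_last and temp > peak_temp:
            peak_temp, peak_time = temp, t + dt
    forcing_peak_time = start_last + period_yr / 4  # sine peaks a quarter-cycle in
    return peak_time - forcing_peak_time, peak_temp

lag, amplitude = simulate_lag()
print(f"lag = {lag:.1f} yr, response amplitude = {amplitude:.2f} of equilibrium")
```

Under these assumptions the lag comes out at roughly nine years, the same order as the figure quoted above; a response with a slower long-term component would shift it.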

When AGW is added to the BNO(R) cycle, the lag of peak temperatures behind peak forcing increases significantly to more than a decade, potentially doubling, while the lag of minimum temperatures behind minimum forcing decreases significantly, potentially halving.

It would be possible to model the climate when forced in such a manner. (Strangely I have no memory of seeing such modelling. Given all the ink spilt over various BNO hypotheses, an absence of such models would be surely bizarre. So where are they?) At its most simple, the results from my spreadsheet (graphed here) appear to suggest BNO(R)’s impact on late 20th century global temperature would be significantly smaller than the 50% espoused by Judith Curry.

However, a search for some reconciliation between the global temperature record and possible BNO(R) forcings by altering its strength and/or timing (be it in the form of square wave or sine wave or any other wave) may show nothing other than the existence of many competing and controversial alternatives. Rather, it may be more productive to examine other evidence for the existence of a BNO(R)…..

> gavin

Um, more help please? fruitless: https://www.google.com/search?q=+the+model+ANT+pattern

Gavin, I’ve re-read your post and in particular your description of 10.5. I think my brief summary is exactly correct. Model estimates of GHGs are considerably larger than the measured temperature increase. ANT is somewhat larger than the measured temperature increase. So the conclusion is that natural forcings (or whatever you want to call the non ANT forcings) have been negative during the time period.

I’m afraid I didn’t understand your comment about ANT scaling. In what way is it scaled? In 10.5 it seems to simply be an estimate of the temperature change caused by all human activities. That is an absolute number not a scaled number.

[Response: There is plenty of explanation in the IPCC chapter, and in the papers referenced therein. I suggest you read what was actually done instead of relying on my paraphrasing. – gavin]

Hank – I think you’ll find these abbreviations together in many papers

ANT = anthropogenic forcing

NAT = natural forcing

ALL = natural plus anthropogenic

CTL = preindustrial control run

SUL = sulfate aerosol forcings

Thanks Kevin and Gavin, and for the link to the final text IPCC chapter — with illustrations.

Funny how the bigger the wreck, the harder it is to realize it’s happening.

Data obtained from observations of the planet Climateetcia suggests that Judy Curry has yet to give her definitive response to this post. The cause of what appears a change of mind is a web-review of Daniel C. Dennett’s book Intuition Pumps and Other Tools for Thinking which led Judy Curry to write:-

And my own whittering goes on. @183 it was shown that the global temperature record does not immediately demonstrate a convincing BNO(R) (that is a ‘radiatively forced’ version of Judith Curry’s Big Natural Oscillation) but it was also asserted that applying some judicious curve-fitting would render any such demonstration inconclusive. Thus to be conclusive we should look elsewhere. Indeed, anybody properly arguing for or against BNO(R) should be looking elsewhere, shouldn’t they? So let’s do them all a favour and look for them.

.

The energy requirements for BNO(R) are shown to be quite large – an oscillating radiative forcing of 1.15Wm^-2 peak-to-peak if of square-wave form, 1.8Wm^-2 if of sine-wave form. When compared with natural wobbles surmised by the IPCC (shown in AR5 WG1 Figure 10.1f), the square-wave version of BNO(R) would be as big as any natural wobble but far more long-lasting, and the sine-wave version would dwarf them all with its peak-to-peak height not far short of the total AGW forcing since 1850 (helpfully plotted in Fig 10.1g). And because of their on/off nature, the radiative imbalance engendered by such wobbling should be more noticeable.

Data from measurements of the Earth Radiation Budget at the top of the atmosphere is available from CERES since 2000. So the 14 years of CERES data, with or without error-bars – Do they show signs of BNO(R)? Or perhaps they show signs of the absence of BNO(R)?

Plotting out the CERES data & the imbalances modelled on my simplistic spreadsheet (two clicks down here) give mixed results. (I’m not greatly familiar with CERES ERB data. Hey, I hope I haven’t plotted it upside-down.)

Simplistically, a square-wave type BNO(R) could be sweetly fitted to the data with a fully-allowable shift vertically. Of course, the drop in TOA ERB at the start of the CERES data may be nothing to do with any BNO(R) drop, and could be simply the last half of an oscillation as we see occurring 2008-2014. For instance, Loeb et al (2012) attributes much of this 2000-1 drop (the LW component of it) to ENSO. Yet even if signs of the step-change were entirely absent from the data, the timing of the step could easily have been a little earlier than the CERES data and would then not reoccur for 30 years. Thus no evidence either for or against the square-type of BNO(R) is available from CERES, so far.

The sine-wave type BNO(R) is less easy to reconcile with the CERES data although probably not conclusively so. The BNO(R) sine-type required-imbalance exceeds the level of potential drift of instrument calibration suggested by Loeb et al (0.5Wm^-2/decade) yet there is no sign of a trend in imbalance as required by the sine-type BNO(R).

A watching brief would be strongly recommended with this data, except it is not the only measure of TOA ERB.

The TOA ERB can be surmised with some accuracy from OHC as the overwhelming proportion of the imbalance ends up warming the oceans. Assuming this proportion to be a constant-yet-conservative 85%, how does this compare with measurements of OHC?
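The conversion from a rate of ocean heat uptake to a global-mean TOA imbalance is simple enough to sketch. The 85% ocean share is taken from the paragraph above; Earth's surface area and the length of a year are standard round values. The function name `toa_imbalance_from_ohc` is hypothetical, and the 10 ZJ/yr input is purely illustrative, not a measurement.

```python
EARTH_AREA_M2 = 5.1e14      # Earth's total surface area, m^2 (round value)
SECONDS_PER_YEAR = 3.156e7  # seconds in one year (round value)

def toa_imbalance_from_ohc(ohc_rate_zj_per_yr, ocean_fraction=0.85):
    """Global-mean TOA imbalance (W/m^2) implied by a rate of ocean heat
    content change (ZJ/yr), assuming the oceans take up ocean_fraction of
    the total imbalance."""
    total_joules_per_year = ohc_rate_zj_per_yr * 1e21 / ocean_fraction
    return total_joules_per_year / (EARTH_AREA_M2 * SECONDS_PER_YEAR)

# Illustrative input only: 10 ZJ/yr of ocean heat uptake.
print(round(toa_imbalance_from_ohc(10.0), 2))  # prints 0.73
```

The same function run in reverse (multiplying instead of dividing) gives the ΔOHC that a given imbalance would require, which is the comparison being set up here.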

If BNO(R) existed there should be significant ΔOHC to account for it and also for AGW. Additionally there should be some slowdown in ΔOHC evident over the last decade, the decade we have the best data for.