I have written a number of times about the procedure used to attribute recent climate change (here in 2010, in 2012 (about the AR4 statement), and again in 2013 after AR5 was released). For people who want a summary of what the attribution problem is, how we think about the human contributions and why the IPCC reaches the conclusions it does, read those posts instead of this one.

The bottom line is that multiple studies indicate with very strong confidence that human activity is the dominant component in the warming of the last 50 to 60 years, and that our best estimates are that pretty much all of the rise is anthropogenic.

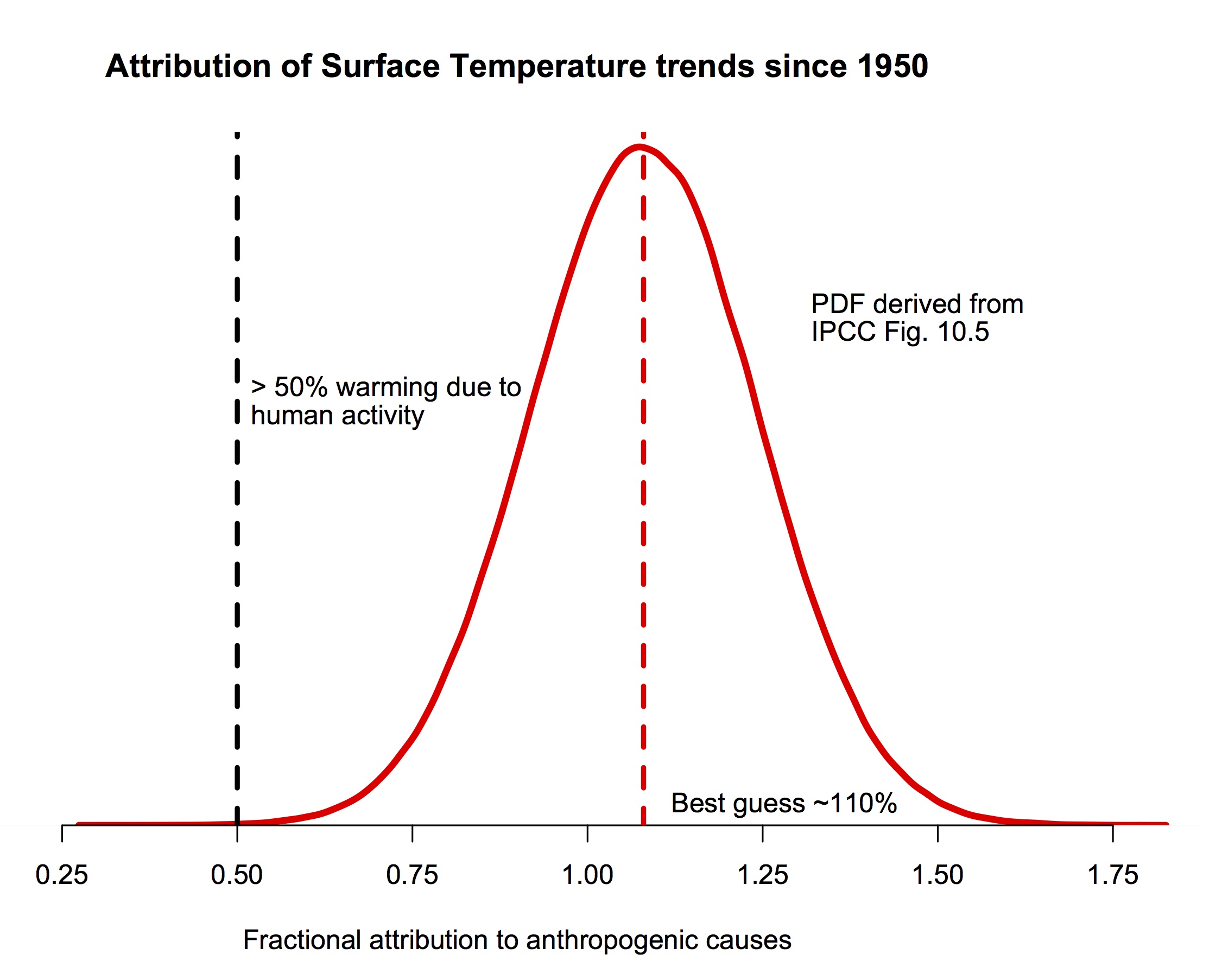

The probability density function for the fraction of warming attributable to human activity (derived from Fig. 10.5 in IPCC AR5). The bulk of the probability is far to the right of the “50%” line, and the peak is around 110%.

If you are still here, I should be clear that this post is focused on a specific claim: Judith Curry has recently blogged in support of a “50-50” attribution (i.e. that trends since the middle of the 20th Century are 50% human-caused and 50% natural, a position that would center her pdf at 0.5 in the figure above). She also expressed puzzlement as to why other scientists don’t agree with her. Reading over her arguments in detail, I find very little to recommend them, and perhaps the reasoning for this will be interesting for readers. So, here follows a line-by-line commentary on her recent post. Please excuse the length.

Starting from the top… (note, quotes from Judith Curry’s blog are blockquoted).

Pick one:

a) Warming since 1950 is predominantly (more than 50%) caused by humans.

b) Warming since 1950 is predominantly caused by natural processes.

When faced with a choice between a) and b), I respond: ‘I can’t choose, since i think the most likely split between natural and anthropogenic causes to recent global warming is about 50-50’. Gavin thinks I’m ‘making things up’, so I promised yet another post on this topic.

This is not a good start. The statements that ended up in the IPCC SPMs are descriptions of what was found in the main chapters and in the papers they were assessing, not questions that were independently thought about and then answered. Thus while this dichotomy might represent Judith’s problem right now, it has nothing to do with what IPCC concluded. In addition, framing this as a binary choice gives implicit (but invalid) support to the idea that each choice is equally likely. That this is invalid reasoning should be obvious by simply replacing 50% with any other value and noting that the half/half argument could be made independent of any data.

For background and context, see my previous 4 part series Overconfidence in the IPCC’s detection and attribution.

Framing

The IPCC’s AR5 attribution statement:

It is extremely likely that more than half of the observed increase in global average surface temperature from 1951 to 2010 was caused by the anthropogenic increase in greenhouse gas concentrations and other anthropogenic forcings together. The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

I’ve remarked on the ‘most’ (previous incarnation of ‘more than half’, equivalent in meaning) in my Uncertainty Monster paper:

Further, the attribution statement itself is at best imprecise and at worst ambiguous: what does “most” mean – 51% or 99%?

Whether it is 51% or 99% would seem to make a rather big difference regarding the policy response. It’s time for climate scientists to refine this range.

I am arguing here that the ‘choice’ regarding attribution shouldn’t be binary, and there should not be a break at 50%; rather we should consider the following terciles for the net anthropogenic contribution to warming since 1950:

- >66%

- 33-66%

- <33%

JC note: I removed the bounds at 100% and 0% as per a comment from Bart Verheggen.

Hence 50-50 refers to the tercile 33-66% (as the midpoint)

Here Judith makes the same mistake that I commented on in my 2012 post – assuming that a statement about where the bulk of the pdf lies is a statement about where its mean is, and that it must be cut off at some value (whether it is 99% or 100%). Neither of those things follows. I will gloss over the completely unnecessary confusion of the meaning of the word ‘most’ (again thoroughly discussed in 2012). I will also not get into policy implications since the question itself is purely a scientific one.

The division into terciles for the analysis is not a problem though, and the weight of the pdf in each tercile can easily be calculated. Translating the top figure, the likelihood of the attribution of the 1950+ trend to anthropogenic forcings falling in each tercile (from lowest to highest) is 2×10⁻⁴%, 0.4% and 99.5% respectively.
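As a rough illustration of how such tercile weights can be computed, here is a minimal sketch. It assumes a symmetric Gaussian with the ~110% mean and 80–130% (5-95%) range quoted later in this post; that shape is an assumption made for illustration, not the actual pdf in the figure, so the numbers it prints will not reproduce the values above exactly.

```python
import math

def normal_cdf(x, mean, sigma):
    """Cumulative probability P(X <= x) for a Gaussian X."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sigma * math.sqrt(2.0))))

# Assumed pdf for the anthropogenic fraction (illustrative only):
# mean 110%, and a 5-95% range of 80-130% treated as +/-1.645 sigma.
MEAN = 1.10
SIGMA = (1.30 - 0.80) / (2 * 1.645)

def tercile_weights(mean=MEAN, sigma=SIGMA):
    """Probability mass in the <33%, 33-66% and >66% terciles."""
    below_33 = normal_cdf(1.0 / 3.0, mean, sigma)
    below_66 = normal_cdf(2.0 / 3.0, mean, sigma)
    return below_33, below_66 - below_33, 1.0 - below_66

low, mid, high = tercile_weights()
print(f"<33%: {low:.2e}   33-66%: {mid:.2e}   >66%: {high:.4f}")
```

Under any reasonable choice of shape, almost all of the weight ends up in the top tercile, which is the qualitative point being made.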

Note: I am referring only to a period of overall warming, so by definition the cooling argument is eliminated. Further, I am referring to the NET anthropogenic effect (greenhouse gases + aerosols + etc). I am looking to compare the relative magnitudes of net anthropogenic contribution with net natural contributions.

The two IPCC statements discussed attribution to greenhouse gases (in AR4) and to all anthropogenic forcings (in AR5) (the subtleties involved there are discussed in the 2013 post). I don’t know what she refers to as the ‘cooling argument’, since it is clear that the temperatures have indeed warmed since 1950 (the period referred to in the IPCC statements). It is worth pointing out that there can be no assumption that natural contributions must be positive – indeed for any random time period of any length, one would expect natural contributions to be cooling half the time.

Further, by global warming I refer explicitly to the historical record of global average surface temperatures. Other data sets such as ocean heat content, sea ice extent, whatever, are not sufficiently mature or long-range (see Climate data records: maturity matrix). Further, the surface temperature is most relevant to climate change impacts, since humans and land ecosystems live on the surface. I acknowledge that temperature variations can vary over the earth’s surface, and that heat can be stored/released by vertical processes in the atmosphere and ocean. But the key issue of societal relevance (not to mention the focus of IPCC detection and attribution arguments) is the realization of this heat on the Earth’s surface.

Fine with this.

IPCC

Before getting into my 50-50 argument, a brief review of the IPCC perspective on detection and attribution. For detection, see my post Overconfidence in IPCC’s detection and attribution. Part I.

Let me clarify the distinction between detection and attribution, as used by the IPCC. Detection refers to change above and beyond natural internal variability. Once a change is detected, attribution attempts to identify external drivers of the change.

The reasoning process used by the IPCC in assessing confidence in its attribution statement is described by this statement from the AR4:

“The approaches used in detection and attribution research described above cannot fully account for all uncertainties, and thus ultimately expert judgement is required to give a calibrated assessment of whether a specific cause is responsible for a given climate change. The assessment approach used in this chapter is to consider results from multiple studies using a variety of observational data sets, models, forcings and analysis techniques. The assessment based on these results typically takes into account the number of studies, the extent to which there is consensus among studies on the significance of detection results, the extent to which there is consensus on the consistency between the observed change and the change expected from forcing, the degree of consistency with other types of evidence, the extent to which known uncertainties are accounted for in and between studies, and whether there might be other physically plausible explanations for the given climate change. Having determined a particular likelihood assessment, this was then further downweighted to take into account any remaining uncertainties, such as, for example, structural uncertainties or a limited exploration of possible forcing histories of uncertain forcings. The overall assessment also considers whether several independent lines of evidence strengthen a result.” (IPCC AR4)

I won’t make a judgment here as to how ‘expert judgment’ and subjective ‘down weighting’ is different from ‘making things up’

Is expert judgement about the structural uncertainties in a statistical procedure associated with various assumptions that need to be made different from ‘making things up’? Actually, yes – it is.

AR5 Chapter 10 has a more extensive discussion on the philosophy and methodology of detection and attribution, but the general idea has not really changed from AR4.

In my previous post (related to the AR4), I asked the question: what was the original likelihood assessment from which this apparently minimal downweighting occurred? The AR5 provides an answer:

The best estimate of the human induced contribution to warming is similar to the observed warming over this period.

So, I interpret this as saying that the IPCC’s best estimate is that 100% of the warming since 1950 is attributable to humans, and they then down weight this to ‘more than half’ to account for various uncertainties. And then assign an ‘extremely likely’ confidence level to all this.

Making things up, anyone?

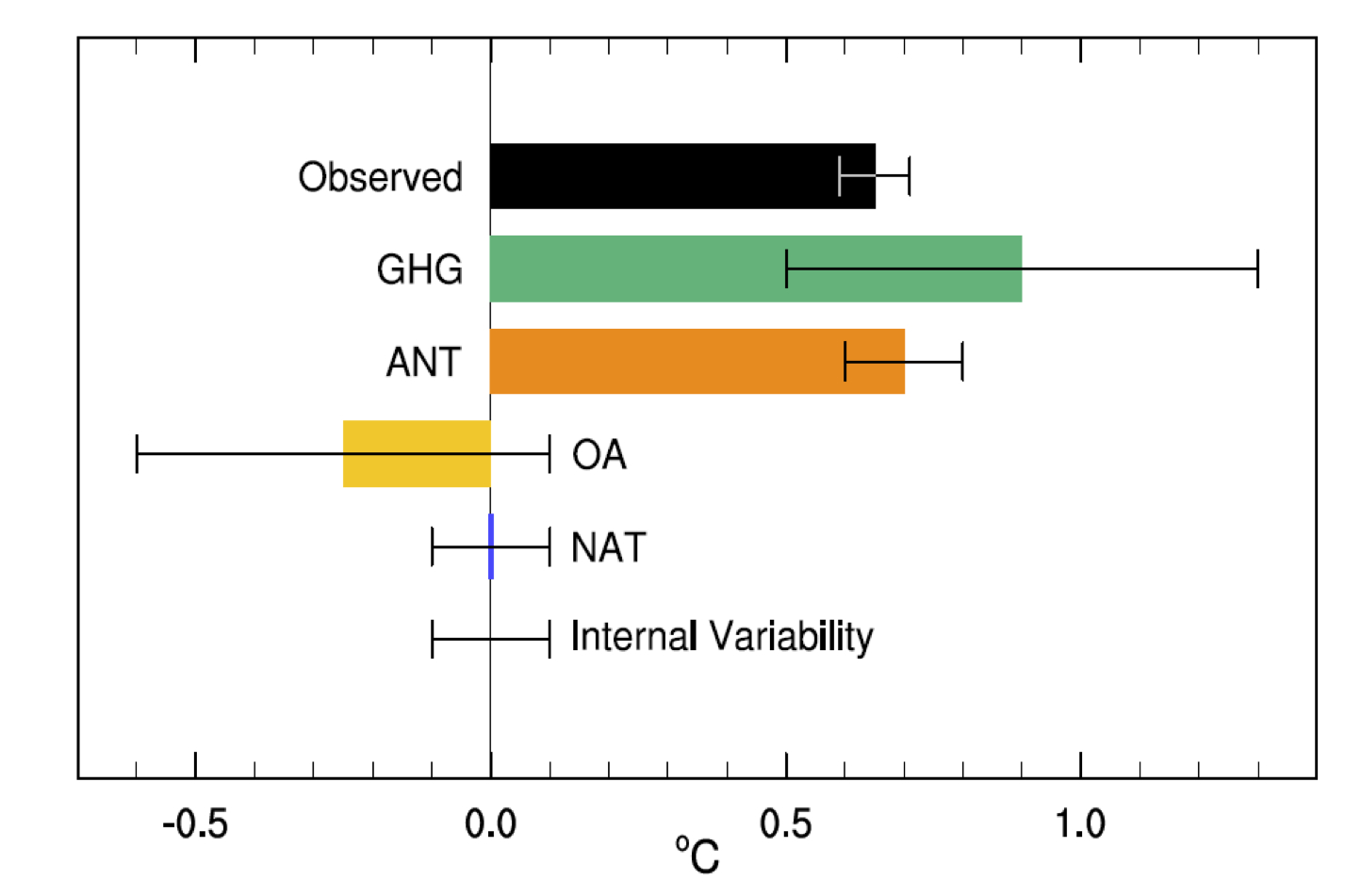

This is very confused. The basis of the AR5 calculation is summarised in figure 10.5:

The best estimate of the warming due to anthropogenic forcings (ANT) is the orange bar (noting the 1σ uncertainties). Reading off the graph, it is 0.7±0.2ºC (5-95%) with the observed warming 0.65±0.06ºC (5-95%). The attribution then follows as having a mean of ~110%, with a 5-95% range of 80–130%. This easily justifies the IPCC claims of having a mean near 100%, and a very low likelihood of the attribution being less than 50% (p < 0.0001!). Note there is no ‘downweighting’ of any argument here – both statements are true given the numerical distribution. However, there must be some expert judgement to assess what potential structural errors might exist in the procedure. For instance, the assumption that fingerprint patterns are linearly additive, or uncertainties in the pattern because of deficiencies in the forcings or models etc. In the absence of any reason to think that the attribution procedure is biased (and Judith offers none), structural uncertainties will only serve to expand the spread. Note that one would need to expand the uncertainties by a factor of 3 in both directions to contradict the first part of the IPCC statement. That seems unlikely in the absence of any demonstration of some huge missing factors.
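To get a feel for how much the spread would have to grow before the ‘extremely likely more than half’ statement fails, here is a minimal sketch. It assumes a symmetric Gaussian for the attributable fraction (mean 110%, 5-95% range 80–130%) and an `inflation` factor that simply multiplies the standard deviation; both are illustrative assumptions rather than the procedure used in AR5.

```python
import math

# Assumed Gaussian for the anthropogenic fraction (illustrative only).
MEAN = 1.10
SIGMA = (1.30 - 0.80) / (2 * 1.645)  # 5-95% half-width is 1.645 sigma

def prob_above(threshold, mean, sigma):
    """P(X > threshold) for a Gaussian X."""
    return 0.5 * math.erfc((threshold - mean) / (sigma * math.sqrt(2.0)))

def prob_more_than_half(inflation):
    """P(fraction > 50%) after widening the spread by `inflation`."""
    return prob_above(0.5, MEAN, inflation * SIGMA)

for k in (1, 2, 3):
    p = prob_more_than_half(k)
    verdict = "holds" if p > 0.95 else "fails"
    print(f"spread x{k}: P(>50%) = {p:.5f} -> 'extremely likely' {verdict}")
```

With these assumed numbers, the 95% threshold survives a doubling of the spread and is only crossed somewhere between a factor of 2 and 3, which is consistent in magnitude with the statement above.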

I’ve just reread Overconfidence in IPCC’s detection and attribution. Part IV, I recommend that anyone who seriously wants to understand this should read this previous post. It explains why I think the AR5 detection and attribution reasoning is flawed.

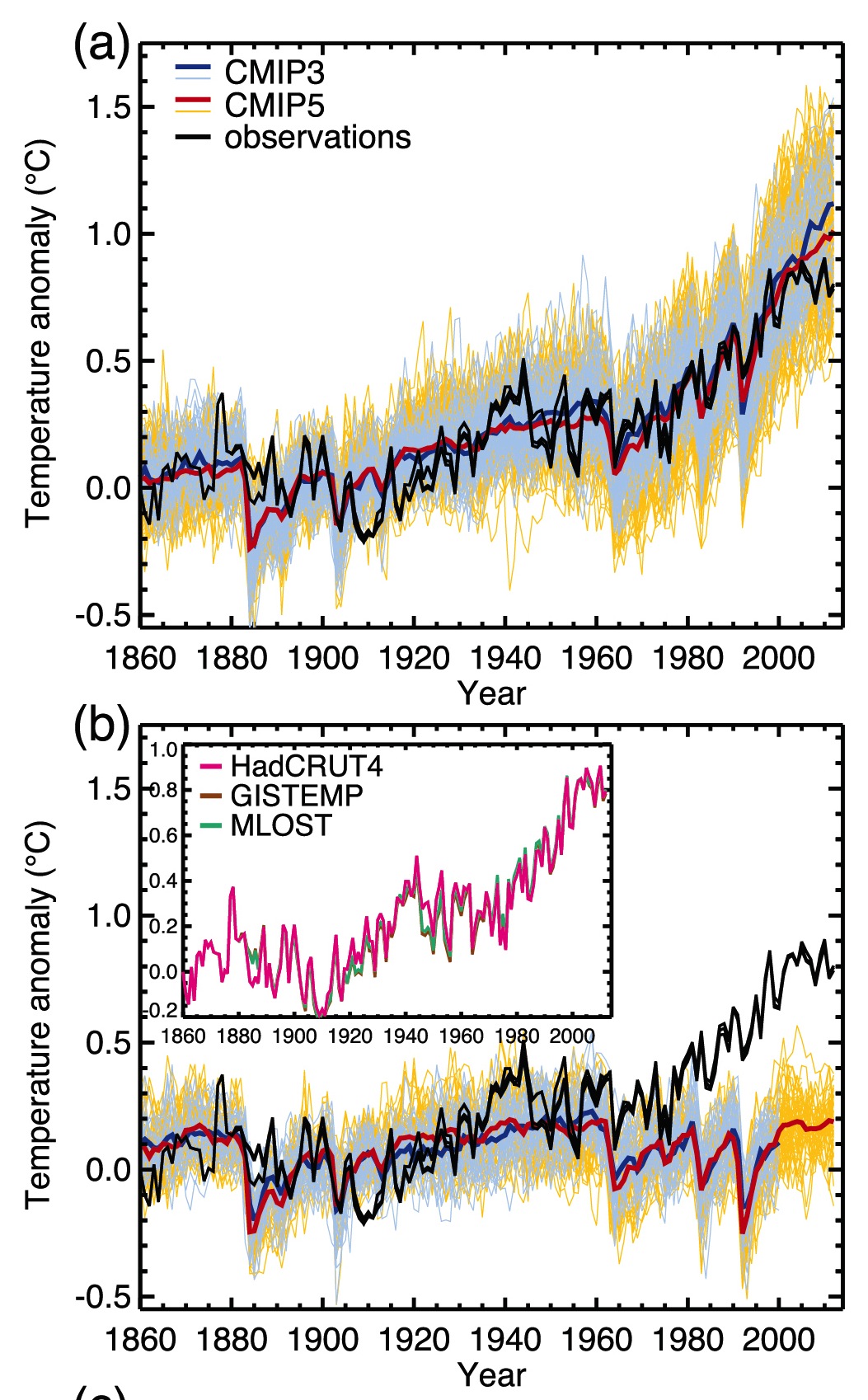

Of particular relevance to the 50-50 argument, the IPCC has failed to convincingly demonstrate ‘detection.’ Because historical records aren’t long enough and paleo reconstructions are not reliable, the climate models ‘detect’ AGW by comparing natural forcing simulations with anthropogenically forced simulations. When the spectra of the variability of the unforced simulations is compared with the observed spectra of variability, the AR4 simulations show insufficient variability at 40-100 yrs, whereas AR5 simulations show reasonable variability. The IPCC then regards the divergence between unforced and anthropogenically forced simulations after ~1980 as the heart of their detection and attribution argument. See Figure 10.1 from AR5 WGI (a) is with natural and anthropogenic forcing; (b) is without anthropogenic forcing:

This is also confused. “Detection” is (like attribution) a model-based exercise, starting from the idea that one can estimate the result of a counterfactual: what would the temperature have done in the absence of the drivers compared to what it would do if they were included? GCM results show clearly that the expected anthropogenic signal would start to be detectable (“come out of the noise”) sometime after 1980 (for reference, Hansen’s public statement to that effect was in 1988). There is no obvious discrepancy in spectra between the CMIP5 models and the observations, and so I am unclear why Judith finds the detection step lacking. It is interesting to note that given the variability in the models, the anthropogenic signal is now more than 5σ over what would have been expected naturally (and if it’s good enough for the Higgs Boson….).

Note in particular that the models fail to simulate the observed warming between 1910 and 1940.

Here Judith is (I think) referring to the mismatch between the ensemble mean (red) and the observations (black) in that period. But the red line is simply an estimate of the forced trends, so the correct reading of the graph would be that the models do not support an argument suggesting that all of the 1910-1940 excursion is forced (contingent on the forcing datasets that were used), which is what was stated in AR5. However, the observations are well within the spread of the models and so could easily be within the range of the forced trend + simulated internal variability. A quick analysis (a proper attribution study is more involved than this) gives an observed trend over 1910-1940 as 0.13 to 0.15ºC/decade (depending on the dataset, with ±0.03ºC (5-95%) uncertainty in the OLS), while the spread in my collation of the historical CMIP5 models is 0.07±0.07ºC/decade (5-95%). Specifically, 8 model runs out of 131 have trends over that period greater than 0.13ºC/decade – suggesting that one might see this magnitude of excursion 5-10% of the time. For reference, the GHG related trend in the GISS models over that period is about 0.06ºC/decade. However, the uncertainties in the forcings for that period are larger than in recent decades (in particular for the solar and aerosol-related emissions) and so the forced trend (0.07ºC/decade) could have been different in reality. And since we don’t have good ocean heat content data, nor any satellite observations, or any measurements of stratospheric temperatures to help distinguish potential errors in the forcing from internal variability, it is inevitable that there will be more uncertainty in the attribution for that period than for more recent periods.
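The ‘5-10% of the time’ figure can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above (8 of 131 runs, and an ensemble spread of 0.07±0.07ºC/decade); the Gaussian cross-check is an added assumption about the shape of the ensemble spread, not something taken from the model output itself.

```python
import math

# Empirical exceedance: runs with a 1910-1940 trend above 0.13 C/decade.
runs_total = 131
runs_above = 8
empirical_fraction = runs_above / runs_total

# Gaussian cross-check (assumed shape): mean 0.07, 5-95% half-width 0.07.
ENSEMBLE_MEAN = 0.07
ENSEMBLE_SIGMA = 0.07 / 1.645

def tail_probability(trend):
    """P(ensemble trend > trend) under the assumed Gaussian spread."""
    z = (trend - ENSEMBLE_MEAN) / (ENSEMBLE_SIGMA * math.sqrt(2.0))
    return 0.5 * math.erfc(z)

print(f"empirical: {empirical_fraction:.1%}")
print(f"Gaussian tail above 0.13: {tail_probability(0.13):.1%}")
print(f"Gaussian tail above 0.15: {tail_probability(0.15):.1%}")
```

Both routes give a probability of a few percent, so the observed early-century trend is unusual in the ensemble but not outside it.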

The glaring flaw in their logic is this. If you are trying to attribute warming over a short period, e.g. since 1980, detection requires that you explicitly consider the phasing of multidecadal natural internal variability during that period (e.g. AMO, PDO), not just the spectra over a long time period. Attribution arguments of late 20th century warming have failed to pass the detection threshold which requires accounting for the phasing of the AMO and PDO. It is typically argued that these oscillations go up and down, in net they are a wash. Maybe, but they are NOT a wash when you are considering a period of the order, or shorter than, the multidecadal time scales associated with these oscillations.

Watch the pea under the thimble here. The IPCC statements were from a relatively long period (i.e. 1950 to 2005/2010). Judith jumps to assessing shorter trends (i.e. from 1980) and shorter periods obviously have the potential to have a higher component of internal variability. The whole point about looking at longer periods is that internal oscillations have a smaller contribution. Since she is arguing that the AMO/PDO have potentially multi-decadal periods, then she should be supportive of using multi-decadal periods (i.e. 50, 60 years or more) for the attribution.

Further, in the presence of multidecadal oscillations with a nominal 60-80 yr time scale, convincing attribution requires that you can attribute the variability for more than one 60-80 yr period, preferably back to the mid 19th century. Not being able to address the attribution of change in the early 20th century to my mind precludes any highly confident attribution of change in the late 20th century.

This isn’t quite right. Our expectation (from basic theory and models) is that the second half of the 20th C is when anthropogenic effects really took off. Restricting attribution to 120-160 yr trends seems too constraining – though there is no problem in looking at that too. However, Judith is actually assuming what remains to be determined. What is the evidence that all 60-80yr variability is natural? Variations in forcings (in particular aerosols, and maybe solar) can easily project onto this timescale and so any separation of forced vs. internal variability is really difficult based on statistical arguments alone (see also Mann et al, 2014). Indeed, it is the attribution exercise that helps you conclude what the magnitude of any internal oscillations might be. Note that if we were only looking at the global mean temperature, there would be quite a lot of wiggle room for different contributions. Looking deeper into different variables and spatial patterns is what allows for a more precise result.

The 50-50 argument

There are multiple lines of evidence supporting the 50-50 (middle tercile) attribution argument. Here are the major ones, to my mind.

Sensitivity

The 100% anthropogenic attribution from climate models is derived from climate models that have an average equilibrium climate sensitivity (ECS) around 3C. One of the major findings from AR5 WG1 was the divergence in ECS determined via climate models versus observations. This divergence led the AR5 to lower the likely bound on ECS to 1.5C (with ECS very unlikely to be below 1C).

Judith’s argument misstates how forcing fingerprints from GCMs are used in attribution studies. Notably, they are scaled to get the best fit to the observations (along with the other terms). If the models all had sensitivities of either 1ºC or 6ºC, the attribution to anthropogenic changes would be the same as long as the pattern of change was robust. What would change would be the scaling – less than one would imply a better fit with a lower sensitivity (or smaller forcing), and vice versa (see figure 10.4).
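The scaling point can be illustrated with a toy regression. The sketch below is not an optimal fingerprinting analysis: it uses ordinary least squares on a synthetic one-dimensional series, and the names `true_ant`, `true_nat` and `model_scale` are hypothetical stand-ins for the forced response, a natural pattern, and a model that warms too little or too much.

```python
import math
import random

def ols_two_regressors(y, x1, x2):
    """Least-squares coefficients (b1, b2) for y ~ b1*x1 + b2*x2, no intercept."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return (s1y * s22 - s2y * s12) / det, (s2y * s11 - s1y * s12) / det

# Synthetic 60-year "observations": forced ramp + natural wiggle + noise.
random.seed(0)
n = 60
true_ant = [0.7 * (t / (n - 1)) ** 2 for t in range(n)]
true_nat = [0.1 * math.sin(2 * math.pi * t / 11) for t in range(n)]
obs = [a + b + random.gauss(0.0, 0.05) for a, b in zip(true_ant, true_nat)]

def attributed_warming(model_scale):
    """Scaling factor and attributed warming for a model whose fingerprint
    has the right shape but the wrong amplitude (model_scale != 1)."""
    fingerprint = [model_scale * a for a in true_ant]
    beta_ant, _ = ols_two_regressors(obs, fingerprint, true_nat)
    return beta_ant, beta_ant * (fingerprint[-1] - fingerprint[0])

beta_low, warm_low = attributed_warming(0.5)    # under-sensitive model
beta_high, warm_high = attributed_warming(2.0)  # over-sensitive model
print(f"low-sensitivity model:  beta = {beta_low:.2f}, attributed = {warm_low:.2f} C")
print(f"high-sensitivity model: beta = {beta_high:.2f}, attributed = {warm_high:.2f} C")
```

The two toy models get very different scaling factors (above one and below one respectively) but the same attributed warming, because the regression absorbs the amplitude error as long as the pattern is right.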

She also misstates how ECS is constrained – all constraints come from observations (whether from long-term paleo-climate observations, transient observations over the 20th Century or observations of emergent properties that correlate to sensitivity) combined with some sort of model. The divergence in AR5 was between constraints based on the transient observations using simplified energy balance models (EBM), and everything else. Subsequent work (for instance by Drew Shindell) has shown that the simplified EBMs are missing important transient effects associated with aerosols, and so the divergence is very likely less than AR5 assessed.

Nic Lewis at Climate Dialogue summarizes the observational evidence for ECS between 1.5 and 2C, with transient climate response (TCR) around 1.3C.

Nic Lewis has a comment at BishopHill on this:

The press release for the new study states: “Rapid warming in the last two and a half decades of the 20th century, they proposed in an earlier study, was roughly half due to global warming and half to the natural Atlantic Ocean cycle that kept more heat near the surface.” If only half the warming over 1976-2000 (linear trend 0.18°C/decade) was indeed anthropogenic, and the IPCC AR5 best estimate of the change in anthropogenic forcing over that period (linear trend 0.33Wm-2/decade) is accurate, then the transient climate response (TCR) would be little over 1°C. That is probably going too far, but the 1.3-1.4°C estimate in my and Marcel Crok’s report A Sensitive Matter is certainly supported by Chen and Tung’s findings.

Since the CMIP5 models used by the IPCC on average adequately reproduce observed global warming in the last two and a half decades of the 20th century without any contribution from multidecadal ocean variability, it follows that those models (whose mean TCR is slightly over 1.8°C) must be substantially too sensitive.

BTW, the longer term anthropogenic warming trends (50, 75 and 100 year) to 2011, after removing the solar, ENSO, volcanic and AMO signals given in Fig. 5 B of Tung’s earlier study (freely accessible via the link), of respectively 0.083, 0.078 and 0.068°C/decade also support low TCR values (varying from 0.91°C to 1.37°C), upon dividing by the linear trends exhibited by the IPCC AR5 best estimate time series for anthropogenic forcing. My own work gives TCR estimates towards the upper end of that range, still far below the average for CMIP5 models.

If true climate sensitivity is only 50-65% of the magnitude that is being simulated by climate models, then it is not unreasonable to infer that attribution of late 20th century warming is not 100% caused by anthropogenic factors, and attribution to anthropogenic forcing is in the middle tercile (50-50).

The IPCC’s attribution statement does not seem logically consistent with the uncertainty in climate sensitivity.

This is related to a paper by Tung and Zhou (2013). Note that the attribution statement has again shifted to the last 25 years of the 20th Century (1976-2000). There are a couple of major problems with this argument. First of all, Tung and Zhou assumed that all multi-decadal variability was associated with the Atlantic Multi-decadal Oscillation (AMO) and did not assess whether anthropogenic forcings could project onto this variability. It is circular reasoning to then use this paper to conclude that all multi-decadal variability is associated with the AMO.

The second problem is more serious. Lewis’ argument up until now has been that the best fit to the transient evolution over the 20th Century is with a relatively small sensitivity and small aerosol forcing (as opposed to a larger sensitivity and larger opposing aerosol forcing). However, in both these cases the attribution of the long-term trend to the combined anthropogenic effects is actually the same (near 100%). Indeed, one valid criticism of the recent papers on transient constraints is precisely that the simple models used do not have sufficient decadal variability!
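For readers who want to see where the ‘little over 1°C’ in the quoted Lewis comment comes from, the arithmetic can be sketched as below. The forcing for doubled CO2 (`F_2XCO2` = 3.71 W/m²) is an assumption on my part, since the quote does not state the value used; the trends are the ones given in the quote. Note that the attribution fraction goes in as an input, so the result cannot be used as independent evidence for that fraction.

```python
# Assumed forcing for a doubling of CO2 (W/m^2); not stated in the quote.
F_2XCO2 = 3.71

def implied_tcr(temp_trend, forcing_trend, anthropogenic_fraction,
                f_2x=F_2XCO2):
    """Simple ratio estimate of the transient climate response.

    temp_trend: observed warming trend (C/decade)
    forcing_trend: anthropogenic forcing trend (W/m^2 per decade)
    anthropogenic_fraction: assumed share of the warming that is forced
    """
    return f_2x * anthropogenic_fraction * temp_trend / forcing_trend

# Trends quoted for 1976-2000: 0.18 C/decade and 0.33 W/m^2 per decade.
tcr_half = implied_tcr(0.18, 0.33, 0.5)
tcr_full = implied_tcr(0.18, 0.33, 1.0)
print(f"TCR if half the trend is anthropogenic: {tcr_half:.2f} C")
print(f"TCR if all of the trend is anthropogenic: {tcr_full:.2f} C")
```

The estimate scales linearly with the assumed fraction, so halving the attribution halves the implied TCR.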

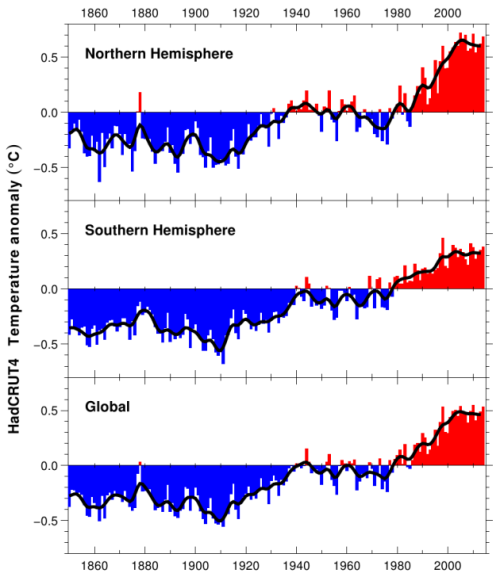

Climate variability since 1900

From HadCRUT4:

The IPCC does not have a convincing explanation for:

- warming from 1910-1940

- cooling from 1940-1975

- hiatus from 1998 to present

The IPCC purports to have a highly confident explanation for the warming since 1950, but it was only during the period 1976-2000 when the global surface temperatures actually increased.

The absence of convincing attribution of periods other than 1976-present to anthropogenic forcing leaves natural climate variability as the cause – some combination of solar (including solar indirect effects), uncertain volcanic forcing, natural internal (intrinsic variability) and possible unknown unknowns.

This point is not an argument for any particular attribution level. As is well known, using an argument of total ignorance to assume that the choice between two arbitrary alternatives must be 50/50 is a fallacy.

Attribution for any particular period follows exactly the same methodology as any other. What IPCC chooses to highlight is of course up to the authors, but there is nothing preventing an assessment of any of these periods. In general, the shorter the time period, the greater potential for internal variability, or (equivalently) the larger the forced signal needs to be in order to be detected. For instance, Pinatubo was a big rapid signal so that was detectable even in just a few years of data.

I gave a basic attribution for the 1910-1940 period above. The 1940-1975 average trend in the CMIP5 ensemble is -0.01ºC/decade (range -0.2 to 0.1ºC/decade), compared to -0.003 to -0.03ºC/decade in the observations, and is therefore a reasonable fit. The GHG driven trends for this period are ~0.1ºC/decade, implying that there is a roughly opposite forcing coming from aerosols and volcanoes in the ensemble. The situation post-1998 is a little different because of the CMIP5 design, and ongoing reevaluations of recent forcings (Schmidt et al, 2014; Huber and Knutti, 2014). Better information about ocean heat content is also available to help there, but this is still a work in progress and is a great example of why it is harder to attribute changes over small time periods.

In the GCMs, the importance of internal variability to the trend decreases as a function of time. For 30 year trends, internal variations can have a ±0.12ºC/decade or so impact on trends, for 60 year trends, closer to ±0.08ºC/decade. For an expected anthropogenic trend of around 0.2ºC/decade, the signal will be clearer over the longer term. Thus cutting down the analysis to ever-shorter periods increases the challenges and one can end up simply cherry picking the noise instead of seeing the signal.
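The way trend noise shrinks with record length can be seen in a small Monte Carlo experiment. This is a sketch under stated assumptions: internal variability is represented by a hypothetical AR(1) process whose parameters (`phi`, `noise_sd`) are purely illustrative and not calibrated to any GCM, so only the qualitative behaviour should be read from it.

```python
import random
import statistics

def ols_slope(values):
    """Ordinary least-squares slope of values against time index 0..n-1."""
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den

def trend_spread(years, trials=2000, phi=0.6, noise_sd=0.1, seed=1):
    """Std. dev. of trends (C/decade) from unforced AR(1) noise alone."""
    rng = random.Random(seed)
    slopes = []
    for _ in range(trials):
        x, series = 0.0, []
        for _ in range(years):
            x = phi * x + rng.gauss(0.0, noise_sd)  # AR(1) step, starts from 0
            series.append(x)
        slopes.append(10 * ols_slope(series))  # per year -> per decade
    return statistics.pstdev(slopes)

spread_30 = trend_spread(30)
spread_60 = trend_spread(60)
print(f"noise-only trend spread, 30 yr: {spread_30:.3f} C/decade")
print(f"noise-only trend spread, 60 yr: {spread_60:.3f} C/decade")

# Signal-to-noise using the numbers quoted in the text above.
signal = 0.2
snr_30_post = signal / 0.12
snr_60_post = signal / 0.08
print(f"signal/noise from the quoted values: {snr_30_post:.1f} (30 yr), "
      f"{snr_60_post:.1f} (60 yr)")
```

Whatever noise parameters are chosen, the spread of noise-only trends falls as the window lengthens, so the same forced trend stands out more clearly over 60 years than over 30.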

A key issue in attribution studies is to provide an answer to the question: When did anthropogenic global warming begin? As per the IPCC’s own analyses, significant warming didn’t begin until 1950. Just the Facts has a good post on this When did anthropogenic global warming begin?

I disagree as to whether this is a “key” issue for attribution studies, but as to when anthropogenic warming began, the answer is actually quite simple – when we started altering the atmosphere and land surface at climatically relevant scales. For the CO2 increase from deforestation this goes back millennia, for fossil fuel CO2, since the invention of the steam engine at least. In both cases there was a big uptick in the 18th Century. Perhaps that isn’t what Judith is getting at though. If she means when was it easily detectable, I discussed that above and the answer is sometime in the early 1980s.

The temperature record since 1900 is often characterized as a staircase, with periods of warming sequentially followed by periods of stasis/cooling. The stadium wave and Chen and Tung papers, among others, are consistent with the idea that the multidecadal oscillations, when superimposed on an overall warming trend, can account for the overall staircase pattern.

Nobody has any problems with the idea that multi-decadal internal variability might be important. The problem with many studies on this topic is the assumption that all multi-decadal variability is internal. This is very much an open question.

Let’s consider the 21st century hiatus. The continued forcing from CO2 over this period is substantial, not to mention ‘warming in the pipeline’ from late 20th century increase in CO2. To counter the expected warming from current forcing and the pipeline requires natural variability to effectively be of the same magnitude as the anthropogenic forcing. This is the rationale that Tung used to justify his 50-50 attribution (see also Tung and Zhou). The natural variability contribution may not be solely due to internal/intrinsic variability, and there is much speculation related to solar activity. There are also arguments related to aerosol forcing, which I personally find unconvincing (the topic of a future post).

Shorter time periods are noisier. There are more possible influences of an appropriate magnitude and, for the recent period, continued (and very frustrating) uncertainties in aerosol effects. This has very little to do with the attribution for longer time periods though (since the change of forcing is much larger and the impacts of internal variability smaller).

The IPCC notes overall warming since 1880. In particular, the period 1910-1940 is a period of warming that is comparable in duration and magnitude to the warming 1976-2000. Any anthropogenic forcing of that warming is very small (see Figure 10.1 above). The timing of the early 20th century warming is consistent with the AMO/PDO (e.g. the stadium wave; also noted by Tung and Zhou). The big unanswered question is: Why is the period 1940-1970 significantly warmer than say 1880-1910? Is it the sun? Is it a longer period ocean oscillation? Could the same processes causing the early 20th century warming be contributing to the late 20th century warming?

If we were just looking at 30 year periods in isolation, it’s inevitable that there will be these ambiguities because data quality degrades quickly back in time. But that is exactly why IPCC looks at longer periods.

Not only don’t we know the answer to these questions, but no one even seems to be asking them!

This is simply not true.

Attribution

I am arguing that climate models are not fit for the purpose of detection and attribution of climate change on decadal to multidecadal timescales. Figure 10.1 speaks for itself in this regard (see figure 11.25 for a zoom in on the recent hiatus). By ‘fit for purpose’, I am prepared to settle for getting an answer that falls in the right tercile.

Given the results above it would require a huge source of error to move the bulk of that probability anywhere else other than the right tercile.

The main relevant deficiencies of climate models are:

- climate sensitivity that appears to be too high, probably associated with problems in the fast thermodynamic feedbacks (water vapor, lapse rate, clouds)

- failure to simulate the correct network of multidecadal oscillations and their correct phasing

- substantial uncertainties in aerosol indirect effects

- unknown and uncertain solar indirect effects

The sensitivity argument is irrelevant (given that it isn’t zero of course). Simulation of the exact phasing of multi-decadal internal oscillations in a free-running GCM is impossible so that is a tough bar to reach! There are indeed uncertainties in aerosol forcing (not just the indirect effects) and, especially in the earlier part of the 20th Century, uncertainties in solar trends and impacts. Indeed, there is even uncertainty in volcanic forcing. However, none of these issues really affect the attribution argument because a) differences in magnitude of forcing over time are assessed by way of the scales in the attribution process, and b) errors in the spatial pattern will end up in the residuals, which are not large enough to change the overall assessment.

Nonetheless, it is worth thinking about what impact plausible variations in the aerosol or solar effects could have. Given that we are talking about the net anthropogenic effect, the playing off of negative aerosol forcing and climate sensitivity within bounds actually has very little effect on the attribution, so that isn’t particularly relevant. A much bigger role for solar would have an impact, but the trend would need to be about 5 times stronger over the relevant period to change the IPCC statement and I am not aware of any evidence to support this (and much that doesn’t).

So, how to sort this out and do a more realistic job of detecting climate change and attributing it to natural variability versus anthropogenic forcing? Observationally based methods and simple models have been underutilized in this regard. Of great importance is to consider uncertainties in external forcing in context of attribution uncertainties.

It is inconsistent to talk in one breath about the importance of aerosol indirect effects and solar indirect effects and then state that ‘simple models’ are going to do the trick. Both of these issues relate to microphysical effects and atmospheric chemistry – neither of which are accounted for in simple models.

The logic of reasoning about climate uncertainty, is not at all straightforward, as discussed in my paper Reasoning about climate uncertainty.

So, am I ‘making things up’? Seems to me that I am applying straightforward logic. Which IMO has been disturbingly absent in attribution arguments, that use climate models that aren’t fit for purpose, use circular reasoning in detection, fail to assess the impact of forcing uncertainties on the attribution, and are heavily spiced by expert judgment and subjective downweighting.

My reading of the evidence suggests clearly that the IPCC conclusions are an accurate assessment of the issue. I have tried to follow the proposed logic of Judith’s points here, but unfortunately each one of these arguments is either based on a misunderstanding, an unfamiliarity with what is actually being done or is a red herring associated with shorter-term variability. If Judith is interested in why her arguments are not convincing to others, perhaps this can give her some clues.

References

- M.E. Mann, B.A. Steinman, and S.K. Miller, "On forced temperature changes, internal variability, and the AMO", Geophysical Research Letters, vol. 41, pp. 3211-3219, 2014. http://dx.doi.org/10.1002/2014GL059233

- K. Tung and J. Zhou, "Using data to attribute episodes of warming and cooling in instrumental records", Proceedings of the National Academy of Sciences, vol. 110, pp. 2058-2063, 2013. http://dx.doi.org/10.1073/pnas.1212471110

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

- M. Huber and R. Knutti, "Natural variability, radiative forcing and climate response in the recent hiatus reconciled", Nature Geoscience, vol. 7, pp. 651-656, 2014. http://dx.doi.org/10.1038/ngeo2228

I don’t really understand Prof. Curry’s continued problem with the form of probabilistic statements made by the IPCC, c.f. the “Uncertainty Monster” paper (the monster turns out to be something like an ewok). The statement essentially gives the probability that a bound on some quantity holds. This is very similar to PAC (probably approximately correct) bounds used in computational learning theory, which suggests there isn’t any real problem with ambiguity or imprecision.

The point I think Judith is missing is that it is not intended to be a more precise statement than “we are 95% sure that the anthropogenic component of the increase in temperatures since 1950 is at least 50%.” Even that directly contradicts her position as it means that the IPCC only allocate a probability of 5% to the possibility of an anthropogenic component as low as 50% (i.e. it isn’t really plausible).
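As a rough numerical illustration of what such a bound means, here is a minimal sketch that treats the attributable fraction as Gaussian. The centre of 1.1 follows the peak of the figure at the top of the post; the spread `SD` is a hypothetical value chosen only for illustration and is not a number taken from the IPCC.

```python
from statistics import NormalDist

MEAN = 1.1  # peak of the pdf in the figure (about 110%)
SD = 0.3    # hypothetical spread, for illustration only


def prob_fraction_below(threshold, mean=MEAN, sd=SD):
    """P(anthropogenic fraction < threshold) under the assumed Gaussian."""
    return NormalDist(mean, sd).cdf(threshold)


p_low = prob_fraction_below(0.5)
print(f"P(fraction < 50%)  = {p_low:.3f}")
print(f"P(fraction >= 50%) = {1 - p_low:.3f}")
```

Any distribution for which the second number exceeds 0.95 is consistent with the statement; the statement itself is a bound and does not fix the shape of the pdf.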

“what would the temperature would have done in the absence” has an extra “would” I think.

Curry seems confused on ‘warming in the pipeline’ since this comes about from stabilization of forcing which has not occurred.

Dumb question – if the fraction of warming attributable to human activities is 1.1, is it to be interpreted that absent human activities, there would be a cooling trend?

Thanks in advance!

Um, I’m getting a little confused about an attribution greater than 100%. Does this mean that the trend without human influences would have been ‘cooling’, i.e. that the human effects are offsetting influences that would have (before we destabilized the system) gone in the opposite direction?

[Response: exactly. – gavin ]
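The arithmetic behind an attribution above 100% is short enough to write out. This is a minimal sketch, assuming the observed rise of 0.65 °C quoted elsewhere in this thread and the 1.1 fraction from the question; the function name is hypothetical.

```python
def split_trend(observed, anthro_fraction):
    """Split an observed trend into anthropogenic and residual natural parts."""
    anthro = observed * anthro_fraction
    natural = observed - anthro  # negative when the fraction exceeds 1
    return anthro, natural


anthro, natural = split_trend(observed=0.65, anthro_fraction=1.1)
print(f"anthropogenic: {anthro:+.3f} C, natural: {natural:+.3f} C")
```

A fraction above 1 leaves a negative residual, which is the small natural cooling that the anthropogenic warming has offset.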

I think part of the issue in regards to Judith’s insistence on a binary mode of thought processes, and also in regards to asking when AGW began, is simply an artifact of Modernist thinking. It seems to be a common theme in the “skeptical” blogosphere. Skeptics want the time and date AGW started, they fail to understand why surface temperature is a poor overall measurement of added energy, they provide endless straw men by creating a false dichotomy of equal choice, and I posit that these are the ideas of people who fail to grasp the probabilistic (maybe pluralistic is a better term here) choice of postmodern reality. This fundamental flaw seems to be at least partially responsible for the disconnect seen in your post.

It’s like an upscale version of Two New Sciences, with Gavin in the role of Salviati and JC in the role of poor Simplicio. It’s not kind to Ms. Curry, but it’s a fine tutorial for the rest of us. : )

Gavin,

Thanks for this detailed take-down.

You write “I don’t know what she refers to as the ‘cooling argument’”.

I’m pretty sure that’s in response to me bringing up the same point on twitter as you do here, namely that internal variability may as well have been cooling or warming (we can’t exclude the possibility that int. var. had a cooling influence, hence also the net anthro contribution is not capped at 100%).

Her response doesn’t make a lot of sense though, since the fact that the globe warmed up doesn’t negate the argument above.

Thanks, Gavin. Typo in last sentence (remove “has”).

Gavin,

Let’s stick with the IPCC time scale. My comment concerns figure 10.5 (shown above).

The ‘Observed temperature’ rise since 1950 is given as 0.65±0.05 C, while both NAT and Internal Variability are shown as 0.0±0.1 C.

Tung and Zhou (2013) present evidence of an AMO-induced natural 60y signal. The correct way to remove this from the analysis is to compare two dates that are in phase with the AMO oscillation. Therefore the start date should be 1940 and not 1950.

[Response: The CORRECT way to do this, as Gavin alludes to, is to first remove an estimate of the forced component. See Mann & Emanuel (2006) and Mann et al (2014). -mike ]

See this graph for the result

The observed temperature rise since 1940 is then 0.45±0.05 C. So comparing this to figure 10.5 we see that the INT component should really have been 0.2±0.05 with the ANT component at 0.45±0.05. Therefore the PDF Bell curve you show for the attribution should instead be sitting at about 70%.

So it is more like 75-25
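The commenter’s split can be checked from the numbers given in the comment itself. This is only a sketch of that arithmetic (0.65 °C observed, 0.45 °C assigned to ANT, 0.2 °C assigned to INT), not an endorsement of the method, which the inline response disputes.

```python
observed = 0.65  # deg C, observed rise quoted in the comment
ant = 0.45       # deg C, commenter's anthropogenic component
internal = 0.20  # deg C, commenter's internal-variability component

ant_share = ant / observed
internal_share = internal / observed
print(f"ANT share: {ant_share:.0%}, INT share: {internal_share:.0%}")
```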

What would it take to convince you that this PDF needs to be shifted to the left? The models running hot would sure seem to qualify as one possible condition. Not clear how long / how large the discrepancy would need to be before this would be seriously considered. Are there any guidelines here?

The description here suggests that natural variation was a net negative from 1950 to 2000 and has now entered a large net negative during the hiatus. Surely the natural forcing will switch to positive at some point? With all this built up suppressed AGW energy we should expect a big bounce back in temperature, correct? When would this be expected to happen?

[Response: Attribution and sensitivity are different issues. In the present case where the (negative) aerosol forcing is one of the key uncertainties, they are basically orthogonal. To shift the pdf in any major way you would need to show that the patterns of change that were used were quite different and that the fingerprinting would be rebalanced. There is some uncertainty in the solar pattern when you start to include more interactive components, but given the small forcing, this is not going to move things far. People have tried putting in independent fingerprints for the internal variability e.g. ENSO, or AMOC variability (DelSole et al I think), but it doesn’t change much. I agree that if the natural components turn positive (as they surely will eventually) you will see an increased trend (obviously), but I can’t predict when that will occur. – gavin]

Too bad the IPCC reports are so detailed that it becomes easy for folks to cite lines out of context.

Thanks to Dr Schmidt and all the others here for a decade of explaining the science and exposing the shell games.

People have tried putting in independent fingerprints for the internal variability e.g. ENSO, or AMOC variability (DelSole et al I think), but it doesn’t change much. I agree that if the natural components turn positive (as they surely will eventually) you will see an increased trend (obviously), but I can’t predict when that will occur. – gavin] –

As far as the AMO is concerned, it is the atmospheric pressure that leads the way, and currently it is on a down slope.

http://www.vukcevic.talktalk.net/AP-SST.htm

The fingerprint’s mystery will most likely be unravelled by geologists

http://www.vukcevic.talktalk.net/EAS.htm

Thanks for the post. It’s a nice illustration of why using short time periods to cherry pick your way to the answer you want isn’t such a good idea.

An especial thanks for the references to your earlier posts at the top. I know squat about attribution studies, and I will definitely read them.

Gavin, Thanks so much for finding the time to write this line-by-line commentary. This is really helpful.

As Naomi Oreskes and Erik Conway thoroughly documented in Merchants of Doubt, these are the same amoral tactics used in the tobacco/health, acid rain, second-hand smoke, ozone depletion (etc.) denial “debates” — of either deliberately or disingenuously cherry-picking data and using scientific methods and honest appraisal of probabilities to distort science. Thus do dishonest, anti-science brokers help ruin the public’s understanding about dire public health and environmental problems. Perhaps Curry falls into the minority “naive or ill-informed” category, but regardless, her confused arrogance is inexcusable.

This comes from a realclimate post by Kyle Swanson on a 2009 paper – https://www.realclimate.org/index.php/archives/2009/07/warminginterrupted-much-ado-about-natural-variability/.

http://watertechbyrie.files.wordpress.com/2014/06/swanson-realclimate.png

The paper updated a 2007 paper to include the 1998/2002 climate shift. Several climate shifts were identified in the 20th century – around 1910, 1944, 1976 and 1998. These are of course inflection points in the global surface temperature record.

Anastasios Tsonis, of the Atmospheric Sciences Group at University of Wisconsin, Milwaukee, and colleagues used a mathematical network approach to analyse abrupt climate change on decadal timescales. Ocean and atmospheric indices – in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of climate variability. Tsonis and colleagues calculated the ‘distance’ between the indices. It was found that they would synchronise at certain times and then shift into a new state.

It is no coincidence that shifts in ocean and atmospheric indices occur at the same time as changes in the trajectory of global surface temperature. Our ‘interest is to understand – first the natural variability of climate – and then take it from there. So we were very excited when we realized a lot of changes in the past century from warmer to cooler and then back to warmer were all natural,’ Tsonis said.

We may do as Swanson does and exclude ‘dragon-kings’ (Sornette 2009) at times of climate shifts. We may average over cool (1944-1976) and warm (1977-1998) regimes and assume that the increase in temperature is entirely anthropogenic. It gives a rate of warming of some 0.07 degrees C/decade and a total rise in surface temperature of some 0.4 degrees C since 1944.

What we can’t assume is that all of the recent warming (1977 to 1998) was anthropogenic. We may use models to disaggregate the trend.

http://www.pnas.org/content/106/38/16120/F3.large.jpg

A vigorous spectrum of interdecadal internal variability presents numerous challenges to our current understanding of the climate. First, it suggests that climate models in general still have difficulty reproducing the magnitude and spatiotemporal patterns of internal variability necessary to capture the observed character of the 20th century climate trajectory. Presumably, this is due primarily to deficiencies in ocean dynamics. Moving toward higher resolution, eddy resolving oceanic models should help reduce this deficiency. Second, theoretical arguments suggest that a more variable climate is a more sensitive climate to imposed forcings (13). Viewed in this light, the lack of modeled compared to observed interdecadal variability (Fig. 2B) may indicate that current models underestimate climate sensitivity. Finally, the presence of vigorous climate variability presents significant challenges to near-term climate prediction (25, 26), leaving open the possibility of steady or even declining global mean surface temperatures over the next several decades that could present a significant empirical obstacle to the implementation of policies directed at reducing greenhouse gas emissions (27). However, global warming could likewise suddenly and without any ostensive cause accelerate due to internal variability. To paraphrase C. S. Lewis, the climate system appears wild, and may continue to hold many surprises if pressed. http://www.pnas.org/content/106/38/16120.full

Sensitivity here is a dynamic sensitivity – greatest in the region of ‘climate shifts’. Nor can we assume that the next shift will follow the 20th century pattern and be to warmer conditions – indeed it seems safer to assume not as the Sun cools from the modern grand maxima. Climate is wild but there seem broadly recurrent multiple equilibria as climate falls into one attractor basin or other.

It seems oddly tendentious to deny for instance the role of natural variability on the basis that some of the recent changes in these long standing climate patterns may be influenced by greenhouse gases. But that seems to be the post hoc rationalization du jour.

Rob Ellison says:

in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of climate variability.

If you equate ‘chaotic’ with ‘by chance’, I’d rather go with

“Nothing in nature is by chance……something appears to be chance only because of our lack of knowledge.” Spinoza

be it the natural or the anthropogenic variability, except that in the contest of two, I wouldn’t bet my shirt on the anthropo’s supremacy.

Please write clearly. Two suggestions: Put your last paragraph first, using it as an organizing principle. At least your readers will know where you are going instead of trying to figure it out by plowing through all the jargon.

Suggestion 2: If you are writing for the general public–which you are–, have an intelligent non-expert read it in front of you. Watch to see where they struggle. Maybe then you will see where the yawns begin.

I write this not to be nasty, but out of frustration. I agree that Curry is anything but intelligent in her observations. But you just bury your arguments under piles of jargon and poor organization.

JC lists her terciles highest to lowest. Doesn’t that mean your “respectively” values are in the wrong order? Ie your 99.5% goes with her >66%

[Response: I just noticed that too. I was thinking of them going left-to-right from less to more. My 99.5% does go with the >66% category. – gavin]

‘One important development since the TAR is the apparent unexpectedly large changes in tropical mean radiation flux reported by ERBS (Wielicki et al., 2002a,b). It appears to be related in part to changes in the nature of tropical clouds (Wielicki et al., 2002a), based on the smaller changes in the clear-sky component of the radiative fluxes (Wong et al., 2000; Allan and Slingo, 2002), and appears to be statistically distinct from the spatial signals associated with ENSO (Allan and Slingo, 2002; Chen et al., 2002). A recent reanalysis of the ERBS active-cavity broadband data corrects for a 20 km change in satellite altitude between 1985 and 1999 and changes in the SW filter dome (Wong et al., 2006). Based upon the revised (Edition 3_Rev1) ERBS record (Figure 3.23), outgoing LW radiation over the tropics appears to have increased by about 0.7 W/m2 while the reflected SW radiation decreased by roughly 2.1 W/m2 from the 1980s to 1990s (Table 3.5).’ AR4 WG1 3.4.4.1

Simpler – we may assume that all warming since 1944 was anthropogenic – it works out at some 0.07 degrees /decade and 0.4 degrees C in total. It may not be so.

Re: 18, Stormy… Gavin isn’t quite writing for the public, but for a rather select subset, largely with strong scientific credentials and well-versed in the topic. For this crowd, his level of synthesis and exposition is spot on, efficiently and effectively bringing us up to speed. Expanded exposition often occurs in the comment strings…

Lorenz was able to show that even for a simple set of nonlinear equations (1.1), the evolution of the solution could be changed by minute perturbations to the initial conditions, in other words, beyond a certain forecast lead time, there is no longer a single, deterministic solution and hence all forecasts must be treated as probabilistic. The fractionally dimensioned space occupied by the trajectories of the solutions of these nonlinear equations became known as the Lorenz attractor (figure 1), which suggests that nonlinear systems, such as the atmosphere, may exhibit regime-like structures that are, although fully deterministic, subject to abrupt and seemingly random change. http://rsta.royalsocietypublishing.org/content/369/1956/4751.full

It is all completely deterministic – but resists simple characterization. Deterministic chaos does imply randomness.
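The combination described in the quoted passage, a fully deterministic system whose solutions nonetheless diverge after minute perturbations, can be reproduced in a few lines. This is a minimal sketch, assuming the classic Lorenz (1963) parameter values (σ = 10, ρ = 28, β = 8/3), which the passage does not state; the fourth-order Runge-Kutta integrator, the step size and the size of the nudge are illustrative choices.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations (assumed classic parameters)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def trajectory(initial, steps=4000, dt=0.01):
    """Integrate from `initial` and return the list of visited states."""
    states = [initial]
    for _ in range(steps):
        states.append(rk4_step(states[-1], dt))
    return states


def max_separation(run_a, run_b):
    """Largest Euclidean distance between two runs at matching times."""
    return max(
        sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        for a, b in zip(run_a, run_b)
    )


base = trajectory((1.0, 1.0, 1.0))
repeat = trajectory((1.0, 1.0, 1.0))         # identical start
nudged = trajectory((1.0, 1.0, 1.0 + 1e-8))  # minute perturbation

print("same start, max separation:  ", max_separation(base, repeat))
print("nudged start, max separation:", max_separation(base, nudged))
```

Repeating the run from the same start reproduces it exactly, while the nudged run ends up far from the original on the attractor, which is why forecasts beyond a certain lead time have to be treated probabilistically.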

The US National Academy of Sciences (NAS) defined abrupt climate change as a new climate paradigm as long ago as 2002. A paradigm in the scientific sense is a theory that explains observations. A new science paradigm is one that better explains data – in this case climate data – than the old theory. The new theory says that climate change occurs as discrete jumps in the system. Climate is more like a kaleidoscope – shake it up and a new pattern emerges – than a control knob with a linear gain.

The theory of abrupt climate change is the most modern – and powerful – in climate science and has profound implications for the evolution of climate this century and beyond. A mechanical analogy might set the scene. The finger pushing the balance below can be likened to changes in greenhouse gases, solar intensity or orbital eccentricity. The climate response is internally generated – with changes in cloud, ice, dust and biology – and proceeds at a pace determined by the system itself. Thus the balance below is pushed past a point at which stage a new equilibrium spontaneously emerges. Unlike the simple system below – climate has many equilibria. The old theory of climate suggests that warming is inevitable. The new theory suggests that global warming is not guaranteed and that climate surprises are inevitable.

http://watertechbyrie.files.wordpress.com/2014/06/unstable-mechanical-analogy-fig-1-jpg1.jpg

Many simple systems exhibit abrupt change. The balance above consists of a curved track on a fulcrum. The arms are curved so that there are two stable states where a ball may rest. ‘A ball is placed on the track and is free to roll until it reaches its point of rest. This system has three equilibria denoted a, b and c in the top row of the figure. The middle equilibrium b is unstable: if the ball is displaced ever so slightly to one side or another, the displacement will accelerate until the system is in a state far from its original position. In contrast, if the ball in state a or c is displaced, the balance will merely rock a bit back and forth, and the ball will roll slightly within its cup until friction restores it to its original equilibrium.’(NAS, 2002)

In a1 the arms are displaced but not sufficiently to cause the ball to cross the balance to the other side. In a2 the balance is displaced with sufficient force to cause the ball to move to a new equilibrium state on the other arm. There is a third possibility in that the balance is hit with enough force to cause the ball to leave the track, roll off the table and under the sofa there to plot revolution with the dust balls and lost potato crisps.

In the spectrum of risk – rolling under the sofa is a possibility. It is all completely deterministic – but resists simple characterization.

In the words of Michael Ghil (2013) the ‘global climate system is composed of a number of subsystems – atmosphere, biosphere, cryosphere, hydrosphere and lithosphere – each of which has distinct characteristic times, from days and weeks to centuries and millennia. Each subsystem, moreover, has its own internal variability, all other things being constant, over a fairly broad range of time scales. These ranges overlap between one subsystem and another. The interactions between the subsystems thus give rise to climate variability on all time scales.’

The theory suggests that the system is pushed by greenhouse gas changes and warming – as well as solar intensity and Earth orbital eccentricities – past a threshold at which stage the components start to interact chaotically in multiple and changing negative and positive feedbacks – as tremendous energies cascade through powerful subsystems. Some of these changes have a regularity within broad limits and the planet responds with a broad regularity in changes of ice, cloud, Atlantic thermohaline circulation and ocean and atmospheric circulation.

Dynamic climate sensitivity implies the potential for a small push to initiate a large shift. Climate in this theory of abrupt change is an emergent property of the shift in global energies as the system settles down into a new climate state. The traditional definition of climate sensitivity as a temperature response to changes in CO2 makes sense only in periods between climate shifts – as climate changes at shifts are internally generated. Climate evolution is discontinuous at the scale of decades and longer.

In the way of true science – it suggests at least decadal predictability. The current cool Pacific Ocean state seems more likely than not to persist for 20 to 40 years from 2002. The flip side is that – beyond the next few decades – the evolution of the global mean surface temperature may hold surprises on both the warm and cold ends of the spectrum (Swanson and Tsonis, 2009).

typo … deterministic chaos does (not) imply randomness.

Another thing that Judith Curry said recently in an interview in BIGOIL dot com, that has gone viral:

Oilprice.com: ”You’ve also talked about the climate change debate creating a new literary genre. How is this ‘Cli-Fi’ phenomenon contributing to the intellectual level of the public debate and where do you see this going?”

Judith Curry: ”I am very intrigued by Cli-Fi as a way to illuminate complex aspects of the climate debate. There are several sub-genres emerging in Cli-Fi – the dominant one seems to be dystopian (e.g. scorched earth). I am personally very interested in novels that involve climate scientists dealing with dilemmas, and also in how different cultures relate to nature and the climate. I think that Cli-Fi is a rich vein to be tapped for fictional writing.”

http://oilprice.com/Interviews/The-Kardashians-and-Climate-Change-Interview-with-Judith-Curry.html

> to be tapped

Been tapped: Fallen Angels

Nicely organized and well-reasoned argument Gavin.

FYI, Curry is planning an upcoming rebuttal for Climate Etc.

I think we need Tamino to do a statistical analysis of how quickly Judith Curry has a new post on her blog, after Gavin and company do a take down of something she has just written on there, compared with how often she posts on her blog generally… Judging by the comments on both her blog and Real Climate, it appears she had a new post up only three hours after Gavin posted his take down of her! The new one of course having nothing to do with her 50/50 argument. You can just hear Judy thinking. “Hey my readers, look at my new post, and skip over the previous 50/50 one! This new one is nice and shiny!!” Tamino? Are you out there?

Thanks Gavin! I may have occasion to reference your article in a letter to the editor next week. Good timing. Obama has at least at last made a start. Congresswoman Cheri Bustos’ office asked me for the letter to the editor.

Dear Gavin Schmidt: I am glad that you responded at length. I think that side-by-side printouts and comparisons of what Prof Curry wrote and what you have written highlight a lot of the differences amongst a large number of people whose overall judgments are closer to hers or to yours. I’ll comment on only 1 of your comments:

This is also confused. “Detection” is (like attribution) a model-based exercise, starting from the idea that one can estimate the result of a counterfactual: what would the temperature have done in the absence of the drivers compared to what it would do if they were included? GCM results show clearly that the expected anthropogenic signal would start to be detectable (“come out of the noise”) sometime after 1980 (for reference, Hansen’s public statement to that effect was in 1988). There is no obvious discrepancy in spectra between the CMIP5 models and the observations, and so I am unclear why Judith finds the detection step lacking. It is interesting to note that given the variability in the models, the anthropogenic signal is now more than 5σ over what would have been expected naturally.

I agree with you that detection and attribution are inextricably bound up with models (quantitative, semiquantitative, conceptual) of what would have happened in the absence of human interventions (anthropogenic CO2 and other human interventions need somehow to be distinguished, but they are correlated over the historical record, hence hard to disentangle). I doubt that anyone can make a strong argument that we know from scientific research what would have happened in the absence of human interventions, and mathematical models are all over the place. Since the GCMs have clearly overpredicted the overall trend in global average mean temperature, and since there are other epochs where their fit to the overall trend is poor, I think that your confidence in an estimate of natural variability based on them is misplaced.

I think that the debate makes stimulating reading, and I am glad, as I said, that you wrote out your critique at such length.

There are a few things I can’t understand at all about Judith’s position:

1) The assumption she makes at the start that because warming is judged extremely likely >50% anthropogenic, it is therefore asserted by IPCC to be between 50-100%. I’ve no idea where she gets this from, and she’s done it before and Bart V called her out on this.

2) The significance of attribution in the 1910-1940 warming. Presumably if this is internal variability, then it makes it less likely, not more, that later warming was also internal variability, as reversion to the mean by cooling would be expected. If forced, then it’s irrelevant to the argument.

3) The failure to work through to a logical conclusion. Judith states “i think the most likely split between natural and anthropogenic causes to recent global warming is about 50-50”. A PDF (unless lopsided) centered at 50% anthro makes it equally likely that anthropogenic forcing is 0% and 100%. Does Judith really believe that 0.65 degC and zero degC rises are equally likely results of the changes in anthro forcing over the period 1950-2010? This seems inconceivable!

4) There are a number of places where Judith makes statements based on inability to attribute in shorter time periods than the IPCC choose to consider. Regardless as to whether she’s right in this, it’s an apples vs oranges comparison.

Have I misunderstood any of this or is she really clearly wrong on all these? I’m very wary of assuming I know better than someone as well qualified as she is.
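Point 3 can be made concrete with a symmetric pdf. This is a minimal sketch, assuming a Gaussian centered at 50% with a hypothetical spread `SD` chosen purely for illustration; the symmetry, not the particular width, is what matters.

```python
from statistics import NormalDist

SD = 0.25  # hypothetical spread, for illustration only
pdf_5050 = NormalDist(mu=0.5, sigma=SD)

# Densities at an anthropogenic share of 0% and of 100%.
density_at_0 = pdf_5050.pdf(0.0)
density_at_100 = pdf_5050.pdf(1.0)

# Probability mass beyond each of those two points.
prob_below_0 = pdf_5050.cdf(0.0)
prob_above_100 = 1.0 - pdf_5050.cdf(1.0)

print(f"density at 0%: {density_at_0:.4f}, at 100%: {density_at_100:.4f}")
print(f"P(< 0%): {prob_below_0:.4f}, P(> 100%): {prob_above_100:.4f}")
```

Under any symmetric choice, a share of 0% is exactly as likely as a share of 100%, which is the implication the comment finds hard to accept.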

Detection in this context is detection of an anthropogenic signal. Detection of warming is not particularly model dependent but has to do with observational uncertainties, or in the case of the linear trend reported in AR4, “The 100-year linear trend (1906-2005) of 0.74 [0.56 to 0.92]°C,” the statistics of a linear fit to the observations.

Predictable:

This response is hard to stomach — as a rebuttal, Curry said that

So Hansen called it right in 1980 without having any signal to work with?

[Response: Hansen was in 1988, and there was sufficient signal then – though that was disputed strongly at the time. – gavin]

All of this is why Joe Romm refers to Curry as a “confusionist”.

There’s a difference between being truly confused, and deliberately trying to create confusion.

Here’s the problem:

> Gavin isn’t quite writing for the public, but for a rather select subset,

> largely with strong scientific credentials and well-versed in the topic.

Dr. Curry isn’t.

Would someone do a quick survey of climate scientists, asking what they think of these points?

Then, a comparable survey of average-voter types, asking what they think of “I can’t find a single point that he has scored” as a rebuttal — was that convincing, for them? Probably, if they know what they want to hear, eh?

Who wins? The people who cast the votes.

My point is: It doesn’t matter what the scientists think, until the bodies stack up.

Cassandra’s generally proven right after it’s way too late to take corrective action.

Nobody much bemoans the damage due to delays managing lead in gasoline, lead in paint, asbestos in everything, tobacco, antibiotics in agriculture, mercury going from coal to fish to fetus. Yeah, a few public health people look and say, how can we consistently be so stupid for so long about problems that are so clearly understood? Who profits from the delays, the most deadly form of denial?

Those aren’t counted as profits, although the delays managing all those problems have been quite profitable for some. They’re accounted as sunk costs, buried bodies, dead past, and political history — how we’ve ignored those problems for many years.

Dr. Curry is writing advocacy science, and succeeding at it.

The point of advocacy science isn’t to do science — it’s to give policymakers a reference they can wave and assert as sufficient reason for doing what they want to do.

I have considerable interest in attribution, since it is what I do for a living. I must say, having read this post and all the ones linked to it including the IPCC chapters, that I do not recognize some of the claims as being part of attribution as applied by practitioners. Specifically, you claim that both attribution and detection are necessarily model based. The terms “attribution” and “detection” apply to phenomena that either occurred in the past or continue to occur, they do not refer to predictions about the future. Attribution and detection are evidence-based. The logic structures associated with evidence are fundamentally different than the logic structures associated with predictive modelling. In your first post from 2010 you further elaborate that:

“The overriding requirement however is that the model must be predictive. It can’t just be a fit to the observations.”

When performing an objective attribution study, the logic structure used to track progress towards resolution is fitted as precisely as possible to observations. That is how everyone else does it using long-established methods, anyway. Modelling is generally shunned in attribution in favor of observation, but I do agree that climate science must turn to modelling when necessary, and that the statements in the 2010 post about using a lab are quite accurate and insightful. But what I have read suggests to me that 1) for some reason climate scientists eschewed established methods for attribution in favor of a newly invented one, 2) overly relied upon expert judgement in favor of direct refutation of alternatives, and 3) ended up steering a tortuous but narrow path through what should have been a much broader logic tree resolution. Are there specific reasons why climate scientists chose not to use established methods for attribution? I cannot find the answer to that question in what I have read.

[Response: The logic of attribution in the climate sense was discussed in an earlier post. It isn’t so different to what you are talking about, other than the climate is a continuous system (i.e. not a function of discrete and exclusive causes). You do need a model though (which can be statistical, heuristic, or physics-based like a GCM) – observations on their own are not sufficient. – gavin]

Well, I’ve read Gavin’s piece twice (now thrice), I can’t find a single point that he has scored with respect to my main arguments.

If history is any guide, “main arguments” are even now shape-shifting so as to become invisible in any further discussion, following a rhetorical process that is virtually impossible to trace on a sentence-by-sentence basis but which will inevitably arrive at “I’m not sure what Gavin is talking about.”

34, SecularAnimist: There’s a difference between being truly confused, and deliberately trying to create confusion.

Yes. Is someone deliberately trying to create confusion, and could you show it with some exact quotes? My reading of Gavin Schmidt and Judith Curry is that neither is or has been trying to create confusion. I think that Prof Curry is correct that Dr. Schmidt gives more weight to the GCM output than she does; on this point, my view has come closer to Prof Curry’s. I do not see that either of them is deliberately trying to create confusion.

The GCMs are admirable accomplishments, embodying a great range of knowledge in computable form. But all of the inputs are approximations (parameter estimates, equations, numerical methods), and the output to date shows that they have made bad predictions about “out of sample” data — the trend since they were published. It’s possible that in the long term the Earth mean surface temperature will evolve much more as predicted by the GCM mean (or some other well-supported summary of the GCMs, or even one that has best accuracy among all of them), but to date nothing like that has been demonstrated. Until then, any claim based on GCM output can’t reasonably be said to “debunk” any other claim that is not based on GCM output.

I think what I wrote above is literally true: if you print out both long posts (or open them in side-by-side windows) you can see pretty clearly where the authors disagree.

From a high level I guess what this PDF is telling me is:

The human contribution to the recent warming is as likely to be 170% (with a -70% natural variation cooling component) as it is to be a 50/50 split between natural and AGW contribution?

With the recent hiatus it would seem to me that the upper end (170%) possibility becomes less and less likely. There would have to be some pretty powerful negative forces at play to hold down an extra 70% warming over 50 years and now suppressing it even harder during the pause.

Empirically I would say the longer and further the observations stay below the projections, the more the PDF should shift leftward.

Otherwise these powerful negative forces need to be identified and measured somehow? What could possibly be applying a -70% forcing over 60 years?
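The 170% versus 50/50 comparison in this comment follows from the symmetry of the distribution about its peak. Here is a minimal sketch, assuming the PDF is Gaussian and peaks at 110% as the figure caption says. The width `SD` is a placeholder chosen for illustration, not a value read off the figure; the equality holds for any width.

```python
import math

def normal_pdf(x, mean, sd):
    """Density of a normal distribution at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

PEAK = 110.0  # percent; peak of the PDF as described in the post
SD = 30.0     # percent; hypothetical width, for illustration only

low = normal_pdf(50.0, PEAK, SD)    # 50/50 split
high = normal_pdf(170.0, PEAK, SD)  # 170% human, -70% natural

# Both points sit 60 percentage points from the peak, so the densities match.
print(low, high)
```

Equal density at the two points says nothing about either being likely: under this assumption both sit well out in the tails relative to the peak.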

Oh dear, that cartoon at the end of Curry’s response says a lot about her.

I can’t help it. Every time I think of JC, Cersei Lannister springs to mind. Do you think they could be related? Sample quote: ‘When you play the game of thrones, you win or you die. There is no middle ground.’ or perhaps: “Someday, you’ll sit on the throne, and the truth will be what you make it.”

Just a segment of a comment I left at JC’s blog that is relevant to the comment above that Gavin wrote (quoting Judith’s response) and Matt Skaggs. Since I was responding to a comment, some context might be lost…

…the fundamental point here is that there can be no “planet climate model” and “planet observations” when talking about attribution. The two have to be married in some way. This idea of simply looking at data sounds sciency to the uninitiated, but without a coherent theory to link aspects of observed OHC, stratospheric cooling, vertical T profiles, warming patterns, etc, then you’re left with no story to tell and just a bunch of numbers on a computer that are only mildly interesting. In fact, there are several studies that do try to think about attribution from an observations-only perspective; in general, what they gain from avoiding assumptions about the model’s veracity in simulating the shape and timing of the expected responses, they lose more from making more substantial assumptions, many of which are crucial to JC’s concerns, such as how to separate forced+internal components or the timescale of response. Relaxing some of these assumptions can be done, but then a ‘model’ is being constructed implicitly.

The other point is that attribution studies evaluate the extent to which patterns of model response to external forcing (i.e., fingerprints) explain climate change in *observations.* Indeed, possible errors in the amplitudes of the external forcing and a model’s response are accounted for by scaling the signal patterns to best match observations, and thus the robustness of the IPCC conclusion is not slaved to uncertainties in aerosol forcing or sensitivity being off. The possibility of observation-model mismatch due to internal variability must also be accounted for…so in fact, attribution studies sample the range of possible forcings/responses even more completely than a climate model does. Fundamentally, a number of physically plausible hypotheses about what else might be causing the 1950-present warming signal are being evaluated. Appeals to unknown unknowns to create the spatio-temporal patterns of GHGs, and to cancel out the radiative effects of what we know to be important, seem like much more of a stretch than the robustness of current methods.
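The idea of "scaling the signal patterns to best match observations" can be sketched as a regression of observations onto model-simulated fingerprints. This is a toy illustration only: the two patterns, the noise level, and the "true" scaling factors are all made up, and it uses ordinary least squares, whereas published attribution studies use more careful variants that account for the structure of internal variability.

```python
import math
import random

random.seed(0)
n = 60  # hypothetical number of years
t = [i / (n - 1) for i in range(n)]

# Hypothetical model-simulated response patterns ("fingerprints").
ghg = [0.8 * ti for ti in t]                      # smooth warming signal
other = [-0.2 * math.sin(6.0 * ti) for ti in t]   # differently shaped signal

# Synthetic "observations": the real response is 1.2x and 0.7x what the
# model simulated, plus noise standing in for internal variability.
TRUE_SCALING = (1.2, 0.7)
obs = [TRUE_SCALING[0] * g + TRUE_SCALING[1] * o + random.gauss(0.0, 0.02)
       for g, o in zip(ghg, other)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Solve the 2x2 normal equations for obs ~ b1 * ghg + b2 * other.
a11, a12, a22 = dot(ghg, ghg), dot(ghg, other), dot(other, other)
r1, r2 = dot(ghg, obs), dot(other, obs)
det = a11 * a22 - a12 * a12
b1 = (r1 * a22 - r2 * a12) / det
b2 = (a11 * r2 - a12 * r1) / det

print(b1, b2)  # estimated scaling factors for each fingerprint
```

The point of the sketch is that the estimated scaling factors come from the observations, so an error in the model's simulated amplitude shows up as a factor different from 1 rather than as a wrong attribution.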

Of course, the models might be doing everything wrong- aerosols might not locally cool and have a distinct pattern in space/time, CO2 might not cool the stratosphere, and solar might have a completely different fingerprint on vertical temperature profiles. There are a number of reasons to doubt that the models are not useful in this respect, but since we’re making things up then there’s no point in defending what models seem to do well. Phlogiston might be the unifying theory we need. It is easy to make claims that the model internal variability is way off and can simultaneously lead to observed patterns (e.g., upper ocean heat content anomalies, tropospheric warming, etc) but this is not a serious criticism until there’s something more that has been demonstrated. It is also easy to hand-wave about “multi-decadal variability” (that is fully acknowledged to exist in the real world). Regardless, the question of why every scientist doesn’t think the way she does seems self-evident at this point.

I leave with this gem:

################

“From what I read on twitter, most serious scientists seem to think Gavin makes points that make you look foolish.”

curryja | August 28, 2014 at 1:14 pm |

“yeah, scientists like Michael Mann and Chris Colose. Almost as scientific as a Cook/Lewandowsky survey. ”

##########################

I never did anything to be in the same conversation as what Mike has accomplished, but I’ll take what I can get :-) I’m at least honored to be disliked by the same person.

Matthew R. Marler wrote: “Is someone deliberately trying to create confusion, and could you show it with some exact quotes?”

Judith Curry’s entire response to Gavin Schmidt’s critique is an exercise in obfuscation, misdirection and evasion.

Which is typical of Curry’s consistently “confused” ruminations on global warming and climate change. Somehow, even though numerous climate scientists have endeavored at length, with great patience, to help her clear up her various “misunderstandings” of the science, she has managed to remain steadfastly confused, and always in the direction of finding nothing worth worrying about — and certainly nothing worth DOING anything about. And in spite of having her errors repeatedly and clearly pointed out to her, she persists in purveying her “confusion” to an audience of admiring deniers.

Chris Colose wrote:

“I’m at least honored to be disliked by the same person.”

Indeed.

Dr Curry receives good scientific criticism as a personal affront, while simultaneously supposing that her distribution of bad scientific criticism is her moral imperative.

It’s so pathetic that it’s actually funny.

If you irritate Dr Curry, you are not only in good company, but you must also be doing something right.

Although I find the evidence compelling that we can, with high certainty, attribute almost all post-1950 warming to anthropogenic GHG emissions, with internal variability playing a minor role, I’m not sure the above discussions substantiate this conclusion as convincingly as might be hoped. It’s not that Gavin’s argument is wrong, but rather that it omits separate, independent evidence that reinforces the same conclusion from a very different angle.

The arguments made above, and disputed by Judith Curry, are based on GCM simulations. In my view, an even stronger argument is derived from a combination of observational data and a set of basic physics parameters involving the earth’s energy budget. At the risk of appearing to “shout”, I’d like to emphasize this point by stating it, bolded, on a separate line:

**The strong dominance of anthropogenic warming since 1950 can be demonstrated with high confidence without any dependence on complicated climate models.**

For those familiar with recent geophysics data, this conclusion will come as no surprise. In fact, among those most responsible for advancing this approach to the issue, Isaac Held has made the point repeatedly, most recently in an AGU meeting attended by Dr. Curry, and earlier in a blog post – Heat Uptake and Internal Variability. His description should be read for details, but the essence of the evidence lies in the observation that ocean heat content (OHC) has been increasing during the post-1950 warming. Since ocean heat uptake efficiency associated with surface warming is low compared with the rate of radiative restoring (increase in energy loss to space as specified by the climate feedback parameter), an important internal contribution must lead to a loss rather than a gain of ocean heat; thus the observation of OHC increase requires a dominant role for external forcing.

In my view, there is no plausible mechanism for circumventing this conclusion, which is why a certainty level of 95 percent seems reasonable. That it is not 100 percent is a necessary caution against the possibility of an obscure undetected mechanism that allows internal variability to add OHC rather than subtract it in conjunction with a serious overestimation of the role of GHGs (but this creates inconsistencies of its own as Held has pointed out).
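The sign argument can be illustrated with a zero-dimensional energy balance, N = F − λT, where N is the net heat uptake (which goes mostly into the ocean), F the external forcing, λ the climate feedback parameter, and T the surface warming. This is a deliberately simplified sketch, not Held's calculation, and every number below is a hypothetical placeholder.

```python
def net_heat_uptake(forcing, feedback, warming):
    """Net planetary heat uptake N = F - lambda * T, in W/m^2."""
    return forcing - feedback * warming

FEEDBACK = 1.3  # W/m^2 per K; hypothetical feedback parameter
WARMING = 0.6   # K; hypothetical surface warming

# Warming driven by external forcing: the system is still taking up heat.
forced = net_heat_uptake(forcing=1.5, feedback=FEEDBACK, warming=WARMING)

# The same warming arising internally with no forcing: the extra radiation
# to space is not balanced by anything, so the system loses heat.
internal = net_heat_uptake(forcing=0.0, feedback=FEEDBACK, warming=WARMING)

print(forced, internal)
```

With these placeholder values the forced case gives positive uptake and the unforced case gives negative uptake, which is the contrast the OHC observation is being used to test.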

To me, the principle that the IPCC attribution is GCM-independent is a game changer, and deserves more emphasis than it has received. This might also reduce the extent to which the discussion revolves around the virtues and deficiencies of GCMs.

With the recent hiatus it would seem to me that the upper end (170%) possibility becomes less and less likely.

Except – bizarrely – the certainty will actually increase. According to the PDF the AGW contribution is 110% of all warming since 1950. If the pause continues then the AGW contribution will increase as a proportion of total warming and the central value will shift rightwards.
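The arithmetic behind this reply is just a ratio: the attributed fraction is the anthropogenic warming divided by the observed warming. A small sketch with made-up numbers, assuming for illustration that the anthropogenic contribution keeps growing while the observed total stays flat:

```python
def anthropogenic_fraction(anthropogenic_warming, observed_warming):
    """Anthropogenic share of observed warming, in percent."""
    return 100.0 * anthropogenic_warming / observed_warming

# Hypothetical values in degrees C, chosen so the first case is about 110%.
now = anthropogenic_fraction(anthropogenic_warming=0.66, observed_warming=0.60)

# Later: the forced contribution has grown, the observed total has not.
later = anthropogenic_fraction(anthropogenic_warming=0.75, observed_warming=0.60)

print(now, later)
```

A growing numerator over a fixed denominator pushes the central value to the right, which is the sense in which a continued pause would raise the attributed percentage rather than lower it.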

Anastasios Tsonis, of the Atmospheric Sciences Group at University of Wisconsin, Milwaukee, and colleagues used a mathematical network approach to analyse abrupt climate change on decadal timescales. Ocean and atmospheric indices – in this case the El Niño Southern Oscillation, the Pacific Decadal Oscillation, the North Atlantic Oscillation and the North Pacific Oscillation – can be thought of as chaotic oscillators that capture the major modes of climate variability. Tsonis and colleagues calculated the ‘distance’ between the indices. It was found that they would synchronise at certain times and then shift into a new state.

It is no coincidence that shifts in ocean and atmospheric indices occur at the same time as changes in the trajectory of global surface temperature. Our ‘interest is to understand – first the natural variability of climate – and then take it from there. So we were very excited when we realized a lot of changes in the past century from warmer to cooler and then back to warmer were all natural,’ Tsonis said.

Four multi-decadal climate shifts were identified in the last century coinciding with changes in the surface temperature trajectory. Warming from 1909 to the mid 1940’s, cooling to the late 1970’s, warming to 1998 and declining since. The shifts are punctuated by extreme El Niño Southern Oscillation events. Fluctuations between La Niña and El Niño peak at these times and climate then settles into a damped oscillation. Until the next critical climate threshold – due perhaps in a decade or two if the recent past is any indication.

So the fundamental question is why 1950 and not the inflection point in global surface temperature at 1944. It makes a difference – and the difference is ENSO.

http://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/update_diagnostics/global_n+s.gif

HadCRUT4

| Year | Anomaly |
|------|---------|
| 1944 | 0.150 |
| 1950 | -0.174 |

The difference is almost exactly 50% of the ‘observation’. 1944 would seem justifiable on theoretical grounds if we are truly trying to net out warmer and cooler multidecadal regimes.
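For reference, the offset between the two quoted HadCRUT4 values can be checked directly. This sketch computes only the difference between the start years; whether that amounts to "50%" depends on the total warming figure used as the denominator, which the comment does not state and which is not supplied here.

```python
ANOMALY_1944 = 0.150   # HadCRUT4 anomaly quoted in the comment
ANOMALY_1950 = -0.174  # HadCRUT4 anomaly quoted in the comment

# How much higher the 1944 starting point sits than the 1950 one.
offset = ANOMALY_1944 - ANOMALY_1950

print(f"{offset:.3f}")  # prints 0.324
```

Note that this compares two single-year values, so it is sensitive to year-to-year noise in a way that a trend fitted over the whole period is not.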

In looking at Judy’s writing on this subject, my initial reaction is that she can’t really be that dumb, can she? But then I am left with the inescapable conclusion that her audience is the gullible, and she’s deliberately trying to deceive them, and that isn’t a palatable conclusion either. Frankly, the most charitable conclusion I can come to is that she is deceiving herself, first and foremost.

Frankly, I’ve never come away from anything Aunt Judy wrote with any new understanding. Her analysis is shallow and her logic flawed. You cannot draw scientific conclusions when you simply reject the best science available. Is it uncertain? Of course, but it is at least an edifice to build on. That is the thing about science: even if you start out with the wrong model, empirical evidence will eventually correct you, and you’ll know why you were wrong, as well. Judy’s biggest problem is that she’s afraid to be wrong, so she’s forever stuck in the limbo of “not even wrong”.

I was thinking about this paper in 1981: