Does global warming make extreme weather events worse? Here is the #1 flawed reasoning you will have seen about this question: it is the classic confusion between absence of evidence and evidence for absence of an effect of global warming on extreme weather events. Sounds complicated? It isn’t. I’ll first explain it in simple terms and then give some real-life examples.

The two most fundamental properties of extreme events are that they are rare (by definition) and highly random. These two aspects (together with limitations in the data we have) make it very hard to demonstrate any significant changes. And they make it very easy to find all sorts of statistics that do not show an effect of global warming – even if it exists and is quite large.

Would you have been fooled by this?

Imagine you’re in a sleazy, smoky pub and a stranger offers you a game of dice, for serious money. You’ve been warned and have reason to suspect they’re using a loaded dice here that rolls a six twice as often as normal. But the stranger says: “Look here, I’ll show you: this is a perfectly normal dice!” And he rolls it a dozen times. There are two sixes in those twelve trials – as you’d expect on average in a normal dice. Are you convinced all is normal?

You shouldn’t be, because this experiment is simply inconclusive. It shows no evidence for the dice being loaded, but neither does it provide real evidence against your prior suspicion that the dice is loaded. There is a good chance for this outcome even if the dice is massively loaded (i.e. with 1 in 3 chance to roll a six). On average you’d expect 4 sixes then, but 2 is not uncommon either. With normal dice, the chance to get exactly two sixes in this experiment is 30%, with the loaded dice it is 13%[i]. From twelve tries you simply don’t have enough data to tell.
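For readers who want to check these numbers, here is a minimal Python sketch of the calculation. It assumes independent rolls and a loaded dice that rolls a six with probability 1/3; the function name `prob_sixes` is made up for this illustration.

```python
from math import comb

def prob_sixes(m, k, p_six):
    """Binomial probability of exactly m sixes in k independent rolls."""
    return comb(k, m) * p_six**m * (1 - p_six)**(k - m)

fair = prob_sixes(2, 12, 1 / 6)    # normal dice
loaded = prob_sixes(2, 12, 1 / 3)  # assumed loading: a six comes up twice as often

print(f"normal dice: {fair:.0%}")
print(f"loaded dice: {loaded:.0%}")
```

Both outcomes are entirely plausible, which is why twelve rolls cannot settle the question.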

Hurricanes

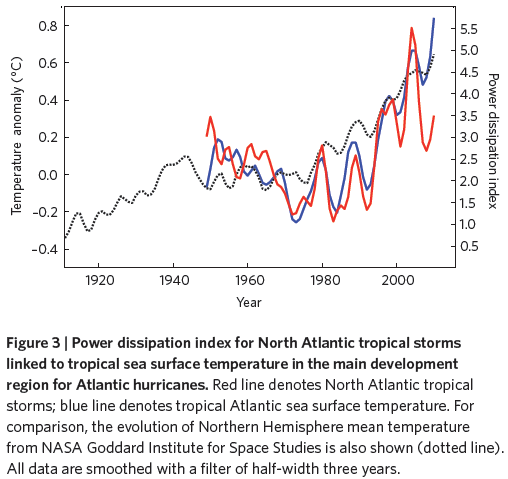

In 2005, leading hurricane expert Kerry Emanuel (MIT) published an analysis showing that the power of Atlantic hurricanes has strongly increased over the past decades, in step with temperature. His paper in the journal Nature happened to come out on the 4th of August – just weeks before hurricane Katrina struck. Critics were quick to point out that the power of hurricanes that made landfall in the US had not increased. While at first sight that might appear to be the more relevant statistic, it actually is a case like rolling the dice only twelve times: as Emanuel’s calculations showed, the number of landfalling storms is simply far too small to get a meaningful result, as those data represent “less than a tenth of a percent of the data for global hurricanes over their whole lifetimes”. Emanuel wrote at the time (and later confirmed in a study): “While we can already detect trends in data for global hurricane activity considering the whole life of each storm, we estimate that it would take at least another 50 years to detect any long-term trend in U.S. landfalling hurricane statistics, so powerful is the role of chance in these numbers.” Like with the dice this is not because the effect is small, but because it is masked by a lot of ‘noise’ in the data, spoiling the signal-to-noise ratio.

Heat records

The number of record-breaking hot months (e.g. ‘hottest July in New York’) around the world is now five times as big as it would be in an unchanging climate. This has been shown by simply counting the heat records in 150,000 series of monthly temperature data from around the globe, starting in the year 1880. Five times. For each such record that occurs just by chance, four have been added thanks to global warming.

You may be surprised (like I was at first) that the change is so big after less than 1 °C global warming – but if you do the maths, you find it is exactly as expected. In 2011, in the Proceedings of the National Academy of Sciences, we described a statistical method for calculating the expected number of monthly heat records given the observed gradual changes in climate. It turns out to be five times the number expected in a stationary climate.

Given that this change is so large, that it is just what is expected and that it can be confirmed by simple counting, you’d expect this to be uncontroversial. Not so. Our paper was attacked with astounding vitriol by Roger Pielke Jr., with repeated false allegations about our method (more on this here).

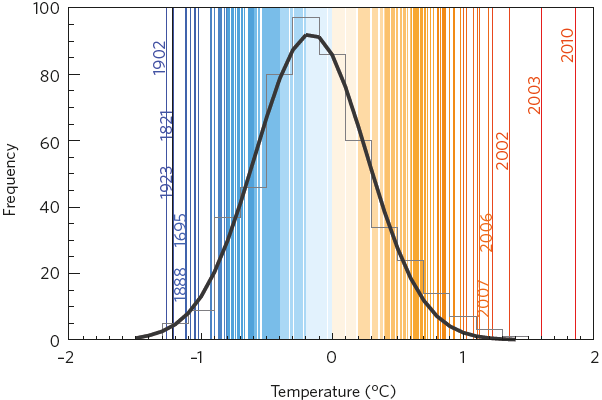

European summer temperatures for 1500–2010. Vertical lines show the temperature deviations from average of individual summers, the five coldest and the five warmest are highlighted. The grey histogram shows the distribution for the 1500–2002 period with a Gaussian fit shown in black. That 2010, 2003, 2002, 2006 and 2007 are the warmest summers on record is clearly not just random but a systematic result of a warming climate. But some invariably will rush to the media to proclaim that the 2010 heat wave was a natural phenomenon not linked to global warming. (Graph from Barriopedro et al., Science 2011.)

Heat records can teach us another subtle point. Say in your part of the world the number of new heat records has been constant during the past fifty years. So, has global warming not acted to increase their number? Wrong! In a stationary climate, the number of new heat records declines over time. (After 50 years of data, the chance that this year is the hottest is 1/50. After 100 years, this is reduced to 1/100.) So if the number has not changed, two opposing effects must have kept it constant: the natural decline, and some warming. In fact, the frequency of daily heat records has declined in most places during the past decades. But due to global warming, they have declined much less than the number of cold records, so that we now observe many more hot records than cold records. This shows how some aspects of extreme events can be increased by global warming at the same time as decreasing over time. A curve with no trend does not demonstrate that something is unaffected by global warming.
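The natural decline of records in a stationary climate is easy to put into numbers. The sketch below assumes independent years with no trend, so that year n of a series is a record with probability 1/n; the function name `expected_records` is hypothetical.

```python
def expected_records(first_year, last_year):
    """Expected number of new records between year numbers first_year and
    last_year (inclusive) of a series, assuming a stationary climate with
    independent years, so that year n is a record with probability 1/n."""
    return sum(1 / n for n in range(first_year, last_year + 1))

early = expected_records(41, 50)   # fifth decade of a series
late = expected_records(91, 100)   # tenth decade of the same series

print(f"expected records, years 41-50:  {early:.2f}")
print(f"expected records, years 91-100: {late:.2f}")
```

Under these assumptions the expected count drops from about 0.22 to about 0.10 per decade, so a record rate that merely stays constant already points to a warming influence.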

Drought

Drought is another area where it is very easy to over-interpret statistics with no significant change, as in this recent New York Times opinion piece on the serious drought in California. The argument here goes that man-made climate change has not played “any appreciable role in the current California drought”, because there is no trend in average precipitation. But that again is a rather weak argument, because drought is far more complex than just being driven by average precipitation. It has a lot to do with water stored in soils, which gets lost faster in a warmer climate due to higher evaporation rates. California has just had its warmest winter on record. And the Palmer Drought Severity Index, a standard measure for drought, does show a significant trend towards more serious drought conditions in California.

The cost of extreme weather events

If an increase in extreme weather events due to global warming is hard to prove by statistics amongst all the noise, how much harder is it to demonstrate an increase in damage cost due to global warming? Very much harder! A number of confounding socio-economic factors, which are very hard to quantify and disentangle, cloud this issue. Some factors act to increase the damage, like larger property values in harm’s way. Some act to decrease it, like more solid buildings (whether from better building codes or simply as a result of increased wealth) and better early warnings. Thus it is not surprising that the literature on this subject overall gives inconclusive results. Some studies find significant damage trends after adjusting for GDP, some don’t, tempting some pundits to play cite-what-I-like. The fact that the increase in damage cost is about as large as the increase in GDP (as recently argued at FiveThirtyEight) is certainly no strong evidence against an effect of global warming on damage cost. Like the stranger’s dozen rolls of dice in the pub, one simply cannot tell from these data.

The emphasis on questionable dollar-cost estimates distracts from the real issue of global warming’s impact on us. The European heat wave of 2003 may not have destroyed any buildings – but it is well documented that it caused about 70,000 fatalities. This is the type of event for which the probability has increased by a factor of five due to global warming – and is likely to rise to a factor of twelve over the next thirty years. Poor countries, whose inhabitants hardly contribute to global greenhouse gas emissions, are struggling to recover from “natural” disasters, like Pakistan from the 2010 floods or the Philippines and Vietnam from tropical storm Haiyan last year. The families who lost their belongings and loved ones in such events hardly register in the global dollar-cost tally.

It’s physics, stupid!

While statistical studies on extremes are plagued by signal-to-noise issues and only give unequivocal results in a few cases with good data (like for temperature extremes), we have another, more useful source of information: physics. For example, basic physics means that rising temperatures will drive sea levels up, as is in fact observed. Higher sea level to start from will clearly make a storm surge (like that of the storms Sandy and Haiyan) run up higher. By adding 1+1 we therefore know that sea-level rise is increasing the damage from storm surges – probably decades before this can be statistically proven with observational data.

There are many more physical linkages like this – reviewed in our recent paper A decade of weather extremes. A warmer atmosphere can hold more moisture, for example, which raises the risk of extreme rainfall events and the flooding they cause. Warmer sea surface temperatures drive up evaporation rates and enhance the moisture supply to tropical storms. And the latent heat of water vapor is a prime source of energy for the atmosphere. Jerry Meehl from NCAR therefore compares the effect of adding greenhouse gases to putting the weather on steroids.

Yesterday the World Meteorological Organisation published its Annual Statement on the Climate, finding that “2013 once again demonstrated the dramatic impact of droughts, heat waves, floods and tropical cyclones on people and property in all parts of the planet” and that “many of the extreme events of 2013 were consistent with what we would expect as a result of human-induced climate change.”

With good physical reasons to expect the dice are loaded, we should not fool ourselves with reassuring-looking but uninformative statistics. Some statistics show significant changes – but many are simply too noisy to show anything. It would be foolish to just play on until the loading of the dice finally becomes evident even in highly noisy statistics. By then we will have paid a high price for our complacency.

The Huffington Post has the story of the letters that Roger Pielke sent to two leading climate scientists, perceived by them as threatening, after they criticised his article: FiveThirtyEight Apologizes On Behalf Of Controversial Climate Science Writer. According to the Huffington Post, Pielke wrote to Kevin Trenberth and his bosses:

Once again, I am formally asking you for a public correction and apology. If that is not forthcoming I will be pursuing this further. More generally, in the future how about we agree to disagree over scientific topics like gentlemen?

Pielke using the word “gentlemen” struck me as particularly ironic.

How gentlemanly is it that on his blog he falsely accused us of cherry-picking the last 100 years of data rather than using the full available 130 years in our PNAS paper Increase of extreme events in a warming world, even though we clearly say in the paper that our conclusion is based on the full data series?

How gentlemanly is it that he falsely claims “Rahmstorf confirms my critique (see the thread), namely, they used 1910-2009 trends as the basis for calculating 1880-2009 exceedence probabilities,” when I have done nothing of the sort?

How gentlemanly is it that to this day, in a second update to his original article, he claims on his website: “The RC11 methodology does not make any use of data prior to 1910 insofar as the results are concerned (despite suggestions to the contrary in the paper).” This is a very serious allegation for a scientist, namely that we mislead or deceive in our paper (some colleagues have interpreted this as an allegation of scientific fraud). This allegation is completely unsubstantiated by Pielke, and of course it is wrong.

We did not respond with a threatening letter – not our style. Rather, we published a simple statistics tutorial together with our data and computer code, hoping that in this way Pielke could understand and replicate our results. But to this day we have not received any apology for his false allegations.

Our paper showed that the climatic warming observed in Moscow particularly since 1980 greatly increased the chances of breaking the previous July temperature record (set in 1938) there. We concluded:

For July temperature in Moscow, we estimate that the local warming trend has increased the number of records expected in the past decade fivefold, which implies an approximate 80% probability that the 2010 July heat record would not have occurred without climate warming.

Pielke apparently did not understand why the temperatures before 1910 hardly affect this conclusion (in fact increasing the probability from 78% to 80%), and that the linear trend from 1880 or 1910 is not a useful predictor for this probability of breaking a record. This is why we decomposed the temperature data into a slow, non-linear trend line (shown here) and a stochastic component – a standard procedure that even makes it onto the cover picture of a data analysis textbook, as well as being described in a climate time series analysis textbook. (Pielke ridicules this method as “unconventional”.)

He gentlemanly writes about our paper:

That some climate scientists are playing games in their research, perhaps to get media attention in the larger battle over climate politics, is no longer a surprise. But when they use such games to try to discredit serious research, then the climate science community has a much, much deeper problem.

His praise of “serious research” by the way refers to a paper that claimed “a primarily natural cause for the Russian heat wave” and “that it is very unlikely that warming attributable to increasing greenhouse gas concentrations contributed substantially to the magnitude of this heat wave.” (See also the graph above.)

Update (1 April):

Top hurricane expert Kerry Emanuel has now published a very good response to Pielke at FiveThirtyEight, making a number of the same points as I do above. He uses a better analogy than my dice example though, writing:

Suppose observations showed conclusively that the bear population in a particular forest had recently doubled. What would we think of someone who, knowing this, would nevertheless take no extra precautions in walking in the woods unless and until he saw a significant upward trend in the rate at which his neighbors were being mauled by bears?

The doubling of the bear population refers to the increase in hurricane power in the Atlantic which he showed in his Nature article of 2005 – an updated graph of his data is shown below, from our Nature Climate Change paper A decade of weather extremes.


[i] For the math-minded: if a dice has a probability of 1/n to roll a six (a normal dice has n=6) and you roll it k times, the probability p to find m sixes is $p = \frac{k!}{(k-m)!\,m!} \times \frac{(n-1)^{k-m}}{n^{k}}$.

“Here is the #1 flawed reasoning you will have seen about this question: it is the classic confusion between absence of evidence and evidence for absence”.

I think that we need to be very careful with this logic as it gives you an excuse to believe any relationship that you want to believe in. There is a reason that science uses the null hypothesis of ‘no relationship’ and then tries to prove that wrong beyond 95% certainty. The reason is that it’s very easy to come up with supposed relationships and we need a check on ourselves to make sure that the data actually support our supposed relationship.

I am wondering if you support the mantra “absence of evidence is not evidence of absence” when it comes to claims about cosmic rays causing global warming. I am using this example because there is a reasonable physical link between the two but the observational data does not support the link. I consider the observational evidence to be pretty damning to the supposed link but maybe I should reconsider?

“…we estimate that it would take at least another 50 years to detect any long-term trend in U.S. landfalling hurricane statistics, so powerful is the role of chance in these numbers.” Like with the dice this is not because the effect is small, but because it is masked by a lot of ‘noise’ in the data, spoiling the signal-to-noise ratio.”

To me this DOES mean the effect is small. I think it makes total sense to compare the effect relative to the natural variability in the data. Going back to cosmic rays, if there is an effect it must be tiny relative to other sources of variability given that no effect is detectable in the observational data.

[Reply: The calculation by Kerry Emanuel specifically applied to a 75% increase in hurricane power. I would not call that small, but perhaps that is a value judgment.

The key issue here is that some tests with data are highly informative, but some are not, and there is a way to tell the difference. For example, rolling the dice 120 times rather than 12 times would allow you to distinguish with high confidence between a normal dice and one that rolls twice as many sixes.

The proper framework to calculate how informative data are is Bayesian statistics. That is why I included the word “prior” in my dice example – a key term of Bayesian statistics – and I refer back to this near the end where I talk about good physical reasons to expect that global warming will increase certain types of extremes, even prior to doing any data analysis. I can’t give an intro to Bayesian statistics here (Wikipedia does a good job), but it provides a mathematical formula that allows you to calculate by how much any new data should change your prior expectations. In the example above: 12 rolls of dice should not change it much, but 120 rolls surely would. That is why a negative result is not the same as an uninformative result. Rolling the dice 5000 times without anything suspicious showing up is a negative result, but a highly informative one. You can apply the same to the data tests about a link of climate to galactic cosmic rays. My article is about people who point to uninformative statistics to draw strong conclusions which are not warranted from these data. -Stefan]
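The 12-versus-120 comparison in the reply above can be made concrete with a small Bayesian update. This is only a sketch: it assumes just two possibilities (a normal dice, or one loaded with a 1/3 chance of a six), a 50:50 prior picked purely for illustration, and a made-up function name `posterior_loaded`.

```python
def posterior_loaded(sixes, rolls, prior_loaded=0.5):
    """Posterior probability that the dice is loaded (six with probability 1/3)
    rather than normal (1/6), after seeing `sixes` sixes in `rolls` rolls.
    The binomial coefficient cancels in the ratio, so it is left out."""
    like_loaded = (1 / 3) ** sixes * (2 / 3) ** (rolls - sixes)
    like_normal = (1 / 6) ** sixes * (5 / 6) ** (rolls - sixes)
    evidence = prior_loaded * like_loaded + (1 - prior_loaded) * like_normal
    return prior_loaded * like_loaded / evidence

for rolls in (12, 120):
    sixes = rolls // 6  # exactly what a normal dice gives on average
    print(rolls, "rolls:", round(posterior_loaded(sixes, rolls), 4))
```

With these assumptions, two sixes in 12 rolls only lower the probability of a loaded dice from 0.5 to about 0.3, whereas 20 sixes in 120 rolls push it down to roughly 0.0002. The first test is weak evidence, the second is highly informative.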

Thanks Stefan! SKS has a related post on extreme weather events which includes some of the Munich Re information. Another metric which is useful in looking at this is weather related electrical grid disruptions. Evan Mills from the Lawrence Berkeley National Lab put together a presentation on this a while back: http://evanmills.lbl.gov/presentations/Mills-Grid-Disruptions-NCDC-3May2012.pdf

#1–But, Patrick, bear in mind that there is no shortage of folks out there clamoring to assert that absence of evidence IS evidence of absence, when it comes to climate-related matters. Your points are well-taken, but miss this crucial facet. Stefan is not, I think, proposing a change in scientific standards of proof, but rather debunking an ideologically-motivated fallacy prevalent in ‘blog science.’

Stefan, thanks. This wraps up a number of points in a very neat bundle, and is, I think, very clear.

Considering extreme events over the current millennium so far–including several mentioned above–I arrive at the unscientific but not unreasonable rough estimate that climate change so far has cost on the order of 100,000 premature deaths and in excess of $100 billion US. That’s big in absolute terms, but small relative to total global economic output and population. But then, that’s for just 0.7 °C warming.

The article is correct to point out that this is one of the common lines of poor reasoning used but it is not the most common fallacy used in discussing extreme weather as it pertains to climate change. That fallacy would be the fallacy of confirming instances (used on both sides): The fallacy of confirming instances is committed when (as you might guess) an arguer only points to evidence that confirms their hypothesis, ignoring disconfirming evidence and the entire data set.

On the denier side it goes like this: “it’s very cold where I am–colder than usual, which is an instance of evidence that confirms that AGW is false.” On the AGW side, the same fallacy is used during heat spells/droughts “it’s very hot where I am–hotter than usual–which is an instance of confirming evidence for AGW.”

As I’m sure many of you know, both lines of argument confuse weather for climate but the underlying fallacy is that the entire data set isn’t being considered.

[Reply: Ami, I did consider including this as an example. The statement “last winter was cold where I live, hence I doubt global warming” is just an extreme example of drawing unwarranted conclusions from one uninformative data point. -Stefan]

nice post, but it’s a die, not a dice.

Thank you for this very enlightening article. I’d pass it on, if I didn’t fear that those most in need of reading it would simply stop reading after the very first sentence, because it’s too difficult for them to wrap their heads around.

In order to understand those people who are simply yelling “It’s not true!” — with hands over their ears and eyes — it would be interesting to look at them from a psychological point of view. I would assume, very quickly, we see fear.

I seriously think none of these climate-change-deniers-lets-just-keep-destroying-our-world people actually believe themselves. Or, at least, they are very good at fooling themselves.

What would be the result of even daring to believe that the climate is changing? It would rob them of so many certainties. It would point out their responsibility and at the same time be so overwhelmingly huge and insurmountable that they would feel weak. I know I do. I can barely live with it. (Sometimes I wish I could put my head into the sand and fool myself into thinking everything’s just fine.)

But, for many, feeling weak is just not acceptable. It’s simply not an option. And in order to not feel weak when faced with the realities of climate change you need to accept your responsibility and do something. There is a great need for everybody to change our own behavior and our actions, and to engage in causing change in industries and governments.

And what is even scarier than feeling weak? Having to change.

Extremely helpful post. For Patrick, (#1), regarding your critique of “the classic confusion between absence of evidence and evidence for absence” — it may help to recall this post is about statistical evidence. This is tied up rather nicely at the end, reminding us that the evidence for tried and true physics is there, even absent the statistical evidence.

On that line, I think, is where your GCR (galactic cosmic ray) example comes up short. Yes, there are physical mechanisms to connect GCRs to aerosol formation; however, GCRs aren’t the only forcing on the system. In particular, we have a very strong reason to connect GHGs to observed warming, and multiple lines of physics and data for bracketing the magnitude of this effect, which all but relegates GCRs to the trivial-influence-at-best bin. So… no statistical connection, and physics that suggests at most a minor influence.

All the heat in the system affects all the weather. All the weather is affected by all the heat in the system. AGW adds heat to the system. There is no old heat and new heat, there is only heat, and all the heat affects all the weather. With the additional heat, there is enough heat that weather that we have never seen before can occur. However, that does not mean that the kinds of weather that we have seen before are not being affected by the additional heat from AGW.

It is not that the dice are loaded, it is that we are playing with more dice. Even if the dice are loaded, the highest one can roll from two dice is a twelve. However, with more heat in the system, we are seeing record after record being broken. This is like rolling 13s, 14s, and 16s. Yes, we are still rolling some lower numbers, but somebody has slipped more dice into the cup, and soon enough we will be rolling 19s and 25s.

[Reply: This is the classic question of whether extreme events are getting more frequent or more extreme. The dice analogy is limited here because dice have a finite range of numbers. For extreme events, in contrast, you have a probability distribution curve without such a cut-off value: the more outlandish the extreme, the less likely it is. (This part of the distribution is the “tail”.) So an “increase in extremes” can be described as an increase in probability of an extreme with a given value (say temperature), or an increase in this value for a given probability of occurrence. These two things are actually just two different ways of looking at the same thing and not two different phenomena. -Stefan]

Patrick Browm, who knows how large the effect is? That is the purpose of research. What it says is that, with the static and the number of events, it will take 50 years to have any certainty. That does not mean the effect is small.

Even for an actuary, the behaviour of a distribution at its extremes is not intuitive. As you suggested above, changing the mean by a small amount massively increases the size of a tail, as you’ve shifted much more of the distribution up. I wrote a blog post about the Australian bushfires along these lines:

http://www.actuarialeye.com/2013/10/20/extreme-weather-events-theyre-not-as-extreme-any-more/

Basically, if you only care about the extreme events, they become massively more likely even with just a small increase in the mean of a distribution. If (as I have also read) the distribution itself becomes more extreme, then you magnify the effect. In Australia, the extreme events are bushfires, which come from extreme heat and wind at the same time, and are probably more linked to temperature than cyclones. The Climate Institute here in Australia has done a fair bit of research on this topic (linked in my post above), which is probably worth wider distribution. The signal-to-noise ratio is lower for bushfires than for tropical cyclones/hurricanes.
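How strongly a shift in the mean inflates the tail can be illustrated with a few lines of Python. This sketch assumes a Gaussian distribution with unchanged spread, a threshold three standard deviations above the old mean, and a shift of one standard deviation; all three are illustrative choices, as is the function name `tail_probability`.

```python
from math import erfc, sqrt

def tail_probability(threshold, mean=0.0, sd=1.0):
    """Probability that a normally distributed value exceeds `threshold`."""
    return 0.5 * erfc((threshold - mean) / (sd * sqrt(2)))

before = tail_probability(3.0)           # a "3-sigma" event in the old climate
after = tail_probability(3.0, mean=1.0)  # same threshold, mean shifted by one sd

print(f"before: {before:.5f}, after: {after:.5f}, ratio: {after / before:.1f}")
```

Under these assumptions the event becomes roughly 17 times more likely, even though the mean has moved by only a third of the distance to the threshold.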

Thank you Stefan for your intuitive article. Some of the arguments you present would appear obvious to the logical mind, and indeed they are. One could argue successfully, though, that in some of these cases the disturbances in weather patterns, although amplified by GW, would not have been overly destructive without other considerations, some man-made and some naturally occurring.

The drought in California has been exacerbated by the demands of the farming industry, which draws water from an aquifer that’s essentially sand. Soil compaction from over-pumping and lack of rain has collapsed the aquifer beneath the San Fernando valley to the point where, if it were to rain excessively now, one would get lakes on the surface and the aquifer would not refill.

The disparate numbers you pointed out, between the noted cold events and the number of high heat events, are one of the most telling observations we have as an indicator of GW.

I’ve read several papers about the war in Syria and AGW. These papers tried to, and successfully did, put a reasonable spin on political events and policy that was plausible to an uneducated political reader: that it was politics and not the prolonged drought that caused the 75% reduction in agricultural output, and nearly that much in deaths of farm animals, and thus the war. Personally I’m not buying that; without the prolonged drought (which was predicted by NOAA) there would not have been the destruction of Syria’s food production, no matter what the political landscape was.

Now on to hurricanes: it’s reasonable to believe that all storms are subject to AGW to one degree or another. Not being that familiar with the conditions in the North Pacific, I think hurricane Haiyan (a category 6?) may fall into the category of unequivocally amplified by AGW. That said, Hurricane Sandy was not*…and there’s the rub…there just has not been enough time to extrapolate a significant pattern to these storms to include them in the solid block of “caused by AGW.”

*I could tell you why, but it’s a lot of typing. I just do not have the time right now.

Best,

Warren

When I see the deniers abusing statistics, I usually skim on without analyzing the logic of whatever scientistic fallacy they are relying on. This article has clarified matters greatly for me, but it still seems to me that those who mistake absence of evidence for evidence of absence are deliberately abusing logic for ulterior reasons that are perhaps unfathomable. Those avidly searching for any excuse for complacency can certainly find a rationale in every form of logical abuse. It is high time we simply ignore these mindless purveyors of misinformation and get down to the real work of transitioning to the Solar Age.

This result is partly a consequence of the law of large numbers and the affine transformation of a stochastic distribution. In the simplest case, if the mean increases as N then the standard deviation increases by N/sqrt(N) = sqrt(N). The SD term is the epistemic noise based on counting statistics.

This is also observed in statistical mechanics, where the Boltzmann, Fermi-Dirac and Bose-Einstein distributions all show a similar fluctuation scaling with temperature. As absolute temperature goes above zero, the fluctuations increase as a function of temperature. That’s what the physics says. It would take a very unusual situation for the variance to tighten with increasing temperature.

Take the case of the sun. Even though the sunspot activities look small relative to the sun’s output, these are huge fluctuations in local temperature. That’s because the sun is so hot to begin with.

Note the famous expression “The die is cast”. “Dice” is plural. …

Hi Guys, Thanks for your interest in our work. We went into that smokey bar and did some math on those dice. You can see it here:

http://iopscience.iop.org/1748-9326/6/1/014003/fulltext/

The math is easy, and we’d be happy to re-run with other assumptions. Or you can replicate it easily enough.

More generally, the burden of proof for those arguing about the existence of a “celestial teapot” lies with those who assert its existence, not those who can’t see it. See:

http://en.wikipedia.org/wiki/Russell's_teapot

Thanks!

Thanks Stefan for this “Bayesian update.” Here at dosbat is another calculation,

http://dosbat.blogspot.co.uk/2014/02/uk-wet-summers-post-2007-how-unusual.html

with a different method suggested in the first comment. Is this post reasonable in your view?

And now the hard one: cumulative likelihoods. How should we estimate the cumulative likelihood of a very extreme event occurring at least once within a certain number of future years?

For instance, what is the cumulative likelihood of a combination of drought and floods causing a very widespread famine before 2030? 2040? 2050?

I do not expect anyone to figure this out quickly but I think you will agree that it is worth working on.
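As a starting point only, the textbook way to combine yearly probabilities into an "at least once" probability can be sketched as follows. It assumes that each year is independent and that the annual probabilities are known, which is precisely the hard part for real events; the 2% figure, the slowly rising risk and the function name `prob_at_least_once` are all invented for illustration.

```python
from math import prod

def prob_at_least_once(annual_probs):
    """Probability that an event occurs at least once, given one probability
    per year and assuming the years are independent."""
    return 1 - prod(1 - p for p in annual_probs)

# Hypothetical numbers, for illustration only.
constant = prob_at_least_once([0.02] * 30)                          # fixed 2% per year
rising = prob_at_least_once([0.02 + 0.001 * t for t in range(30)])  # slowly rising risk

print(f"constant risk: {constant:.2f}, rising risk: {rising:.2f}")
```

Even a fixed 2% annual chance adds up to about 45% over thirty years, and any upward trend in the annual risk raises that further.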

As Jim Larsen says in comment number 5 above, the word should be “die” and not “dice.” “Die” is the singular (one die), “dice” is plural (two dice or, I suppose, two dies).

Hence, if you lose one die, that’s bad, but a pair o’ dice lost. . . that’s terrible.

Stefan,

I agree that extreme values are very difficult metrics to use. However, extremes are an example of order statistics, which can provide a fairly complete description of the distribution. Has anyone been looking at use of order statistics to discern changes?

When describing the delay in using statistical evidence as proof due to the noise, it is important to point out to many people that when you get that proof, it applies to the past as well as the time when it is found.

An analogy I like is with the Great Recession. When the crash hit and everything plummeted, we ALL knew we were in a recession. But the government office in the US that makes official statements on recessions waited a year or more to see the trends in the GDP before declaring that – we had been in a recession all that time.

I think it’s also worthwhile to point out that statistical evidence only provides correlation. Either way, you need the physics for causation and attribution.

#12–I don’t think that we can just ‘ignore’ them (unfortunately.) Affecting the social, economic and political ‘climate’ (sorry, that’s cringe-worthy) is essential to effect the transition to a low-carbon economy–and these folks are part of that mix. Can’t let the discussion get ‘swift-boated!’

That said, it’s also important to rebut with efficiency and dispatch. There is indeed substantive work to be done, and it surely is possible to waste inordinate amounts of time ‘wrestling with the pig.’

Oh my goodness! I certainly agree that continued warming will increase the frequency of a variety of extremes related to heat, sea level, precipitation, etc. and in fact, some of that is already happening. But please, please don’t repeat the absurd claim that “absence of evidence is not evidence of absence”. Of course, it’s evidence of absence – what it’s not is PROOF of absence. It doesn’t take a huge amount of Bayesian inference to encounter innumerable examples of absence of evidence providing evidence of absence – in criminal trials as well as everyday life. Even in the examples given, it’s easy to see how the results of the dice roll provided evidence (not PROOF) for the fairness of the dice by shifting the probabilities away from those expected with dice that yield six twice as often as fair dice.
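To put numbers on how far twelve rolls should move a suspicion, here is a small Bayes' rule sketch using the dice from the post (a six with probability 1/6 if fair, 1/3 if loaded). The prior values are arbitrary choices for illustration, and the function names are made up for this example.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def posterior_loaded(prior_loaded, k=2, n=12, p_fair=1 / 6, p_loaded=1 / 3):
    """Posterior probability that the die is loaded after seeing k sixes."""
    like_fair = binom_pmf(k, n, p_fair)
    like_loaded = binom_pmf(k, n, p_loaded)
    numerator = prior_loaded * like_loaded
    return numerator / (numerator + (1 - prior_loaded) * like_fair)


for prior in (0.5, 0.9):  # assumed prior suspicion that the die is loaded
    print(f"prior {prior:.0%} -> posterior {posterior_loaded(prior):.0%}")
```

With a 50% prior, two sixes in twelve rolls lowers the probability of a loaded die to about 30%; with a 90% prior it stays near 79%. So the rolls do count as evidence for fairness, but weak evidence, which is compatible with calling the experiment inconclusive.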

Stefan, it’s an interesting analogy, but I’m not sure I’m convinced. Patrick Brown’s post (#1 above) on the null hypothesis is a very good one, particularly as it relates to your claim that “physics” allows us to “know” that these things will happen in the future.

Essentially, I think your physics examples greatly simplify what is an extraordinarily complex system. For instance, “basic physics” tells us that aerosol emissions will – all things being equal – cool the planet, but that doesn’t let me leap to the conclusion that our planet is getting cooler; the opposite is in fact the case. Aerosols are but one among many “basic physics” processes occurring at the same time, and the net result of all of these processes working together is what translates into actual effects we can see taking place in real life.

Let’s take your hurricane example. I have no disagreement whatsoever with the physical processes outlined by Kerry Emanuel, but you can’t simply leap to the conclusion that it means we’re in for greater hurricane damage in the US because of this. Climate change also has the ability to affect ocean currents, wind patterns, and probably a dozen other factors that determine the size and strength of these storms. Heck, some still believe climate change could stop the Gulf Stream; if that happens, how many hurricanes would hit the US?

As you are well aware, this is a significant reason why the climate models have to be as complicated as they are; “basic physics” in a vacuum can’t always get us to the right answer.

Starting with a null hypothesis and demonstrating statistical significance is far from perfect, but it prevents people (including those on the other side) from citing “basic physics” as proof something will happen in our complex world regardless of what the actual data say. You may well be right that many of the results we’re seeing are because the dice are currently loaded against the science, but in my opinion, that kind of conclusion is simply not scientific.

While waiting for my earlier comment to appear, I want to apologize for having used the unnecessary adjective “absurd” to characterize the claim that absence of evidence is not evidence of absence. The claim is not correct, but it’s understandable, given the ease of confusing evidence with proof. There was no need for the excessively critical word.

As long as we’re talking about extreme weather events and attribution… although Kerry Emanuel is usually the go-to guy for the study of increasing tropical cyclone intensity, his 2005 and 2011 (linked to above by Stefan) papers being the most cited, there is a limitation of scope in that only the North Atlantic basin is covered by these papers, AFAIK.

Admittedly, the data from other ocean basins is much more patchy. However, I came across a paper last year that the rabbit had posted a link to in the aftermath of Haiyan, and found it quite compelling as well as easily accessible to this non-scientist:

The increasing intensity of the strongest tropical cyclones

The authors used a technique called quantile regression to overcome the patchiness of the data, and found:

It’s no slam dunk by any means:

But that’s already 6 years ago, and there will be more studies of this nature, no doubt. And data quality/coverage also improves over time. Not one to steal the rabbit’s thunder, here’s a link to the original post for context:

Narderev Sano at the Warsaw Conference

“With normal dice, the chance to get exactly two sixes in this experiment is 30%, with the loaded dice it is 13%”

At the risk of dropping my dunce cap at your feet, shouldn’t the second percentage be higher than the first? It reads like it’s less likely to roll a six with dice loaded to prefer sixes.

[Response: The loaded die gives more sixes than the fair one (the expected value is 4 in 12 throws), but it is less likely to give *exactly* one or two sixes. It is much more likely to give 4 or more. Think of a distribution being shifted to higher values. – gavin]
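The shift described in the response can be seen by tabulating both distributions side by side. This is a minimal sketch using the binomial formula with the post's two dice (1/6 and 1/3 chance of a six); the helper name is made up for this example.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of exactly k sixes in n rolls when each roll has chance p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


N_ROLLS = 12
print("sixes   fair  loaded")
for k in range(N_ROLLS + 1):
    fair = binom_pmf(k, N_ROLLS, 1 / 6)
    loaded = binom_pmf(k, N_ROLLS, 1 / 3)
    print(f"{k:5d}  {fair:5.3f}  {loaded:6.3f}")

# Chance of four or more sixes under each die.
four_plus_fair = sum(binom_pmf(k, N_ROLLS, 1 / 6) for k in range(4, N_ROLLS + 1))
four_plus_loaded = sum(binom_pmf(k, N_ROLLS, 1 / 3) for k in range(4, N_ROLLS + 1))
print(f"P(4 or more): fair {four_plus_fair:.2f}, loaded {four_plus_loaded:.2f}")
```

The loaded column is smaller than the fair column for zero to two sixes and larger from three upward, which is the whole distribution sliding to higher counts.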

> more studies

That one http://www.nature.com/nature/journal/v455/n7209/abs/nature07234.html

has been cited by almost 400 since 2008

It’s interesting that six and seven years ago I used the same reliance on physics, logic and common sense to say the climate was changing overall much faster than was thought at the time. I said the physics paired with the changes we were seeing, changes the literature said shouldn’t have been happening yet, *had* to indicate a higher sensitivity. Had to.

I was told repeatedly I had to prove it, a clear impossibility given the lag between current conditions and scientific process. Glad to see at least one scientist is getting more comfortable with saying we know things *must be* happening even when we can’t yet provide the evidence, let alone proof.

As things move faster and faster and we fall further and further behind the climate change curve, being comfortable with knowing, as opposed to direct evidence and proof, will be ever more critical because policy and action will increasingly proceed much faster than evidence collection can be done.

Get comfortable with uncertainty, ladies and gents.

Wait, isn’t this really a case of hundreds of rolls of the dice and picking pattern clusters? Hundreds of rolls will undoubtedly have clusters of highs and lows. We have thousands of thermometers. California warmth and polar vortex cold are connected by random weather, not by a cluster of temperature. Similarly, Sandy and storm surge are affected by sea level rise but high tide is much more of an issue as is the angle it hit. The ongoing nor’easter is more powerful than Sandy but sea level rise doesn’t appear to be a factor. The drought in California is anthropogenic but mostly farming and population. Really the metric for damage should be against non-weather related incidents vs. weather related ones. Tsunamis and earthquakes would be a random metric to compare to hurricanes. Japan is fortunate enough to have all three. The tsunami destroyed a nuclear reactor. That doesn’t mean tsunamis are getting more powerful. That was not possible 50-100 years ago. Really, though, this statistics problem is one of many rolls and it’s so extensive that finding 3 sigma events is probable if you don’t have a good control for spatial variation. A flood here, a drought there, hurricane here, hurricane there, etc. is a poor argument that those events are statistically significant or related.
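The "many rolls" point can be made concrete with a small calculation. This sketch assumes normally distributed, fully independent series, which real station records are not (neighbouring stations are correlated), so it overstates the effective number of rolls; the function names are made up for this example.

```python
from math import erfc, sqrt


def two_sided_tail(n_sigma):
    """Chance that a single normal variate lies more than n_sigma from the mean."""
    return erfc(n_sigma / sqrt(2))


def prob_any_exceed(n_series, n_sigma=3.0):
    """Chance that at least one of n_series independent variates exceeds n_sigma."""
    return 1.0 - (1.0 - two_sided_tail(n_sigma)) ** n_series


for n in (1, 100, 1000):
    print(f"{n:5d} independent series: P(at least one 3-sigma) = {prob_any_exceed(n):.3f}")
```

A single series shows a 3 sigma excursion about 0.27% of the time, but with a thousand independent series at least one is nearly certain. Whether an observed cluster is surprising therefore depends on how many places were searched.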

David C,

I agree with some of your points but, regarding null hypothesis significance testing, we’re talking about datasets which we believe, a priori, will not demonstrate significance according to standard detection methods even in the presence of a trend.

When we expect the null hypothesis will not be rejected even if it’s wrong, what conclusions can be drawn from a result which fails to reject the null? What’s the point in such a test?
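One way to quantify "will not be rejected even if it's wrong" is the power of the test. The sketch below uses the dice from the post and a one-sided test at the conventional 5% level (my choice of test and level, not anything taken from the papers discussed): find the smallest count of sixes that a fair die would reach at most 5% of the time, then ask how often the loaded die reaches it.

```python
from math import comb


def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(c, n, p):
    """P(number of sixes >= c) in n rolls."""
    return sum(binom_pmf(k, n, p) for k in range(c, n + 1))


def critical_count(n, p_null, alpha=0.05):
    """Smallest count whose upper-tail probability under the null is <= alpha."""
    for c in range(n + 2):
        if upper_tail(c, n, p_null) <= alpha:
            return c


def power(n, p_null=1 / 6, p_alt=1 / 3, alpha=0.05):
    """Chance of rejecting 'fair' when the die really is loaded."""
    return upper_tail(critical_count(n, p_null, alpha), n, p_alt)


for n in (12, 60, 120):
    print(f"{n:4d} rolls: reject at >= {critical_count(n, 1 / 6)} sixes, power = {power(n):.2f}")
```

With twelve rolls the test needs five or more sixes to reject fairness, and a die loaded two to one produces that only about 37% of the time. A failure to reject therefore says little with so few rolls; with 120 rolls the same test catches the loaded die almost every time.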

How about a bit of discussion on the next extreme weather event coming to theaters near you

the 2014 El Nino!

Paul S.,

I actually think the IPCC does a very good job of this with its “confidence” (“evidence” and “agreement”) and “likelihood” ratings. “Extremely Likely” in this parlance generally matches the statistical significance test. To your question, if you have a physical process that reasonably describes an event and can also see a trend in the data (say, with 80% certainty), I think that’s something worth discussing, and the IPCC assessments often do. What I reacted to in Stefan’s post is his claim that “basic physics” can still let you “know” something will occur when you don’t see any trend at all (i.e., the loaded dice example).

In my mind, AR5 also got this right when it stated – for the most part – “limited”/ “lack of evidence” and “low confidence” in extreme trends for floods on a global scale, hail/thunderstorms, tropical cyclones, drought/dryness on a global scale, and trends for both frequency and intensity of hurricanes. We do know that there are trends in temperature extremes and precipitation extremes (which are backed solidly by physics in a warming climate), but for the other metrics we don’t really see trends at all. That doesn’t mean these things won’t happen – it could simply be coincidence with the dice loaded against us – but every year that goes by without a trend makes that less and less likely; there are probably other physical processes working to counteract those we’ve identified.

There is a tendency on our side to ascribe every possible bad thing that happens to global warming (though not as much as the other side does whenever it’s cold or snows) regardless of what the science says. As much as we know about “basic physics,” I would still like to see at least some kind of a trend borne out in actual data before we can confidently “know” they will ultimately lead to the effect we’re claiming in the real world.

Paul S.,

I actually think the IPCC does a very good job of describing what conclusions can be drawn with its “confidence” (“evidence” and “agreement”) and “likelihood” metrics. “Extremely Likely” in this parlance is loosely associated with statistical significance (“95-100%”), but the IPCC discusses many other confidence and likelihood levels in AR5. To answer your question, if one has a physical process that reasonably describes what should happen in the real world coupled with a trend (say, to the 80% significance level), I think it’s definitely something worth discussing, especially if we don’t have the data to reach 95% significance. Where I disagree with Stefan is that I don’t think that with “basic physics” you can “know” something will happen when you don’t see any trend at all (as in his loaded dice example).

I think the IPCC also got it right when discussing extremes in AR5. Although there are definite trends (and physical processes to explain them) in temperature and precipitation extremes, the IPCC in AR5 WG1 Chapter 2 states a “lack of evidence” and/or “low confidence” in trends for hurricane intensity/frequency, small-scale severe weather (hail/thunderstorms/tornados), and both drought and floods on a global scale (though drought and flood extremes will almost certainly change on a local level). That doesn’t mean these extreme events won’t happen more frequently – the dice could be loaded against us – but it doesn’t give us any confidence to “know” that they will either.

Roger Pielke Jr – It looks to me that your paper essentially finds the same results as Kerry Emanuel’s which was already highlighted in the post.

In Discussion, Emanuel’s paper states:

‘We caution that the question of when a statistically robust trend can be detected in damage time series should not be confused with the question of when climate-induced changes in damage become a significant consideration…

‘Thus, if climate change effects are anticipated, or detected in basin-wide storm statistics, sensible policy decisions should depend on the projected overall shift in the probability of damage rather than on a high-threshold criterion for trend emergence.’

This seems to get to the heart of the matter so do you agree with this principle?

Roger Pielke’s reference to Russell’s “celestial teapot” is the kind of misdirection I’ve come to expect from him.

The example is irrelevant. Not only is there no evidence for such a teapot, there is no scientific reason to expect it either; in fact there’s every scientific reason to expect it doesn’t exist. It’s the combination of lack of evidence, lack of reason, and a priori extreme implausibility that drives home Russell’s point, justifying not just the burden of proof, but a heavy one.

Regarding increase in the frequency and extremity of extreme weather events, they are a priori very plausible and there is scientific reason to expect them. While we are well advised to be cautious in claiming detection, we are even better advised to distrust those who claim, or (as Pielke so often does) do all they can to insinuate that a lack of detection constitutes detection of a lack, while what’s really lacking is the guts to say anything they can be pinned down to.

There’s even evidence for changes in extreme weather. In many cases the evidence isn’t strong enough to reach the de facto standard of frequentist statistical significance (like getting 4 sixes in 12 rolls, to modify Stefan’s original example), but it still constitutes evidence. Stefan’s appeal to a Bayesian statistical approach is wise — not only does it put such evidence in proper perspective, it enables us to make good (quantitative) use of prior knowledge. Perhaps that’s why even long-time frequentists like me have come to recognize the essential advantages of Bayesian statistics.

Re: RPJr comment #15 and tamino # 34 Eli has a note

http://rabett.blogspot.com/2014/03/might-help-more-if-link-supports-your.html

What does Bayesian data analysis have to do with political science?

[Reply: By the way – the cover image of that Gelman et al. book “Bayesian Data Analysis” has a nice example of decomposing data (in this case the number of births) into a “fast non-periodic component” and a “slow trend”. The latter is what we called “non-linear trend” in our analysis of the Moscow temperature record, to make sure it cannot be confused with a linear trend. Someone did nevertheless – but as much as we try to be clear in our writing, it will never be sufficient with people who want to misunderstand. -Stefan]

On basic physics and extreme rainfall…

An example from engineering hydrology is the method used to estimate very extreme rainfall – the so-called probable maximum precipitation. For short durations (up to about 6 hours) common methods are based on the old US thunderstorm model, and have as their key parameter a thing called extreme 24 hour persisting dew point. That’s a measure of the maximum amount of water that the relevant chunk of atmosphere can physically hold.

On a planet that is mostly covered with water (about 71%), it’s no surprise that as temperature rises, in most places so does extreme dew point, and therefore also extreme rainfall. This is not just theoretical. It directly affects numbers used to design real things, and their resulting cost.
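To give a feel for how strongly moisture depends on dew point, here is a small sketch. It is not the probable maximum precipitation method itself; it only evaluates Bolton's (1980) empirical approximation for saturation vapor pressure, which I am swapping in as an illustration. The vapor pressure of air equals the saturation value at its dew point, so the function shows how much more water vapor a one-degree-higher dew point implies. The dew point values are arbitrary examples.

```python
from math import exp


def saturation_vapor_pressure_hpa(temp_c):
    """Bolton (1980) approximation; temperature in deg C, result in hPa."""
    return 6.112 * exp(17.67 * temp_c / (temp_c + 243.5))


for dew_point in (20.0, 24.0, 28.0):  # example dew points in deg C
    base = saturation_vapor_pressure_hpa(dew_point)
    warmer = saturation_vapor_pressure_hpa(dew_point + 1.0)
    increase = 100.0 * (warmer / base - 1.0)
    print(f"dew point {dew_point:.0f} C: {base:.1f} hPa, +1 C gives +{increase:.1f}%")
```

The increase comes out at roughly 6% per degree at these temperatures, so a design dew point that creeps up by a degree or two translates into a noticeably larger design moisture load.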

Regarding the relation between extreme rainfall and mean global temperature, for me it is quite clear …

In many places the same thing happens as in Spain: on the Mediterranean coast, maximum rainfall has always occurred in late summer and autumn, just when the sea water is warmest and the atmosphere is very warm too.

On the Atlantic coast no such rainfall increase happens: neither the water nor the atmosphere gets as warm …

When dealing with public perceptions, KISS should be the mantra. Keep it Simple Stupid. The vast majority of people have no feel for statistical issues. There are several things that are well proven and simple to understand – for example, global temperature increase, sea level rise, polar ice cover, glacier retreat, and snow cover. These should be emphasized exclusively, outside of scientific journals. The latter three are easy to show with pictures.

Talking to the public about extremes is confusing and not helpful. Information about extreme temperatures adds almost nothing to information about trends in global temperature. Moreover, talking about extremes might encourage the natural tendency to focus on immediate knowledge. Here on the East Coast, it was very cold this winter and hurricane activity was very low last year. That kind of thing can lead people to be skeptical about global warming if they are told they are to expect more extreme heat waves and more hurricanes.

Again, don’t muddy the message.

What would Yes Minister make of all this?

Minister: Of course the warming could just be natural?

Humphrey: No no no Minister don’t use the word natural … Human caused!

Minister: Well say it was natural just for argument’s sake, wouldn’t the natural slight warming also cause extreme reactions, sorry I mean slightly more extreme weather, or not as the case may be?

Humphrey: Minister, that is a logical trap, I mean it is extremely likely to be logical but also a trap, absence of evidence and evidence for absence is the mantra, now practice, practice, practice. Remember the Rumsfeld known knowns talk we had.

This situation is very different from teapots in deep space. We have reasons to believe that some extreme events will increase in frequency or intensity due to climate change (e.g. based on first principles or model simulations). Some of these effects cannot yet be detected statistically, but from this it does not follow that an increase does not exist, and it does not follow that such an increase is unimportant. For example, an extra hurricane or an increase in wind speed in some hurricanes can have substantial impact on human lives.

We should beware of thinking that an effect which is “insignificant” statistically is necessarily “insignificant” ethically (this is an equivocation, a logical fallacy due to the use of words with different meaning). In some cases even high increases in risk can be statistically indetectable. See e.g. the discussion on “Limits of Scientific Knowledge—Indetectable Effects” in http://scholar.lib.vt.edu/ejournals/SPT/v8n1/hansson.html

Typo alert:

‘Dice’ is plural. The singular of dice is ‘die’ (which, appropriately enough, is what we’re all going to do…)

I may be on “uncertain” statistical grounds here, but I recall the concept of skewness as a method of describing the “tails” of a normal frequency curve. It appears that skewness, and several formulas seem applicable, provides a testable procedure to compare extreme weather events over time, for example, some recent work on summertime temperatures by Volodin and Yurova in 2013: http://link.springer.com/article/10.1007%2Fs00382-012-1447-4.

Nicely done, Professor Rahmstorf! As to the difficulty of understanding, understanding risk at the level of digging dice rolls is minimal competence for modern policy, and I would say any citizen should master that. (Of course, I’m a statistician and I would say so.) Professor David Spiegelhalter has a nice set of videos and such at http://understandinguncertainty.org/ for doing that.

The other little thing (which may be in the geophysics or meteorological literature or may not, I do not know) is that if the far tail of a distribution (suppose Normal) is watched, it is much more sensitive in terms of signal to noise to a change in the distribution in that direction than the middle is. Of course, this assumes that far tail has enough observations on it in the baseline case to serve as a comparison, and that may be the rub.
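A quick way to see the tail sensitivity is to shift a standard Normal by a modest amount and compare how often different thresholds are exceeded before and after. The shift of half a standard deviation is an arbitrary value chosen for illustration, and the function names are made up for this example.

```python
from math import erfc, sqrt


def normal_exceedance(z):
    """P(Z > z) for a standard Normal variate."""
    return 0.5 * erfc(z / sqrt(2))


def exceedance_ratio(threshold, shift):
    """Factor by which P(X > threshold) grows when the mean moves up by shift.

    Threshold and shift are both in units of the (unchanged) standard deviation.
    """
    return normal_exceedance(threshold - shift) / normal_exceedance(threshold)


SHIFT = 0.5  # assumed shift of the mean, in standard deviations
for threshold in (0.0, 1.0, 2.0, 3.0):
    print(f"threshold {threshold:.0f} sigma: exceedances x{exceedance_ratio(threshold, SHIFT):.2f}")
```

The same shift raises exceedances of the old mean by about 40%, but raises 3 sigma exceedances by a factor of more than four. The catch is the one already noted: the baseline count out at 3 sigma is tiny, so the large ratio is estimated from very few events.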

As an example — and again I do not know if Professor Emanuel or anyone has done anything like this — in the case of tropical storms, there are “out of main season” tropical storms historically, and if the propensity on numbers of storms or severity of storms were to increase, that special population, if big enough historically, might serve as a sensitive means of finding this.

Donald Campbell,

Skewness is simply the third moment of the distribution. The first two are mean and variance (square of the standard deviation). Essentially, what you are describing is a characterization of a distribution by its moments–the so-called method of moments. This is quite viable, but the higher the moment, the greater the need for lots of data to accurately estimate that moment. You can go one further and use kurtosis–the 4th moment, which gives a relative measure of the proportion of the distribution in the peak to in its tails. There are any number of other statistics one can also use–the ratio of the standard deviation to the mean, the range, and so on.

One problem with distributions with thicker tails–the estimators converge much more slowly than they do for well behaved distributions like the Normal. And when you are dealing with extremes of the distribution, things are even more fraught.
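As a small illustration of the data-hunger of higher moments, the sketch below computes sample skewness and kurtosis in their standardized form (central moment divided by the matching power of the variance) and looks at how much the skewness estimate scatters across repeated Normal samples of different sizes. The sample sizes, trial count and seed are arbitrary choices, and the function names are made up for this example.

```python
import random
from statistics import fmean, pstdev


def central_moment(xs, order):
    mu = fmean(xs)
    return fmean([(x - mu) ** order for x in xs])


def skewness(xs):
    """Third standardized moment; zero for a symmetric sample."""
    return central_moment(xs, 3) / central_moment(xs, 2) ** 1.5


def kurtosis(xs):
    """Fourth standardized moment; about 3 for Normal data."""
    return central_moment(xs, 4) / central_moment(xs, 2) ** 2


def spread_of_estimates(stat, n, trials=100, seed=0):
    """Standard deviation of a statistic across repeated Normal samples of size n."""
    rng = random.Random(seed)
    estimates = [stat([rng.gauss(0.0, 1.0) for _ in range(n)]) for _ in range(trials)]
    return pstdev(estimates)


for n in (30, 300, 3000):
    print(f"n={n:5d}: scatter of mean {spread_of_estimates(fmean, n):.3f}, "
          f"scatter of skewness {spread_of_estimates(skewness, n):.3f}")
```

For the same sample size the skewness estimate scatters considerably more than the mean does, and this is for well-behaved Normal data; thicker tails make it worse.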

I alluded to order statistics above as a possible method for looking at how distributions are changing.

perwis @ 43 mentions the ethics of climate extremes. Note the discussion here and my unanswered question at comment # 17 above. The seriousness of climate extremes will come to world attention long before all the carbon is burned.

Barry and Gavin

Re: #27.

The dodgy gambler would be disappointed if he had got the ‘expected’ result for a normal die i.e. two sixes. He could be reassured by the fact that his intervention would have raised the probability of getting exactly four sixes, which is what he was expecting, by a factor of 2.68. Note that the inequality discussed in #27 has been reversed.

What about the extreme case of exactly ten sixes out of twelve throws which is 655 times more likely with the dodgy dice?

I have not bothered to check my numerical working because you can see similar effects in a graph provided by Tamino on three changed dice. (The spam filter might be objecting to it but, not the search engine).
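For anyone who does want to check the working, the two ratios quoted above follow from the binomial formula, in which the combinatorial factor cancels. This is a minimal sketch with the post's dice (1/6 fair, 1/3 loaded); the function name is made up for this example.

```python
def likelihood_ratio(k, n=12, p_loaded=1 / 3, p_fair=1 / 6):
    """How many times more likely exactly k sixes in n rolls is with the loaded die."""
    return (p_loaded / p_fair) ** k * ((1 - p_loaded) / (1 - p_fair)) ** (n - k)


for k in (2, 4, 10):
    print(f"exactly {k:2d} sixes: loaded/fair = {likelihood_ratio(k):.2f}")
```

This gives about 0.43 for two sixes (favouring the fair die), 2.68 for four and roughly 655 for ten, in agreement with the figures in the comment.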

In this comment I considered waiting until the extreme event was repeated:

increased freq. 1/10 to 1/5 easier to repeat than 1/1000 to 1/500.

t marvell #41: people talk about this stuff anyway, so why not get the theory right and clear?

One of the biggest stumbling blocks in persuading the public is unusually cold weather. It is also deceptive to pick a particularly hot period as indicative of global warming (it could just be a big El Niño for example).

We can’t over-simplify a complex subject just because some people are willfully stupid.