Since 1998 the global temperature has risen more slowly than before. Given the many explanations for colder temperatures discussed in the media and scientific literature (La Niña, heat uptake of the oceans, arctic data gap, etc.) one could jokingly ask why no new ice age is here yet. This fails to recognize, however, that the various ingredients are small and not simply additive. Here is a small overview and attempt to explain how the different pieces of the puzzle fit together.

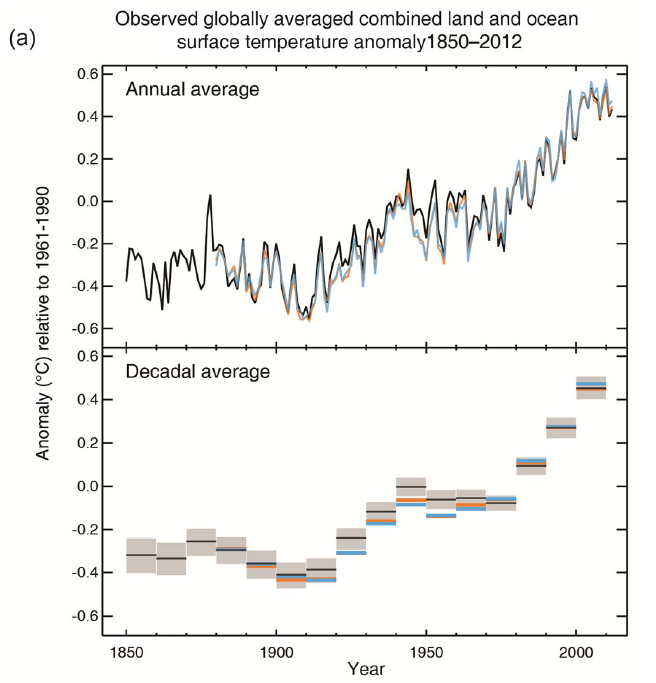

Figure 1 The global near-surface temperatures (annual values at the top, decadal means at the bottom) in the three standard data sets HadCRUT4 (black), NOAA (orange) and NASA GISS (light blue). Graph: IPCC 2013.

First an important point: the global temperature trend over only 15 years is neither robust nor predictive of longer-term climate trends. I’ve repeated this now for six years in various articles, as this is often misunderstood. The IPCC has again made this clear (Summary for Policy Makers p. 3):

Due to natural variability, trends based on short records are very sensitive to the beginning and end dates and do not in general reflect long-term climate trends.

You can see this for yourself by comparing the trend from mid-1997 to the trend from 1999: the latter is more than twice as large, 0.07 instead of 0.03 degrees per decade (HadCRUT4 data).
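The start-date sensitivity can be reproduced with a toy series. The sketch below is only an illustration under stated assumptions: the annual values are synthetic (a hypothetical steady trend of 0.01 °C per year plus a single hypothetical 0.2 °C El Niño spike in 1998), not HadCRUT4 data, so the numbers it prints are not the 0.03 and 0.07 quoted above.

```python
# Synthetic illustration: how one warm year near the start of a short
# window changes the fitted linear trend. All numbers are hypothetical.

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

UNDERLYING_TREND = 0.01  # degrees C per year (assumed)
EL_NINO_SPIKE = 0.2      # extra warmth in 1998, degrees C (assumed)

years = list(range(1990, 2013))
temps = [
    UNDERLYING_TREND * (y - 1990) + (EL_NINO_SPIKE if y == 1998 else 0.0)
    for y in years
]

def trend_per_decade(start_year):
    """Trend in degrees C per decade from start_year to the end of the series."""
    pairs = [(y, t) for y, t in zip(years, temps) if y >= start_year]
    xs, ys = zip(*pairs)
    return 10 * ols_slope(xs, ys)

trend_1997 = trend_per_decade(1997)  # window includes the 1998 spike
trend_1999 = trend_per_decade(1999)  # window starts after the spike

print(f"trend from 1997: {trend_1997:.3f} degrees C per decade")
print(f"trend from 1999: {trend_1999:.3f} degrees C per decade")
```

In this toy case the underlying warming is identical in both windows; only the position of the warm year relative to the start date differs.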

Likewise for data uncertainty: the trends of HadCRUT and NASA data hardly differ in the long term, but they do over the last 15 years. And the small correction proposed recently by Cowtan & Way to compensate for the data gap in the Arctic hardly changes the HadCRUT4 long-term trend, but it changes the trend over the last 15 years by a factor of 2.5.

Therefore, it is a misunderstanding (deliberately promoted by some) to draw conclusions from such a short trend about future global warming, let alone climate policy. To illustrate this point, the following graph shows one simulation from the CMIP3 model ensemble:

Figure 2 Temperature evolution in a model simulation with the MRI model. Other models also show comparable “hiatuses” due to natural climate variability. This is one of the standard simulations carried out within the framework of CMIP3 for the IPCC 2007 report. Graph: Roger Jones.

In this model calculation, there is a “warming pause” in the last 15 years, but in no way does this imply that further global warming is any less. The long-term warming and the short-term “pause” have nothing to do with each other, since they have very different causes. By the way, this example refutes the popular “climate skeptic” claim that climate models cannot explain such a “hiatus” – more on that later.

Now for the causes of the smaller trend of the last 15 years. Climate change can have two types of causes: external forcing or internal variability in the climate system.

External forcing: the sun, volcanoes & co.

The possible external drivers include, first, the shading of the sun by aerosol pollution of the atmosphere from volcanoes (Neely et al., 2013) or Chinese power plants (Kaufmann et al., 2011). Second, there is a reduction of the greenhouse effect of CFCs, because these gases have been largely banned under the Montreal Protocol (Estrada et al., 2013). And third, there is the transition from solar maximum in the first half to a particularly deep and long solar minimum in the second half of the period – this is evidenced by measurements of solar activity, but can explain only part of the slowdown (about one third according to our correlation analysis).

It is likely that all these factors indeed contributed to a slowing of the warming, and they are also additive – according to the IPCC report (Section 9.4) about half of the slowdown can be explained by a slower increase in radiative forcing. A problem is that the data on the net radiative forcing are too imprecise to better quantify its contribution. This in turn is due to the short period considered, in which the changes are so small that data uncertainties play a big role, unlike for the long-term climate trends.

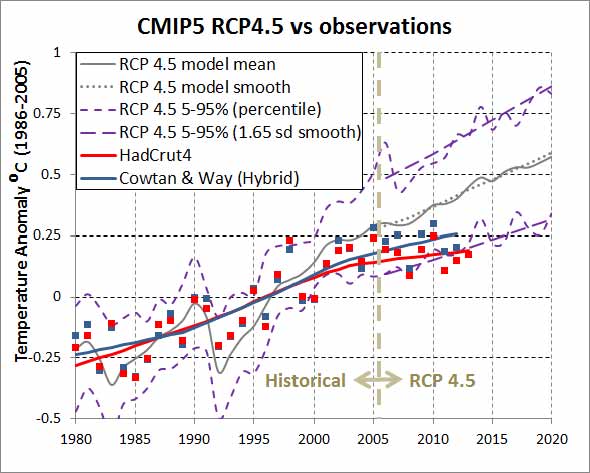

The latest data and findings on climate forcings are not included in the climate model runs because of the long lead time for planning and executing such supercomputer simulations. Therefore, the current CMIP5 simulations run from 2005 in scenario mode (see Figure 6) rather than being driven by observed forcings. They are therefore driven e.g. with an average solar cycle and know nothing of the particularly deep and prolonged solar minimum 2005-2010.

Internal variability: El Niño, PDO & co.

The strongest internal variability in the climate system on this time scale is the change from El Niño to La Niña – a natural, stochastic “seesaw” in the tropical Pacific called ENSO (El Niño Southern Oscillation).

The fact that El Niño is important for our purposes can already be seen by how much the trend changes if you leave out 1998 (see above): El Niño years are particularly warm (see chart), and 1998 was the strongest El Niño year since records began. Further evidence of the crucial importance of El Niño is that after correcting the global temperature data for the effect of ENSO and solar cycles by a simple correlation analysis, you get a steady warming trend without any recent slowdown (see next graph and Foster and Rahmstorf 2011). ENSO is responsible for two thirds of the correction. And if you nudge a climate model in the tropical Pacific to follow the observed sequence of El Niño and La Niña (rather than generating such events itself in random order), then the model reproduces the observed global temperature evolution including the “hiatus” (Kosaka and Xie 2013).

One can also ask how the observed warming fits the earlier predictions of the IPCC. The result looks like this (Rahmstorf et al. 2012):
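The idea of correcting a temperature series for ENSO can be sketched with an ordinary least-squares regression. The code below is a deliberately simplified illustration, not the published method: it uses noise-free synthetic data, a hypothetical ENSO index with a strong 1998 El Niño and La Niña-leaning final years, no time lags, and no solar or volcanic terms. All names and values are assumptions made for this example.

```python
# Simplified sketch: regress temperature on time and an ENSO index,
# then subtract the fitted ENSO contribution. Synthetic data only.

def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit(columns, y):
    """Least-squares coefficients, one per regressor column (normal equations)."""
    k = len(columns)
    ata = [[sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)]
           for i in range(k)]
    aty = [sum(p * q for p, q in zip(columns[i], y)) for i in range(k)]
    return solve_linear_system(ata, aty)

def enso_index(year):
    """Hypothetical index: 4-year cycle, 1998 spike, La Nina-leaning from 2008."""
    base = [0.5, 0.0, -0.5, 0.0][(year - 1980) % 4]
    spike = 1.5 if year == 1998 else 0.0
    la_nina = -0.8 if year >= 2008 else 0.0
    return base + spike + la_nina

TRUE_TREND = 0.017  # degrees C per year (assumed)
TRUE_ENSO = 0.1     # degrees C per index unit (assumed)

years = list(range(1980, 2013))
t = [float(y - 1980) for y in years]
enso = [enso_index(y) for y in years]
temp = [TRUE_TREND * ti + TRUE_ENSO * e for ti, e in zip(t, enso)]

ones = [1.0] * len(years)
intercept, trend_coef, enso_coef = fit([ones, t, enso], temp)
adjusted = [T - enso_coef * e for T, e in zip(temp, enso)]

def trend_since(start_year, series):
    """Linear trend (degrees C per year) of series from start_year onward."""
    idx = [i for i, y in enumerate(years) if y >= start_year]
    cols = [[1.0] * len(idx), [t[i] for i in idx]]
    return fit(cols, [series[i] for i in idx])[1]

raw_recent = trend_since(1998, temp)
adj_recent = trend_since(1998, adjusted)
print(f"raw trend since 1998:      {10 * raw_recent:.3f} degrees C per decade")
print(f"adjusted trend since 1998: {10 * adj_recent:.3f} degrees C per decade")
```

Because the synthetic data contain no noise, the regression recovers the assumed coefficients almost exactly; with real data the fit is of course uncertain and the choice of regressors and lags matters.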

Figure 3 Comparison of global temperature (average over 5 data sets, including 2 satellite series) with the projections from the 3rd and 4th IPCC reports. Pink: the measured values. Red: data after adjusting for ENSO, volcanoes and solar activity by a multivariate correlation analysis. The data are shown as a moving average over 12 months. From Rahmstorf et al. 2012.

And what about the ocean heat storage? That is no additional effect, but part of the mechanism by which El Niño years are warm and La Niña years are cold at the Earth’s surface. During El Niño the ocean releases heat, during La Niña it stores more heat. The often-cited data on the heat storage in the ocean are therefore just further evidence that El Niño plays a crucial role for the “pause”.

Leading U.S. climatologist Kevin Trenberth has studied this for twenty years and has just published a detailed explanatory article. Trenberth emphasizes the role of long-term variations of ENSO, called the Pacific Decadal Oscillation (PDO). Put simply: phases with more El Niño and phases with predominant La Niña conditions (as we’ve had recently) may persist for up to two decades in the tropical Pacific. The latter brings a somewhat slower warming at the surface of our planet, because more heat is stored deeper in the ocean. A central point here: even if the surface temperature stagnates, our planet continues to take up heat. The increasing greenhouse effect leads to a radiation imbalance: we absorb more heat from the sun than we emit back into space. 90% of this heat ends up in the ocean due to the high heat capacity of water. The fact that the ocean continues to heat up, without pause, demonstrates that the greenhouse effect has not subsided, as we have discussed here.
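To get a feel for the magnitudes involved, the following sketch converts a global radiation imbalance into an annual ocean heat uptake. The imbalance of 0.6 W/m² is an illustrative assumption, not a number from this article; the 90% ocean share is the figure quoted above, and the Earth's surface area of about 5.1 × 10^14 m² is a standard value.

```python
# Back-of-the-envelope sketch: annual ocean heat uptake implied by a
# given top-of-atmosphere radiation imbalance. Inputs are assumptions.

EARTH_SURFACE_AREA_M2 = 5.1e14          # total surface area of the Earth
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # about 3.16e7 s
OCEAN_SHARE = 0.9                       # fraction of excess heat entering the ocean

def ocean_heat_uptake_joules_per_year(imbalance_w_per_m2):
    """Heat taken up by the ocean per year for a given global mean imbalance."""
    total_power_watts = imbalance_w_per_m2 * EARTH_SURFACE_AREA_M2
    return total_power_watts * SECONDS_PER_YEAR * OCEAN_SHARE

ASSUMED_IMBALANCE = 0.6  # W/m^2, illustrative value only
uptake = ocean_heat_uptake_joules_per_year(ASSUMED_IMBALANCE)
print(f"ocean heat uptake: {uptake:.2e} J per year")
```

Under these assumptions the ocean takes up heat on the order of 10^22 joules per year, whether or not the surface temperature happens to rise in that particular year.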

How important the effect of El Niño is will be revealed at the next decent El Niño event. I already predicted last year that after the next El Niño a new record in global temperature will be reached again – a forecast that will probably be confirmed or falsified soon.

The Arctic data gap

Recently, Cowtan & Way have shown that recent warming was underestimated in the HadCRUT data. After using satellite data and a smart statistical method to fill gaps in the network of weather stations, the global warming trend since 1998 is 0.12 degrees per decade – that is only a quarter less than the long-term trend of 0.16 degrees per decade measured since 1980. Awareness of this data gap is not new – Simmons et al. showed already in 2010 that global warming is underestimated in the HadCRUT data, and we have discussed the Arctic data hole repeatedly since 2008 at RealClimate. NASA GISS has always filled the data gaps by interpolation, albeit with a simpler method, and accordingly the GISTEMP data show hardly any slowdown of warming.

The spatial pattern

Cohen et al. showed two years ago that it is mainly the recent cold winters in Eurasia that have contributed to the flattening of the global warming curve (see figure).

Figure 4 Observed temperature trends in the winter months. Despite the significant global warming in the annual mean, there was a winter cooling in Eurasia. CRUTem3 data (land only!), from Cohen et al. 2012.

They argue that an explanation for the “pause” in global warming would have to explain this particular pattern. But this is not compelling: there could be two independent mechanisms superimposed. One that dampens global warming – which would have to be explained by the global energy balance. And a second one that explains the cold Eurasian winters, but without affecting the global mean temperature. I think the latter is likely – these recent cold winters are part of the much-discussed “warm Arctic – cold continents” pattern (see, e.g., Overland et al. 2011) and could be related to the dwindling ice cover on the Arctic Ocean, as we explained here. Since the heat is just moved around, with Eurasian cold linked to a correspondingly warmer Arctic, this hardly affects the global mean temperature – unless you’re looking at a data set with a large data gap in the Arctic …

What does it add up to?

How does all that fit together now? As described above, I think (just like Trenberth) that natural variability, in particular ENSO and PDO, is the main reason for the recent slower warming. From the perspective of the planetary energy balance, heat storage in the ocean is the key mechanism.

If the warming is steady after adjusting for ENSO, volcanoes and solar cycles, does the additional correction for the Arctic data gap by Cowtan & Way mean that the warming after these adjustments has even accelerated? That could be, but only by a small amount. As you can see in Figure 6 of our paper (Foster and Rahmstorf), the slowdown is gone after said adjustment in the GISS data and the two satellite series, but there still is some slowdown in the two data sets with the Arctic gap, i.e. HadCRUT and NCDC. Adding the trend correction of 0.08 degrees per decade from Cowtan & Way to our ENSO-adjusted HadCRUT trend from 1998, you end up at about 0.2 degrees per decade, practically the same value as we got for the GISS data. If one further adds the effect of the above forcings (without the solar activity already accounted for) this would add a few hundredths of a degree. The result would be a bit faster warming than over the entire period since 1980, but probably less than the 0.29 °C per decade measured over 1992-2006. Nothing to get excited about, especially since based on the model calculations you’d expect trends around 0.2 degrees per decade anyway, because models predict not a constant but a gradually accelerating warming. Which brings us to the comparison with models.

Comparison with models

Figure 5 Comparison of the three measured data sets shown at the outset with earlier IPCC projections from the first (FAR), 2nd (SAR) 3rd (TAR) and 4th (AR4) IPCC report, as well as with the CMIP3 model ensemble. As you can see the data move within the projected ranges. Source: IPCC AR5, Figure 1.4. (Small note: “climate skeptics” brought an earlier, erroneous draft version of this graphic to the public, although it was marked in block letters as a temporary placeholder by IPCC.)

When comparing data with models, one needs to understand a key point: the models also produce internal variations, including ENSO, but as this (similar to the weather in the models) is a stochastic process, the El Niños and La Niñas are distributed randomly over the years. Therefore, only in rare cases will a model randomly produce a sequence that is similar to the observed sequence with reduced warming from 1998 to 2012. There are such models – see the first image above – but most show such phases of slow warming or “hiatus” at other times.
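The statistical point can be illustrated with a toy ensemble. This is a minimal sketch under loud assumptions: each "model run" is just a steady hypothetical trend plus first-order autoregressive (AR(1)) noise standing in for internal variability, with made-up parameters. It is not a climate model and says nothing quantitative about CMIP runs.

```python
# Toy ensemble: every run has the same underlying trend but its own
# random internal variability, so 15-year "hiatus" windows appear at
# different times in different runs. All parameters are hypothetical.
import random

def ols_slope(values):
    """Least-squares slope of values against their index (per time step)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    return sxy / sxx

def simulate_run(rng, n_years, trend, phi, sigma):
    """Linear trend plus AR(1) noise as a stand-in for internal variability."""
    noise = 0.0
    series = []
    for year in range(n_years):
        noise = phi * noise + rng.gauss(0.0, sigma)
        series.append(trend * year + noise)
    return series

def count_hiatus_windows(series, window=15):
    """Number of overlapping windows whose fitted trend is zero or negative."""
    starts = range(len(series) - window + 1)
    return sum(1 for s in starts if ols_slope(series[s:s + window]) <= 0.0)

N_RUNS = 40      # ensemble size (assumed)
N_YEARS = 100    # length of each run (assumed)
TREND = 0.02     # degrees C per year (assumed)
PHI = 0.6        # AR(1) persistence (assumed)
SIGMA = 0.12     # innovation standard deviation, degrees C (assumed)

rng = random.Random(2013)  # fixed seed so the sketch is repeatable
runs = [simulate_run(rng, N_YEARS, TREND, PHI, SIGMA) for _ in range(N_RUNS)]

century_trends = [ols_slope(run) for run in runs]
hiatus_counts = [count_hiatus_windows(run) for run in runs]
runs_with_hiatus = sum(1 for c in hiatus_counts if c > 0)

print(f"runs with at least one 15-year hiatus: {runs_with_hiatus} of {N_RUNS}")
print(f"smallest 100-year trend: {min(century_trends):.4f} degrees C per year")
```

In this toy setup the long-term trend of every run stays clearly positive, while flat or cooling 15-year stretches still turn up in some runs at unpredictable times.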

The IPCC has therefore never tried to predict the climate evolution over 15 years, because that’s just too much influenced by random internal variability (such as ENSO), which we cannot predict (at least as yet).

However, all models show such variability – no one who understands this issue could have been surprised that there can be such hiatus phases. They’ve also occurred in the past, for example from 1982, as Trenberth shows in his Figure 4.

The following graph shows a comparison of observational data with the CMIP5 ensemble of model experiments that have been made for the current IPCC report. The graph shows that the El Niño year 1998 is at the top and the last two cool La Niña years are at the bottom of the model projection range (for the various reasons explained above). However, the temperatures (at least according to the data of Cowtan & Way) are within the range which is spanned by 90% of the models.

Figure 6 Comparison of 42 CMIP5 simulations with the observational data. The HadCRUT4 value for 2013 is provisional of course, still without November and December. (Source: DeepClimate.org)

So there is no evidence for model errors here (for more on this see this article). This is also no evidence for a lower climate sensitivity, even if this was proposed some time ago by Otto et al. (2013). Trenberth et al. suggest that even the choice of a different data set of ocean heat content would have increased the climate sensitivity estimate of Otto et al. by 0.5 degrees. In addition, Otto et al. used the HadCRUT4 temperature data with its particularly low recent warming. With an honest appraisal of the full uncertainty, also in the forcing, one must come to the conclusion that such a short period is not sufficient to draw conclusions about the climate sensitivity.

Conclusion

Global temperature has in recent years increased more slowly than before, but this is within the normal natural variability that always exists, and also within the range of predictions by climate models – even despite some cool forcing factors such as the deep solar minimum not included in the models. There is therefore no reason to find the models faulty. There is also no reason to expect less warming in the future – in fact, perhaps rather the opposite as the climate system will catch up again due to its natural oscillations, e.g. when the Pacific Decadal Oscillation swings back to its warm phase. Even now global temperatures are very high again – in the GISS data, with an anomaly of +0.77 °C, November was warmer than the previous record year of 2010 (+0.67 °C), and it was the warmest November on record since 1880.

PS: This article was translated from the German original at RC’s sister blog KlimaLounge. KlimaLounge has been nominated as one of 20 blogs for the award of German science blog of the year 2013. If you’d like to vote for us: simply go to this link, select KlimaLounge in the list and press the “vote” button.

References

- K. Cowtan, and RG Way, “Coverage bias in the HadCRUT4 temperature series and its impact on recent temperature trends”, Quarterly Journal of the Royal Meteorological Society, pp. n/a-n/a, 2013. http://dx.doi.org/10.1002/qj.2297

- RR Neely, OB Toon, S. Solomon, J. Vernier, C. Alvarez, JM English, KH Rosenlof, MJ Mills, CG Bardeen, JS Daniel, and JP Thayer, “Recent increases in anthropogenic SO2 from Asia have minimal impact on stratospheric aerosol”, Geophysical Research Letters, vol. 40, pp. 999-1004, 2013. http://dx.doi.org/10.1002/grl.50263

- RK Kaufmann, H. Kauppi, ML Mann, and JH Stock, “Reconciling anthropogenic climate change with observed temperature 1998-2008”, Proceedings of the National Academy of Sciences, vol. 108, pp. 11790-11793, 2011. http://dx.doi.org/10.1073/pnas.1102467108

- F. Estrada, P. Perron, and B. Martínez-López, “Statistically derived contributions of diverse human influences to twentieth-century temperature changes”, Nature Geoscience, vol. 6, pp. 1050-1055, 2013. http://dx.doi.org/10.1038/ngeo1999

- G. Foster, and S. Rahmstorf, “Global temperature evolution 1979-2010”, Environmental Research Letters, vol. 6, pp. 044022, 2011. http://dx.doi.org/10.1088/1748-9326/6/4/044022

- Y. Kosaka, and S. Xie, “Recent global-warming hiatus tied to equatorial Pacific surface cooling”, Nature, vol. 501, pp. 403-407, 2013. http://dx.doi.org/10.1038/nature12534

- S. Rahmstorf, G. Foster, and A. Cazenave, “Comparing climate projections to observations up to 2011”, Environmental Research Letters, vol. 7, pp. 044035, 2012. http://dx.doi.org/10.1088/1748-9326/7/4/044035

- AJ Simmons, KM Willett, PD Jones, PW Thorne, and DP Dee, “Low-frequency variations in surface atmospheric humidity, temperature, and precipitation: Inferences from reanalyses and monthly gridded observational data sets”, Journal of Geophysical Research, vol. 115, 2010. http://dx.doi.org/10.1029/2009JD012442

- JL Cohen, JC Furtado, MA Barlow, VA Alexeev, and JE Cherry, “Arctic warming, increasing snow cover and widespread boreal winter cooling”, Environmental Research Letters, vol. 7, pp. 014007, 2012. http://dx.doi.org/10.1088/1748-9326/7/1/014007

- JE Overland, KR Wood, and M. Wang, “Warm Arctic-cold continents: climate impacts of the newly open Arctic Sea”, Polar Research, vol. 30, 2011. http://dx.doi.org/10.3402/polar.v30i0.15787

- A. Otto, FEL Otto, O. Boucher, J. Church, G. Hegerl, PM Forster, NP Gillett, J. Gregory, GC Johnson, R. Knutti, N. Lewis, U. Lohmann, J. Marotzke, G. Myhre, D. Shindell, B. Stevens, and MR Allen, “Energy budget constraints on climate response”, Nature Geoscience, vol. 6, pp. 415-416, 2013. http://dx.doi.org/10.1038/ngeo1836

WebHubTelescope

As I pointed out elsewhere, calculating harmonics of the Hale cycle (11, 7.3, 5.5, 4.4, and 3.7 years) has no physical meaning. The periodicity of solar activity is a variable, and do remember the ‘von Neumann elephant’!

If you wish to employ Hale cycle then you need to look at each sunspot period individually and incorporate it into your model, and that is exactly what I have done here

http://www.vukcevic.talktalk.net/GSC1.htm

This is NO model it is actual data, and since no one knows period of SC24 let alone any future cycles, then it has no predictive capacity.

However you can back extrapolate since we have data on the sunspot cycles going back to 1700, and that is exactly what is done here:

http://www.vukcevic.talktalk.net/AMO-recon.htm

Compare with two other AMO, Scottish rainfall and NAO reconstructions.

In years to come it will become apparent if the AMO is a major player or inconsequential. I do not claim either.

Ray Ladbury

“Even the Solar Cycle is not a true periodic phenomenon.”

I shall use your other quote:

QFFT! Thank you.

This is what I said to WHT (whoever he is, why not be brave and put the real name as many other contributors do)

“Simply because (you) got Hale cycle periodicity wrong, assuming it is constant 22 years, it ain’t, it is continuously variable (changes with periods of actual cycles, since 1900, the HC periods were: 21.67, 20.75, 20.66, 21.42, 22.58), hence it doesn’t have fixed harmonics. Furthermore, the ‘harmonics’ periodicities you quote (11, 7.3, 5.5, 4.4, and 3.7 years) have no physical meaning in this context and make nonsense of the whole thing.

If you wish to go down that path then you could consider odd number ‘sub-harmonics’ i.e. 3x @ ~64/65 (the AMO etc) and important one 5x @ ~ 105 years …. (~1705, ~1810, ~1915, ~2020? solar cycle minima)”

[Response: Numerology is tedious. No more please. – gavin]

Response: Numerology is tedious. No more please. – gavin

Hi

I agree, numerology is indeed tedious, and I’ve done more than most, but someone has to do it, and I happen to have the time and inclination. Many data sets reminiscent of a string of pearls do sparkle in a ‘polarised’ light, but alas not easy to tell the real one.

Happy Xmas to you and the readers of your blog.

Would it be correct to say that a doubling of co2 from 280 to 560ppm means, before feedbacks, 3.7 Watts less IR radiated to space and 3.7 more radiated downwards to the surface? And that this would cause ~1C of warming to restore equilibrium? Thanks.

#2 Matti Virtanen:

Warming over periods of 13 years has always been statistically insignificant in the instrumental record.

Nothing unusual about that.

So, what are we going to do about this? Sit here whining and writing ever more papers while emissions continue to go up, and up and up? Is there a reason scientists haven’t voiced their concerns in an organized public and angry fashion? We know what’s coming, and yet we do not act to at least minimize impacts. How many of you were on the National Mall last February? Or have you even tried to organize an event that can’t be missed by the media? You know, something with Balls for a change.

If not, that begs the question of whether we deserve to survive.

#2 Matti Virtanen:

Only if you deliberately choose to ignore ocean heating. You know, the 70% of earth’s surface that can actually store accumulated heat.

Jackie Heinl says:

22 Dec 2013 at 11:07 AM

……

Have you considered that the global warming, which does exist, may be on the turn, and we may have decade or two of stagnation or even cooling. Beside, the CO2 gas is beneficial to biosphere and food production, more vegetation around more diverse and plentiful wild life is.

It may or may not come to you as a surprise, but radical actions attract small minority of zealots and alienate wider more sedate masses, who are bombarded to saturation from all kind of ‘impending disasters’.

Credibility of science, which you espouse, would be far better promoted by calm and considerate explanation of the reasons for the current GW ‘hiatus’.

As I am in minority on these pages, I would like to make it clear that I do not deny existence of climate change. I think that CO2 role is only minor contributor; further rise in the N. Hemisphere temperature in decades to come is unlikely, but if does materialise it could be more beneficial than damaging since the winter fuel consumption would go down + other benefits as mentioned above.

John @53.

Yes, it would be incorrect to say that.

The 1.1ºC caused by a doubling of atmospheric CO2 will not leave unchanged the climate system. The warmer globe will, for instance, result in more atmospheric H2O which will add to the 3.7 Wm^-2 and so to the 1.1ºC rise. How much the 1.1ºC will be amplified by positive feedbacks of this sort (and diminished by negative ones), that is not known with great accuracy but the way the globe has responded in the past and is responding now suggest the resulting rise will be in the range 1.5ºC to 4.5ºC.
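The amplification described in this comment is often summarised with a simple linear feedback relation, in which the total warming equals the no-feedback warming divided by (1 − f), where f is a net feedback factor. That relation is a standard textbook approximation brought in here for illustration, not something stated in the comment; the sketch below uses it to ask which values of f would correspond to the quoted 1.5ºC to 4.5ºC range.

```python
# Sketch of the linear feedback approximation (an assumption added for
# illustration): total warming = no-feedback warming / (1 - f).

NO_FEEDBACK_WARMING = 1.1  # degrees C per CO2 doubling, as quoted in the comment

def amplified_warming(feedback_factor):
    """Equilibrium warming for a net feedback factor f (requires f < 1)."""
    if feedback_factor >= 1.0:
        raise ValueError("the linear approximation breaks down for f >= 1")
    return NO_FEEDBACK_WARMING / (1.0 - feedback_factor)

def implied_feedback_factor(total_warming):
    """Net feedback factor f that would yield the given total warming."""
    return 1.0 - NO_FEEDBACK_WARMING / total_warming

low = implied_feedback_factor(1.5)
high = implied_feedback_factor(4.5)
print(f"f for 1.5 C: {low:.2f}")
print(f"f for 4.5 C: {high:.2f}")
```

The relation is nonlinear in f, which is one way to see why a modest uncertainty in the net feedback translates into a wide range for the resulting warming.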

[too far]

#55

Not much we can do while our political masters are in denial of the problem.

vukcevic said about AGW:

Rationalization is a hallmark of denialism. If you don’t agree with that, you may want to change the Wikipedia entry for Denial. Look at bullet point #2 on minimisation.

I notice that whenever skeptics start to lose an argument pertaining to the science, they turn to rationalizing/minimizing the outcomes, or to projecting the blame (bullet point #3).

So if you don’t want the denial peg, don’t do #2 and #3, and stick to the original science.

Something that is becoming increasingly clear is that there isn’t a simple equation of increased planetary energy = increased surface temperature – in the short term anyway. Big variations in how energy is distributed between the surface and oceans for example can change the apparent trend. A big El Niño year redistributes energy from the oceans to the atmosphere. A big Arctic sea ice melt year like 2012 redistributes ocean energy to latent heat of melting ice.

Surface temperature is important because the planetary imbalance caused by increased GHG can only be corrected by more radiation to space, which means a higher surface temperature. As long as that imbalance still exists, evidenced by increased ice melt, increased ocean heat content and other measures of increased planetary energy, a slowdown in surface temperature rise has to be temporary, and no cause for complacency, as the energy imbalance, continuing unabated, has to result eventually in surface temperature increase. If we are in a phase where more of that imbalance than usual is going into oceans or melting ice, that does not help at all – the next big El Niño could dwarf 1998, and less ice means lower albedo, increasing the energy imbalance.

For John, you make a short statement in English and ask if it’s correct; without knowing what else you think, it’s not easy to just say yes or no to that question.

Have you read Spencer Weart yet? If not, the “Start Here” button at the top of the page, or the link to his work in the right sidebar, will be a good start.

The math is complicated; the system of feedbacks is complicated; the results don’t all happen all at once.

And some of the answers out there are attempts to explain the math in English; others are bafflegab meant to confuse people.

https://www.google.com/search?q=3.7+W%2Fm^2+is+the+total+absorbed+or+captured+power+for+a+doubling+of+CO2%2C+correct%3F

I’ve wondered why there hasn’t been some comment on RealClimate regarding the paper by Abe-Ouchi, et al. (Nature, Vol. 500, p. 190, 2013) entitled “Insolation-driven 100,000-year glacial cycles and hysteresis of ice-sheet volume” and reviewed in Science (Vol. 341, p. 599, 2013). It seemed to me that the above model (based on Milanković orbital variations and corresponding fluctuations in atmospheric CO2) of Earth’s ice ages over the last million years or so, when considered along with the paper by Marcott et al., (Science, Vol. 339, p. 1198, 2013 and discussed in the RealClimate, p=15665, of 9/16/13) would strongly suggest that we humans have (unknowingly, unwittingly, and to tragic excess) succeeded in geoengineering our climate sufficiently to avoid our next scheduled ice age toward which we clearly had begun to descend about 4,000 years ago, and thence more rapidly beginning about 1,000 years ago. A curious irony, is it not? Now, if we can only stop pumping more CO2 into the atmosphere in time to have the excess CO2 disposed of by the time the next warm period begins some 80,000 years hence.

for C.W. Dingman: the idea has been around for a decade or more; you’ll find mentions if you search on “next ice age” here

Jackie Heinl,

What are we actually going to do about this?

Evidently, this is coming. So it’s people power or nothing much.

Compare with two other AMO, Scottish rainfall and NAO reconstructions. In years to come it will become apparent if the AMO is a major player or inconsequential. I do not claim either.

I think we can already see the AMO (blue) is having a small effect on anthropogenic warming on a global basis. The pronounced temperature anomaly drop that commenced at around 1940 appears to be ignoring the AMO. It does appear the PDO (green) might have had something to do with it. When the AMO does go negative around 1958, it appears to have minimal effect on the temperature anomaly. The PDO again appears it could be a player in the change in direction of the temperature anomaly around 1958. But when the PDO goes negative around 1954, the temperature anomaly appears to be minimally affected.

The 15 years commencing in 1980 warmed at a rate fairly close to the rate we have seen over the 21st Century: .07C per decade versus .06C (Von Storch). From 1990 to 1995 the anomaly is actually negative. So 15 years of a rate not at all unlike the 21st Century: slope = 0.00729652 per year versus 0.00624414 per year.

I’m just an amateur, but I do not see how people like Judith Curry can seriously say things implying natural variation is currently dominating AGW. If anything, the observation appears to be that natural variation is waning in its ability to change the direction of the global temperature anomaly as it has not summoned enough muscle to do so significantly since around 1940. That was the year my father had no idea he was about to become a highly decorated member of The Greatest Generation.

Before J-NG wrote his post about the possibility of record hottest year with 12 months of ENSO neutral, I was thinking we are on the verge of that happening. 2013 is all La Nina leaning ENSO so far, and it is currently a top-5 year. This is just another sign natural variation is waning in its ability to change the direction of the temperature anomaly in a significant negative direction for a prolonged length of time.

I don’t know what numerology is, and I understand these graphs are simplistic, but I ask of natural variation, where’s the beef?

JCH hi

Pythagoras is considered father of numerology, science of numbers, as astrology was science of stars. However, both terms have lost the original meaning and are now used as derogatory terms.

I am not bothered by either attribute or for that matter being referred to as denialist (see WHT’s post above).

There are solar and terrestrial data which contain similar or identical past trends as those found in the global temperature data. Since no solid physical mechanism is clear to provide the ‘missing’ link, those who are convinced that they know everything that needs to be known, refer to those types of calculations as numerology, and that is fine by me.

As far as 2013 is concerned, most of the ordinary people and that includes myself, are selfishly only seriously concerned by climate change in their own back yard. Due to past circumstances, I happen to live in London UK, here we have longest and most studied local temperature record referred to as the CET.

2013 for the CET area was for the most of the year noticeably colder than the past 20 year average (only the summer was warmer than the average to everyone’s delight )

http://www.vukcevic.talktalk.net/CET-dMm.htm

so it is far from being warmest on the record.

Where is the beef in the natural variability?

Difficult to tell, we just have to wait and see. If my calculations prove to be correct then ‘the beef’ will show itself, in which case most of the currently promoted climate models may prove to be closer to numerology than their authors would care to admit.

On personal note, something in common, in 1943 my dad escaped Nazi’s firing squad, but alas didn’t get a medal for his 4 years resistance activities, since didn’t much care for the victorious forces regime either, fortunately he lived long enough to see its demise.

Happy Xmas & n.y.

Another piece of the jigsaw puzzle is van Loon and Meehl’s analysis

H. van Loon and G. A. Meehl, “Interactions between externally-forced climate signals from sunspot peaks and the internally-generated Pacific Decadal and North Atlantic Oscillations,” Geophys. Res. Lett., p. 2013GL058670, Dec. 2013.

Small pieces will help solve the puzzle at the expense of information complexity.

“However, the temperatures (at least according to the data of Cowtan & Way) are within the range which is spanned by 90% of the models. So there is no evidence for model errors here.”

No, they are not. Cowtan and Way is not measured data. The recent HadCrut4 values lie below the 5-95% model range. Therefore greater than 95% of CMIP models are now overpredicting temperatures, a mere handful of years into their projections, no less. To make this claim directly in front of a figure (6) that clearly contradicts it is bizarrely inaccurate, to phrase it politely.

[Response: Please read this. – gavin]

In addition to the underestimate in HadCRUT4 indicated by Cowtan & Way, when examining some of its use in reports and papers, I find some students seem to misunderstand the degree to which, and the manner in which, HadCRUT4 ensembles represent surface temperature variability. To really get at that variability, the covariance estimates published by HadCRUT4 need to be used. I have seen people expect to find such variability in the ensemble ranges themselves, even to the point of resampling from them and expecting to capture the underlying variation. This cannot work and, without a significant correction based upon covariance, will understate variability and so torpedo the credibility of inferences that these temperatures are not consistent with predictions, projections, or models.

I’m hoping to finish a write-up on a specific case of this for arXiv.org release in the next couple of weeks.

I am very interested in the Subject. Retired IBM employee Electrical engineering.

Now at Olli at the University of Arizona. Moderated a class in Energy and Climate Change.

Want to learn about Oceans – Earth and its processes with History and current understanding of ICE AGE.

azizra2@msn.com

Are all climate models taking account of the new value for TSI of 1361.5W/sq.m? This is down 4.5W/sq.m from the previously accepted value of 1366W/sq.m. See the SORCE website. (Kopp & Lean, GRL, 38, L01706, 2011)

This equates to a reduction of solar radiation at TOA of about 1.1W/sq.m from what was previously used in models of warming imbalance. If a constant albedo portion (about 30% reflected) is maintained then the 70% incoming radiation portion is reduced by about 0.77W/sq.m.

This is pretty significant when the postulated warming imbalance is anywhere from 0.6 to 0.9W/sq.m.
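The arithmetic behind these figures can be reproduced with a short sketch. It assumes only the numbers quoted in this comment (the two TSI values and a 30% albedo) plus the standard geometric factor of 4 between the solar constant and the global-mean insolation; the function and variable names are made up for illustration.

```python
# Values quoted in the comment above.
TSI_OLD = 1366.0   # W/m2, previously accepted total solar irradiance
TSI_NEW = 1361.5   # W/m2, revised value (Kopp & Lean 2011)
ALBEDO = 0.30      # assumed constant reflected fraction, as in the comment


def global_mean_insolation(tsi):
    """Average TOA insolation: a sphere has 4x the area of its cross-section."""
    return tsi / 4.0


def absorbed_shortwave(tsi, albedo=ALBEDO):
    """Portion of the global-mean insolation that is not reflected."""
    return global_mean_insolation(tsi) * (1.0 - albedo)


delta_toa = global_mean_insolation(TSI_OLD) - global_mean_insolation(TSI_NEW)
delta_absorbed = absorbed_shortwave(TSI_OLD) - absorbed_shortwave(TSI_NEW)

print(f"Change in global-mean TOA insolation: {delta_toa:.3f} W/m2")
print(f"Change in absorbed shortwave:         {delta_absorbed:.3f} W/m2")
```

Without intermediate rounding this gives about 1.13 W/m2 at TOA and about 0.79 W/m2 absorbed; the 1.1 and 0.77 quoted above come from rounding the first figure before applying the 70% factor.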

How are the latest incoming and outgoing radiation numbers being adjusted to maintain this imbalance with the reduced TSI?

[Response: This has much less impact than you might think because the models are calibrated with respect to the TSI number. This is a different issue to when the TSI changes after the models have been frozen. See Rind et al (in press) for an exploration of exactly this point. – gavin]

Gavin,

I had a look at the Rind et al extract thus: quote “The results indicate that by altering cloud cover the model properly compensates for the different absolute solar irradiance values on a global level when simulating both the pre-industrial and doubled CO2 climates” endquote

Am I to assume this to mean that the albedo number has been altered to produce the desired incoming solar radiation?

If so we are back to the Hansen story where the imbalance number is theorised and not actually measured, and then ‘corrections’ are made to actual observations to preserve the theory.

[Response: Huh? This has nothing to do with Hansen (though I don’t get your reference in any case). Because models are not perfect calculations from first principles, there are always a number of relatively unconstrained parameters that can be varied as part of the model calibration. One target to get right is the global albedo and the fact that the pre-industrial atmospheric runs need to be in quasi-equilibrium. If you have a model that is calibrated with a TSI at 1365 W/m2, those parameters have been set to give a reasonable albedo and TOA radiative balance. Now, change the TSI to 1361 W/m2 – the model will now be out of balance by about 0.7 W/m2. This will be fixed via a new calibration (whereby there will be a little more high cloud and a little less low cloud) in order to get a new equilibrium. Once these calibrations have been done, they are fixed for all the perturbation runs (like the 20th Century hindcasts, RCPs and paleo runs etc.). This has nothing to do with the imbalance that you get from increasing GHGs since it is exactly how the climate responds to that that you are trying to understand. Basic point – calibrations come before experiments and once the calibration is fixed it doesn’t change during any experiment. – gavin]

“This has nothing to do with the imbalance that you get from increasing GHGs since it is exactly how the climate responds to that that you are trying to understand.”

Well this is very interesting because how the climate responds to increasing GHGs is also a function of clouds and TSI. The TOA altitude will change, changing the effective radiation temperature. Calibration to an imperfect TSI will achieve an imperfect response to GHG perturbation. In at least one way I can think of, a lower TSI would actually lead to higher sensitivity to GHGs, though I have no idea how the different mechanisms ledger out. I am interested in the answer to Ken’s question: are climate models taking account of (calibrated to) the value of 1361.5 W/m2?

[Response: The CMIP5 models were generally calibrated to 1365 W/m2 or thereabouts. There might be some small variation. I expect that models developed now will use 1361 W/m2. – gavin]

Fred #33, just another virtanen to waste your time

If I take the second plot published, and isolate the period after 2013 to 2100, it looks pretty insignificant. How anyone can add this exponential increase to it is beyond me. They might as well add an exponential decrease – it would be just as valid.

R. James, Dude, what the hell are you talking about?

> How anyone can add this exponential increase to it is beyond me

They don’t show you the amount of fossil fuel being burned in the future; each line reflects a different set of assumptions about that.

To get the exponential decrease you imagine could be equally possible, you need to provide a scenario with a vast rapid decrease in CO2 in the atmosphere. Other than the science fiction — a massive outbreak of replicating green goo — nobody has that yet.

Gavin, thanks for your responses at 74 and 75.

I had a look for an updated Trenberth energy balance diagram using a TSI of 1361.5 and could not find one.

Does such a revision exist? Last time I looked we were still not accurately measuring the warming imbalance, so therefore a more accurate albeit reduced TSI measurement must drive adjustments to the assumed albedo reflection and/or OLR.

Are these adjustments producing Trenberth’s 0.9W/sq.m imbalance or Hansen’s 0.6W/sq.m imbalance?

[Response: The imbalance is inferred from heat content changes (ocean and elsewhere). It therefore has nothing to do with the measurements of TOA radiation – which are still not accurate enough to constrain it directly. The change in the energy balance diagram is only 0.7W/m2 in the absorbed SW, so it really doesn’t make that much difference. The Stephens et al (2012) picture is a more recent update though. – gavin]

Fred Moolten,

To belatedly pick up this thread again…

the comparison I was making (and has been made previously by others, including Stefan, Trenberth and more, apparently supported by observations) is that a failure of the surface to warm due to a La Nina-like process will increase energy accumulation by reducing OLR relative to the OLR that would have been produced by a warmer surface.

I think what people have said is that La Nina states tend to enhance energy accumulation at depth instead of near the surface. That means a situation where a greater than normal fraction of an extant imbalance will be sequestered in the oceans, reducing the planet’s immediate ability to diminish the imbalance via Planck response, thereby largely sustaining that extant imbalance.

What I’m saying is that the magnitude of imbalance is heavily dependent on feedbacks to surface warming. Therefore an “external” (in this case I mean unforced variability, somewhat confusingly) cause of surface temperature stagnation can diminish the growth of an imbalance compared to what would happen during “normal” conditions.

Looking at model outputs (correlating global average thermosteric sea level with global average surface temperature) suggests, as we’ve been saying, that the two factors act against each other but there appears to be a threshold, related to climate sensitivity, where one dominates. With the caveat that I only had a small sample size, a couple of high sensitivity models clearly correlated periods of enhanced (above trend) near-surface warming with enhanced ocean heat accumulation, and vice versa. In a couple of low-end sensitivity models the picture was less clear. Some periods appeared to show anti-correlation, some correlation.

I think one key factor here is that most models appear to have stronger water vapour and cloud feedbacks over ocean areas (which makes sense) whereas Planck response tends to be largest over land areas, particularly during transient warming. That seems to be why primarily oceanic variability can have a greater effect on water vapour and cloud feedbacks than on the Planck response, at least temporarily.

I have a sincere question. I am told that human CO2 emissions became high enough to start changing the climate as a result of post-war industrialization, so starting in about 1950. I am told that we must keep the total temperature rise, natural and human induced, below 2 degrees C. When I look at the graphs above they show about 0.45 degrees C warming from 1950 to now, or about 0.0071 degrees per year. At that rate of warming, which is over a period which I presume is long enough to average out natural fluctuations, it will take over 280 years to get to 2 degrees of warming. What am I missing?
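For reference, the linear extrapolation in this question can be written out as a small sketch. It uses only the figures quoted in the question; the end year of 2013 is an assumption (the question just says "now"), and the names are illustrative. It reproduces the straight-line arithmetic only and says nothing about whether a constant rate is a reasonable expectation.

```python
# Figures quoted in the question.
WARMING_SINCE_1950 = 0.45  # degrees C
TARGET = 2.0               # degrees C
START_YEAR = 1950
END_YEAR = 2013            # assumption: "now" is taken to be 2013


def linear_rate(delta_t, start_year, end_year):
    """Average warming rate in degrees C per year over the interval."""
    return delta_t / (end_year - start_year)


def years_to_reach(target, rate):
    """Years needed to accumulate `target` degrees at a constant rate."""
    return target / rate


rate = linear_rate(WARMING_SINCE_1950, START_YEAR, END_YEAR)
years_total = years_to_reach(TARGET, rate)

print(f"Rate: {rate:.4f} degrees C per year")
print(f"Years to reach {TARGET} degrees C at that rate: {years_total:.0f}")
```

With these inputs the rate comes out at about 0.0071 degrees per year and the 2-degree mark at roughly 280 years, matching the numbers in the question.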

gordon johnson – There are a few things you need to take into account.

The acceleration of industrialisation since 1950 also brought an acceleration of aerosol emissions which have a net cooling influence on climate and have therefore counteracted some of the expected warming due to well-mixed greenhouse gases (primarily CO2, methane, N2O, CFC/HCFC variants).

In model simulations which incorporate only historical changes in well-mixed greenhouse gases you’ll typically see about twice as much warming from pre-industrial to present as in simulations which include all historical factors, with aerosols being the main factor in that difference.

It’s anticipated in all future scenarios (I think) that nations will bring in clean air legislation which will reduce aerosol influence. Whereas in the past aerosols have tended to counteract WMGHG warming, this future reduction will enhance warming rates.

———————————————-

There are also natural buffering effects in Earth’s climate system which delay the full response in terms of surface temperature increase. The energy imbalance which has been discussed here can be thought of as a manifestation of this buffering.

The year-on-year forcing increase due to WMGHGs is only about 0.05W/m2 whereas the current imbalance appears to be at least 0.5W/m2 so you can see the energy accumulation is not instantly translated to surface temperature increase.

Idealised model simulations in which CO2 concentration is increased by 1% per year can provide some insight into how this can affect rates of warming over time. The year-on-year CO2 forcing increase is almost exactly constant in this setup but the rate of warming for the final 30 years in a 70-year run is typically about 50% greater than the rate for the first 30 years.
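To see why the forcing increment is nearly constant in a 1%-per-year run, here is a minimal sketch. It assumes the widely used simplified expression for CO2 forcing, F = 5.35 ln(C/C0) W/m2, which is not stated in this comment; the function names are illustrative.

```python
import math

ALPHA = 5.35           # W/m2, coefficient of the simplified CO2 forcing expression (assumption)
GROWTH_PER_YEAR = 0.01  # 1% compound increase in CO2 concentration per year
YEARS = 70


def concentration_ratio(year):
    """CO2 concentration relative to the starting value after `year` years."""
    return (1.0 + GROWTH_PER_YEAR) ** year


def forcing(year):
    """Radiative forcing relative to year 0, using F = ALPHA * ln(C/C0)."""
    return ALPHA * math.log(concentration_ratio(year))


# Year-on-year forcing increments over the whole run.
increments = [forcing(y + 1) - forcing(y) for y in range(YEARS)]

print(f"Increment in year 1:  {increments[0]:.4f} W/m2")
print(f"Increment in year 70: {increments[-1]:.4f} W/m2")
print(f"CO2 ratio after {YEARS} years: {concentration_ratio(YEARS):.3f}")
print(f"Total forcing after {YEARS} years: {forcing(YEARS):.2f} W/m2")
```

Because the forcing is logarithmic in concentration, a fixed percentage increase adds the same amount each year, roughly 0.05 W/m2, and 70 years of 1% growth comes to about a doubling of CO2. Any change in the warming rate during such a run therefore reflects the system's delayed response, not a change in the forcing trend.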