Readers will be aware of the paper by Shaun Marcott and colleagues, published a couple of weeks ago in the journal Science. That paper sought to extend the global temperature record back over the entire Holocene period, i.e. just over 11 kyr back in time, something that had not really been attempted before. The paper got a fair amount of media coverage (see e.g. this article by Justin Gillis in the New York Times). Since then, a number of accusations from the usual suspects have been leveled against the authors and their study, most of them characteristically misleading. We are pleased to provide the authors’ response below. Our view is that the results of the paper will stand the test of time, particularly regarding the small global temperature variations in the Holocene. If anything, early Holocene warmth might be overestimated in this study.

Update: Tamino has three excellent posts in which he shows why the Holocene reconstruction is very unlikely to be affected by possible discrepancies in the most recent (20th century) part of the record. The figure showing Holocene changes by latitude is particularly informative.

____________________________________________

Summary and FAQs related to the study by Marcott et al. (2013, Science)

Prepared by Shaun A. Marcott, Jeremy D. Shakun, Peter U. Clark, and Alan C. Mix

Primary results of study

Global Temperature Reconstruction: We combined published proxy temperature records from across the globe to develop regional and global temperature reconstructions spanning the past ~11,300 years with a resolution >300 yr; previous reconstructions of global and hemispheric temperatures primarily spanned the last one to two thousand years. To our knowledge, our work is the first attempt to quantify global temperature for the entire Holocene.

Structure of the Global and Regional Temperature Curves: We find that global temperature was relatively warm from approximately 10,000 to 5,000 years before present. Following this interval, global temperature decreased by approximately 0.7°C, culminating in the coolest temperatures of the Holocene around 200 years before present during what is commonly referred to as the Little Ice Age. The largest cooling occurred in the Northern Hemisphere.

Holocene Temperature Distribution: Based on comparison of the instrumental record of global temperature change with the distribution of Holocene global average temperatures from our paleo-reconstruction, we find that the decade 2000-2009 has probably not exceeded the warmest temperatures of the early Holocene, but is warmer than ~75% of all temperatures during the Holocene. In contrast, the decade 1900-1909 was cooler than ~95% of the Holocene. Therefore, we conclude that global temperature has risen from near the coldest to the warmest levels of the Holocene in the past century. Further, we compare the Holocene paleotemperature distribution with published temperature projections for 2100 CE, and find that these projections exceed the range of Holocene global average temperatures under all plausible emissions scenarios.

Frequently Asked Questions and Answers

Q: What is global temperature?

A: Global average surface temperature is perhaps the single most representative measure of a planet’s climate since it reflects how much heat is at the planet’s surface. Local temperature changes can differ markedly from the global average. One reason for this is that heat moves around with the winds and ocean currents, warming one region while cooling another, but these regional effects might not cause a significant change in the global average temperature. A second reason is that local feedbacks, such as changes in snow or vegetation cover that affect how a region reflects or absorbs sunlight, can cause large local temperature changes that are not mirrored in the global average. We therefore cannot rely on any single location as being representative of global temperature change. This is why our study includes data from around the world.

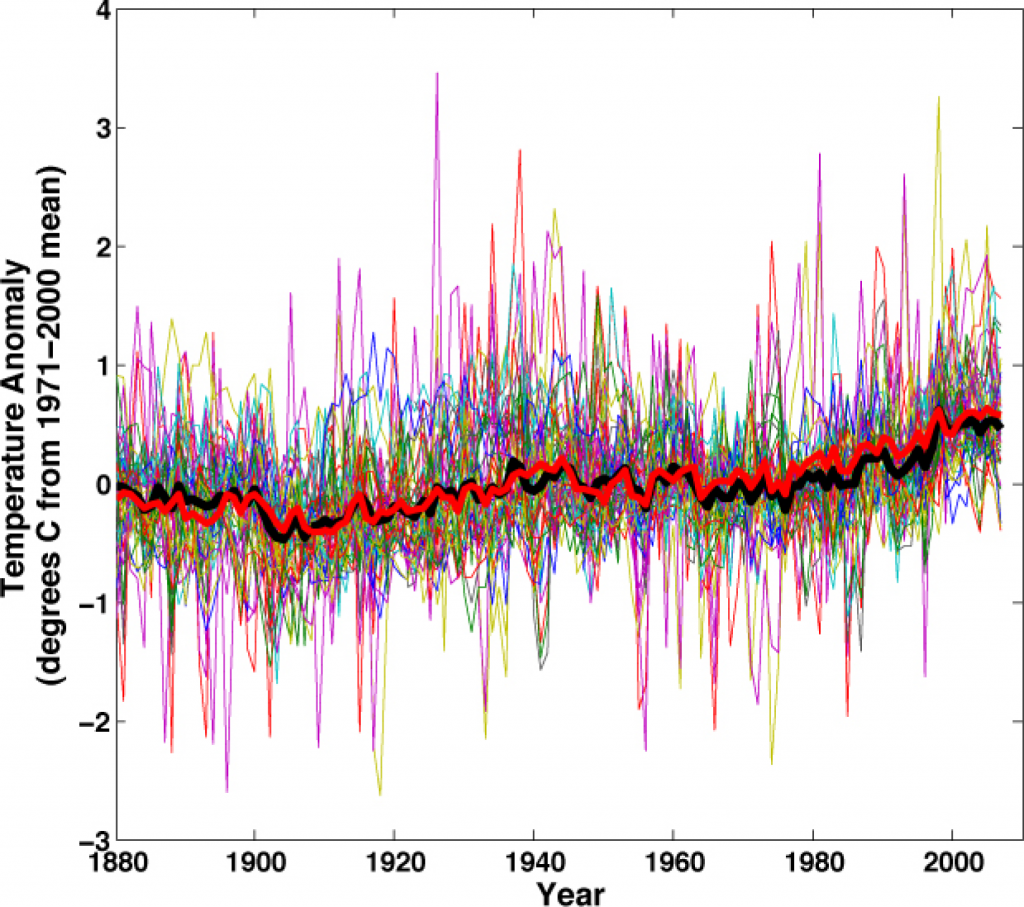

We can illustrate this concept with temperature anomaly data based on instrumental records for the past 130 years from the National Climatic Data Center (http://www.ncdc.noaa.gov/cmb-faq/anomalies.php#anomalies). Over this time interval, an increase in the global average temperature is documented by thermometer records, rising sea levels, retreating glaciers, and increasing ocean heat content, among other indicators. Yet if we plot temperature anomaly data since 1880 at the same locations as the 73 sites used in our paleotemperature study, we see that the data are scattered and the trend is unclear. When these same 73 historical temperature records are averaged together, we see a clear warming signal that is very similar to the global average documented from many more sites (Figure 1). Averaging reduces local noise and provides a clearer perspective on global climate.

Figure 1: Temperature anomaly data (thin colored lines) at the same locations as the 73 paleotemperature records used in Marcott et al. (2013), the average of these 73 temperature anomaly series (bold black line), and the global average temperature from the National Climatic Data Center blended land and ocean dataset (bold red line) (data from Smith et al., 2008).
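The point of Figure 1, that averaging many noisy local records brings out a shared signal, can be sketched with synthetic data. This minimal example assumes every one of 73 sites sees the same linear warming plus independent Gaussian noise; the trend and noise level are made-up values, not NCDC data. Real local anomalies are spatially correlated, so in practice the noise shrinks more slowly than the 1/sqrt(N) seen here.

```python
import numpy as np

# Hypothetical setup: a shared "global" signal plus independent local noise at each site.
rng = np.random.default_rng(0)
years = np.arange(1880, 2011)
n_sites = 73
global_signal = 0.007 * (years - years[0])                      # assumed linear warming, deg C
local_noise = rng.normal(0.0, 1.0, size=(n_sites, years.size))  # assumed 1 deg C local scatter
site_series = global_signal + local_noise                       # shape (73, 131) via broadcasting

# Averaging across sites (the analogue of the bold black line in Figure 1)
site_average = site_series.mean(axis=0)

# Scatter about the true signal: one site versus the 73-site average
single_site_rmse = np.sqrt(np.mean((site_series[0] - global_signal) ** 2))
average_rmse = np.sqrt(np.mean((site_average - global_signal) ** 2))
print(f"single site RMSE: {single_site_rmse:.2f} C, 73-site average RMSE: {average_rmse:.2f} C")
```

With independent noise the average's error is roughly the single-site error divided by sqrt(73), i.e. close to a tenth of it.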

New Scientist magazine has an “app” that allows one to point-and-plot instrumental temperatures for any spot on the map to see how local temperature changes compare to the global average over the past century (http://warmingworld.newscientistapps.com/).

Q: How does one go about reconstructing temperatures in the past?

A: Changes in Earth’s temperature for the last ~160 years are determined from instrumental data, such as thermometers on the ground or, for more recent times, satellites looking down from space. Beyond about 160 years ago, we must turn to other methods that indirectly record temperature (called “proxies”) for reconstructing past temperatures. For example, tree rings, calibrated to temperature over the instrumental era, provide one way of determining temperatures in the past, but few trees extend beyond the past few centuries or millennia. To develop a longer record, we used primarily marine and terrestrial fossils, biomolecules, or isotopes that were recovered from ocean and lake sediments and ice cores. All of these proxies have been independently calibrated to provide reliable estimates of temperature.

Q: Did you collect and measure the ocean and land temperature data from all 73 sites?

A: No. All of the datasets were previously generated and published in peer-reviewed scientific literature by other researchers over the past 15 years. Most of these datasets are freely available at several World Data Centers (see links below); those not archived as such were graciously made available to us by the original authors. We assembled all these published data into an easily used format, and in some cases updated the calibration of older data using modern state-of-the-art calibrations. We made all the data available for download free-of-charge from the Science web site (see link below). Our primary contribution was to compile these local temperature records into “stacks” that reflect larger-scale changes in regional and global temperatures. We used methods that carefully consider potential sources of uncertainty in the data, including uncertainty in proxy calibration and in dating of the samples (see step-by-step methods below).

NOAA National Climatic Data Center: http://www.ncdc.noaa.gov/paleo/paleo.html

PANGAEA: http://www.pangaea.de/

Holocene Datasets: http://www.sciencemag.org/content/339/6124/1198/suppl/DC1

Q: Why use marine and terrestrial archives to reconstruct global temperature when we have the ice cores from Greenland and Antarctica?

A: While we do use these ice cores in our study, they are limited to the polar regions and so give only a local or regional picture of temperature changes. Just as it would not be reasonable to use the recent instrumental temperature history from Greenland (for example) as being representative of the planet as a whole, one would similarly not use just a few ice cores from polar locations to reconstruct past temperature change for the entire planet.

Q: Why only look at temperatures over the last 11,300 years?

A: Our work was the second half of a two-part study assessing global temperature variations since the peak of the last Ice Age about 22,000 years ago. The first part reconstructed global temperature over the last deglaciation (22,000 to 11,300 years ago) (Shakun et al., 2012, Nature 484, 49-55; see also http://www.people.fas.harvard.edu/~shakun/FAQs.html), while our study focused on the current interglacial warm period (last 11,300 years), which is roughly the time span of developed human civilizations.

Q: Is your paleotemperature reconstruction consistent with reconstructions based on the tree-ring data and other archives of the past 2,000 years?

A: Yes, in the parts where our reconstruction contains sufficient data to be robust, and acknowledging its inherent smoothing. For example, our global temperature reconstruction from ~1500 to 100 years ago is indistinguishable (within its statistical uncertainty) from the Mann et al. (2008) reconstruction, which included many tree-ring based data. Both reconstructions document a cooling trend from a relatively warm interval (~1500 to 1000 years ago) to a cold interval (~500 to 100 years ago, approximately equivalent to the Little Ice Age).

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Our global paleotemperature reconstruction includes a so-called “uptick” in temperatures during the 20th century. However, in the paper we make the point that this particular feature is of shorter duration than the inherent smoothing in our statistical averaging procedure, and that it is based on only a few available paleo-reconstructions of the type we used. Thus, the 20th-century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions. Our primary conclusions are based on a comparison of the longer-term paleotemperature changes from our reconstruction with the well-documented temperature changes that have occurred over the last century, as documented by the instrumental record. Although not part of our study, high-resolution paleoclimate data from the past ~130 years have been compiled from various geological archives, and confirm the general features of the warming trend over this time interval (Anderson, D.M. et al., 2013, Geophysical Research Letters, v. 40, p. 189-193; http://www.agu.org/journals/pip/gl/2012GL054271-pip.pdf).

Q: Is the rate of global temperature rise over the last 100 years faster than at any time during the past 11,300 years?

A: Our study did not directly address this question because the paleotemperature records used in our study have a temporal resolution of ~120 years on average, which precludes us from examining variations in rates of change occurring within a century. Other factors also contribute to smoothing the proxy temperature signals contained in many of the records we used, such as organisms burrowing through deep-sea mud, and chronological uncertainties in the proxy records that tend to smooth the signals when compositing them into a globally averaged reconstruction. We showed that no temperature variability is preserved in our reconstruction at periods shorter than 300 years, 50% is preserved at 1000-year time scales, and nearly all is preserved at periods of 2000 years and longer. Our Monte Carlo analysis accounts for these sources of uncertainty to yield a robust (albeit smoothed) global record. Any small “upticks” or “downticks” in temperature that last less than several hundred years in our compilation of paleoclimate data are probably not robust, as stated in the paper.
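The idea that smoothing removes short cycles while passing long ones can be illustrated with a simple filter. This sketch assumes a centered 300-year running mean (boxcar), which is NOT the transfer function of the paper's Monte Carlo procedure; the paper's figures (nothing below 300 years, 50% at 1000 years) came from synthetic-data tests of its own method, so the numbers printed here differ. The function name `retained_amplitude` is hypothetical.

```python
import numpy as np

def retained_amplitude(period_yr: float, window_yr: float, dt: float = 1.0) -> float:
    """Fraction of a unit-amplitude sinusoid that survives a centered running mean."""
    t = np.arange(0.0, 20000.0, dt)
    signal = np.sin(2.0 * np.pi * t / period_yr)
    n = int(round(window_yr / dt))
    # 'valid' mode keeps only points where the full window fits inside the series
    smoothed = np.convolve(signal, np.ones(n) / n, mode="valid")
    return float(np.max(np.abs(smoothed)))

for period in (300, 1000, 2000, 5000):
    print(period, round(retained_amplitude(period, window_yr=300.0), 2))
```

A 300-year cycle averages to zero over a 300-year window, while a 5000-year cycle passes almost untouched. A boxcar keeps roughly 86% of a 1000-year cycle, more than the paper's procedure does, which is one reason the paper estimated attenuation empirically rather than assuming a filter shape.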

Q: How do you compare the Holocene temperatures to the modern instrumental data?

A: One of our primary conclusions is based on Figure 3 of the paper, which compares the magnitude of global warming seen in the instrumental temperature record of the past century to the full range of temperature variability over the entire Holocene based on our reconstruction. We conclude that the average temperature for 1900-1909 CE in the instrumental record was cooler than ~95% of the Holocene range of global temperatures, while the average temperature for 2000-2009 CE in the instrumental record was warmer than ~75% of the Holocene distribution. As described in the paper and its supplementary material, Figure 3 provides a reasonable assessment of the full range of Holocene global average temperatures, including an accounting for high-frequency changes that might have been damped out by the averaging procedure.
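The comparison behind the ~95% and ~75% figures amounts to asking what fraction of the pooled Holocene anomalies lies below a given decadal mean. The sketch below assumes both series are already on the same anomaly baseline (the paper references everything to 1961-1990 CE); the normal distribution used here is made up for illustration and is not the paper's histogram.

```python
import numpy as np

def fraction_colder(holocene_anomalies, decade_mean: float) -> float:
    """Fraction of pooled Holocene anomalies (deg C) colder than a given decadal mean."""
    samples = np.asarray(holocene_anomalies, dtype=float)
    return float(np.mean(samples < decade_mean))

# Hypothetical pooled distribution standing in for the Figure 3 histogram (NOT the paper's data)
rng = np.random.default_rng(1)
pooled = rng.normal(loc=0.0, scale=0.3, size=100_000)
print(fraction_colder(pooled, 0.2))
```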

Q: What about temperature projections for the future?

A: Our study used projections of future temperature published in the Fourth Assessment Report of the Intergovernmental Panel on Climate Change in 2007, which suggest that global temperature is likely to rise 1.1-6.4°C by the end of the century (relative to the late 20th century), depending on the magnitude of anthropogenic greenhouse gas emissions and the sensitivity of the climate to those emissions. Figure 3 in the paper compares these published projections for various emission scenarios to our assessment of the full distribution of Holocene temperatures. For example, a middle-of-the-road emission scenario (SRES A1B) projects global mean temperatures that will be well above the Holocene average by the year 2100 CE. Indeed, if any of the six IPCC emission scenarios shown in Figure 3 is followed, future global average temperatures, as projected by modeling studies, will likely be well outside anything the Earth has experienced in the last 11,300 years.

Technical Questions and Answers:

Q. Why did you revise the age models of many of the published records that were used in your study?

A. The majority of the published records used in our study (93%) based their ages on radiocarbon dates. Radiocarbon is a naturally occurring isotope that is produced mainly in the upper atmosphere by cosmic rays. This form of carbon is then distributed around the world and incorporated into living things. Dating is based on the amount of this carbon left after radioactive decay. It has been known for several decades that radiocarbon years differ from true “calendar” years because the amount of radiocarbon produced in the atmosphere changes over time, as does the rate at which carbon is exchanged between the ocean, atmosphere, and biosphere. This yields a bias in radiocarbon dates that must be corrected. Scientists have been able to determine the correction between radiocarbon years and true calendar years by dating samples of known age (such as tree samples dated by counting annual rings) and comparing the apparent radiocarbon age to the true age. Through many careful measurements of this sort, they have demonstrated that, in general, radiocarbon years become progressively “younger” than calendar years as one goes back through time. For example, the ring of a tree known to have grown 5700 years ago will have a radiocarbon age of ~5000 years, whereas one known to have grown 12,800 years ago will have a radiocarbon age of ~11,000 years.
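The decay part of the calculation is simple. By international convention, a conventional radiocarbon age is computed from the measured fraction of modern 14C using the Libby mean-life of 8033 years (half-life 5568 years). The sketch below shows only that convention; it does not correct for reservoir effects and does not perform the calibration to calendar years, which requires a curve such as INTCAL09 or MARINE09 and is what Calib 6.0.1 does. The function name is hypothetical.

```python
import math

LIBBY_MEAN_LIFE_YR = 8033.0  # conventional mean-life; equivalent to the Libby half-life of 5568 yr

def conventional_radiocarbon_age(fraction_modern: float) -> float:
    """Uncalibrated radiocarbon age (14C yr BP) from the 14C fraction relative to the modern standard."""
    if not 0.0 < fraction_modern <= 1.0:
        raise ValueError("fraction_modern must be in (0, 1] for pre-bomb samples")
    return -LIBBY_MEAN_LIFE_YR * math.log(fraction_modern)

print(round(conventional_radiocarbon_age(0.5)))  # 5568
```

Because the result is in radiocarbon years rather than calendar years, it is exactly the kind of number that must then be converted with a calibration curve, as described next.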

For our paleotemperature study, all radiocarbon ages needed to be converted (or calibrated) to calendar ages in a consistent manner. Calibration methods have been improved and refined over the past few decades. Because our compilation included data published many years ago, some of the original publications used radiocarbon calibration systems that are now obsolete. To provide a consistent chronology based on the best current information, we thus recalibrated all published radiocarbon ages with Calib 6.0.1 software (using the databases INTCAL09 for land samples or MARINE09 for ocean samples) and its state-of-the-art protocol for site-specific locations and materials. This software is freely available for online use at http://calib.qub.ac.uk/calib/.

By convention, radiocarbon dates are recorded as years before present (BP). BP is universally defined as years before 1950 CE, because after that time the Earth’s atmosphere became contaminated with artificial radiocarbon produced as a by-product of nuclear bomb tests. As a result, radiocarbon dates on intervals younger than 1950 are not useful for providing chronologic control in our study.

After recalibrating all radiocarbon control points to make them internally consistent and in compliance with the scientific state-of-the-art understanding, we constructed age models for each sediment core based on the depth of each of the calibrated radiocarbon ages, assuming linear interpolation between dated levels in the core, and statistical analysis that quantifies the uncertainty of ages between the dated levels. In geologic studies it is quite common that the youngest surface of a sediment core is not dated by radiocarbon, either because the top has been disturbed by living organisms or because it was disturbed during the coring process. Moreover, within the past hundred years before 1950 CE, radiocarbon dates are not very precise chronometers, because changes in the radiocarbon production rate have, by coincidence, roughly compensated for radioactive decay. For these reasons, and unless otherwise indicated, we followed the common practice of assuming an age of 0 BP for the marine core tops.
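A linear age-depth model with a core top assigned 0 BP can be sketched in a few lines. The depths and calibrated ages below are invented for illustration, and the sketch omits the uncertainty analysis between dated levels that the authors describe; `age_at_depth` is a hypothetical helper name.

```python
import numpy as np

# Hypothetical core: depths (cm) and calibrated ages (yr BP) of radiocarbon-dated levels
dated_depth_cm = np.array([12.0, 85.0, 160.0, 240.0])
dated_age_bp = np.array([1450.0, 4200.0, 7100.0, 10300.0])

# Core top (0 cm) assumed to be 0 BP, i.e. 1950 CE, following the practice described above
control_depth = np.concatenate(([0.0], dated_depth_cm))
control_age = np.concatenate(([0.0], dated_age_bp))

def age_at_depth(depth_cm):
    """Linearly interpolate age between control points (np.interp needs increasing depths)."""
    return np.interp(depth_cm, control_depth, control_age)

print(age_at_depth(np.array([0.0, 6.0, 50.0, 200.0])))
```

Note that the core-top assumption only affects ages above the shallowest dated level (here, the top 12 cm); ages deeper in the core depend on the radiocarbon control points alone.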

Q: Are the proxy records seasonally biased?

A: Maybe. We cannot exclude the possibility that some of the paleotemperature records are biased toward a particular season rather than recording true annual mean temperatures. For instance, high-latitude proxies based on short-lived plants or other organisms may record the temperature during the warmer and sunnier summer months when the organisms grow most rapidly. As stated in the paper, such an effect could impact our paleo-reconstruction. For example, the long-term cooling in our global paleotemperature reconstruction comes primarily from Northern Hemisphere high-latitude marine records, whereas tropical and Southern Hemisphere trends were considerably smaller. This northern cooling in the paleotemperature data may be a response to a long-term decline in summer insolation associated with variations in the Earth’s orbit, which implies that the paleotemperature proxies here may be biased to the summer season. A summer cooling trend through Holocene time, if driven by orbitally modulated seasonal insolation, might be partially canceled out by winter warming due to the well-known orbitally driven rise in Northern Hemisphere winter insolation through Holocene time. Summer-biased proxies would not record this partial cancellation between the seasons. It is not currently possible to quantify this seasonal effect in the reconstructions. Qualitatively, however, we expect that an unbiased recorder of the annual average would show that the northern latitudes might not have cooled as much as seen in our reconstruction. This implies that the range of Holocene annual-average temperatures might have been smaller in the Northern Hemisphere than the proxy data suggest, making the observed historical temperature averages for 2000-2009 CE, obtained from instrumental records, even more unusual with respect to the full distribution of Holocene global-average temperatures.

Q: What do paleotemperature reconstructions show about the temperature of the last 100 years?

A: Here we elaborate on our short answer to this question above. We concluded in the published paper that “Without filling data gaps, our Standard5×5 reconstruction (Figure 1A) exhibits 0.6°C greater warming over the past ~60 yr B.P. (1890 to 1950 CE) than our equivalent infilled 5° × 5° area-weighted mean stack (Figure 1, C and D). However, considering the temporal resolution of our data set and the small number of records that cover this interval (Figure 1G), this difference is probably not robust.” This statement follows from multiple lines of evidence that are presented in the paper and the supplementary information: (1) the different methods that we tested for generating a reconstruction produce different results in this youngest interval, whereas before this interval, the different methods of calculating the stacks are nearly identical (Figure 1D), (2) the median resolution of the datasets (120 years) is too low to statistically resolve such an event, (3) the smoothing presented in the online supplement results in variations shorter than 300 yrs not being interpretable, and (4) the small number of datasets that extend into the 20th century (Figure 1G) is insufficient to reconstruct a statistically robust global signal, showing that there is a considerable reduction in the correlation of Monte Carlo reconstructions with a known (synthetic) input global signal when the number of data series in the reconstruction is this small (Figure S13).
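Point (4) can be illustrated with a toy version of the synthetic test: feed a known signal plus independent noise into stacks of different sizes and see how well the average tracks the input. This is a simplified analogue, NOT the paper's Figure S13 experiment; the sinusoidal signal, the white-noise level, and the function name are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(7)
t = np.arange(500)
true_signal = 0.5 * np.sin(2.0 * np.pi * t / 250.0)  # hypothetical known "global" input signal

def stack_correlation(n_records: int, noise_sd: float = 0.5, n_trials: int = 200) -> float:
    """Mean correlation between an n-record average and the known input signal."""
    corrs = []
    for _ in range(n_trials):
        # Each synthetic record = true signal + independent white noise (a simplification)
        records = true_signal + rng.normal(0.0, noise_sd, (n_records, t.size))
        corrs.append(np.corrcoef(records.mean(axis=0), true_signal)[0, 1])
    return float(np.mean(corrs))

for n in (3, 10, 73):
    print(n, round(stack_correlation(n), 2))
```

With only a handful of records the stack is dominated by local noise; real proxies also carry dating errors, which degrade the fit further.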

Q: How did you create the Holocene paleotemperature stacks?

A: We followed these steps in creating the Holocene paleotemperature stacks:

1. Compiled 73 medium-to-high resolution calibrated proxy temperature records spanning much or all of the Holocene.

2. Calibrated all radiocarbon ages for consistency using the latest and most precise calibration software (Calib 6.0.1 using INTCAL09 (terrestrial) or MARINE09 (oceanic) and its protocol for the site-specific locations and materials) so that all radiocarbon-based records had a consistent chronology based on the best current information. This procedure updates previously published chronologies, which were based on a variety of now-obsolete and inconsistent calibration methods.

3. Where applicable, recalibrated paleotemperature proxy data based on alkenones and TEX86 using consistent calibration equations specific to each of the proxy types.

4. Used a Monte Carlo analysis to generate 1000 realizations of each proxy record, linearly interpolated to constant time spacing, perturbing them with analytical uncertainties in the age model and temperature estimates, including inflation of age uncertainties between dated intervals. This procedure results in an unbiased assessment of the impact of such uncertainties on the final composite.

5. Referenced each proxy record realization as an anomaly relative to its mean value between 4500 and 5500 years Before Present (the common interval of overlap among all records; Before Present, or BP, is defined by standard practice as time before 1950 CE).

6. Averaged the first realization of each of the 73 records, and then the second realization of each, then the third, the fourth, and so on, to form 1000 realizations of the global or regional temperature stacks.

7. Derived the mean temperature and standard deviation from the 1000 simulations of the global temperature stack.

8. Repeated this procedure using several different area-weighting schemes and data subsets to test the sensitivity of the reconstruction to potential spatial and proxy biases in the dataset.

9. Mean-shifted the global temperature reconstructions to have the same average as the Mann et al. (2008) CRU-EIV temperature reconstruction over the interval 510-1450 years Before Present. Since the CRU-EIV reconstruction is referenced as temperature anomalies from the 1961-1990 CE instrumental mean global temperature, the Holocene reconstructions are now also effectively referenced as anomalies from the 1961-1990 CE mean.

10. Estimated how much higher-frequency (decade-to-century scale) variability is plausibly missing from the Holocene reconstruction by calculating attenuation as a function of frequency in synthetic data processed with the Monte Carlo stacking procedure, and by statistically comparing the amount of temperature variance the global stack contains as a function of frequency to the amount contained in the CRU-EIV reconstruction. Added this missing variability to the Holocene reconstruction as red noise.

11. Pooled all of the Holocene global temperature anomalies into a single histogram, showing the distribution of global temperature anomalies during the Holocene, including the decadal-to-century-scale variability that the Monte Carlo procedure may have smoothed from the record (largely from the accounting for chronologic uncertainties).

12. Compared the histogram of Holocene paleotemperatures to the instrumental global temperature anomalies during the decades 1900-1909 CE and 2000-2009 CE. Determined the fraction of the Holocene temperature anomalies colder than 1900-1909 CE and 2000-2009 CE.

13. Compared global temperature projections for 2100 CE from the Fourth Assessment Report of the Intergovernmental Panel on Climate Change for various emission scenarios to the Holocene temperature distribution.

14. Evaluated the impact of potential sources of uncertainty and smoothing in the Monte-Carlo procedure, as a guide for future experimental design to refine such analyses.
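Steps 4 to 7 can be sketched compactly. This is a simplified illustration under several assumptions: each sample's age is jittered with independent Gaussian noise (the authors instead perturbed dated control points and inflated uncertainties between them), a 20-year grid is assumed, `np.interp` simply holds end values constant outside a record, and the three input records are synthetic. Area weighting (step 8), the mean shift (step 9), and the red-noise correction (step 10) are omitted. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
N_REAL = 1000                                # step 4: realizations per record
grid = np.arange(0.0, 11300.0 + 1.0, 20.0)   # assumed constant 20-yr spacing, yr BP

def realizations(ages, temps, age_sigma, temp_sigma):
    """Step 4 (simplified): perturb ages and temperatures, then interpolate to the grid."""
    out = np.empty((N_REAL, grid.size))
    for i in range(N_REAL):
        # Sorting keeps samples in stratigraphic order after the ages are jittered
        a = np.sort(ages + rng.normal(0.0, age_sigma, ages.size))
        t = temps + rng.normal(0.0, temp_sigma, temps.size)
        out[i] = np.interp(grid, a, t)  # holds end values outside the record (a simplification)
    return out

def to_anomaly(real):
    """Step 5: reference each realization to its own 4500-5500 yr BP mean."""
    window = (grid >= 4500.0) & (grid <= 5500.0)
    return real - real[:, window].mean(axis=1, keepdims=True)

def monte_carlo_stack(records):
    """Steps 6-7: average realization i across records, then take mean and std of the stacks."""
    per_record = np.stack([to_anomaly(realizations(*r)) for r in records])
    stacks = per_record.mean(axis=0)  # shape (N_REAL, grid.size): one stack per realization
    return stacks.mean(axis=0), stacks.std(axis=0)

# Three hypothetical records (ages, temps, 1-sigma age error, 1-sigma temperature error),
# each warmer in the early Holocene and with a different absolute offset
ages = np.arange(0.0, 11400.0, 150.0)
records = [(ages, 0.00006 * ages + offset, 150.0, 0.5) for offset in (10.0, 15.0, 25.0)]
stack_mean, stack_sd = monte_carlo_stack(records)
print(stack_mean[::100].round(2))
```

Converting to anomalies before averaging is what lets records with very different absolute temperatures (offsets of 10, 15, and 25 here) be combined; only their changes through time survive into the stack.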

This is a very clear exposition of the research. As I recall this information was already in the paper and supplementary material but it’s really nice to have it summarised here so clearly.

I do hope certain people will take note of this little dig :) (but by their past behaviours not a chance!):

Just as it would not be reasonable to use the recent instrumental temperature history from Greenland (for example) as being representative of the planet as a whole…

Thanks.

[Response: No one would make such a mistake when, er, presenting “science” to the state legislature of Washington, would they? ;) (Link)]

The format of this response (brief summary plus FAQ addressing questions and criticisms) is especially helpful, very effective.

I think this paper represents a very nice advance in the characterization of Holocene climate; ever since the various papers on cooling trends of the past 2000 years pre-industrial started coming out, I had been yearning for something that covered back to the Altithermal. This kind of paper will be very valuable in refining our understanding of how the climate responds to the precessional cycle, particularly once it becomes clearer whether the reconstructed temperatures are really annual averages, as opposed to biased toward summer.

I do think, however, that some of the commentary in the blogosphere regarding how unprecedented the warming of the past century looks (notably the “wheel chair” graph comparing Marcott et al. with the instrumental record) risks going beyond what can really be concluded from the study. As noted in the FAQ, the time resolution of the reconstruction is approximately a century. Thus, it is not quite fair to compare the reconstruction to instrumental data that are not smoothed to the same time resolution. It is conceivable that there are individual centuries in the Altithermal where the temperature rose as fast as today, and to the same extent or more, but these would not show up in a record smoothed to 100-year time resolution. I think this is very unlikely, but the paper doesn’t strictly rule out the possibility. This remark applies only to the warming of the past 100 years. Where we are going in the next century is so extreme that it would show up even if smoothed down to centennial resolution, I think.

Note also the response in the FAQ concerning Fig. 3, where the authors attempt to back out some information regarding possible high frequency Holocene variability that is damped by the smoothing. I would welcome further discussion regarding the nature and validity of those arguments.

Is the response to criticisms about core-top dating adjustments clear enough to end that particular dispute? The radiocarbon dating calibrations were not at issue.

[Response: I doubt any amount of rational discussion will “end” criticism. In some quarters this goes on and on regardless of the relevance. I’ve yet to see the relevance of this issue, which I think Marcott et al. explain perfectly well, to the conclusions of the paper. The question that seems to be of interest here really is what the sharp rise in temperature in the last century reflects; I think this is addressed well at Tamino’s blog, making it clear that this aspect of the paper has no influence on the subject of the paper, which is the long-term Holocene variations.–eric]

Regarding “spikes” that might have escaped this reconstruction, I think it’s an interesting scientific question to ask:

For a spike like the current one to be missed by Marcott et al.:

a) There needs to be plausible mechanisms for both an upspike like the current, and then a downspike of approximately similar size.

b) Any such mechanism must not be caught by higher-resolution proxies like ice cores.

So the question is: how well can possible jiggles be bounded?

I note that the usual line+uncertainty zone graphs do not constrain possible paths as much as physics and other data do. It seems fairly hard for the real line to be at the bottom of the zone at one point, leap to the top at the next, and then go back … unless someone can provide a real mechanism.

[Response: John and Ray: See my update above with link to Tamino’s post addressing some of these questions.–eric]

[Response: Tamino’s posts have a good description of the issues with the uptick, but as far as I can tell they don’t address the issue of whether a 50-year spike in temperature of similar magnitude to the past half century, followed by a recovery, would be picked up by the proxies. I agree with John that it’s an issue of what physics could cause such a thing, but the point here is whether one is invoking physics or reasoning from the proxy reconstruction. What I’m going after here is the issue of what the Holocene record can tell us about the maximum excursions that could be caused by things like the AMO. A warming like the present, which is caused by CO2, could not be followed by a recovery because of the long lifetime of CO2, but we already know that CO2 doesn’t vary enough in the pre-industrial Holocene to do very much. The issue is whether some other cause (notably ocean heat uptake and release) could cause a centennial-scale excursion of anything like the magnitude of the present CO2-induced warming. Constraining the magnitude of centennial natural variability over the Holocene would give us some further information about how much variation in the rate of warming we should expect on top of the upward CO2-induced trend. Unless I misunderstand the authors’ remark about time scale, the Marcott et al. paper doesn’t tell us much about centennial variability in the Holocene. It does put the CO2-induced warming of the present into a valuable perspective, though, because we know that the CO2-induced warming will last for millennia, and that the millennial warming we will get even if we hold the line at a trillion tons of carbon emissions is vast in comparison with the millennial temperature variations over the whole span of human civilization. That’s the real “big picture” here. –raypierre ]

Thanks for this.

Anyone so inclined may pile on over at DotEarth. I hope I have not inserted my foot too far into my mouth there; it’s usually at a level that does not exceed my abilities.

http://dotearth.blogs.nytimes.com/2013/03/31/fresh-thoughts-from-authors-of-a-paper-on-11300-years-of-global-temperature-changes/

(I’m “verified”, so don’t get upset if your comments are held forever; I don’t think Andy Revkin spends his weekends passing on comments.)

Step 9 in the stacking procedure describes the proxies being ‘mean-shifted’ to reference them to the 1961-1990 CE mean.

Is this saying the various proxies are providing relative temperature estimates, and those need to be tied into an external source (like an overlap with the instrument record at their ends) to make them absolute?

Or is this simply describing how they locate “0 C” on the y-axis in the figures?

[Response: I think this is just the location of the 0º C anomaly baseline. – gavin]

Andy Revkin has a comment on the FAQ, pointedly commenting:

there’s also room for more questions — one being how the authors square the caveats they express here with some of the more definitive statements they made about their findings in news accounts.

How about it?

[Response: Can you be specific? The press release they put out doesn’t have anything contradictory. – gavin]

The post which Eric links to is the final installment of a three-part series on the Marcott et al. reconstruction. It deals primarily with the regional differences revealed by the proxies.

The issue of the 20th-century uptick is the main topic of part 2.

What the “critics” are desperately trying to ignore is the real point of this work, the subject of part 1 of the series, namely the big picture of temperature change throughout the holocene.

The point of Marcott et al. is to study global temperature change in the past, not the present — specifically the last 11,300 years. In fact, for this reconstruction the number of proxies used dwindles as time goes forward, rather than dwindling as one goes back further in the past like in most other paleo reconstructions. One hardly needs Marcott et al. to tell us about recent global temperature changes; we already know what happened in the 20th century. For the given purpose, re-calibrating the proxy dates is absolutely the right thing to do.

What happened in the past is most definitely not like what happened in the 20th century. In spite of the low time resolution of the Marcott et al. reconstruction, the variations (even — perhaps especially — without the smoothing induced by the Monte Carlo procedure) are just not big enough to permit change like we’ve seen recently to be believable. In my opinion, the Marcott et al. reconstruction absolutely rules out any global temperature increase or decrease of similar magnitude with the rapidity we’ve witnessed over the last 100 years. And the fact is, we already know what happened in the 20th century.

Critics of the recent uptick, and of the re-dating procedure, either have utterly missed the point, or they staunchly refuse to see it and want everyone else not to see it either. Their foolishness is appalling.

[Response: Tamino, thanks for clarifying regarding your post, and weighing in. I think your answer addresses John Mashey’s question rather well too — the celebrated “uptick” that McIntyre is so obsessed with was never the point of the paper. It may have been an oversimplification to plot it this way — but to my reading nothing actually said in the paper is problematic. Your statement that you think that Marcott et al. “absolutely rules out …” is a very strong statement coming from you, and makes me want to read it again, and your posts again. Very interesting if you are right. –eric]

[Response: I edited the update to point to all three posts. – gavin]

Perhaps you didn’t read the PR. Start with the headline. It reads: “Earth Is Warmer Today Than During 70 to 80 Percent of the Past 11,300 Years” Fair enough but above we are now told…

“Thus, the 20th century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions.”

Without the uptick there is no basis for the headline.

[edit]

[Response: Sorry, but no. It is not Marcott et al that provide the estimates of the modern warming – they don’t have sufficient proxies in the last 50 to 100 years to robustly estimate the global mean anomaly. This was stated in the paper and above. Their conclusions, as discussed in the paper and above, come from comparisons of their Holocene reconstruction with CRU-EIV from Mann et al and the instrumental data themselves. The headline conclusion follows from that, not the ‘uptick’ in their last data point. – gavin]

Tamino, after all these years, surely you no longer expect honesty in either fact or tactics from the denial industry? The point is to find something to criticize and go to town on it. This one is easy for them, all they have to do is separate the parts of the record and go after the level of certainty of each part, then indicate that until they are all perfectly linked by methodology and accuracy, it’s all a tissue of loosely sewn lies. Many innocent worker bees will spread the “word”, not necessarily even knowing they are being used. Andy Revkin, IMO, values his reputation for fair mindedness and I’m guessing he also has difficulty swallowing the level of danger we face and grasps at straws. All those “honest brokers” – gag.

Speaking of lies, Steve, the one-man rapid-response squad, has a pack of them up as a comment on this piece. Sometimes I think he is being deliberately obtuse.

Re #11. Can you be specific? Steve’s post doesn’t have anything untruthful.

Here are a few unanswered questions posed by Steve McIntyre.

[Response: Some suggestions below, but hopefully one of the authors can chime in if I get something wrong. (some editing to your comment for clarity). – gavin]

They did not discuss or explain why they deleted modern values from the MD01-2421 splice at CA

[Response: I imagine it’s just a tidying up because the three most recent points are more recent than the last data point in the reconstruction (1940). As stated above, there are not enough cores to reconstruct the global mean robustly in the 20th C. This is obvious in the spread of figure S3. Since this core is quite well dated in the modern period, the impact of this on the uncertainty derived using the randomly perturbed age models will likely be negligible. – gavin]

Or the deletion of modern values from OCE326-GGC300.

[Response: Same as previous. – gavin]

Nor do they discuss the difference between the results that had (presumably) been in the submission to Nature (preserved as a chapter in Marcott’s thesis).

[Response: You are jumping to conclusions here. I have no idea what the initial submission to Nature looked like, and nor do you. From my experience working on Science/Nature submissions, there is an enormous amount of work done to take them from a first concept to the actual submission. In any case, with respect to the thesis work, the basic picture for the Holocene is the same. – gavin]

[Further Response: Turns out there wasn’t a Nature submission. The thesis chapter was eventually prepared for Science, and submitted (and accepted) there. – gavin]

Nor did they discuss the implausibility of their coretop redating of MD95-2043 and MD95-2011.

[Response: The whole point of the age model perturbation analysis (one of the important novelties in this reconstruction) is to assess the impact of age model uncertainties – it is not as if the coretop date is set in stone (or mud). For MD95-2011, I understand that Marcott et al were notified by Richard Telford that the coretop was redated since the original data were published and that the impact of this on the stack, and therefore the conclusions, is negligible. – gavin]
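For readers unfamiliar with age-model perturbation, here is a minimal Python sketch of the general idea. It is not the authors’ code: each dated control point in a core is jittered by an assumed Gaussian 1-sigma age error, the proxy samples are re-aged by linear interpolation in depth, and the record is re-gridded onto a common time axis. The helper name `perturbed_records`, the Gaussian error model, and all the numbers are hypothetical.

```python
import numpy as np

def perturbed_records(ctrl_depths, ctrl_ages, ctrl_sigma,
                      sample_depths, sample_values, grid,
                      n_members=500, seed=0):
    """Re-grid one proxy record under n_members randomly perturbed age models.

    Returns an array of shape (n_members, grid.size).
    """
    rng = np.random.default_rng(seed)
    members = np.empty((n_members, grid.size))
    for k in range(n_members):
        # Jitter every dated control point by its (assumed Gaussian) 1-sigma error,
        # then sort so that age still increases with depth.
        ages_k = np.sort(ctrl_ages + rng.normal(0.0, ctrl_sigma))
        # Age of each proxy sample under this realization (linear in depth).
        sample_ages = np.interp(sample_depths, ctrl_depths, ages_k)
        # Values on the common time axis (np.interp clamps outside the range).
        members[k] = np.interp(grid, sample_ages, sample_values)
    return members

# Toy core: depths in cm, ages in yr BP (made-up numbers).
ctrl_depths = np.array([0.0, 100.0, 200.0, 300.0])
ctrl_ages = np.array([0.0, 3000.0, 6000.0, 9000.0])
ctrl_sigma = np.array([200.0, 150.0, 150.0, 200.0])
sample_depths = np.linspace(0.0, 300.0, 61)
sample_values = 10.0 + np.sin(sample_depths / 40.0)   # toy proxy temperatures
grid = np.arange(500, 8501, 100)                     # common time axis, yr BP

members = perturbed_records(ctrl_depths, ctrl_ages, ctrl_sigma,
                            sample_depths, sample_values, grid)
central = members.mean(axis=0)   # central estimate
spread = members.std(axis=0)     # age-model contribution to the error bars
```

In this sketch the core top (depth 0) is simply one more jittered control point, which echoes the response’s point that a coretop date is not set in stone; the spread across members is what turns age uncertainty into error bars.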

Perhaps Marcott et al can provide the answers.

[Response: I will ask, and if there is anything else to add, I’ll include it as an update here. (Just as an aside, please note that I am more than capable of reading McIntyre’s blog, and so let’s not get involved in some back and forth by proxy. I am not interested in playing games.) – gavin]

Rattus, you too are underestimating the intent and intensity of the response. When the article first appeared in multiple locations (DE version below), the denialbots were caught off base, but they’ve been going to town ever since. I watched it flower and grow, which is why I was prepared for this round at DE. Curry has also been busy.

http://dotearth.blogs.nytimes.com/2013/03/07/scientists-find-an-abrupt-warm-jog-after-a-very-long-cooling/

Time for you guys to return to science, though, sorry for the interruption.

(It was tempting to repeat the captcha, which married erinyes with ninjas! The current one could be stretched to include cabal.)

Since this paper has been the subject of a lot of hostile attention as well as genuine interest, it is worth reminding people of the comment policy, and in particular the injunction against posting unfounded insinuations of misconduct. Being polite and respectful is much more likely to lead to useful interactions (such as the authors answering more questions) than jumping to conclusions or insulting people because you don’t like their conclusions. This goes for everyone. – gavin

re: 3 Raypierre and 5 Eric: thanks.

I’d seen tamino’s nice post, but I still haven’t seen a good analysis of possible forcing changes and causes to raise the temperature equivalent to the modern rise, and then nullify enough of it to escape notice by the higher-resolution proxies. I understand that spikes are not ruled out by the statistics of the proxy-resolution they used, but I’m trying to understand a combination of events that could produce such a spike.

The thought experiment would be: take the modern rise, place it anywhere on the Marcott et al. curve, starting at the lower uncertainty edge, and propose a model for what could have caused an upspike, and then enough of a downspike to get back to the line in a way that doesn’t contradict the ice-core records.

Offhand, I can only think of:

1) Major state-change gyrations like the Younger Dryas (before this period) or the 8.2 ky event … but they show strongly in ice-core CH4 2 records, and it seems they would be very noticeable in a set of marine records. And we certainly know that isn’t what’s happening now, i.e., the upswing at the end of the YD.

2) A strong volcanic period, followed by a period with none.

3) A BIG boost in solar insolation.

4) A big rise in CO2 … but the ice cores rule that out.

Anyway, the question remains: what combination of events could cause a current-equivalent spike and escape the higher-frequency proxies?

Re your point at #10. Marcott et al. “Mean-shifted the global temperature reconstructions to have the same average as the Mann et al. (2008) CRU-EIV temperature reconstruction over the interval 510-1450 years Before Present.”

How is this anything other than an arbitrary shift, and as such how can this be regarded as a robust conclusion, that would support the press release?

[Response: Perhaps you can explain why this is a problem. Isn’t this just calculating anomalies relative to the same baseline? –eric]

Re 17, Eric, This is a problem because by choosing different reconstructions and/or time intervals you can shift the baseline almost as you wish!

[Response: Not so. The baseline is set according to a reasonable overlap period for any specific reconstruction. There will be small differences for sure, but they aren’t going to be large compared to the 20th C increase and so won’t impact the conclusions. – gavin]

Gavin, saw you the other night with Stossel. You represented yourself well. I think you have the integrity to enable a true scientific discussion, reminiscent of the one a couple of years ago at ourchangingclimate.org with the statistician VS. Let McIntyre go mano a mano with Marcott. Science will be better for it.

[Response: I am neither of these people’s keeper and they can do what they like. I will point out that this desire for cage-matches focused on the minutia of specific studies has nothing to do with how big picture issues actually get resolved. Our understanding of Holocene climate trends will not be enhanced by insinuations of malfeasance and bad faith, but rather by constructively exploring the real uncertainties that exist. If McIntyre was interested in the latter I, for one, would be quite happy. But as I said, I speak for no-one but myself. – gavin]

Thanks Gavin/Eric. Look forward to Marcott’s expanded response.

Gavin, thanks for your reply. I realize you are not their keeper, but you do realize you occupy a pivotal role in the world debate. I would urge you, despite your protestations that this will turn into a cage match on the minutia of this particular study, to encourage the two to go at it. Trust me, it WILL be good for science.

[Response: This is a very naïve view of how science progresses. The “go at it” approach is at best appropriate for the courtroom, though even there some level of civility is usually enforced.–eric]

Hmm. Just a thought. From Marcott et al, published March 8th

From today’s Marcott FAQ

And McIntyre’s blog post today

It does seem to me that somebody’s reading comprehension may be a little off and that they may not have the first clue about what they are criticizing. I wonder if any press agents will demand a response from McI on that one.

As has been pointed out by any number of folks – while a 300 year spike/recovery in temperature would have been filtered out by the Marcott proxies and methods, those making strong assertions in that regard (including McIntyre) have missed several important points:

(1) Temperature changes such as postulated in a spike require some physics, not just arm-waving about “it might be possible”. Just because a method has a particular lower resolution limit does not make gremlins in the gaps a likely postulate.

(2) Finer resolution proxies such as ice cores should have picked up such temperature changes in isotope ratios or in CO2 concentrations driven by ocean temperatures.

(3) Most important of all – even if such a spike occurred (doubtful based on energy conservation), it would not be relevant to recent temperature changes – because although temperatures are rising at a geologically fantastic rate, it will take a minimum of thousands of years to return to previous temperatures due to the concentration lifespan of CO2. Meaning that an event like the current warming would inevitably have been seen in the Marcott data. So arguing about possible but unlikely spikes and returns during the Holocene is irrelevant to the situation we find ourselves in today.
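The filtering argument and point (3) can be made concrete with a toy calculation. Below, a centered 300-year moving average stands in for the reconstruction’s resolution; that is a crude assumption, since the reconstruction’s actual smoothing comes from its sampling and age-model perturbations rather than a boxcar. It is applied to an illustrative 0.9 °C excursion that lasts 100 years and then recovers, and to the same 0.9 °C rise when it persists. The function name and numbers are hypothetical.

```python
import numpy as np

def boxcar_smooth(x, width):
    """Centered moving average; near the edges each value is averaged over
    the part of the window that overlaps the series."""
    kernel = np.ones(width)
    total = np.convolve(x, kernel, mode="same")
    count = np.convolve(np.ones_like(x), kernel, mode="same")
    return total / count

years = np.arange(3000)                                       # annual steps
spike = np.where((years >= 1400) & (years < 1500), 0.9, 0.0)  # 100-yr excursion, then recovery
step = np.where(years >= 1400, 0.9, 0.0)                      # rise that persists

spike_peak = boxcar_smooth(spike, 300).max()  # ≈ 0.3
step_peak = boxcar_smooth(step, 300).max()    # ≈ 0.9
```

The transient excursion comes out at roughly a third of its true size, while the persistent rise survives intact, which is the asymmetry point (3) relies on.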

According to the supplementary information and data to your Nature Article:

Shakun, J. D., P. U. Clark, F. He, S. A. Marcott, A. C. Mix, Z. Y. Liu, B. Otto-Bliesner, A. Schmittner, and E. Bard (2012), Global warming preceded by increasing carbon dioxide concentrations during the last deglaciation, Nature, 484(7392), 49, doi:10.1038/nature10915.

you use many of the same cores and you also redate them using the same methods as Marcott et al. However, you handle the core tops differently. In Shakun (2012) you linearly extrapolated beyond the latest radiocarbon date to the top of the core using the mean sedimentation rate, but in Marcott 2013 you simply set it to 0 BP. Given that the change in methodology has resulted in some date changes of more than a thousand years, could you answer the following:

Why the change in methodology between the two papers?

Since the 2 papers are closely related do you not think it would have been a good idea to explain why the core top dating method was changed?

What impact would using the core top dating method used in Shakun et al (2012) have on Marcott et al (2013)?

What impact would using the core top dating method used in Marcott et al (2013) have on Shakun et al (2012)?

Since the Shakun et al (2012) and Marcott et al (2013) share the same analytical code (the Shakun et al SI states the analytical code will be published in Marcott et al) can you show the figures that you got when you used the Shakun et al (2012) core top dating method?

[Response: I don’t know for sure, but since the core-top dating only affects the very recent dating, it is going to be irrelevant for the radiocarbon-dated portion in the deglacial. Using the published dates as is you get the following (from Tamino):

– gavin]
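The two core-top conventions described in the question can be compared on a toy core. This Python sketch uses made-up depths and ages and a hypothetical helper name; it extrapolates upward from the shallowest dated level at the mean sedimentation rate of the dated interval, and contrasts that with simply assigning 0 BP to the core top. It is an illustration of the two conventions as the commenter describes them, not a reproduction of either paper’s code.

```python
def coretop_age_extrapolated(depths_cm, ages_bp):
    """Age at depth 0, extrapolated upward from the shallowest dated level
    using the mean sedimentation rate of the whole dated interval."""
    years_per_cm = (ages_bp[-1] - ages_bp[0]) / (depths_cm[-1] - depths_cm[0])
    return ages_bp[0] - depths_cm[0] * years_per_cm

CORETOP_AGE_FIXED = 0.0  # alternative convention: assign 0 BP to the core top

# Hypothetical radiocarbon-dated levels (depth in cm, calibrated age in yr BP).
depths = [50.0, 150.0, 250.0]
ages = [2500.0, 5500.0, 8500.0]

top = coretop_age_extrapolated(depths, ages)   # 1000.0 yr BP
shift = top - CORETOP_AGE_FIXED                # 1000.0 yr
```

With these made-up numbers the two conventions differ by 1000 years at the core top, and if ages are interpolated between the core top and the first date, the difference shrinks to zero at that first radiocarbon date. That is consistent with the response’s point that core-top handling matters for the youngest part of a record rather than the radiocarbon-dated deglacial portion.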

Notice the similar temperature profile from James Ross Island ice cap:

http://www.sciencepoles.org/news/news_detail/ice_core_provides_comprehensive_history_of_antarctic_peninsula_since_last_i/

No temperature spike lasting ca. 100 years is present in the far south.

I agree that science is ill suited to “cage matches”. I also apologize for beginning to go over the edge in characterization. I’ve been under more or less continuous attack for years because of my views that science as it is practiced is worthy of respect and should be taken at face value. I find these attacks disingenuous, and they are full of personal innuendo. However, it must be remembered that when, as in my particular case, we give up our self-respect and react, we demean the basic premise that truth is at the center of all scientific investigation.

I’ve been reminded several times recently that my voice has its greatest value when I stay away from the personal fringes and don’t react. It may be hard, but it is necessary.

Re 18:

How is this “not so” in the category of “being able to set the baseline to any reconstruction you wish”?

From what you’re saying, it seems instead that you can set it to others, but the paper isn’t supposed to speak to whether or not (for example) the MWP is warmer than present (a popular reference-point for any reconstruction). But instead, all Marcott et al needs to do is to show similar enough alignment of warming and cooling periods within the reconstruction dates (never mind the magnitude) in order to be deemed ‘consistent’.

Do I have this right? This means that Marcott et al can also be ‘consistent’ with any of the others, including something like Loehle et al, because all we need to see is the similar mimicking of ups and downs (more slopes than magnitudes) through AD 0-1850…and then what we already know of 1850-present becomes another implied conclusion of the paper (meaning the specific reconstruction doesn’t itself speak to it, but taking the known instrumental record along with it you have a dramatic shift upward in temperature).

If I have this right, this means that Marcott et al can still be correct and informative even if the MWP was warmer than present (which can be achieved by re-aligning the proxy mean to a different reconstruction, the defense of which then becomes a necessary component of the paper).

[Response: This is not the paper you should be interested in to discuss the details of medieval/modern differences. Given the resolution and smoothing implied by the age model uncertainties, you are only going to get an approximation. Note, though, that the Marcott reconstruction is not being scaled to anything – it was just that the baseline was adjusted to have the same anomaly as the more recent reconstructions (which are in turn calibrated to the instrumental period). Loehle’s reconstruction is not useful for many reasons, but if you wanted to baseline to Ljungqvist or Moberg you certainly could (being a little careful with hemispheric extent) and I don’t think it would affect anything much. – gavin]

@11

Susan Anderson says:

31 Mar 2013 at 2:47 PM

‘until they are all perfectly linked by methodology and accuracy’

Are you saying that published peer-reviewed science papers have levels of certainty that are imperfectly linked by methodology and accuracy?

As I understand it, without the core-top re-dating, the proxy reconstruction takes a rather steep dive in the 20th century.

[Response: Once age uncertainties and proxy dropout are taken into account, the error bars on the reconstruction are large – and so many of the Monte Carlo members in the stack go up, but many go down.

– gavin]

After re-dating there is a sharp rise, the opposite. If the whole reconstruction is done without the core-top re-dating it appears that the 20th century is “cooler” than a large part of the Holocene (looking at proxies only).

[Response: No. You’d still need to run this through the age model perturbations, and the differences are not enough to change the uncertainties on the most recent points. – gavin]

If there were issues with the most recent proxies, why weren’t they truncated instead of specifically re-dated [edit – no assumption of motive please]? Why were the original dates not acceptable?

[Response: Core top dates are uncertain for many reasons (core recovery, dating method uncertainty, reservoir age etc.). So you need to take that into account. – gavin]

Also, with original dating in the reconstruction there is a significant divergence with the 20th century instrumental record. I don’t understand how the reconstruction in total is “robust” with regards to the proxies ability to represent temperature to the resolution that makes any analysis of recent years possible.

[Response: The most recent points are affected strongly by proxy dropout and so their exact behaviour is not robust. Tamino’s post does a good job analysing that, and the overall reconstruction is robust. – gavin]

I see nothing in the FAQ that addresses the authors’ reasons for the re-dating of core-tops or how they can apply their reconstruction to any recent warming, a very small time frame.

[Response: They specifically state that this reconstruction is not going to be useful for the recent period – there are many more sources of data for that which are not used here – not least the instrumental record. – gavin]

How does this reconcile with the following excerpt from the abstract?

[Response: As they state above:

– gavin]

Now that DotEarth comments from the day are being rolled out it looks like a concerted negative campaign. As to “cage fighting” this would be a perfect example of why it wouldn’t work.

A plasterer: I’m saying that those working to discredit science are fond of claiming that nothing short of perfection is acceptable. The point is that we will never have consistent temperature records over centuries and millennia. This is a ready-made way to deceive the gullible.

29

Susan Anderson says:

31 Mar 2013 at 10:01 PM

‘claiming that nothing short of perfection is acceptable’

Sorry Susan, I don’t want to appear gullible, but I don’t understand you.

I thought for science to be ‘perfect’ it would have to be correct. Are you saying it is not correct? Whether or not it is ‘acceptable’ is beside the point; it is either correct or not correct, and if we will never have consistent temperature records over centuries and millennia, why are we trying to predict future temperatures from them?

[Response: Not quite sure what you are asking here, but no-one is predicting future temperatures based on reconstructions of the Holocene. Future predictions are made based on physics-based models of the climate under plausible scenarios for future changes in climate drivers. Reconstructions are of use in model evaluation in some circumstances, but similar predictions existed long before any reconstructions were published. – gavin]

I know they are uncertain; the question is why they re-dated previously published core tops. They only re-dated (not re-calibrated) several and have not addressed it in the FAQ. If they are uncertain etc., how are the authors’ dates more valid than the published dates? The effect of this is a later issue in the chain.

I understand instrumental records are more accurate than some sediment but how can you compare so vastly different resolutions with any certainty spanning the entire Holocene? Tenths of a degree decadal?

Thank you for the link to Tamino, I am going through the post now.

I know you can’t speak for the authors, I just don’t understand where the Qs in the FAQs came from.

I’m just a guy who has to pay for this stuff.

So when the NYT trumpets the following, asking the world to spend trillions more on “green” energy, sans nuclear, what am I supposed to think?

“Global temperatures are warmer than at any time in at least 4,000 years, scientists reported Thursday, and over the coming decades are likely to surpass levels not seen on the planet since before the last ice age. ”

http://www.nytimes.com/2013/03/08/science/earth/global-temperatures-highest-in-4000-years-study-says.html?_r=1&

For humanity’s sake I hope you guys have it wrong; for science’s sake I hope you have it right. I can’t imagine the backlash to all this expense if you are wrong.

[Response: Perhaps I’m being obtuse, but I don’t see how a better understanding of global temperature history forces either the NYT or you to spend any money on anything. Whether the energy mix of the future includes an expansion of nuclear or not, this has nothing to do with temperatures 5000 years ago. Nonetheless, the observations here, in other reconstructions, and in the instrumental record indicate that temperatures since the Early Holocene (particularly in the high Northern hemisphere) have been falling slightly (mostly in line with expectations from orbital forcing), and that over the last 100 years or so, something anomalous has happened – coincident with the dramatic uptick in greenhouse gases due to the industrial revolution. GHGs are continuing to rise (even accelerating) and basic physics, as well as more sophisticated models, indicate that future changes will be larger still. This implies a risk to society since we have an enormous investment in the climate status quo. However, how society deals with that risk is a decision for politicians and the public to make – it does not follow linearly from the science I just outlined. If you think the benefits of nuclear outweigh the potential costs (or vice versa), you should make your voice heard on that topic. Likewise if you think that energy efficiency, or wind or solar, or carbon capture or adaptation and mitigation are more sensible responses. Whatever you decide, it is not determined uniquely by the science in general, and certainly not by this single reconstruction. – gavin]

A Plasterer: What exactly do you mean by ‘correct’? In most data collected in science, there is some degree of uncertainty as regards how close it is to the ‘true’ data (chemical formulas are the only exception I can think of). Much of science consists of finding new ways to get closer to the ‘true’ values, while a large part of statistics is aimed at determining the probable lower and upper bounds within which the ‘true’ value lies. For most data, exactly correct values are not known and likely will never be known. The important thing is: ‘are the data good enough to be useful?’ For climate science, physical and historical data are known with much more precision than is needed to be able to predict rapidly increasing global temperatures.

“9. Mean-shifted the global temperature reconstructions to have the same average as the Mann et al. (2008) CRU-EIV temperature reconstruction over the interval 510-1450 years Before Present.”

How much was it shifted?

[edit]

[Response: This is just a setting of the zero anomaly line. Something needed to be used as the baseline, and this is as good as anything. Differences in using Ljungqvist or Moberg et al would be around 0.1 or 0.2ºC – not large enough to affect the conclusions related to the 20th C rise. – gavin]
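Step 9’s mean shift amounts to adding a single constant to the whole reconstruction. The Python sketch below uses toy series (not the Marcott or Mann et al. data) and a helper name chosen for illustration: it computes the offset that equalizes the two means over 510-1450 yr BP and then checks that the difference between any two times in the reconstruction is unchanged.

```python
import numpy as np

def mean_shift(recon_age, recon_temp, ref_age, ref_temp, window=(510, 1450)):
    """Add one constant to recon_temp so that its mean over `window` (yr BP)
    equals the reference's mean over the same window."""
    lo, hi = window
    in_recon = (recon_age >= lo) & (recon_age <= hi)
    in_ref = (ref_age >= lo) & (ref_age <= hi)
    offset = ref_temp[in_ref].mean() - recon_temp[in_recon].mean()
    return recon_temp + offset, offset

# Toy series standing in for a Holocene stack and a shorter reference (made-up values).
recon_age = np.arange(0, 11301, 20)                # yr BP
recon_temp = 0.3 * np.cos(recon_age / 2000.0)
ref_age = np.arange(0, 2001, 10)                   # yr BP
ref_temp = -0.2 + 0.1 * np.sin(ref_age / 300.0)

shifted, offset = mean_shift(recon_age, recon_temp, ref_age, ref_temp)
```

Because only the constant changes, choosing a different reference reconstruction moves the zero line up or down but leaves the shape of the Holocene curve, and the size of changes within it, untouched.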

@ 23 Thank you very much. Ditto, everyone at every level of the discussion and in every capacity. There’s a new standard being set here–and taken higher. Thank you all for your contributions.

@gavin “Perhaps I’m being obtuse, but I don’t see how a better understanding of global temperature history forces either the NYT or you to spend any money on anything.”

I think he means you are paid by the taxpayer.

The Marcott et al reconstruction has been widely reported as confirming other “hockey stick” reconstructions such as Mann 2008.

It is now reported that the 20th century portion of the reconstruction is “Not robust”

Would it not be more accurate to say the study supports other hockey stick handles but is silent on the blade?

Given that the authors state that “20th century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions”

Why was this portion included initially and why did the paper’s authors draw attention to it in the NSF press release and in many interviews?

[Response: The uncertainties increase towards the recent end because of proxy dropout, and that is reflected in the error bars and in the sensitivity to method. The authors had to make a decision where to end it, and they chose 1940, which is a balance between inclusion and uncertainty. In interviews they discussed the Holocene trends with respect to the 20th Century warming, for which there is abundant additional evidence. Given that people are, understandably, very focused on the relationship between long-term trends and the 20th C, not discussing this would have been untenable. – gavin]
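Why dropout widens the error bars can be seen from the textbook standard error of a mean. This Python sketch assumes each record carries an independent error of the same size, which real proxies (unevenly spread and partly correlated) do not satisfy, so treat it only as an illustration of the direction of the effect; the record counts and the function name are hypothetical.

```python
import math

def stack_standard_error(sigma_single, n_records):
    """Standard error of the average of n records, assuming independent,
    equal-sized errors (a strong simplification for real proxies)."""
    return sigma_single / math.sqrt(n_records)

# Hypothetical record counts as records drop out toward the present.
for n in (64, 16, 4):
    print(n, stack_standard_error(1.0, n))
# 64 0.125
# 16 0.25
# 4 0.5
```

Each fourfold loss of records doubles the standard error in this idealized case.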

Links to such interviews upon Pielke Jnr’s blog http://rogerpielkejr.blogspot.co.uk/2013/03/fixing-marcott-mess-in-climate-science.html

[Response: I find it amusing that Roger thinks that NSF should withdraw a claim that 20th Century temperatures have risen, and that figures should be censored to ‘hide the incline’. – gavin]

Would the authors care to comment upon why this non-significant uptick was absent from Marcott’s thesis that this paper was based upon?

[Response: The thesis was finished 2 years ago, and there has clearly been further work done on the reconstruction since then. Some differences are in the amount of ‘jitter’ introduced into the Monte Carlo age model variations and the smoothing (100 years vs. 50), plus much of the exploration of spatial and variance-related sensitivities. The treatment of the core-top dates may have also changed – but I will ask the authors to comment further. – gavin]

There does seem to be something a bit odd going on since yesterday’s FAQ. Roger Pielke Jr. also suffers from the same reading comprehension malady as McIntyre. Both seem to have missed that Marcott et al already discussed the 20th century ‘tick’ as not robust. Now Roger, with an assist from Andy Revkin, seems to be embarking on something a bit more troubling: the belief that a paper/scientist that does not deal with the 20th century temperature rise cannot comment on the differences between their own findings and the known temperature changes. He is coming amazingly close to asking scientists to censor themselves from making logical extrapolations from their own work. This is worth noting.

Thanks for this post. A nice summary indeed.

Some of the comments above are (sadly) quite telling–their obsession with the ‘uptick’ and the headlines despite the clear statements in the original abstract (and again in the present piece) that the uptick is probably not robust show clearly that for some, it’s never really about the science. For them, it’s ‘politics all the way down.’ Hence, the whole point of the paper ‘must be’ that current warming is unprecedented (even though it actually says otherwise!)

However, Marcott et al. (like many other papers, including MBH ’98, back in the day) presents clear internal evidence (in the form of inordinate amounts of time and effort expended) that yes, some folks really do care what the temperatures in the early Holocene (or the early modern era) were, regardless of what that may or may not say about our present predicament.

My reading of the reaction from the ‘usual crowd’ (ie Pielke Jrs, auditors, WUWT rabble etc) is that:

a) they haven’t read the paper or the supplement (eg “finally concedes” nonsense);

b) they have not the wit or maybe not the will to understand or appreciate science;

c) they believe we are still stuck in the Little Ice Age (eg Pielke diatribe).

I marvel at all the achievements that allowed this research and other work like it to come to fruition. The effort that must have gone into collecting proxy samples by so many people over so many years (in some cases the work quite probably entailed personal danger). The painstaking measurements and analysis. Not to mention all the previous research that identified what proxies to collect in the first place and how they can be used to indicate past temperatures and other things, development of tests etc. Then came the idea for this work and the further analysis by Shaun Marcott, Jeremy Shakun et al.

Clever and dedicated people have been quietly working to build up a vast amounts of knowledge in only a few decades. They deserve congratulations and recognition.

It’s only the illiterati who scoff at and belittle such achievements instead of making the effort to understand and learn from them. This small but loud group are not deserving of any respect at all IMO.

During the past three weeks, I have watched for complaints by the original authors about the redating of the published cores. Since no one has spoken up, I have concluded that the original researchers have no problem with this procedure.

Now we see that Richard Telford notified Marcott et al about a redating made after the original publication. In this particular case, Marcott et al did not redate a core: they simply used the best information available.

In honest discussion, the redating complaints should end.

I still don’t understand why the uptick is included if it is “not robust”. Surely the authors, editors, and reviewers are aware of the publicity and scrutiny that studies on this topic receive. News articles and bloggers aren’t all going to care about the qualifying discussions in the paper. Nor will they ensure that the conclusions they draw are consistent with the authors’. I understand that the authors had to draw the line somewhere. But to include the uptick without questioning it, and then later wonder why it is being picked on, seems disingenuous (or obtuse ;)).

[Response: The main point of the paper is the Holocene reconstruction. As you get to the end, there is data dropout, which increases uncertainty. Deciding where to end it is a judgement call, and as long as the increase in uncertainty is made clear (and it was), I don’t see that the authors have done anything untoward. People are always free to draw their own conclusions from papers. It was indeed predictable that this paper would be attacked regardless of where they ended the reconstruction. It does make the 20th C rise stand out in a longer context than previously, and for some people that is profoundly disquieting. Thus if it wasn’t this issue that got the attention, something else would have. See for instance the 17(!) posts attacking the paper on WUWT as they try to find some mud that will stick. – gavin]

I think RC would come off as a bit less partisan if they would honestly note that, in Revkin’s dialogue with Shakun, Shakun was positively euphoric about the uptick. To pretend that the uptick was never of any consequence is to dissemble.

[Response: Euphoric? Hardly. But he did strongly note the contrast between the long term cooling trends since the Holocene and the recent rise in temperatures. This ‘new rule’ that people aren’t allowed to talk about conclusions drawn from other work when speaking to journalists is an odd one, and indeed one I haven’t ever seen applied to anyone else. I wonder why that may be? – gavin]

If not “euphoric,” Shakun was quite pleased about the uptick. Far from dismissing its importance as so many here now do, he made it a highlight of his 30-second “elevator speech.”

[Response: No – his elevator pitch was talking about actual temperatures and the contrast between the 10,000 year trends, the recent changes and where we are headed (‘outside the elevator – boom!’). Obviously you aren’t claiming that he can’t talk about model projections because none of his proxies go out to 2100, so why you feel he can’t talk about the 20th Century rise in temperature is strange. – gavin]

Gavin – “This ‘new rule’ that people aren’t allowed to talk about conclusions drawn from other work when speaking to journalists is an odd one”

If the authors were clear that they were comparing the recent measured temperature record against their proxies, then your statement would be valid. Is this what you are asserting?

It is certainly a valid interpretation that the authors were comparing *their own* last-century proxy results against the past millennium, which they now declare not robust.

Inquiring why the authors made these statements, without qualification, is a legitimate question.

[Response: No it’s not. It’s just another red herring ‘question’ that always comes up when people don’t like the results. It is just so much easier to try to shoot the messenger, and to convince yourself that there is nothing here to be understood, that these things don’t surprise me in the least. But I’m not going to convince you of anything, so I’ll pass on further micro-parsing of their statements. The fact of the matter is that the 20th C rise is real, anomalous, and pretty well understood. Because of where we are economically/societally/technologically, it presages what we can expect in the future. People harassing newly minted postdocs doesn’t change any of that. – gavin]

Since Marcott et al ends in 1950, and the IPCC has concluded that the temperature increases before 1950 are due to natural causes, Marcott cannot be showing us anything about the human influences on temperature.

[Response: Marcott ends in 1940. The IPCC concluded no such thing, and Marcott’s work does place the 20th Century rise in the context of the long term natural trends. – gavin]

Rather, it must be concluded that what Marcott is showing is only the natural variability in temperature and if any weight is to be given to the uptick, it shows that at higher resolutions there may be significant temperature spikes due to natural causes.

[Response: Of the size and magnitude of the 20th Century rise? Unlikely. Even the 8.2kyr event, which is the biggest thing in the Holocene records in the North Atlantic, is comparatively small. It would definitely be good to get more high-resolution, well-dated data included though, and Marcott’s work is a good basis for that to be built from. – gavin]

Response: 20th Century rise in the context of the long term natural trends… Even the 8.2kyr event which is the biggest thing in the Holocene records

==========

NOAA shows the 8.2kyr event as greater than 3.0°C, while GISS shows the 20th Century rise as less than 0.7°C.

http://www.ncdc.noaa.gov/paleo/pubs/alley2000/alley2000.html

http://en.wikipedia.org/wiki/File:Greenland_Gisp2_Temperature.svg

http://www.woodfortrees.org/plot/gistemp/from:1900/to:2000/trend

[Response: Those are local signals around the high North Atlantic; averaged over the planet they are much smaller. – gavin]

When one looks at long-duration events, we have the Younger Dryas event, with a temperature change of approximately 15°C, compared to 20th century warming of less than 0.7°C.

“Near-simultaneous changes in ice-core paleoclimatic indicators of local, regional, and more-widespread climate conditions demonstrate that much of the Earth experienced abrupt climate changes synchronous with Greenland within thirty years or less. Post-Younger Dryas changes have not duplicated the size, extent and rapidity of these paleoclimatic changes”.

Again from NOAA:

http://www.ncdc.noaa.gov/paleo/pubs/alley2000/alley2000.html

[Response: This is becoming a habit: the YD change of ‘15°C’ is a Greenland signal. It is not in phase with the (much smaller) changes in the high Southern Latitudes. In ocean cores, even in the N. Atlantic, it is much smaller. An estimate of the impact of the YD on global mean temperatures is found in the Shakun et al (2012) paper, and is likely less than a degree. Note that the whole glacial-to-interglacial change is only about 5°C in total! – gavin]

I went back to the Skype call. Shakun was engaged and animated; I couldn’t see any euphoria. I did, however, think he would be a good guy to drink beer with.

Revkin wanted to talk about the 20th century, so Shakun did. He was, however, always careful to make the distinction between the paper and the instrumental record. In this, he was consistent with the paper and the FAQ.

That some want to hold Marcott et al responsible for what others write about the paper, or for the headlines that others compose, does seem a bit silly.