Time for the 2012 updates!

As has become a habit (2009, 2010, 2011), here is a brief overview and update of some of the most discussed model/observation comparisons, updated to include 2012. I include comparisons of surface temperatures, sea ice and ocean heat content to the CMIP3 and Hansen et al (1988) simulations.

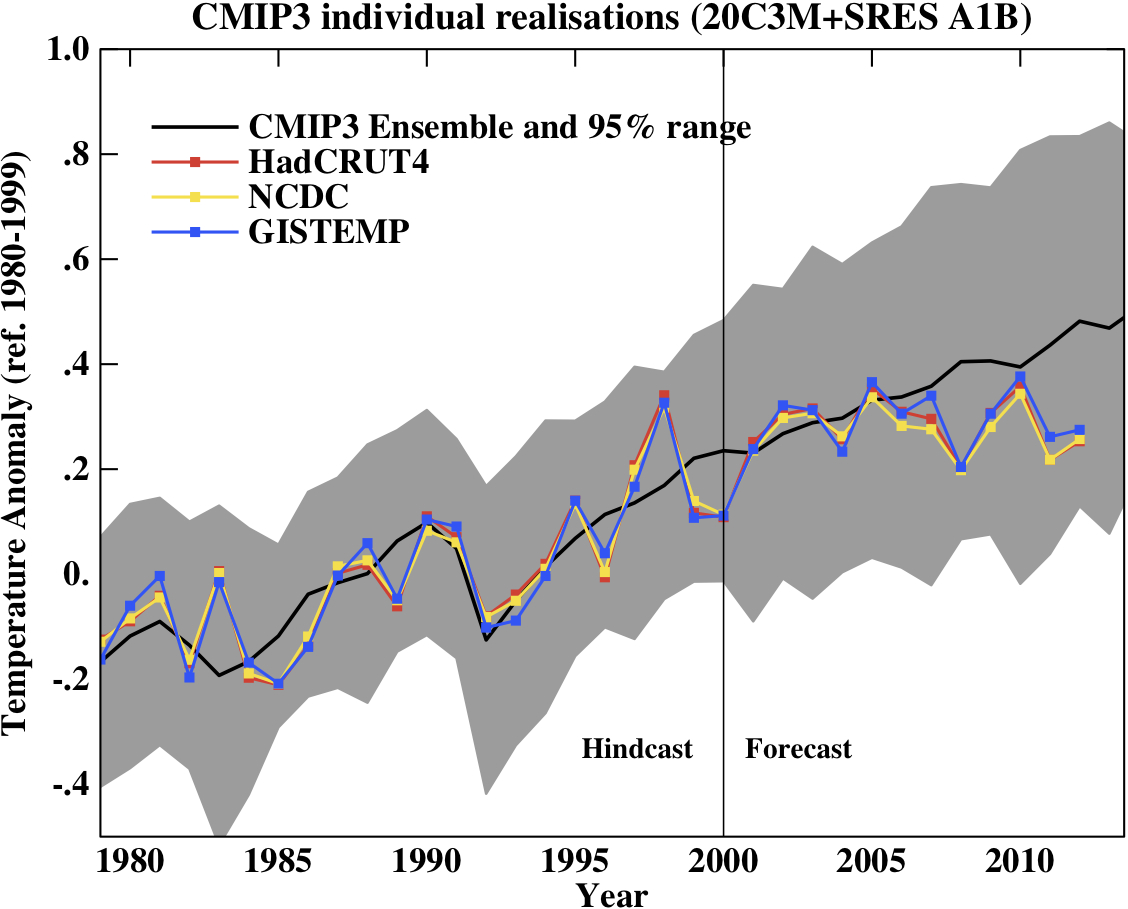

First, a graph showing the annual mean anomalies from the CMIP3 models plotted against the surface temperature records from the HadCRUT4, NCDC and GISTEMP products (it really doesn’t matter which). Everything has been baselined to 1980-1999 (as in the 2007 IPCC report) and the envelope in grey encloses 95% of the model runs.
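For readers who want to reproduce this kind of plot, here is a minimal sketch of the two steps just described: re-baselining each series to 1980-1999 and finding the band that encloses 95% of the runs. The ensemble below is synthetic, the function names are made up for illustration, and using the 2.5th and 97.5th percentiles for the envelope is an assumption on my part rather than a statement of how the figure was drawn.

```python
import numpy as np

def rebaseline(years, values, start=1980, end=1999):
    """Return anomalies relative to the mean over [start, end] inclusive.

    `values` may be a single series or an array of runs with time on the last axis.
    """
    years = np.asarray(years)
    values = np.asarray(values, dtype=float)
    mask = (years >= start) & (years <= end)
    # Subtract each series' own reference-period mean.
    return values - values[..., mask].mean(axis=-1, keepdims=True)

def envelope(runs, coverage=95.0):
    """Lower and upper percentile bounds across runs (axis 0) for each year."""
    tail = (100.0 - coverage) / 2.0
    lower, upper = np.percentile(runs, [tail, 100.0 - tail], axis=0)
    return lower, upper

# Synthetic stand-in for an ensemble: 50 hypothetical runs, 1950-2012.
rng = np.random.default_rng(0)
years = np.arange(1950, 2013)
runs = 0.015 * (years - 1950) + rng.normal(0.0, 0.1, size=(50, years.size))

anoms = rebaseline(years, runs)
lower, upper = envelope(anoms)
```

The observational series would be passed through the same `rebaseline` call so that models and data share a common zero.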

Correction (02/11/12): Graph updated using calendar year mean HadCRUT4 data instead of meteorological year mean.

The La Niña event that persisted into 2012 (as with 2011) produced a cooler year in a global sense than 2010, although there were extensive regional warm extremes (particularly in the US). Differences between the observational records are less than they have been in previous years mainly because of the upgrade from HadCRUT3 to HadCRUT4 which has more high latitude coverage. The differences that remain are mostly related to interpolations in the Arctic. Checking up on the predictions from last year, I forecast that 2012 would be warmer than 2011 and so a top ten year, but still cooler than 2010 (because of the remnant La Niña). This was true looking at all indices (GISTEMP has 2012 at #9, HadCRUT4, #10, and NCDC, #10).

This was the 2nd warmest year that started off (DJF) with a La Niña (previous La Niña years by this index were 2008, 2006, 2001, 2000 and 1999 using a 5 month minimum for a specific event) in all three indices (after 2006). Note that 2006 has recently been reclassified as a La Niña in the latest version of this index (it wasn’t one last year!); under the previous version, 2012 would have been the warmest La Niña year.

Given current near ENSO-neutral conditions, 2013 will almost certainly be a warmer year than 2012, so again another top 10 year. It is conceivable that it could be a record breaker (the Met Office has forecast that this is likely, as has John Nielsen-Gammon), but I am more wary, and predict that it is only likely to be a top 5 year (i.e. > 50% probability). I think a new record will have to wait for a true El Niño year – but note this is forecasting by eye, rather than statistics.

People sometimes claim that “no models” can match the short term trends seen in the data. This is still not true. For instance, the range of trends in the models for the cherry-picked period of 1998-2012 goes from -0.09 to 0.46ºC/dec, with MRI-CGCM (run3 and run5) the laggards in the pack, running colder than the observations (0.04–0.07 ± 0.1ºC/dec) – but as discussed before, this has very little to do with anything.

In interpreting this information, please note the following (mostly repeated from previous years):

- Short term (15 years or less) trends in global temperature are not usefully predictable as a function of current forcings. This means you can’t use such short periods to ‘prove’ that global warming has or hasn’t stopped, or that we are really cooling despite this being the warmest decade in centuries. We discussed this more extensively here.

- The CMIP3 model simulations were an ‘ensemble of opportunity’ and vary substantially among themselves with the forcings imposed, the magnitude of the internal variability and of course, the sensitivity. Thus while they do span a large range of possible situations, the average of these simulations is not ‘truth’.

- The model simulations use observed forcings up until 2000 (or 2003 in a couple of cases) and use a business-as-usual scenario subsequently (A1B). The models are not tuned to temperature trends pre-2000.

- Differences between the temperature anomaly products are related to: different selections of input data, different methods for assessing urban heating effects, and (most important) different methodologies for estimating temperatures in data-poor regions like the Arctic. GISTEMP assumes that the Arctic is warming as fast as the stations around the Arctic, while HadCRUT4 and NCDC assume the Arctic is warming as fast as the global mean. The former assumption is more in line with the sea ice results and independent measures from buoys and the reanalysis products.

- Model-data comparisons are best when the metric being compared is calculated the same way in both the models and data. In the comparisons here, that isn’t quite true (mainly related to spatial coverage), and so this adds a little extra structural uncertainty to any conclusions one might draw.

Given the importance of ENSO to the year to year variability, removing this effect can help reveal the underlying trends. The update to the Foster and Rahmstorf (2011) study using the latest data (courtesy of Tamino) (and a couple of minor changes to procedure) shows the same continuing trend:

Similarly, Rahmstorf et al. (2012) showed that these adjusted data agree well with the projections of the IPCC 3rd (2001) and 4th (2007) assessment reports.
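The idea behind this kind of adjustment can be sketched as a multiple regression: fit temperature against a linear trend plus the known short-term factors, then subtract only the fitted factor contributions. What follows is a simplified illustration on synthetic data, not the published procedure (which, among other things, also fits a lag for each factor). The function name and the fake ENSO index are assumptions made for the example.

```python
import numpy as np

def remove_factors(temp, factors):
    """Regress temp on intercept + linear time trend + factors,
    then subtract the fitted factor contributions only."""
    temp = np.asarray(temp, dtype=float)
    factors = np.asarray(factors, dtype=float)  # shape (n_times, n_factors)
    t = np.arange(temp.size, dtype=float)
    # Centre the factors so removing them does not shift the mean level.
    centred = factors - factors.mean(axis=0)
    X = np.column_stack([np.ones_like(t), t, centred])
    coef, *_ = np.linalg.lstsq(X, temp, rcond=None)
    adjusted = temp - centred @ coef[2:]
    return adjusted, coef

# Synthetic monthly series, 1979-2012: trend + ENSO-like signal + noise.
rng = np.random.default_rng(1)
n = 34 * 12
t = np.arange(n)
enso = rng.normal(size=n)       # hypothetical ENSO index
true_trend = 0.0014             # deg C per month (about 0.17 deg C/dec)
temp = true_trend * t + 0.1 * enso + rng.normal(0.0, 0.02, size=n)

adjusted, coef = remove_factors(temp, enso[:, None])
```

Here `coef[1]` is the fitted trend per time step and `coef[2:]` are the factor sensitivities. Solar and volcanic indices would simply be extra columns in `factors`.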

Ocean Heat Content

Figure 3 compares the upper level (top 700m) ocean heat content (OHC) changes in the models with the latest data from NODC and PMEL (Lyman et al. (2010), doi). I only plot the models up to 2003 (since I don’t have the later output). All curves are baselined to the period 1975-1989.

This comparison is less than ideal for a number of reasons. It doesn’t show the structural uncertainty in the models (different models have different changes, and the other GISS model from CMIP3 (GISS-EH) had slightly less heat uptake than the model shown here). Neither can we assess the importance of the apparent reduction in trend in top 700m OHC growth in the 2000s (since we don’t have a long time series of the deeper OHC numbers). If the models were to be simply extrapolated, they would lie above the observations, but given the slight reduction in solar, uncertain changes in aerosols or deeper OHC over this period, I am no longer comfortable with such a simple extrapolation. Analysis of the CMIP5 models (which will come at some point!) will be a better apples-to-apples comparison since they go up to 2012 with ‘observed’ forcings. Nonetheless, the long term trends in the models match those in the data, but the short-term fluctuations are both noisy and imprecise.

Summer sea ice changes

Sea ice changes this year were again very dramatic, with the Arctic September minimum destroying the previous records in all the data products. Updating the Stroeve et al. (2007)(pdf) analysis (courtesy of Marika Holland) using the NSIDC data we can see that the Arctic continues to melt faster than any of the AR4/CMIP3 models predicted. This is no longer so true for the CMIP5 models, but those comparisons will need to wait for another day (Stroeve et al, 2012).

Hansen et al, 1988

Finally, we update the Hansen et al (1988) (doi) comparisons. Note that the old GISS model had a climate sensitivity that was a little higher (4.2ºC for a doubling of CO2) than the best estimate (~3ºC) and, as stated in previous years, the actual forcings that occurred are not the same as those used in the different scenarios. We noted in 2007 that Scenario B was running a little high compared with the forcings growth (by about 10%) using estimated forcings up to 2003 (Scenario A was significantly higher, and Scenario C was lower), and we see no need to amend that conclusion now.

Correction (02/11/12): Graph updated using calendar year mean HadCRUT4 data instead of meteorological year mean.

For the period 1984 to 2012 (the 1984 date chosen because that is when these projections started), scenario B has a trend of 0.29+/-0.04ºC/dec (95% uncertainties, no correction for auto-correlation). For GISTEMP and HadCRUT4, the trends are 0.18 and 0.17+/-0.04ºC/dec respectively. For reference, the trends in the CMIP3 models for the same period have a range of 0.21+/-0.16ºC/dec (95%).
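For anyone wanting to check numbers like these, a trend with "95% uncertainties, no correction for auto-correlation" can be computed as an ordinary least-squares slope with a half-width of about two standard errors. This is a rough sketch under stated assumptions: the series is synthetic, the function name is made up, and the 1.96 multiplier is the normal approximation (a t-distribution with 27 degrees of freedom would give a slightly wider interval).

```python
import numpy as np

def linear_trend(years, values):
    """OLS trend in units per decade with an approximate 95% half-width,
    assuming the residuals are uncorrelated."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(values, dtype=float)
    xc = x - x.mean()
    slope = (xc @ (y - y.mean())) / (xc @ xc)
    resid = y - y.mean() - slope * xc
    # Residual variance with n - 2 degrees of freedom.
    s2 = (resid @ resid) / (x.size - 2)
    se = np.sqrt(s2 / (xc @ xc))
    return 10.0 * slope, 10.0 * 1.96 * se

# Synthetic annual anomalies for 1984-2012: 0.18 deg C/dec plus noise.
years = np.arange(1984, 2013)
rng = np.random.default_rng(42)
series = 0.018 * (years - 1984) + rng.normal(0.0, 0.1, size=years.size)

trend, half_width = linear_trend(years, series)
print(f"{trend:.2f} +/- {half_width:.2f} degC/dec")
```

Because real annual temperatures are autocorrelated, an interval computed this way is somewhat too narrow, which is why the caveat is stated alongside the numbers.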

As discussed in Hargreaves (2010), while this simulation was not perfect, it has shown skill in that it has out-performed any reasonable naive hypothesis that people put forward in 1988 (the most obvious being a forecast of no-change). However, concluding much more than this requires an assessment of how far off the forcings were in scenario B. That needs a good estimate of the aerosol trends, and these remain uncertain. This should be explored more thoroughly, and I will try and get to that at some point.

Summary

The conclusion is the same as in each of the past few years; the models are on the low side of some changes, and on the high side of others, but despite short-term ups and downs, global warming continues much as predicted.

References

- G. Foster, and S. Rahmstorf, "Global temperature evolution 1979–2010", Environmental Research Letters, vol. 6, pp. 044022, 2011. http://dx.doi.org/10.1088/1748-9326/6/4/044022

- S. Rahmstorf, G. Foster, and A. Cazenave, "Comparing climate projections to observations up to 2011", Environmental Research Letters, vol. 7, pp. 044035, 2012. http://dx.doi.org/10.1088/1748-9326/7/4/044035

- J.M. Lyman, S.A. Good, V.V. Gouretski, M. Ishii, G.C. Johnson, M.D. Palmer, D.M. Smith, and J.K. Willis, "Robust warming of the global upper ocean", Nature, vol. 465, pp. 334-337, 2010. http://dx.doi.org/10.1038/nature09043

- J. Stroeve, M.M. Holland, W. Meier, T. Scambos, and M. Serreze, "Arctic sea ice decline: Faster than forecast", Geophysical Research Letters, vol. 34, 2007. http://dx.doi.org/10.1029/2007GL029703

- J.C. Stroeve, V. Kattsov, A. Barrett, M. Serreze, T. Pavlova, M. Holland, and W.N. Meier, "Trends in Arctic sea ice extent from CMIP5, CMIP3 and observations", Geophysical Research Letters, vol. 39, 2012. http://dx.doi.org/10.1029/2012GL052676

- J. Hansen, I. Fung, A. Lacis, D. Rind, S. Lebedeff, R. Ruedy, G. Russell, and P. Stone, "Global climate changes as forecast by Goddard Institute for Space Studies three‐dimensional model", Journal of Geophysical Research: Atmospheres, vol. 93, pp. 9341-9364, 1988. http://dx.doi.org/10.1029/JD093iD08p09341

- J.C. Hargreaves, "Skill and uncertainty in climate models", WIREs Climate Change, vol. 1, pp. 556-564, 2010. http://dx.doi.org/10.1002/wcc.58

With respect to the Hansen 1988 model comparison, why can’t someone just re-run the model from 1988 to present using the actual observed forcings from 1988 to 2012? Wouldn’t that be the best way to test the model in a validation run?

The biggest deviation from models I can see on a quick glance is Arctic summer sea ice, and that is dramatically worse than predicted. So much for “alarmism”.

I wonder if you took predictions from Lindzen or anyone else trying to claim climate sensitivity is much lower than 3°C per doubling and compared them with reality how they would look today. Skeptical Science did this a couple of years back with Lindzen – I would guess not much has changed. And here’s another.

Could be interesting to put these all in one graph, considering one of the contrarian memes is that our Pollyannaism is better than your alarmism.

I second that.

Doesn’t CPC use the ENSO numbers from just the first 3 months to establish El Nino/La Nina “event” conditions, and not 5 months..?

“I only plot the models up to 2003 (since I don’t have the later output).”

It’s very boring because the situation of ocean heat is interesting after 2003.

[edit]

And as I told you some weeks ago, the aerosol forcing and the observations are not compatible with a climate sensitivity of 3.0°C but maybe with 1.8°C, at best 2.0°C.

There may be a slight misalignment of tick marks with data in figs. 2 and 3. There are missing tick marks in fig. 3 on the lower axis. Levitus et al. is referenced in fig. 3 but does not appear in the reference list.

Nice work.

I’ve been running a similar model to Tamino for a few years now, and have been showing much the same results. (I was looking for Tamino’s 2012 update yesterday, so thanks for posting it here!). Like Tamino, 2012 actuals came in about 0.1C lower than my model. I checked for autocorrelation of the model error from year to year, and it fitted to about 2%, which is practically nil (in other words, the fact that 2012 was below model estimate is not a predictor of whether 2013 will be above or below estimate). Net CO2 forcing is about 0.02C/year (0.06C total), and solar activity has climbed somewhat (worth about 0.02C). All else being equal, 2013 can break the 2010 record with a much weaker ENSO reading. I think my model estimates around 0.72C anomaly (GISS) for 2013 with ENSO conditions projected to be virtually identical to the 1951-1980 base period average. Margin of error is approximately +/-0.10C (given the correct ENSO values), so I don’t think one can confidently predict 0.66C will be broken, but Nielsen-Gammon’s estimate of 2/3 chance seems about right to me. There’s also a chance the record could be broken by a significant margin (have to consider the margin of error in either direction). But I understand the need to be conservative with published estimates. Top 5 seems >85% likely to me.
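A year-to-year check of this kind is usually done with the lag-1 autocorrelation of the residuals (actual minus model). The snippet below is one common way to compute it, offered as an illustration rather than a description of the exact calculation behind the 2% figure. The function name is hypothetical.

```python
import numpy as np

def lag1_autocorr(residuals):
    """Lag-1 autocorrelation: how well one year's residual predicts the next."""
    r = np.asarray(residuals, dtype=float)
    r = r - r.mean()
    return (r[:-1] @ r[1:]) / (r @ r)

# A value near zero means a low year says little about the following year;
# a perfectly alternating series gives a strongly negative value.
alternating = lag1_autocorr([1.0, -1.0] * 5)
```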

The residual oscillation on the adjusted number is puzzling.

My guess is this might be related to a multi-year cycle not related to ENSO. I have asked a former student to project this residual on a temperature map. However, not being a climatologist, I don’t know how to interpret the result.

If there is a climatologist volunteer to write a paper let me know ;)

For upper-ocean heat content, you can download the values for our 17-member ECHAM5 ensemble (same as in CMIP3) at http://climexp.knmi.nl/getindices.cgi?WMO=ESSENCE/heat700_essence_%%&STATION=Essence_heat700&TYPE=i&id=someone@somewhere&NPERYEAR=1 . That should give you an estimate of the natural variability, and the difference from your GISS model should give a rough idea of model spread. (See Katsman & van Oldenborgh GRL 2011 for our analysis).

[Response: I’ll make a comparison figure, but are there no volcanoes in the ESSENCE runs? That has a big impact on OHC anomalies… – gavin]

Ernst,

I believe Hansen only used greenhouse gases for his forcings, as he felt all other forcings were insignificant. Considering that temperatures are following scenario C, whereas greenhouse gases are following scenario A, I think his original model could use a little updating.

[Response: you believe wrong. The forcings were discussed in the linked post and scenario B is v. likely an overestimate of the forcings – but this is dependent on what aerosols actually did. – gavin]

Maybe it’s there and I missed it, but what are the main lessons to be learned, if any, from these comparisons between the modeled trend and the actual data?

Are the periods too short to draw conclusions or apply lessons learned to improve models?

Particularly, what are the best guesses/judgments at this point about why the Arctic sea ice extent numbers are so far below all predictions?

And excuse the possibly bone-headed question, but could the rapid melt of Arctic sea ice (and now land ice), whatever the cause of that fast rate, be part of the cause of the recent plateauing of global atmospheric temperatures?

That is, did all the energy needed to melt all that ice have a cooling effect on the rest of the planet?

Note that the ocean heat content includes loss of sea ice area, but not loss of sea ice thickness. Melting a centimeter of ice takes about the same amount of energy as raising the temperature of a centimeter layer of temperate ocean water by ~80C. In the North Atlantic such heat would be noticed, but in the Arctic, it is not addressed. Failure to include this aspect of ocean heat content changes the shape of the curve.
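The ~80C figure can be checked with back-of-envelope arithmetic: divide the latent heat of fusion of ice by the specific heat of water. The constants below are round textbook values that I am assuming for the illustration, and the function name is made up. The second calculation adds a density correction for equal layer thickness rather than equal mass.

```python
def warming_equivalent(latent_heat, specific_heat, density_ratio=1.0):
    """Temperature rise (K) that the energy needed to melt ice would give water.

    latent_heat   : J/g needed to melt the ice
    specific_heat : J/(g K) of the water being warmed
    density_ratio : ice density / water density (1.0 means equal mass)
    """
    return latent_heat * density_ratio / specific_heat

# Equal mass, fresh water: 334 J/g over 4.18 J/(g K).
per_mass = warming_equivalent(334.0, 4.18)

# Equal thickness, seawater: ice ~0.917 g/cm^3, seawater ~1.025 g/cm^3,
# seawater specific heat ~3.99 J/(g K). All approximate.
per_layer = warming_equivalent(334.0, 3.99, 0.917 / 1.025)
```

Either way the answer lands in the 75 to 80 degree range, so the rule of thumb holds to within the precision of the constants.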

Gavin – this is a very useful post on a number of fronts, especially for those of us teaching AGW. As I’ve been going through the material this quarter, I was struck that the current global temperature plateau has started to look a bit like what occurred post-WWII. Assuming the hypothesis that aerosol growth outpaced GHG growth explains the post-WWII plateau, is there any way to better assess if this could also be the case today, sensu Kaufmann et al., 2011, PNAS, and as supported by China’s coal use rising 9% per yr?

The linked post states that the original 1988 projections were based on future concentrations of greenhouse gases, although scenarios B and C included a volcanic eruption. There was no mention of solar, ENSO, or sulphates. While I agree that scenario B is likely an overestimate, atmospheric concentrations are growing at slightly more than a linear rate (scenario B), similar to the existing exponential trend (scenario A) when he first made his predictions. https://www.realclimate.org/index.php/archives/2007/05/hansens-1988-projections/

If you look at last year’s plots, the difference between HadCRUT3 and HadCRUT4 is pretty obvious. Looks like the HadCRUT4 line has moved up quite a bit.

David Lea,

The “plateau” looks more like the one in the 80s and the one in the 90s–it is most instructive to look at the figure from Foster and Rahmstorf. We know that ENSO, solar irradiance and volcanic eruptions are important. Chinese aerosols…maybe.

@Ray #15. You’re not going to get very far with volcanic aerosols in explaining the current plateau. Also, I do not see any sequence of years in the 80’s and 90’s that includes a 12 year stretch with no temperature rise, as the current situation illustrates.

How are the aerosols treated in the models? Are they continuously added according to some measured local amount and type or are they “averaged in” in some more generic way? According to a recent article on the last decade, there was a large global increase 2000-2005 followed by an even larger decrease 2005-2010 where China had a massive reduction (i.e. if you can trust their official numbers…). But I suppose the geographical patterns are critical for the total effect, including clouds. For example, India is very different from Europe.

[Response: There are no 3D time varying aerosol fields from observations, so all models use model-generated fields. Those fields are driven by emissions of aerosols and aerosol precursors, the atmospheric chemistry, and the transport, settling and washout calculated from the dynamics, rainfall patterns etc. Some models do this once ahead of time, and then use the calculated fields directly; other models have the calculations interactively so that changes in climate or other forcings can impact them. The fields themselves are validated against what observations there are, but there is still a lot of variation in composition and spatial patterns - and of course, they are only as good as the emission inventories. These are very much a work in progress and not all official statistics of emissions are as reliable as others. And don’t get me started on the indirect effects… – gavin]

For Dutch speakers, a similar data update has been posted to my Dutch blog by Jos Hagelaars: http://klimaatverandering.wordpress.com/2013/01/27/een-data-update-van-2012/

#18 Thanks for your answer, Gavin. I suppose one can’t really blame you for not being able to reduce the sensitivity interval. Rather, that could be interpreted as sign of honesty :-)

Dr. Lea, it’s really nice for you to drop by. I always enjoy external scientists who are big names in the field coming in and discussing issues on RealClimate- just one thing that separates this blog from many others.

I personally don’t find the last decade nearly as interesting as many others do, but Jim Hansen’s “recent” paper (“Earth’s energy imbalance and implications”, Atmos. Chem. Phys., 11, 13421-13449, 2011) argues that the volcanic forcing from Pinatubo and decreased solar irradiance are relevant over this period. Other work (e.g., Solomon et al, The Persistently Variable “Background” Stratospheric Aerosol Layer and Global Climate Change, 2011, Science, and a GRL paper by John Vernier) argues that smaller tropical eruptions and high-altitude aerosol fluctuations are relevant too. Susan Solomon also had that interesting paper on stratospheric water vapor fluctuations. Of course, anthropogenic aerosol emissions, wherever they might be increasing or decreasing, must physically have *some* impact.

But you have something like Avogadro’s number of “small forcings” operating over the last decade that have been proposed and lots of debate about how many centi- and milli-kelvins you can attribute to each one. This is all superimposed on natural variability, and the recent (decade-long) observed global temperature evolution falls well within its range anyway. This all complements issues like the distribution of the surplus energy in the system (Trenberth, etc) and the unknown climate sensitivity.

To use your own research as an analog, I find it somewhat like trying to figure out what part of the deep-sea oxygen isotope record is due to ice-volume vs. temperature changes (and all other issues) to within less than a percent understanding. I applaud the people working on it, but it’s very tough, and one reason why recent comments like “the additional decade of temperature data from 2000 onwards…can only work to reduce estimates of sensitivity” from James Annan just don’t fly.

By the way, the attribution for the post-WW2 period seems harder. I certainly think aerosol emissions have to have some role, and I think the differential NH/SH temperature evolution during that period is good evidence of this, but natural variability has also been invoked to explain some of this too (Ray Pierrehumbert talked about that issue in his AGU session on successful predictions).

Ernst said: “I believe Hansen only used greenhouse gases for his forcings, as he felt all other forcings were insignificant.”

Hansen 1988 did use other forcings, but he decoupled the ocean feedback, writing, “we stress that this “surprise-free” representation of the ocean excludes the effects of natural variability of ocean transports and the possibility of switches in the basic mode of ocean circulation.”

His models, and many that are derived from his legacy, typically show uniform ocean warming and completely miss the observed alternating temperatures of the Pacific Decadal Oscillation (PDO). In Hansen’s defense the PDO wasn’t named for another decade. However, current models have not yet correctly incorporated the PDO, which is why models such as those used by Dai (2012) failed to capture droughts in Africa or the wetter trend in North America from 1950 to 2000.

Does 2012 represent the worst performance of models against observation of annual mean anomaly, or was 2008 worse?

there’s a typo in the Title. Comparions ?

[Response: oops! – gavin]

Re- Comment by AJ — 8 Feb 2013 @ 8:05 PM

Because of the noisy nature of climate data, your question is a non sequitur (a logical error).

Steve

@Chris #21. Thanks for your kind remarks. I agree it’s complex but still very interesting to try to figure out. The cause of the plateau will probably be clear in hindsight, although I’m not sure this has proven to be the case for the 1946-76 plateau. But we obviously have more info to work with now. I also think it’s interesting in this context to consider the Hansen 2012 temperature update, which demonstrates (Fig. 5) that GHG forcing growth rates are lower today than at their peak between 1975-1990 as well as in any of the IPCC SRES scenarios. I would assume that this could not have been anticipated in Hansen (1988).

Curious, educated non-scientist here. I find it very frustrating that the ‘aerosal’ component is not easily quantified and incorporated into the models. As a non-scientist I can only go by my ‘gut’…I look at the fairly similar surface warming ‘plateau’ in the 1940-1970 period (which is, I believe, mostly ascribed to WW II and post war industrial boom aerosals) and I look at the exponential and explosive industrial output of Asia/CHINA! (fueled by coal-fire plants) since 2000 and…gosh it certainly looks eerily similar. I have read extensively on this and other blogs re: OHC and ENSO and lower solar output and Foster and Rahmstorf, etc…Is there anyone out there more science-y than myself who also feels that we may mostly be looking at a massive (and temporary) episode of aerosal damping?…and that as the aerosals necessarily diminish (see the extreme pollution episode in Beijing last week) a bunch more energy is gonna come in and make itself at home?

@ Steve Fish, my question regarding whether this past year’s modeling results were the most inaccurate since the models were developed is most certainly not a “non sequitur”. It is an objective question with an easily determined answer.

Yet I find myself amused at your comment. A non-sequitur is when someone comes to a conclusion with a logical disconnect from the premise. Now feel free to re-read your comment, note where the comma falls, and decide for yourself if the conclusion is a logical result of the premise. Hint: it is not.

David Goldstein @27 — It is spelled aerosol, but yes you have it about right.

It’s tempting to try to “explain” the temperature record for the last 10 or 12 years by things like aerosols etc. But before taking such speculation too far, remember that it’s by no means certain that there’s anything to explain.

The very first graph shows that temperature has not strayed from its expected path during this time span. The second shows that when some of the known factors are accounted for, there isn’t any “12 year stretch with no temperature rise.” Those factors aren’t just “possible” influences — if you deny that ENSO, volcanic eruptions, and the sun influence global temperature, then you’re not being sensible. And if you deny that they have tended to drive temperature down during the purported “12 year stretch,” then you haven’t looked at the numbers. That issue is addressed here:

http://tamino.wordpress.com/2012/04/05/decadal-trend-in-temperature/

Speculation is fine, and I too would love to know more about the impact of a lot of things, like aerosol loading, on climate. But I repeat, don’t get too fixated on the idea that we need to “explain” the last dozen or so years — consider whether or not there’s really anything to explain.

David Lea #26,

My understanding is that the Kaufmann et al. Asian sulfate hypothesis is essentially ruled out by satellite observations of the top of the atmosphere energy imbalance.

Kevin Trenberth, for instance, noted that the satellite observations are accurate enough to track the change in solar insolation from the 11-year sunspot cycle.

http://www.skepticalscience.com/Tracking_Earths_Energy.html

So if forcing from more aerosols had overwhelmed the GHG forcing from CO2 we ought to be able to observe this. Someone please correct me if I’m wrong.

Do we have models for the effects of global warming that can be updated in a similar fashion to these models?

Sea level, number of hurricanes, malaria, water supply, crop loss, etc.

@Jim Cross, #32. Great question. I’m not an expert, but I would think sea level rise would be the easiest to model. Chaos & spatial variability would be big factors in the other effects you mention. And I doubt all the interactions are understood well enough. Lot of debate about hurricanes–one possibility is that the frequency of hurricanes doesn’t change, or even declines, but the frequency of large, high-energy hurricanes increases. Inland, I suspect if big areas in the ‘bread basket’ move to a regime of extended droughts punctuated by intense storms (6-15 inch rainfalls in a day or two), that it will have horrible effects on both water supply & crop loss. We’ll mine aquifers when it’s dry (we already do); much of the water in intense downpours will run off before it soaks into the ground. In addition to reducing greenhouse gas emissions, we need to be making the right adaptive decisions now. Big agriculture, as well as urbanites with lawns, need to be thinking about how they can reduce & conserve water resources, increase infiltration (how we develop & farm has a big effect on infiltration of water into the ground to recharge aquifers), and create some safety nets for when the primary water system runs dry.

Re #2 Philip Machanick. Your links.

What error bars did Lindzen think should be attached to his estimates? The same question applies to your second link about his disciple Matt Ridley.

The only evidence I can find about these is an early investigation by Morgan and Keith 1995, Env. Sci. & Tech. vol. 29 A468-A476, in which a number of experts were asked questions, e.g. about the climate sensitivity. It includes a table (Fig. 2) of 16 estimates by different un-named experts together with confidence ranges. Just one entry, from expert number 5, stands out apart from the rest with a very low estimate of 0.3K for the sensitivity, asserted with an amazingly low ‘standard deviation’ (confidence interval) of 0.2K.

If, as suggested elsewhere, this entry was due to Richard Lindzen, that would give the impression that he was, in 1995, one of the most supremely confident workers in the subject.

“it has shown skill in that it has out-performed any reasonable naive hypothesis that people put forward in 1988”

That is a very kind interpretation. I think “naive” would certainly be an accurate description. The choice of a null model here for comparison is not appropriate, nor reasonable.

Nobody in 1988 reasonably asserted they expected temperatures to maintain the same trajectory they had been on for the last 75 years? Hansen, with his huge budget and supercomputers, has been outperformed by a ruler and graph paper.

Hansen projected an accelerating temperature trend based on BAU carbon output. That hasn’t happened, yet. Why not is certainly an interesting question. But the continued assertion that his results are “accurate” flies in the face of common sense.

If this plateau continues for another 5 years or so, the current models will have exited the 95% thresholds in only the first 15 years of the forecast. I hope somebody is questioning performance at this point instead of manufacturing error adjustments. Are they? It’s a serious question.

What tamino #30 said.

Let me add for clarity (though most readers will already be aware of this) that, while the model runs are all different realizations of the modelled system, the empirical time series (HadCRUT4, NCDC and GISTemp in the first figure) all represent a single run of the Earth’s real climate system. Every time series within this set contains the same “copy” of natural variability: ENSO, volcanism and the Sun are what they are, and every now and then they just trend down.

Re- Comment by AJ — 9 Feb 2013 @ 12:37 AM

The topic of this thread is climate model projections to which comparisons of noisy mean temperatures from individual years provide no useful information (see Tamino’s post, above, regarding comparisons to multiple years of data). In this context the descriptive word, “worse,” is a pre-judgment that makes your comment a non sequitur.

If it will make you happy, just reread the first graph of the topical post of these comments. Steve

Can someone explain why graph 1 shows a rise in temperature for HadCRUT4 between 2006 and 2007?

I’ve tried to replicate this, offsetting everything to the 1980-1999 base period, and I keep getting slight cooling between 2006 and 2007 for HadCRUT4; not warming, as suggested by graph 1.

Thanks.

[Response: thanks for double checking. Turns out I was plotting the Dec-Nov means by mistake instead of the Jan-Dec values. I will update the graphs accordingly. (DONE). – gavin]

Aside on sulfates — I recall reading some years back that sulfates from the original coal burning (at high latitudes, England, W. Europe and the US in particular) turn out to behave differently in the atmosphere than sulfates from contemporary China and India — because the latter are mostly being burned much closer to the equator, get different insolation and different chemistry results as the particles are carried through the atmosphere. There wasn’t enough info at the time to say much other than “isn’t acting the same way” but perhaps more has been found. Someone else can find it ….

What was the method with which ENSO was removed from the adjusted temperature trend? Intuitively it makes no sense. If ENSO is deemed cyclical, then the temperature curve should not become steeper. If ENSO is believed to add warmth to the average, then the 1980s and 90s should be lowered more than appears to have been done.

Adjustments should lower those 2 decades much more than it appears to have been done.

Furthermore, if adjustments are being made, why isn’t Arctic amplification removed? The shift in the Arctic Oscillation shifted winds, causing an increased loss of sea ice (Rigor 2002, 2004). The amplified warming of the Arctic was not due to added heat but due to ventilated heat. Thus much of the warming in the Arctic should be subtracted from the global average before we can infer how much CO2 has contributed. Likewise, growing urbanization clearly increased minimum temperatures (Karl 1995), and much of the warming has been due to increased minimum temperatures. A more realistic trend should consider maximum and minimum temperatures separately.

On the missing tick marks in fig. 3: if it is an IDL script, sometimes just adding a carriage return before closing the output file can get the plotting to complete. Usually tick marks are drawn first, though, so it seems strange.

[Response: Fixed. Sorry about that. – gavin]

What tamino #30 and Martin Vermeer #36 said.

Adding that usually, when models go wrong, one identifies a couple of the largest deviations between reality and the model, and proceeds to find out why the heck they were wrong. To take a specific example, the largest deviation (missing heat) was adequately explained by increased heat exchange between the 0-700 m and 700-2000 m ocean layers, so would it now be time to get the Arctic ice loss and the China-India brown cloud effect on Siberia and the North Pacific correct? Or might the biggest uncertainty be the underside melting of Antarctic and Arctic outlet glaciers? Or maybe the various ecological effects (such as bark beetle) might be worthy of note? CO2 might well be the biggest control knob in the atmosphere and the whole planet (unless it’s the methane). The thing is, one can measure these variables, find out what explains most of the leftover noise, and build a better model.

Don’t we have far more detailed observations than just extent regarding Arctic sea ice? How do models perform against thickness data?

Deconvoluter #34: when have alleged skeptics ever been skeptical of their own work? On the contrary, they are absurdly confident of the correctness of their own results in my experience, even when taken apart on the content.

To be fair, in this instance it’s the Skeptical Science site that doesn’t include error bars and their “Lindzen” graphs are their interpretation of his comments, not his data.

tamino #30: curiously, it’s a denier meme that climate scientists ignore natural influences. It says a lot about their side of the “debate” that they attempt to score points by accusing the opposition of the logic flaws in their own arguments.

Skeptical Science also looks at the effect of natural influences, though in this case only over the last 16 years.

Philip,

Unfortunately, people on both sides of the aisle have been absurdly confident in their own results, some to the point of claiming that recent data is inaccurate or needs adjustments to fall into order. Your second point can also be applied to both: they use their own interpretation of others’ comments, often by using illogical arguments. I find that this becomes more common the closer one gets to either extreme. While there may not exist a happy medium (yet), there are many scientists who seek to include both natural and manmade influences. However, there are a few (on both sides) who minimize either influence on climate changes, almost to the point of ignoring them.

Naive question coming up: while the CMIP3 models are showing the cooling effect of volcanoes quite well, they are not showing the ENSO-caused temperature fluctuations. Why is it not possible simply to turn up that “gain” on ocean currents in hindcasts until they match the temperature record more closely?

Re- Comment by Dan H. — 10 Feb 2013 @ 4:46 PM

You say- “both sides of the aisle have been absurdly confident in their own results.”

Could you please identify who these two sides are that are so at odds and provide a couple of the “illogical arguments” you mention.

Captcha may have identified the problem- “further geboop”

Steve

Richard Lawson-

While individual models all simulate ENSO dynamics, they won’t match up with the specific years observed in the instrumental record (models will simulate an El Niño event during different years, etc). This is not much different from the fact that models all simulate weather, but storms won’t coincide with the timing of storms in nature. The initial condition problem applies just as much on decadal timescales as on synoptic timescales. Reality itself is just one member of an ensemble of possibilities, whereas volcanic eruptions are all forced at a specific time. Moreover, when you average over all the ensemble members, you lose some of the “weather variability” that you’d get in an individual realization.
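To illustrate the averaging point with a toy example (purely synthetic, not CMIP3 output): each run below gets the same trend and the same volcanic dip in a fixed year, plus an ENSO-like oscillation whose phase and period are drawn at random per run. All amplitudes, periods and function names are assumptions chosen for illustration only.

```python
import math
import random
from statistics import mean, pstdev


def synthetic_run(rng, n_years=60, trend=0.02, enso_amp=0.15, volcano_year=30):
    """One toy realization: common trend + randomly phased oscillation
    + a volcanic cooling of 0.3 in the same year for every run."""
    phase = rng.uniform(0, 2 * math.pi)
    period = rng.uniform(3.0, 6.0)  # years, illustrative ENSO-like range
    series = []
    for t in range(n_years):
        value = trend * t
        value += enso_amp * math.sin(2 * math.pi * t / period + phase)
        if t == volcano_year:
            value -= 0.3
        series.append(value)
    return series


def ensemble_mean(runs):
    """Year-by-year average across all runs."""
    return [mean(vals) for vals in zip(*runs)]


def detrended_spread(series, trend=0.02, skip=()):
    """Standard deviation of the series after removing the known trend,
    optionally ignoring some years (e.g. the volcanic year)."""
    resid = [v - trend * t for t, v in enumerate(series) if t not in skip]
    return pstdev(resid)
```

With, say, 50 runs generated from `random.Random(0)`, the ensemble mean keeps the volcanic dip almost intact because every run has it in the same year, while the unsynchronized oscillations largely cancel, so the detrended spread of the mean is far smaller than that of any single run.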

Goldstein 27 – overall, aerosols might not dampen warming. The thinking now is that soot warms.

http://www.nytimes.com/2013/01/16/science/earth/burning-fuel-particles-do-more-damage-to-climate-than-thought-study-says.html?_r=0

[Response: No. Soot has been known to drive warming for years, and the effect of reflective aerosols is significantly larger in the net. -Gavin]