There is a new paper on Science Express that examines the constraints on climate sensitivity from looking at the last glacial maximum (LGM), around 21,000 years ago (Schmittner et al, 2011) (SEA). The headline number (2.3ºC) is a little lower than IPCC’s “best estimate” of 3ºC global warming for a doubling of CO2, but within the likely range (2-4.5ºC) of the last IPCC report. However, there are reasons to think that the result may well be biased low, and stated with rather more confidence than is warranted given the limitations of the study.

Climate sensitivity is a key characteristic of the climate system, since it tells us how much global warming to expect for a given forcing. It usually refers to how much surface warming would result from a doubling of CO2 in the atmosphere, but is actually a more general metric that gives a good indication of what any radiative forcing (from the sun, a change in surface albedo, aerosols etc.) would do to surface temperatures at equilibrium. It is something we have discussed a lot here (see here for a selection of posts).

Climate models inherently predict climate sensitivity, which results from the basic Planck feedback (the increase of infrared cooling with temperature) modified by various other feedbacks (mainly the water vapor, lapse rate, cloud and albedo feedbacks). But observational data can reveal how the climate system has responded to known forcings in the past, and hence give constraints on climate sensitivity. The IPCC AR4 (9.6: Observational Constraints on Climate Sensitivity) lists 13 studies (Table 9.3) that constrain climate sensitivity using various types of data, including two using LGM data. More have appeared since.
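To make the feedback language above concrete, here is a minimal sketch of the standard linear feedback arithmetic. It is not how any of the models discussed here compute sensitivity. The 2xCO2 forcing (about 3.7 W/m2) and the Planck response (about 3.2 W/m2 per ºC) are commonly quoted round numbers that we assume for illustration, and the function names are hypothetical.

```python
F_2XCO2 = 3.7  # W/m2, assumed forcing for a doubling of CO2
PLANCK = 3.2   # W/m2 per degC, assumed Planck (infrared) restoring response


def no_feedback_sensitivity(f2x=F_2XCO2, planck=PLANCK):
    """Warming per doubling if only the Planck response operated."""
    return f2x / planck


def sensitivity_with_feedbacks(net_feedback, f2x=F_2XCO2, planck=PLANCK):
    """Warming per doubling when other feedbacks are added.

    net_feedback is the sum of the water vapor, lapse rate, cloud and
    albedo feedbacks in W/m2 per degC; positive values amplify warming.
    """
    restoring = planck - net_feedback
    if restoring <= 0:
        raise ValueError("net feedback cancels the Planck response: no stable equilibrium")
    return f2x / restoring


def feedback_needed(target_sensitivity, f2x=F_2XCO2, planck=PLANCK):
    """Net feedback (W/m2 per degC) implied by a given sensitivity."""
    return planck - f2x / target_sensitivity


if __name__ == "__main__":
    print("Planck-only response:", round(no_feedback_sensitivity(), 2), "degC")
    for s in (2.3, 3.0, 4.5):
        print(s, "degC per doubling implies net feedback of",
              round(feedback_needed(s), 2), "W/m2 per degC")
```

Under these assumed numbers, the step from 2.3ºC to 3ºC corresponds to a fairly modest change in the net feedback, which is one reason the upper end of the range is hard to pin down.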

It is important to regard the LGM studies as just one set of points in the cloud yielded by other climate sensitivity estimates, but the LGM has been a frequent target because it was a period for which there is a lot of data from varied sources, climate was significantly different from today, and we have considerable information about the important drivers – like CO2, CH4, ice sheet extent, vegetation changes etc. Even as far back as Lorius et al (1990), estimates of the mean temperature change and the net forcing were combined to give estimates of sensitivity of about 3ºC. More recently, Köhler et al (2010) (KEA) used estimates of all the LGM forcings, and an estimate of the global mean temperature change, to constrain the sensitivity to 1.4-5.2ºC (5–95%), with a mean value of 2.4ºC. Another study, using a joint model-data approach (Schneider von Deimling et al, 2006b), derived a range of 1.2 – 4.3ºC (5-95%). The SEA paper, with its range of 1.4 – 2.8ºC (5-95%), is merely the latest in a series of these studies.
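The data-only approach used in studies like Lorius et al and KEA boils down to a ratio, which can be sketched as follows. The temperature change and forcing in the example call are placeholders chosen only to show the arithmetic; they are not values from any of the papers cited. The 3.7 W/m2 forcing for doubled CO2 is an assumed round number and the function name is hypothetical.

```python
F_2XCO2 = 3.7  # W/m2, assumed forcing for a doubling of CO2


def sensitivity_per_doubling(delta_t, delta_f, f2x=F_2XCO2):
    """Scale an observed temperature/forcing ratio to a CO2 doubling.

    delta_t: global mean temperature change (degC), negative for the LGM
    delta_f: net radiative forcing (W/m2), negative for the LGM
    """
    if delta_f == 0:
        raise ValueError("forcing must be non-zero")
    return delta_t / delta_f * f2x


if __name__ == "__main__":
    # Placeholder inputs, not taken from any study discussed in this post.
    print(round(sensitivity_per_doubling(delta_t=-5.0, delta_f=-6.5), 2))
```

The ratio makes the dependencies plain: for a fixed sensitivity, a smaller reconstructed cooling has to be matched by a proportionately smaller net forcing, and vice versa.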

Definitions of sensitivity

The standard definition of climate sensitivity comes from the Charney report in 1979, where the response was defined as that of an atmospheric model with fixed boundary conditions (ice sheets, vegetation, atmospheric composition) but variable ocean temperatures, to 2xCO2. This has become a standard model metric because it is relatively easy to calculate. It is not, however, the same thing as what would really happen to the climate with 2xCO2, because of course those ‘fixed’ factors would not stay fixed.

Note then, that the SEA definition of sensitivity includes feedbacks associated with vegetation, which was considered a forcing in the standard Charney definition. Thus for the sensitivity determined by SEA to be comparable to the others, one would need to know the forcing due to the modelled vegetation change. KEA estimated that LGM vegetation forcing was around -1.1+/-0.6 W/m2 (because of the loss of trees in polar latitudes, replacement of forests by savannah etc.), and if that was similar to the SEA modelled impact, their Charney sensitivity would be closer to 2ºC (down from 2.3ºC).
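The adjustment described above can be sketched in a few lines. Treating vegetation as a forcing rather than a feedback adds its forcing to the denominator of the temperature/forcing ratio, so the sensitivity shrinks by the factor (other forcings)/(other forcings + vegetation forcing). The -7 W/m2 used below for the non-vegetation LGM forcing is purely an assumption for illustration (it is not a number from SEA or KEA), and the function name is hypothetical.

```python
def charney_equivalent(sensitivity_with_veg_feedback, other_forcing, veg_forcing):
    """Convert a sensitivity that counts vegetation as a feedback into one
    that counts it as a forcing (the Charney convention).

    other_forcing: net LGM forcing excluding vegetation (W/m2)
    veg_forcing:   LGM vegetation forcing (W/m2)
    """
    total = other_forcing + veg_forcing
    if total == 0:
        raise ValueError("total forcing must be non-zero")
    return sensitivity_with_veg_feedback * other_forcing / total


if __name__ == "__main__":
    ASSUMED_OTHER_FORCING = -7.0  # W/m2, hypothetical value for illustration only
    # Central KEA vegetation forcing and its quoted +/-0.6 W/m2 range.
    for veg in (-0.5, -1.1, -1.7):
        print(veg, round(charney_equivalent(2.3, ASSUMED_OTHER_FORCING, veg), 2))
```

With a different assumed non-vegetation forcing the size of the correction changes, which is why one would need the actual forcing from the modelled vegetation change to make a proper comparison.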

Other studies have also expanded the scope of the sensitivity definition to include even more factors, a definition different enough to have its own name: the Earth System Sensitivity. Notably, both the Pliocene warm climate (Lunt et al., 2010), and the Paleocene-Eocene Thermal Maximum (Pagani et al., 2006), tend to support Earth System sensitivities higher than the Charney sensitivity.

Is sensitivity symmetric?

The first thing that must be recognized regarding all studies of this type is that it is unclear to what extent behavior in the LGM is a reliable guide to how much it will warm when CO2 is increased from its pre-industrial value. The LGM was a very different world than the present, involving considerable expansions of sea ice, massive Northern Hemisphere land ice sheets, geographically inhomogeneous dust radiative forcing, and a different ocean circulation. The relative contributions of the various feedbacks that make up climate sensitivity need not be the same going back to the LGM as in a world warming relative to the pre-industrial climate. The analysis in Crucifix (2006) indicates that there is not a good correlation between sensitivity on the LGM side and sensitivity to 2XCO2 in the selection of models he looked at.

There has been some other work to suggest that overall sensitivity to a cooling is a little less (80-90%) than sensitivity to a warming, for instance Hargreaves and Annan (2007), so the numbers of Schmittner et al. are less different from the “3ºC” number than they might at first appear. The factors that determine this asymmetry are various, involving ice albedo feedbacks, cloud feedbacks and other atmospheric processes, e.g., water vapor content increases approximately exponentially with temperature (Clausius-Clapeyron equation) so that the water vapor feedback gets stronger the warmer it is. In reality, the strength of feedbacks changes with temperature. Thus the complexity of the model being used needs to be assessed to see whether it is capable of addressing this.
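As a back-of-the-envelope illustration of the asymmetry argument, one can divide a sensitivity inferred from a cooling by the 80-90% ratio quoted above. This is only a sketch of the scaling and not a calculation from Hargreaves and Annan or from SEA; the function name is hypothetical.

```python
def warming_equivalent(cooling_sensitivity, cooling_to_warming_ratio):
    """Sensitivity to warming implied by a sensitivity inferred from cooling,
    if the cooling response is a fixed fraction of the warming response."""
    if cooling_to_warming_ratio <= 0:
        raise ValueError("ratio must be positive")
    return cooling_sensitivity / cooling_to_warming_ratio


if __name__ == "__main__":
    for ratio in (0.9, 0.8):
        print(ratio, round(warming_equivalent(2.3, ratio), 2))
```

Applied to the 2.3ºC headline number, this gives roughly 2.6ºC to 2.9ºC, which is the sense in which the result is closer to 3ºC than it first appears.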

Does the model used adequately represent key climate feedbacks?

Typically, LGM constraints on climate sensitivity are obtained by producing a large ensemble of climate model versions where uncertain parameters are systematically varied, and then comparing the LGM simulations of all these models with “observed” LGM data, i.e. proxy data, by applying a statistical approach of one sort or another. It is noteworthy that very different models have been used for this: Annan et al. (2005) used an atmospheric GCM with a simple slab ocean, Schneider et al. (2006) the intermediate-complexity model CLIMBER-2 (with both ocean and atmosphere of intermediate complexity), while the new Schmittner et al. study uses an oceanic GCM coupled to a simple energy-balance atmosphere (UVic).

These models all suggest potentially serious limitations for this kind of study: UVic does not simulate the atmospheric feedbacks that determine climate sensitivity in more realistic models, but rather fixes the atmospheric part of the climate sensitivity as a prescribed model parameter (surface albedo, however, is internally computed). Hence, the dominant part of climate sensitivity remains the same, whether looking at 3ºC cooling or 3ºC warming. Slab oceans on the other hand, do not allow for variations in ocean circulation, which was certainly important for the LGM, and other intermediate models have all made key assumptions that may impact these feedbacks. However, in view of the fact that cloud feedbacks are the dominant contribution to uncertainty in climate sensitivity, the fact that the energy balance model used by Schmittner et al cannot compute changes in cloud radiative forcing is particularly serious.

Uncertainties in LGM proxy data

Perhaps the key difference between Schmittner et al. and some previous studies is their use of all available proxy data for the LGM, whilst other studies have selected a subset of proxy data that they deemed particularly reliable (e.g., in Schneider et al., SST data from the tropical Atlantic, Greenland and Antarctic ice cores and some tropical land temperatures). Uncertainties of the proxy data (and the question of knowing what these uncertainties are) are crucial in this kind of study. A well-known issue with LGM proxies is that the most abundant type of proxy data, using the species composition of tiny marine organisms called foraminifera, probably underestimates sea surface cooling over vast stretches of the tropical oceans; other methods like alkenone and Mg/Ca ratios give colder temperatures (but aren’t all coherent either). It is clear that this data issue makes a large difference in the sensitivity obtained.

The Schneider et al. ensemble constrained by their selection of LGM data gives a global-mean cooling range during the LGM of 5.8 +/- 1.4ºC (Schneider von Deimling et al, 2006), while the best fit from the UVic model used in the new paper has 3.5ºC cooling, well outside this range (weighted average calculated from the online data, a slightly different number is stated in Nathan Urban’s interview – not sure why).
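For readers who want to repeat this kind of calculation, a global mean from gridded output has to be area weighted, since grid cells on a regular latitude-longitude grid shrink towards the poles. The sketch below uses cos(latitude) weights on a toy grid. The grid, the values and the function name are all hypothetical, and nothing here assumes the actual layout of the files in the SEA supplementary material.

```python
import math


def area_weighted_mean(lats_deg, field):
    """Area-weighted mean on a regular latitude-longitude grid.

    lats_deg: latitude of each row's cell centres, in degrees
    field:    field[i][j] is the value at latitude lats_deg[i] and
              longitude index j; None marks a missing value
    """
    total = 0.0
    weight_sum = 0.0
    for lat, row in zip(lats_deg, field):
        weight = math.cos(math.radians(lat))  # cell area scales with cos(latitude)
        for value in row:
            if value is None:
                continue
            total += weight * value
            weight_sum += weight
    if weight_sum == 0.0:
        raise ValueError("no valid grid cells")
    return total / weight_sum


if __name__ == "__main__":
    # Toy polar-amplified cooling pattern (degC), not real data.
    lats = [-60.0, 0.0, 60.0]
    cooling = [[-6.0, -6.0], [-2.0, -2.0], [-6.0, -6.0]]
    flat = [v for row in cooling for v in row]
    print("unweighted:", round(sum(flat) / len(flat), 2))
    print("area weighted:", round(area_weighted_mean(lats, cooling), 2))
```

In the toy case the unweighted average overstates the global cooling because the strongly cooled high-latitude cells count for too much; getting the weights slightly wrong is an easy way to end up a few tenths of a degree off.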

Curiously, the mean SEA estimate (2.4ºC) is identical to the mean KEA number, but there is a big difference in what they concluded the mean temperature at the LGM was, and a small difference in how they defined sensitivity. Thus the estimates of the forcings must be proportionately less as well. The differences are that the UVic model has a smaller forcing from the ice sheets, possibly because of an insufficiently steep lapse rate (5ºC/km instead of a steeper value that would be more typical of drier polar regions), and also a smaller change from increased dust.

Model-data comparisons

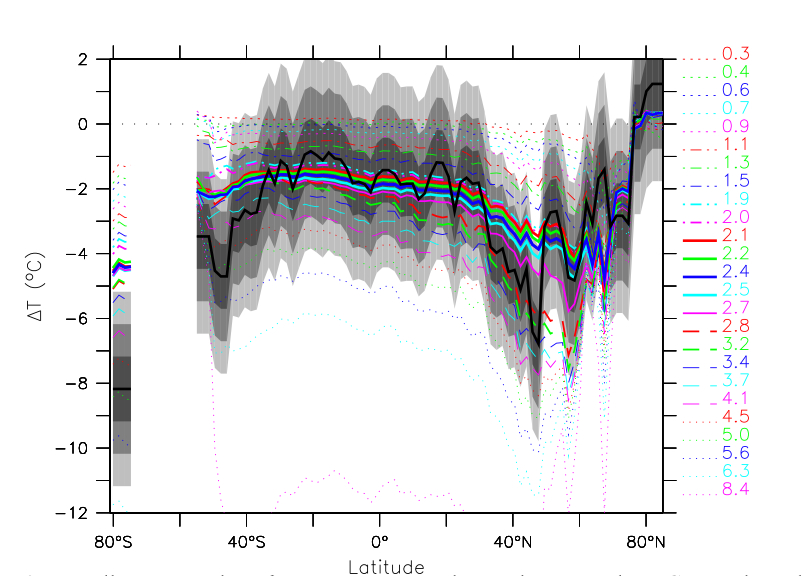

So there is a significant difference in the headline results from SEA compared to previous results. As we mentioned above though, there are reasons to think that their result is biased low. There are two main issues here. First, the constraint to a lower sensitivity is dominated by the ocean data – if the fit is made to the land data alone, the sensitivity would be substantially higher (though with higher uncertainty). The best fit for all the data underpredicts the land temperatures significantly.

However, even in the ocean the fit to the data is not that good in many regions – particularly the southern oceans and Antarctica, but also in the Northern mid-latitudes. This occurs because the tropical ocean data are weighing more heavily in the assessment than the sparser and possibly less accurate polar and mid-latitude data. Thus there is a mismatch between the pattern of cooling produced by the model, and the pattern inferred from the real world. This could be because of the structural deficiency of the model, or because of errors in the data, but the (hard to characterise) uncertainty in the former is not being carried into the final uncertainty estimate. None of the different model versions here seem to get the large polar amplification of change seen in the data, for instance.

Response and media coverage

All in all, this is an interesting paper and methodology, though we think it slightly underestimates the most likely sensitivity, and rather more seriously underestimates the chances that the sensitivity lies at the upper end of the IPCC range. Some other commentaries have come to similar conclusions: James Annan (here and here), and there is an excellent interview with Nathan Urban here, which discusses the caveats clearly. The perspective piece from Gabi Hegerl is also worth reading.

Unfortunately, the media coverage has not been very good. Partly, this is related to some ambiguous statements by the authors, and partly because media discussions of climate sensitivity have a history of being poorly done. The dominant frame was set by the press release which made a point of suggesting that this result made “extreme predictions” unlikely. This is fair enough, but had already been clear from the previous work discussed above. This was transformed into “Climate sensitivity was ‘overestimated’” by the BBC (not really a valid statement about the state of the science), compounded by the quote that Andreas Schmittner gave that “this implies that the effect of CO2 on climate is less than previously thought”. Who had previously thought what was left to the readers’ imagination. Indeed, the latter quote also prompted the predictably loony IBD editorial board to declare that this result proves that climate science is a fraud (though this is not Schmittner’s fault – they conclude the same thing every other Tuesday).

The Schmittner et al. analysis marks the insensitive end of the spectrum of climate sensitivity estimates based on LGM data, in large measure because it used a data set and a weighting that may well be biased toward insufficient cooling. Unfortunately, in reporting new scientific studies a common fallacy is to implicitly assume a new study is automatically “better” than previous work and supersedes this. In this case one can’t blame the media, since the authors’ press release cites Schmittner saying that “the effect of CO2 on climate is less than previously thought”. It would have been more appropriate to say something like “our estimate of the effect is less than many previous estimates”.

Implications

It is not all that earthshaking that the numbers in Schmittner et al come in a little low: the 2.3ºC is well within previously accepted uncertainty, and three of the IPCC AR4 models used for future projections have a climate sensitivity of 2.3ºC or lower, so that the range of IPCC projections already encompasses this possibility. (Hence there would be very little policy relevance to this result even if it were true, though note the small difference in definitions of sensitivity mentioned above.)

What is more surprising is the small uncertainty interval given by this paper, and this is probably simply due to the fact that not all relevant uncertainties in the forcing, the proxy temperatures and the model have been included here. In view of these shortcomings, the confidence with which the authors essentially rule out the upper end of the IPCC sensitivity range is, in our view, unwarranted.

Be that as it may, all these studies, despite the large variety in data used, model structure and approach, have one thing in common: without the role of CO2 as a greenhouse gas, i.e. the cooling effect of the lower glacial CO2 concentration, the ice age climate cannot be explained. The result, in common with many previous studies, actually goes considerably further than that. The LGM cooling is plainly incompatible with the existence of a strongly stabilizing feedback such as the oft-quoted Lindzen’s Iris mechanism. It is even incompatible with the low climate sensitivities you would get in a so-called ‘no-feedback’ response (i.e. just the Planck feedback – apologies for the terminological confusion).

It bears noting that even if the SEA mean estimate were correct, it still lies well above the ever-more implausible estimates of those that wish the climate sensitivity were negligible. And that means that the implications for policy remain the same as they always were. Indeed, if one accepts a very liberal risk level of 50% for mean global warming of 2°C (the guiderail widely adopted) since the start of the industrial age, then under midrange IPCC climate sensitivity estimates we have around 30 years before the risk level is exceeded. Specifically, to reach that probability level, we can burn a total of about one trillion metric tonnes of carbon. That gives us about 24 years at current growth rates (about 3%/year). Since warming is proportional to cumulative carbon, if the climate sensitivity were really as low as Schmittner et al. estimate, then another 500 GT would take us to the same risk level, some 11 years later.
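The timing arithmetic behind statements like these can be sketched as follows, assuming emissions grow exponentially at a constant rate. The current emission rate (10 GtC/year) and the amount already emitted (500 GtC) used in the example are round placeholder values that we assume for illustration; they are not the inputs behind the figures quoted above, so the output should not be expected to reproduce those figures exactly. The function name is hypothetical.

```python
import math


def years_to_emit(remaining_gtc, current_rate_gtc_per_yr, growth_per_yr):
    """Years needed to emit remaining_gtc if emissions start at
    current_rate_gtc_per_yr and grow continuously at growth_per_yr.

    Solves  rate * (exp(g * t) - 1) / g = remaining  for t.
    """
    if remaining_gtc < 0 or current_rate_gtc_per_yr <= 0:
        raise ValueError("need a non-negative budget and a positive emission rate")
    if growth_per_yr == 0:
        return remaining_gtc / current_rate_gtc_per_yr
    arg = 1.0 + growth_per_yr * remaining_gtc / current_rate_gtc_per_yr
    if arg <= 0:
        raise ValueError("budget is never reached at this rate of decline")
    return math.log(arg) / growth_per_yr


if __name__ == "__main__":
    TOTAL_BUDGET = 1000.0    # GtC, the "one trillion tonnes" in the text
    ALREADY_EMITTED = 500.0  # GtC, assumed placeholder
    CURRENT_RATE = 10.0      # GtC/year, assumed placeholder
    GROWTH = 0.03            # 3%/year, as in the text

    base = years_to_emit(TOTAL_BUDGET - ALREADY_EMITTED, CURRENT_RATE, GROWTH)
    extra = years_to_emit(TOTAL_BUDGET + 500.0 - ALREADY_EMITTED, CURRENT_RATE, GROWTH)
    print("years to reach the budget:", round(base, 1))
    print("delay bought by another 500 GtC:", round(extra - base, 1))
```

Whatever inputs are chosen, the qualitative point survives: with growing emissions, a much larger budget buys a comparatively short delay.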

References

- A. Schmittner, N.M. Urban, J.D. Shakun, N.M. Mahowald, P.U. Clark, P.J. Bartlein, A.C. Mix, and A. Rosell-Melé, "Climate Sensitivity Estimated from Temperature Reconstructions of the Last Glacial Maximum", Science, vol. 334, pp. 1385-1388, 2011. http://dx.doi.org/10.1126/science.1203513

- C. Lorius, J. Jouzel, D. Raynaud, J. Hansen, and H.L. Treut, "The ice-core record: climate sensitivity and future greenhouse warming", Nature, vol. 347, pp. 139-145, 1990. http://dx.doi.org/10.1038/347139a0

- P. Köhler, R. Bintanja, H. Fischer, F. Joos, R. Knutti, G. Lohmann, and V. Masson-Delmotte, "What caused Earth's temperature variations during the last 800,000 years? Data-based evidence on radiative forcing and constraints on climate sensitivity", Quaternary Science Reviews, vol. 29, pp. 129-145, 2010. http://dx.doi.org/10.1016/j.quascirev.2009.09.026

- T. Schneider von Deimling, H. Held, A. Ganopolski, and S. Rahmstorf, "Climate sensitivity estimated from ensemble simulations of glacial climate", Climate Dynamics, vol. 27, pp. 149-163, 2006. http://dx.doi.org/10.1007/s00382-006-0126-8

- D.J. Lunt, A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett, "Earth system sensitivity inferred from Pliocene modelling and data", Nature Geoscience, vol. 3, pp. 60-64, 2009. http://dx.doi.org/10.1038/NGEO706

- M. Pagani, K. Caldeira, D. Archer, and J.C. Zachos, "An Ancient Carbon Mystery", Science, vol. 314, pp. 1556-1557, 2006. http://dx.doi.org/10.1126/science.1136110

- M. Crucifix, "Does the Last Glacial Maximum constrain climate sensitivity?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL027137

- J.C. Hargreaves, A. Abe-Ouchi, and J.D. Annan, "Linking glacial and future climates through an ensemble of GCM simulations", Climate of the Past, vol. 3, pp. 77-87, 2007. http://dx.doi.org/10.5194/cp-3-77-2007

- J.D. Annan, J.C. Hargreaves, R. Ohgaito, A. Abe-Ouchi, and S. Emori, "Efficiently Constraining Climate Sensitivity with Ensembles of Paleoclimate Simulations", SOLA, vol. 1, pp. 181-184, 2005. http://dx.doi.org/10.2151/sola.2005-047

- T. Schneider von Deimling, A. Ganopolski, H. Held, and S. Rahmstorf, "How cold was the Last Glacial Maximum?", Geophysical Research Letters, vol. 33, 2006. http://dx.doi.org/10.1029/2006GL026484

Thanks for this article. I agree on the risk being only a little postponed if Schmittner is right. The risk is still there.

“the best fit from the UVic model used in the new paper has 3.5ºC cooling, well outside this range (weighted average calculated from the online data, a slightly different number is stated in Nathan Urban’s interview – not sure why)”

This is a typo in the published paper. An earlier version of our analysis found a different best-fit ECS (2.5 ºC, I think), with a LGM SAT cooling of 3.6 ºC. The published version of our analysis has a slightly lower ECS and less LGM cooling, but we neglected to update the manuscript.

Also, I think our supplemental material says our best fitting model is 2.4 ºC, which is also from an earlier version of the analysis. For the final analysis, I didn’t actually calculate the posterior mode (the “best fit”), just the mean and median.

The 3.3 ºC LGM cooling I quote in my interview is for the 2.35 ºC ECS UVic run, close to our median ECS estimate of 2.25 ºC. I haven’t computed the LGM cooling directly at the median estimate, which doesn’t correspond to any of our UVic runs. (I’d have to train an emulator on the full model output instead of just the data-present grid cells. This is possible, but I haven’t done it yet.)

[Response: Thanks. But the sat_sst_aice_2.35.dat file in the SI has -3.5 deg C cooling in SAT (assuming I didn’t mess up the area weighting). Can you check? – gavin]

As stated, it doesn’t really buy us much breathing space. At 2.3, the 2 degree limit is exceeded when CO2e reaches 550ppm. Does anyone think the world is going to get serious about mitigation, quickly enough, to stabilise below 550ppm?

We still hear talk of stabilising GHG concentrations at 450ppm CO2e. The only emissions scenarios the IPCC reported in AR4 that stabilised at 450ppm or lower saw global emissions PEAK in 2015. The big emitters in Durban, so the BBC tells me, aren’t even thinking of a DEAL before 2015, far less emissions peaking.

So 450ppm WILL be exceeded, the policy-makers seem to have decided. How long before the window to stabilisation at or below 500ppm is exceeded? (The scenarios in AR4 suggest that if CO2 emissions peak after 2020)

Anyone want to take a bet on when CO2 emissions are going to peak?

Group: the following excerpt sums the Schmittner Report for me:

“The first thing that must be recognized regarding all studies of this type is that it is unclear to what extent behavior in the LGM is a reliable guide to how much it will warm when CO2 is increased from its pre-industrial value.”

It is all about time lengths and CO2 concentration increases.

A drag racer can go from 0 to 150 in three minutes without much noticeable change save for the vehicle speed.

That car can go to 120 in seven seconds and the effects are dramatic. The car vibrates heavily. Great clouds of smoke pour from the burning rubber. The car is never really in control during that time.

I see the LGM as the former and the industrial world’s contribution to global warming as the latter. A simplistic opinion, but I only know what I read.

Xref – some discussion of paper (with links to more at SKS (Schmittner comment), Planet3.0 interview, Tamino’s Open Thread) here at Tamino’s (link)

Gavin,

You’re right. For the 2.35 ºC ECS run the area-weighted SAT cooling is 3.5 ºC. The value 3.3 ºC comes from the 2.22 ºC ECS run. This latter run is actually closest to our posterior median ECS of 2.25 ºC, so 3.3 ºC is probably closer to our “best” estimate of LGM cooling.

[Response: Thanks. – gavin]

I agree with the critiques of this paper, so far as I can clearly understand them, and had hoped there would be a response here when I came across it yesterday.

An additional critique that may be inherent in your comments, but missed by me (or be so much dreck), is that the glacial maximum seems an odd place to start from. Climate sensitivity is important for the range and rate of change more so than what the ultimate change is. It’s the part between the peaks and valleys in the record that is interesting. We know pretty well the long-period cycles that trigger the glacial/interglacial cycle, after all. We also know humans were pretty much only in Africa for most of the glacial cycles and were highly mobile during the most recent. There is literally almost nothing analogous to the current climate system and our response to it.

Climate sensitivity is always stated as a range, but we tend to want, with our human brains, to think of it as a number, say, 2.4C. We like averages and means because they give us a comfortable place to think from. But climate does not appear to be this simple. Earth System Sensitivity, as noted, is the key, but for me it’s not a “maybe” or a “perhaps”, but is definitive.

I think of it this way, the potential change is the high end while the minimum change is the low end, right? At any given point in time climate sensitivity is dependent on which, if any, tipping points have been breached. If we approach a tipping point without passing it, the swing in response will be a given amplitude from which it will recover toward the center. If a given tipping point is breached, a greater amplitude follows.

The risk assessment must be based on every tipping point being breached, so for all intents and purposes, climate sensitivity is unconstrained. The worst it can get is the sensitivity we must do our risk assessment for because it is, in this case, a societal/existential threat. What happens if we burn another trillion barrels of oil/oil equivalents and/or the methane in the permafrost and clathrates goes kablooey?

Most, if not all, of those posting here are well aware rate of change is perhaps more important than total change. Adapting to 2C over 1,000 years was probably easily done. Adjusting to it over 250 years, particularly with most of it coming in the latter 3/5 of the time period, is a different matter altogether. Adjusting to 3 – 6C over the same time frames is a joke.

Rapid change acts as a multiplier because events that can be mitigated at one time scale may not be at another. Yet, there is no rate of change factor in climate sensitivity, but perhaps should be so the references for public consumption are more easily digested.

As a non-scientist, I am constantly tracking in my head that which I can understand clearly and usefully, and what I see is a planet that is responding at a rate and extent greater than any but our most pessimistic models suggest. Ipso facto, climate sensitivity is at the high end, so discussions of anything else are so much number-crunching. Valuable, useful and needed number-crunching, but number-crunching all the same so long as the work continues to trail the observed.

What is also not commonly discussed as being part of the models is the effect of having diminished the ability of the ecosystem to respond to stress because we have altered the entire system. In previous eras some key change/tipping point would trigger others in a cascade, but each of those triggers was hit by a change that preceded it so the rate of change was slowed by the magnitude of change needed to cause the change. I don’t mean to imply this is a purely linear process, but we have created a preexisting condition where every element of the climate system is already sensitive to change due to already having been altered before the tipping point that would have triggered it arrives. ALL of them have already been diminished.

Forest ecosystems will decline faster because there are far fewer of them than would be the case from purely natural change. Oceans are already altered in terms of oxygen-depleted zones, pollution, depleted populations, erosion and eradication of coastal ecosystems, etc.

Etc, etc.

There is no analog for climate change as humans have triggered it, so our sensitivities are even less sure than the science suggests, even with Earth System Sensitivity since it also presumably doesn’t account for rate of change nor the preconditioning the human presence has resulted in.

The risks are far greater than ever before, imho, and any implication otherwise is a dangerous message.

reCaptcha offers: “style: srdinge” I ask, What is how a drunken sailor orders small, salty fish on his pizza?

Re: It would have been more appropriate to say something like “our estimate of the effect is less than many previous estimates”.

I can’t see how that re-wording would make any difference to the press coverage. Remember that the media responds to this very differently from how scientists respond. Journalists know that the vast majority of their readers will never read the original paper, and don’t care about what this study actually did and how it might compare to previous studies. Their readers just want a sound bite, along the lines of “can we stop worrying about it” or “should we worry about it more than we did?”. As “less than” tends towards the former, and as journalists have to sex up the story to win eyeballs, the result is inevitable. The further downmarket you go in the media industry, the more “sexing up” there will be.

One of the things we scientists and academics have to keep reminding ourselves is that many of the people outside the science community who write about this don’t regard it as their job to educate people. Their salaries depend on selling copy, which usually means telling people what they want to hear. In that situation, how likely is it that they will ever do a good job of placing this paper within its scientific context?

There may be a bias in the SST estimates used in this (and other LGM-sensitivity papers). Many of the SST estimates are derived from planktonic foraminifera community composition, calibrated against 10m water depth SST. However, much of the foram community lives at much greater depths. This discrepancy between the depths at which foraminifera live and the depth at which they are calibrated to SST could introduce a bias if the thermocline structure has changed (i.e. steeper or shallower temperature gradients over the top 100 or so metres). I’m currently working to determine how large this bias is (probably small) and in which direction.

This may not be the Hegerl & Russon Perspective linked above but it is a pretty good substitute.

I read the popular summaries of this as something of a good-news/bad-news story. If true, the good news is that global temperature appears modestly less sensitive to CO2 forcing than the IPCC mean sensitivity suggests. (Though this posting presents a clear description of the caveats.) The bad news is that habitability of the earth’s surface appears more sensitive to temperature change than had been previously thought. The hostile environment of the glacial maximum was produced by just ~3.5C cooling. That’s part and parcel of the low sensitivity estimate. Since I care about the habitability of the surface, not temperature per se, I don’t see the results as reassuring in the least.

How does this paper relate to the recent draft paper by Hansen et al on Energy Imbalance:

http://www.columbia.edu/~jeh1/mailings/2011/20110826_EnergyImbalancePaper.pdf

And to Hansen and Sato’s paper on Paleoclimate Implication

http://www.columbia.edu/~jeh1/mailings/2011/20110720_PaleoPaper.pdf

Climate or Earth System Sensitivity, however defined and at whatever geological period (see the discussion in the Energy Imbalance draft), is one thing, but polar amplification is another, it seems to me. Hansen and others now argue that the Eemian was probably less warm globally than previously thought (so lower sensitivity?), but polar amplification was therefore higher, and the risk of sea level rise as well.

What’s the merit in this argument, if any, and how does it relate to the climate sensitivity discussion?

Someone please tell me the relevance anymore of man’s continued contribution to atmospheric CO2 levels, when enough momentum has already been unleashed to begin the thaw of the permafrost region, which of course contains an immense self-feeding mechanism.

Are rational people still discussing the possibility of stopping or limiting the thaw?

for Walt Bennett

You are assuming the worst is unavoidable and that we can’t make it any worse — or better — by managing what we can manage.

There’s no science supporting that conclusion.

further for Walt Bennett:

http://www.sciencedirect.com/science/article/pii/S0012825211000687

Earth-Science Reviews

Volume 107, Issues 3-4, August 2011, Pages 423-442

doi:10.1016/j.earscirev.2011.04.006

Atmospheric methane from organic carbon mobilization in sedimentary basins — The sleeping giant?

Lennart van der Linde (apologies for comment length),

You’re right that “climate sensitivity” and “polar amplification” refer to two different things, though there is some overlap.

Climate sensitivity, in the context of this post, refers to a globally averaged quantity. Specifically, it refers to the ratio of the global temperature change to the radiative perturbation that causes it (and thus has units of degrees C per Watts per square meter, for example).

Polar amplification, on the other hand, relates to the fact that the high latitudes typically exhibit a larger temperature change in response to a given perturbation than do the low, equatorial latitudes (or for that matter, the global mean).

You could think of the polar regions as having a higher climate sensitivity than the low latitudes, but in the context of the Schmittner paper, they are only talking about a single globally-averaged number for sensitivity. Ideally, that would include the amplified polar regions (assuming you have good contribution of data from those areas).

As for the Hansen and Sato (2011) paper, they use a different methodology to diagnose sensitivity than the Schmittner paper, though both Hansen and the Schmittner paper are focusing on the same overall issue and like to use the LGM as a good target for tackling the problem.

In many of Hansen’s papers, he uses data for the global temperature change during a specific period (such as the LGM) compared to modern. He then uses what information is available to quantify (in Watts per square meter) what radiative terms drive that temperature change (for the LGM this is primarily increased surface albedo from more ice/snow cover, and also changes in greenhouse gases…the former is treated as a forcing, not a feedback; also, the orbital variations which technically drive the process are rather small in the global mean). Then, he takes the ratio of the temperature change to the total forcing and defines this as the sensitivity. This method tries to maximize using pure observations to find the temperature change and the forcing (you might need a model to constrain some of the forcings, but there’s a lot of uncertainty about how the surface and atmospheric albedo changed during glacial times…a lot of studies only look at dust and not other aerosols, there is a lot of uncertainty about vegetation change, etc).

Sometimes various factors like aerosols or vegetation change aren’t considered, and thus whatever effect they might have would just be lumped into the climate sensitivity value that emerges from this method.
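The ratio method described above is simple enough to sketch in a few lines of Python. The numbers here are illustrative round values chosen for the example, not figures quoted from Hansen's papers or from this thread: an LGM cooling of 5 C, a total forcing of 6.5 W/m², a hypothetical neglected forcing of 1 W/m², and the commonly quoted 3.7 W/m² for doubled CO2.

```python
# Illustrative sketch of the observational (ratio) estimate of climate sensitivity.
# All numeric inputs are assumed round numbers, not values from a specific paper.

F_2XCO2 = 3.7  # W/m^2, commonly quoted forcing for doubled CO2 (assumption)


def sensitivity_per_forcing(delta_t, total_forcing):
    """Return sensitivity in degrees C per (W/m^2)."""
    if total_forcing == 0:
        raise ValueError("total forcing must be non-zero")
    return delta_t / total_forcing


def sensitivity_per_doubling(delta_t, total_forcing, f_2x=F_2XCO2):
    """Convert the ratio into degrees C per doubling of CO2."""
    return sensitivity_per_forcing(delta_t, total_forcing) * f_2x


lgm_cooling = -5.0         # C, LGM minus modern (assumed)
counted_forcing = -6.5     # W/m^2, forcings that were quantified (assumed)
neglected_forcing = -1.0   # W/m^2, e.g. aerosols left out of the sum (hypothetical)

# Leaving a forcing out of the denominator inflates the diagnosed sensitivity.
with_omission = sensitivity_per_doubling(lgm_cooling, counted_forcing)
with_everything = sensitivity_per_doubling(
    lgm_cooling, counted_forcing + neglected_forcing
)
print(f"forcing omitted:  {with_omission:.2f} C per doubling")
print(f"forcing included: {with_everything:.2f} C per doubling")
```

The second call shows the "lumping" effect: any forcing left out of the denominator ends up folded into a larger apparent sensitivity.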

Hansen also extends this analysis further (see also his 2008 Target CO2 paper for a lot of the methodology). He takes the climate sensitivity value derived from the LGM, based on the above methodology (about 3 C per doubling of CO2), treats that as a constant, and then applies it to the whole 800,000 yr ice core record. In order to establish a time series of global radiative forcing over the whole record he needs to assume a particular relationship between sea level/temperature records and the extent of the ice sheets over the last 800,000 years, which in turn defines the albedo forcing. Since there is no time-series of global temperature change, he also needs to assume a particular relationship between Antarctica temperature change (or the deep-sea change) and the global mean, and that the relationship always holds (probably untrue). He gets a good fit to the calculated temperature reconstruction when he uses the fixed climate sensitivity multiplied by the time series of radiative forcing.

In contrast to this observational approach, Schmittner (as well as a number of other papers, like reference #4 in this post) take advantage of both models and observations, and try to use the observations to constrain which feedback parameters in the model are consistent (e.g., an overly sensitive model with the same forcings as another model will produce a larger temperature change than observations allow). There are different ways to weight the ensemble members, such as by assuming a Gaussian distribution in the paleo-data, or by bracketing the “acceptance limit” of an ensemble member at the 1σ limit of the paleo-data. Note that the observational approach needs to assume a constant climate sensitivity between different states, whereas perturbed physics ensembles don’t (though you still need to understand what feedback processes are important between different climate states to have confidence in the results).
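As a rough sketch of the two weighting schemes just mentioned, the Python below weights a toy ensemble either by a Gaussian likelihood or by a hard 1σ acceptance limit. The ensemble values, the "observed" cooling and its uncertainty are all hypothetical, and a single global-mean number stands in for what would really be a spatial field of proxy data.

```python
import math


def gaussian_weights(model_values, observed, sigma):
    """Weight each ensemble member by a Gaussian likelihood of its misfit."""
    raw = [math.exp(-0.5 * ((m - observed) / sigma) ** 2) for m in model_values]
    total = sum(raw)
    return [w / total for w in raw]


def acceptance_weights(model_values, observed, sigma):
    """Accept members within 1 sigma of the data, reject the rest, then normalize."""
    raw = [1.0 if abs(m - observed) <= sigma else 0.0 for m in model_values]
    total = sum(raw)
    if total == 0:
        raise ValueError("no ensemble member falls within the acceptance limit")
    return [w / total for w in raw]


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights))


# Hypothetical ensemble: (sensitivity in C per doubling, simulated LGM cooling in C)
ensemble = [(1.0, -1.5), (2.0, -2.8), (3.0, -3.9), (4.0, -5.3), (5.0, -6.5)]
observed_cooling = -3.0  # hypothetical proxy-based estimate
sigma = 1.0              # hypothetical 1-sigma uncertainty of that estimate

sens = [s for s, _ in ensemble]
cool = [c for _, c in ensemble]

w_gauss = gaussian_weights(cool, observed_cooling, sigma)
w_accept = acceptance_weights(cool, observed_cooling, sigma)

mean_gauss = weighted_mean(sens, w_gauss)
mean_accept = weighted_mean(sens, w_accept)
print(f"Gaussian-weighted mean sensitivity:   {mean_gauss:.2f}")
print(f"Acceptance-limit mean sensitivity:    {mean_accept:.2f}")
```

The Gaussian scheme keeps every member but down-weights poor fits smoothly; the acceptance limit discards members outright, so its answer can jump when a member sits close to the 1σ boundary.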

Hope that helps

> planktonic foraminifera

Lots out there. Calibration is always an issue — the BEST folks will have to discuss these questions when looking at sea temps.

A high-school science exercise:

http://www.ucmp.berkeley.edu/fosrec/Olson3.html

“… the deepest dwelling forms represent the true water-depth…. foraminifera will be found there which preferred to live in that environment as well as foraminifera which were brought in from shallower water by downslope processes (i.e., the Amazon Fan moves sediment, including foraminifera, in a downslope direction off of Brazil).”

Core drilling reports:

Deep Sea Drilling Project Initial Reports Volume 47 Part 1

http://www.deepseadrilling.org/47_1/volume/dsdp47pt1_36.pdf

GF Lutze – Cited by 23 – Related articles

… both benthic and planktonic foraminifers follow the surface-water oxygen-isotope curve, which served to calibrate the benthic foraminiferal record …

Correlations:

On the fidelity of shell-derived δ18Oseawater estimates

http://www.ldeo.columbia.edu/~peter/site/…/Arbuszewski.etal.2010.pdf

My interest is not in a “best fit” point estimate, but in their characterization of the distribution of possible fits. I had a quick look at their paper and I worry about the possibility of statistical misspecification they raise (page 3 of SCIENCE EXPRESS article). In particular, although they have considered spatial dependencies in their covariance (Supporting Online Material or “SOM”, section 6.3), they do insist upon a Gaussian shape. There’s no reason why that should be the case. Also troubling is their report in the SOM that the marginal distribution for ECS2xC is strongly multimodal (see their Figure S13), for which they have no real explanation, although they offer possibilities.

I’m willing to buy their “best fit” point estimate. As a result of this look, I’m far more skeptical of the accuracy of their tight bound on its range. It’ll come out in the wash. I’m sure there’ll be many remarks on this paper and its methods.

More:

http://www.nature.com/nature/journal/v470/n7333/full/nature09751.html

Corrected online 14 April 2011

Erratum (April, 2011)

“… results, based on TEX86 sea surface temperature (SST) proxy evidence from a marine sediment core …”

and (for calibration, this sort of work will be useful for ‘et al. and Robert Rohde’ for sea surface reconstructions)

doi:10.1016/j.palaeo.2011.08.014

The effect of sea surface properties on shell morphology and size of the planktonic foraminifer Neogloboquadrina pachyderma in the North Atlantic

Tobias Moller, Hartmut Schulz, Michal Kucera

Received 14 February 2011; revised 27 June 2011; Accepted 8 July 2011. Available online 3 September 2011.

Abstract

“The variability in size and shape of shells of the polar planktonic foraminifer Neogloboquadrina pachyderma have been quantified in 33 recent surface sediment samples …. shell size showed a strong correlation with sea surface temperature. …”

18 Jan Galkowski: Roger that. I think you are too young to remember when the wheels fell off of certain cars. [1968] Other things fell off of the same cars. The statistician at that company assumed that all distributions were Gaussian. I was taking a statistics course from the Army Management Engineering Training Agency at the time.

The shape of the distribution of climate sensitivities is definitely a critical issue and it has been discussed recently at RealClimate.

https://www.realclimate.org/index.php/archives/2011/10/unforced-variations-nov-2011/comment-page-7/#comments

Is the climate sensitivities distribution possibly 2 humped? The second hump being the methane hydrate gun? The fat right tail is a big question. The distributions I have seen recently look like a lot needs to be researched. No criticism of the science that has been done.

Jan Galkowski (#18),

Re: Gaussianity, see Figure S10 of the SOM. The residual errors around the best fit (posterior mean) appear somewhat non-Gaussian, but this appears to be due to the land and ocean data not centering on the same ECS value. If you separate them into land errors and ocean errors (as our analysis does), there doesn’t appear to be strong evidence of non-Gaussianity. Nevertheless, we also tested one non-Gaussian assumption, using a Student-t distribution with heavier tails (figures S14 and S15). It doesn’t change the result much.
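To illustrate why a heavier-tailed error model is a natural robustness check, here is a small Python sketch comparing how a Gaussian and a Student-t likelihood penalize the same residuals. The residual values, the unit scale and the choice of 4 degrees of freedom are all hypothetical; they are not the settings used in the paper's SOM.

```python
import math


def gaussian_logpdf(x, sigma=1.0):
    """Log density of a zero-mean Gaussian with standard deviation sigma."""
    return -0.5 * (x / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def student_t_logpdf(x, nu, scale=1.0):
    """Log density of a zero-mean Student-t with nu degrees of freedom."""
    z = x / scale
    log_norm = (
        math.lgamma((nu + 1) / 2)
        - math.lgamma(nu / 2)
        - 0.5 * math.log(nu * math.pi)
        - math.log(scale)
    )
    return log_norm - (nu + 1) / 2 * math.log1p(z * z / nu)


# Hypothetical data-model residuals in units of the assumed error scale;
# the last value plays the role of an outlier.
residuals = [0.3, -0.8, 1.1, -0.2, 4.0]
NU = 4  # hypothetical degrees of freedom

for r in residuals:
    g = gaussian_logpdf(r)
    t = student_t_logpdf(r, NU)
    print(f"residual {r:5.1f}: Gaussian logp = {g:7.3f}, Student-t logp = {t:7.3f}")
```

With these made-up numbers the two likelihoods are similar near zero, while the outlier is penalized far more heavily under the Gaussian. If a posterior barely moves when switching between the two, outliers are probably not driving the result.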

If there is statistical misspecification, I think it’s probably in regional biases or nonstationary covariances, not in non-Gaussianity. Either one could make the uncertainty interval too narrow (or too wide, I suppose, but it usually winds up the other way around).

Re: multimodality, we haven’t proven a cause. But I suspect it is mostly an artifact of interpolation (though maybe a bit physical), as we discuss. I don’t think it has much to do with the width of our uncertainty interval.

Edward Greisch (#20):

I think you may be conflating two separate concepts. Jan is talking about non-Gaussian errors in the data-model residuals. You appear to be talking about non-Gaussian distributions of climate sensitivity.

Thank you for the article. One thing I picked up on is your closing paragraph indicating how much time we have. You’ve estimated 24 years to eat up the emissions budget for reaching 2 degree rise based on current IPCC estimates, or 35 years if the Schmittner estimate were closer to the mark – assuming the current rate of growth of emissions (3%/year).

If both are too low then we have even less time to act.

Numbers like this should bring it home to more people just how urgent it is to reduce emissions – (to those over 40 who probably have a different perspective on time than many of those under 30).

When I read the Schmittner et al. paper, I was taken aback by the fact that the SOM contained important information which I might expect to see in the main paper. There are two rather different issues mixed together. First is the temperature re-construction for the LGM and the comparison between the LGM and present temperatures. Second is the use of a computer model to compare the change between the LGM and the present, then extrapolate this comparison to assess the effect of 2x pre-industrial CO2 relative to our recent climate.

The first finding, the lessened change in temperature between LGM and the present, is an important issue in itself and suggests to me that the climate may be more sensitive to a perturbation than previously suspected. Also, the first effort shows that during the LGM, some areas may have been warmer than present, such as over the Nordic Seas and northwestern Pacific. We now see a world in which Siberia is considered to be the coldest area during NH winters, yet, Siberia appears to have been free of glaciers during the LGM from what little I’ve seen. Given the various sources of uncertainty mentioned in other posts, the temperature reconstruction is the first question that needs to be studied.

The second issue is the modeling effort, which uses the UVic model of “intermediate complexity”. I think this work may be seriously flawed for several reasons, many of which are acknowledged in the SOM, but not the main paper. The simple energy balance model of the atmosphere does not include a term for the fourth power of temperature. Given that all energy leaving the TOA is IR, which is a function of T^4 at some effective elevation in the atmosphere, surely this should be included. The original polynomial model was published by Thompson and Warren in 1982, at a time when GCM development was still primitive. I think that much has been learned since then. The ocean model does not include any flow between the North Pacific and the Arctic Ocean via the Bering Strait, which would be appropriate during the LGM when sea level was lower, but not for today’s climate and into the expected warmer future. The atmospheric model uses prescribed wind stresses on the ocean and sea-ice, with variations for the LGM using anomalies calculated from a GCM, which produces a stronger AMOC than that found in the GCM. Clouds are not calculated, but set by climatology. Finally, the authors excluded cases where the AMOC collapsed during LGM, noting that these cases would imply a larger climate sensitivity than that presented in their report (SOM, section 5 & 7.5).

These questions would not be so serious, except that the paper is to appear in SCIENCE and thus will be taken as evidence against the prospect of dangerous climate change. As noted above, there are already commentaries claiming this report shows little need for concern over future climate changes.

[Response: I agree that there are serious problems with the representation of atmospheric feedbacks in the model, but the lack of a fourth-power dependence in infrared emission vs T is not the key one among them. Over ranges of temperature of a few degrees, you can linearize sigma*T^4 about the base temperature T0 with little loss of accuracy. Moreover, for temperatures similar to the present global mean, water vapor feedback actually cancels out some of the positive curvature from the fourth-power law (see Chapter 4 of my book, Principles of Planetary Climate). So it’s not a manifestly fatal flaw to have a linearized emission representation. It is true that the linearization does substantially misrepresent some aspects of the north-south gradient in temperature, given that over the temperature difference between tropics and Antarctic winter the nonlinearity becomes significant. What is more severe, in my view, is that the energy balance model cannot represent the geographic distribution of lapse rate, relative humidity or clouds. In the interview over on Planet 3, Nathan Urban clearly doesn’t understand the full limitations of the model even though he is one of the authors of the paper. It’s more than just failing to represent the albedo effects of clouds: the model doesn’t represent the geographical variation of cloud infrared effects either, or the way these change with climate. Given that clouds are known to be the primary source of uncertainty in climate sensitivity, how much confidence can you place in a study based on a model that doesn’t even attempt to simulate clouds? –raypierre]
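The linearization point in the response above can be checked numerically. The Python sketch below compares the exact sigma*T^4 flux change with its first-order Taylor expansion about a base temperature. The 288 K base and the 5 K and 50 K excursions are assumed values chosen for illustration, and the sketch covers only the blackbody term, not the water vapor effect on curvature that the response also mentions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emission_exact(t):
    """Blackbody emission sigma*T^4 in W/m^2 for temperature t in kelvin."""
    return SIGMA * t ** 4


def emission_linear(t, t0):
    """First-order Taylor expansion of sigma*T^4 about t0."""
    return SIGMA * t0 ** 4 + 4.0 * SIGMA * t0 ** 3 * (t - t0)


def relative_error_in_change(t, t0):
    """Fractional error of the linearized flux change relative to the exact change."""
    exact_change = emission_exact(t) - emission_exact(t0)
    linear_change = emission_linear(t, t0) - emission_exact(t0)
    return (linear_change - exact_change) / exact_change


T0 = 288.0  # K, assumed base temperature near the present global mean

small = relative_error_in_change(T0 + 5.0, T0)   # a few degrees, LGM-like scale
large = relative_error_in_change(T0 - 50.0, T0)  # tropics-to-polar-winter scale

print(f"error in flux change for +5 K:  {100 * small:.1f}%")
print(f"error in flux change for -50 K: {100 * large:.1f}%")
```

Under these assumptions the error in the flux change is a few percent for a 5 K excursion but tens of percent for a 50 K one, which matches the distinction drawn between global-mean changes and the north-south gradient.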

This discussion reminds me of philosophers of the Middle Ages debating how many angels could stand on the head of a pin.

Another analogy might be a group of naval ship designers on the Titanic discussing, while the ship slowly fills with water, technical detail about the hull steel plates size, the number of water tight compartments, the angle of the iceberg strike, etc.

Gentlemen – the last ten years are the hottest on historical record. We are now at nearly 400 parts per million CO2 in our atmosphere. Carbon in our atmosphere increased by an estimated huge 6% just last year, crops were wiped out all across the US south by what many see as a clear global warming event. It goes on and on.

Don’t we have more urgent business than to measure the rapidity and other obscure scientific details of how our Titanic is doing and the number of seconds or hours it will take before it sinks?

Talk about Nero fiddling while Rome burns. We are fiddling with scientific minutiae while the world burns.

“Be that as it may, all these studies, despite the large variety in data used, model structure and approach, have one thing in common: without the role of CO2 as a greenhouse gas, i.e. the cooling effect of the lower glacial CO2 concentration, the ice age climate cannot be explained…”

Indeed, the ice age cycles cannot be explained without lower glacial CO2 concentration if a positive feedback is involved.

I guess all these studies have one thing in common (positive feedback), so have all reached similar conclusions (CO2 is a major factor).

I’m really surprised at the result, despite the fact you have reached similar conclusions without really changing anything that would significantly change the outcome.

Informative & critical review; thank you.

Regarding the response by raypierre: I agree about the problems with the simple energy balance model and its lack of spatial representation, but it’s tough to fault the authors for the lack of cloud detail, since the science is not up to the task of solving that problem (and doing so would be outside the scope of the paper; very few paleoclimate papers that tackle the sensitivity issue do much with clouds).

The paper states that “Non-linear cloud feedbacks in different complex models make the relation between LGM and 2×CO2 derived climate sensitivity more ambiguous than apparent in our simplified model ensemble” so they do recognize this (it is of course hand-waving, but fair hand-waving). Absent understanding of cloud feedback processes, the best you can really do is mesh it into the definition of the emergent climate sensitivity, but I think probing (at least some of) the uncertainties in effects like this is one of the whole points of these ensemble-based studies.

[Response: Chris, you are way off the mark here. The lack of certainty in our knowledge of cloud behavior is no excuse for leaving it out of a model entirely. GCMs vary in their cloud effects, and that’s one of the things one should be trying to test against the LGM. But they do at least have certain basic physical principles in their cloud representations: clouds over ice have less albedo effect than clouds over water, you don’t get high clouds in regions of subsidence, stable boundary layers lead to marine stratus, etc. These are all things that are crucial in determining the relation between LGM behavior of a model and 2xCO2 behavior. To make such strong claims about climate sensitivity based on a model that represents none of this verges on irresponsibility. Yes, I fault them. –raypierre]

Eric Swanson (#23),

Science has severe length constraints on what can go into the main body of the paper, hence the SOM. And no, we didn’t exclude AMOC collapsed cases. The main result includes all the data, but in the SOM we tested the exclusion of data from the AMOC collapsed region. We also tested the sensitivity to stronger or weaker wind stresses (though with the same spatial pattern).

raypierre (#23),

I don’t really feel it’s necessary here to use phrases like “clearly doesn’t understand”. I am fully aware that UVic doesn’t explicitly resolve the longwave physics of clouds (nor the shortwave physics), and nothing in my interview states otherwise. Perhaps you overlooked “e.g.”.

[Response: No, Nathan, I didn’t overlook the e.g. Your remark in the interview clearly implied that it was only the shortwave effect of the clouds that was excluded. If you meant to say that the model flat out doesn’t compute clouds at all, why not come right out and say so? –raypierre]

An interesting paper, and thanks for the review of it. I’m still finding it quite hard to see where the cause for optimism comes from given the results presented. Notably, the last line of the abstract: ” [with caveats] … these results imply lower probability of imminent extreme climatic change than previously thought.”

Media articles jumped on this I think, not least the BBC, yet I don’t see the justification for this statement. The paper seems to support a climate in which you get more bang for your buck, or more “change” (however you quantify it) for every degree Celsius warming.

We may not know exactly how many degrees Celsius we’ll get for each doubling of atmospheric CO2, but we do know that the world was a helluva lot different at the LGM compared to now. Schmittner et al hints that a comparable change will not take too many degrees Celsius, as the temperature difference to LGM is smaller than in other estimates. At our present rate of warming, this smaller change will come about quicker than previously suggested, not slower. Thus we’ll effect the equivalent of an LGM-Holocene change really quite fast. Climate may be less sensitive to CO2 change according to the paper, but it is more sensitive to each degree Celsius temperature change – this, to me, is saying that we should be more worried, not less worried based on these results.

If I’m off the mark here, that’s good, but can anyone clarify this point?

Your understanding is correct skywatcher. No reason for optimism.

While not a climate scientist, I am an active experimental atomic physicist, with decades of experience in data analysis and uncertainty analysis. I am disturbed by the quoted uncertainty in the Schmittner paper, in light of the significant discrepancies between the sensitivity extracted from the ocean and the land data (their figure 3). Their quoted 95% result of 2.8C is in contradiction with at least half of their sensitivity range as measured from the land data. The discrepancy between the land and ocean results clearly points to a serious problem – whether in the data or model. But given that, I do not see how one can make any combined statistical prediction – the discrepancies point to a clearly systematic problem.

I can point to numerous cases in precision measurements in my field where improved results are many sigma different than previous results – precisely because systematic effects were underestimated or not even known. But here we have a clear demonstration of a dramatic systematic effect, and yet it is treated as statistical in nature. I would much prefer the results to be reported as a sensitivity of 2.4K extracted from ocean data and 3.4K as extracted from land data.

I can certainly tell you that it would not be acceptable to report such a narrow combined uncertainty in a physics measurement (where uncertainties are much more under control) if the result of two measurements showed such discrepancies.

raypierre (#28),

My remark in the interview was not intended to imply any such thing. I’m sorry if you read it that way. I gave one example of longwave feedbacks (water vapor) and one example of shortwave feedbacks (clouds). Those were not intended to be exclusive or exhaustive examples, hence the “e.g.” I know we both know what an energy-balance atmosphere model is. If you want to assume otherwise, I can’t really help that, and I don’t see any point arguing about it. However, I do appreciate your remarks concerning the importance of resolving cloud physics.

[Response: Here is the exact quote from the interview: “One limitation of our study is that we assume that the physical feedbacks affecting climate sensitivity occur through changes in outgoing infrared radiation (e.g., through the greenhouse effect of water vapor). In reality, feedbacks affecting reflected visible light are also important (e.g., cloud behavior). Our study did not account for these feedbacks explicitly. Also, as I mentioned earlier, our simplified atmospheric model does not represent these feedbacks in a fully physical manner.” I’ll leave it for people to judge for themselves what this is supposed to mean. I can’t make much sense myself of what you are trying to say about water vapor feedback here. But as you say, there’s no point in arguing about it. The important thing is that we all understand that with regard to clouds it’s not just that they’re not represented “in a fully physical manner.” How about, like, not in any physical manner at all? –raypierre]

Isotopious @25 — Please first study Ray Pierrehumbert’s “Principles of Planetary Climate” after reading “The Discovery of Global Warming” by Spencer Weart:

http://www.aip.org/history/climate/index.html

The effects of atmospheric CO2 were completely worked out by 1979, at the latest.

[Response: And for a historical perspective don’t forget The Warming Papers, by Dave Archer and myself. –raypierre]

Steve R @31 — That the climate sensitivity of oceans and land surfaces are quite different comes as no surprise.

[Response: Actually, the issue regarding land vs. ocean in the Schmittner et al paper is more perplexing than just that. Warming over land is amplified relative to global mean by a model-dependent amount that is often around 50%. The Schmittner et al result does not simply say that land is more sensitive than ocean. Rather, their analysis shows that if you compare the LGM land cooling with the model land cooling, then the model that fits the land best has much higher GLOBAL climate sensitivity than you get for best fit if you use ocean data. As stated in the paper, that could reflect an error in the land temperature reconstruction (too cold), an error in the ocean reconstruction (not cold enough) or an error in the model’s land/ocean ratio. It has been argued that the land amplification is associated with lapse rate changes (not represented in the UVic model), and it is certain that drying of the land can play a role (not reliable in the UVic model since diffusing water vapor gives you a crummy hydrological cycle, especially over land). To his credit, Nathan Urban flagged the land-ocean mismatch as one of the caveats in the study indicating all is not right. That makes it particularly egregious that Pat Michaels erased the land curve from the sensitivity histogram in his World Climate Report piece (see Nathan’s interview at Planet 3). I just wish the paper and especially the press release had been as up front about the study’s shortcomings as Nathan’s interview. –raypierre]

Patrick “halfgraph” Michaels strikes again.

That aside, what would be a good model to use in this study?

[Response: The right way to do this would be to use a perturbed physics GCM ensemble akin to ClimatePrediction.net. Even better to do it with perturbed physics ensembles from several different models. It’s clear this is very computationally intensive, and one can’t fault the authors for beginning with a study that is less ambitious. Still, in my view it would have been far better if the authors had presented their study as a “proof of concept” with some intriguing speculative results, rather than making the exaggerated (and insufficiently caveated) claims made in the paper, and more especially the press release. –raypierre]

I don’t suppose that will be coming out in e-book form? Too many diagrams, equations, which don’t render well in e-readers? I’m just a bit reluctant to spend nearly $90 CND on another book when I still have another climate book (Principles of Planetary Climate :) I’m struggling through in my spare time.

[Response: I don’t think the publishers have immediate plans for an e-book edition. It wouldn’t work on a Kindle or Nook, though it would do fine on an iPad, but I don’t think our publishers are set up for that yet. But the main thing is that the price for an ebook wouldn’t be significantly less, since almost all of the price is due to the extortionate copyright fees the publisher had to pay to the journals for permission to reprint. The only thing that would bring that down would be selling LOTS and LOTS of copies. Hope that happens eventually :) . Meanwhile the best bet would be to persuade your local public library to buy it, or maybe form a little reading group and chip in $10 each then share it. I really do regret the price. –raypierre]

[raypierre response @ 33] — Right, also The Warming Papers.

Thanks also for the additional comment regarding the Schmittner et al paper [which I found certainly helpful]. My lack of surprise regarding solely the ‘actual’ difference in the ocean and land sensitivities is motivated by this LGM vegetation map:

http://www.ncdc.noaa.gov/paleo/pubs/ray2001/ray_adams_2001.pdf

which certainly suggests it was quite dry during LGM. My amateur understanding is that this should affect the averaged land temperature more than the averaged ocean temperature.

1998:

“… the average turnover time of phytoplankton carbon in the ocean is on the order of a week or less, total and export production are extremely sensitive to external forcing and consequently are seldom in steady state. … oceanic biota responded to and affected natural climatic variability in the geological past, and will respond to anthropogenically influenced changes in coming decades.”

Science Volume: 281, Issue: 5374, Pages: 200-206, DOI: 10.1126/science.281.5374.200

2009:

“Recently, unprecedented time-series studies conducted over the past two decades in the North Pacific and the North Atlantic at >4,000-m depth have revealed unexpectedly large changes in deep-ocean ecosystems significantly correlated to climate-driven changes in the surface ocean that can impact the global carbon cycle. Climate-driven variation affects oceanic communities from surface waters to the much-overlooked deep sea ….”

http://www.pnas.org/content/106/46/19211.abstract

“Low-oxygen and low-pH events are an increasing concern and threat in the Eastern Pacific coastal waters, and can be lethal for benthic and demersal organisms on the continental shelf. The normal seasonal cycle includes uplifting of isopycnals during upwelling in spring, which brings low-oxygen and low-pH water onto the shelf. Five years of continuous observations of subsurface dissolved oxygen off Southern California, reveal large additional oxygen deficiencies relative to the seasonal cycle during the latest La Niña event…. the observed oxygen changes are 2–3 times larger …. the additional oxygen decrease beyond that is strongly correlated with decreased subsurface primary production and strengthened poleward flows by the California Undercurrent. The combined actions of these three processes created a La Niña-caused oxygen decrease as large and as long as the normal seasonal minimum during upwelling period in spring, but later in the year. With a different timing of a La Niña, the seasonal oxygen minimum and the La Niña anomaly could overlap to potentially create hypoxic events of previously not observed magnitudes ….”

Nam S., Kim H.-J., & Send U., 2011. Amplification of hypoxic and acidic events by La Niña conditions on the continental shelf off California. Geophysical Research Letters 38: L22602.

——-

Rate of change, rate of change, rate of change

David Benson,

Actually the issue of different sensitivity between land and ocean is not immediately obvious, and has been the subject of various papers in recent years. The larger thermal inertia of the ocean is important, but the higher sensitivity over land than in the ocean is also seen in equilibrium simulations when the ocean has had time to “catch up,” so that argument doesn’t hold as equilibrium is approached. As raypierre mentioned, the amplification of land:ocean is about 1.5 (see Sutton et al., 2007, GRL). There are some various proposed mechanisms to explain this that involve the surface energy balance (e.g., less coupling between the ground temperature and lower air temperature over land because of less potential for evaporation), and also lapse rate differences over ocean and land (see Joshi et al 2008, Climate Dynamics), as well as vegetation or cloud changes.

In any case, there’s been a surprisingly large number of people that have (incorrectly) interpreted the figure in Dr. Urban’s interview as just meaning a higher land sensitivity than ocean sensitivity. You can certainly define land vs. ocean sensitivity meaningfully, but then you can also talk about polar vs tropical sensitivity too (which probably has a much higher ratio than land:ocean), or whatever else you feel like calculating. But the Schmittner paper is only focused on a global climate sensitivity, and that’s what they calculate and report. It’s important to understand that the method used in the paper involves using observations to constrain which model versions (of 47 ensemble members that range from a sensitivity of 0.3 to 8.3 K/2xCO2) are compatible with the obs. The different sensitivities are made possible by tweaking a parameter that relates temperature to OLR (a weaker slope of OLR vs. T implies a higher sensitivity).
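The link between the OLR slope and sensitivity can be sketched with a zero-dimensional toy model. Assume OLR = A + B*T, so that a forcing F is balanced at equilibrium by a warming of F/B. This is a textbook simplification and not the UVic parameterization itself; the 3.7 W/m² doubling forcing and the function names are assumptions made for the example.

```python
F_2XCO2 = 3.7  # W/m^2, commonly quoted forcing for doubled CO2 (assumption)


def sensitivity_from_olr_slope(b, f_2x=F_2XCO2):
    """Equilibrium warming per CO2 doubling for OLR = A + b*T (b in W/m^2/K)."""
    if b <= 0:
        raise ValueError("OLR slope must be positive for a stable climate")
    return f_2x / b


def olr_slope_for_sensitivity(ecs, f_2x=F_2XCO2):
    """Inverse relation: the OLR slope that yields a given sensitivity."""
    if ecs <= 0:
        raise ValueError("sensitivity must be positive")
    return f_2x / ecs


# The ensemble range quoted in the comment above: 0.3 to 8.3 K per doubling.
for ecs in (0.3, 2.3, 3.0, 8.3):
    b = olr_slope_for_sensitivity(ecs)
    print(f"ECS = {ecs:3.1f} K  ->  OLR slope B = {b:5.2f} W/m^2/K")
```

In this toy picture the low-sensitivity end of the ensemble corresponds to a steep OLR-temperature slope and the high-sensitivity end to a shallow one. This is the sense in which a single tuned parameter can span the whole range.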

Thus, the interpretation of the figure is that the ocean SST data favor a lower global climate sensitivity value than the land SAT data, which is why people suspect the results are not robust (and probably that the temperature anomaly of the LGM-modern is too low).

Quoting the two sets of median values given above, if a 55% increase in CO2 from LGM to pre-industrial caused a 3.3-5.8C warming, how does one calculate a 2.3-3C doubling sensitivity?
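One way to frame the arithmetic behind this question: CO2 forcing is roughly logarithmic in concentration, and CO2 was only one of the LGM forcings (ice-sheet albedo being the other large term discussed earlier in the thread). The Python sketch below uses the widely used simplified expression 5.35*ln(C/C0) W/m² for CO2 forcing. The 4.5 W/m² of non-CO2 forcing is a hypothetical placeholder, not a value from the paper.

```python
import math

CO2_COEFF = 5.35  # W/m^2, coefficient of the simplified logarithmic forcing formula


def co2_forcing(ratio, coeff=CO2_COEFF):
    """Radiative forcing in W/m^2 for a CO2 concentration ratio C/C0."""
    if ratio <= 0:
        raise ValueError("concentration ratio must be positive")
    return coeff * math.log(ratio)


def implied_doubling_sensitivity(delta_t, co2_ratio, other_forcing):
    """Warming per CO2 doubling implied by delta_t under CO2 plus other forcings."""
    total = co2_forcing(co2_ratio) + other_forcing
    return delta_t / total * co2_forcing(2.0)


LGM_TO_PREINDUSTRIAL = 1.55  # the 55% CO2 increase quoted in the comment
OTHER_FORCING = 4.5          # W/m^2, ice sheets and other terms (hypothetical)

fraction_of_doubling = co2_forcing(LGM_TO_PREINDUSTRIAL) / co2_forcing(2.0)
print(f"A 55% increase is {fraction_of_doubling:.2f} of a doubling in forcing terms")

for delta_t in (3.3, 5.8):
    co2_only = implied_doubling_sensitivity(delta_t, LGM_TO_PREINDUSTRIAL, 0.0)
    with_other = implied_doubling_sensitivity(
        delta_t, LGM_TO_PREINDUSTRIAL, OTHER_FORCING
    )
    print(f"dT = {delta_t} C: CO2 alone -> {co2_only:.1f} C, "
          f"with other forcings -> {with_other:.1f} C per doubling")
```

If CO2 is treated as the only forcing, the implied per-doubling figure comes out large. Once the temperature change is shared among all the forcings, it drops substantially. The exact result depends on the assumed non-CO2 term.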

We’re told that in the depths of an ice age orbital changes provide a small forcing which warms the planet a tad, causing natural systems to release a small amount of CO2. This causes a warming/CO2 feedback loop and we end up perhaps 5C warmer in an interglacial. Now, isn’t that entire 5C the natural response to the small orbital forcing? Assuming the current anthropogenic CO2 forcing is larger than orbital forcings, shouldn’t we expect more than 5C warming as an ultimate result? It seems that current sensitivity estimates ignore feedback CO2 for the modern era. This might work in the short term, as feedback CO2 takes a long time to show up (the 400-800 year lag problem), but ultimately, isn’t this wrong? Instead, isn’t the scenario: we inject CO2 into the atmosphere, causing a spike. This forcing CO2 gets spread around the planetary system over hundreds of years, which mitigates the increase in the atmosphere. Meanwhile, over thousands of years feedback and re-feedback CO2 enters the atmosphere, and we’re at a supercharged version of emerging from an ice age. However, the orbital signal will still be pointed at “cooling”, so perhaps that will save the day.

Thanks in advance for clearing up my confusion!

Very informative post and a really good discussion thread. Thanks to all, and especially to Nathan Urban for joining in.

[Response: I, too appreciate Nathan having joined us, as well as Andreas Schmittner (see comment below). –raypierre]

Thank you for the article. One thing I picked up on is your closing paragraph indicating how much time we have. You’ve estimated 24 years to eat up the emissions budget for reaching 2 degree rise based on current IPCC estimates, or 35 years if the Schmittner estimate were closer to the mark – assuming the current rate of growth of emissions (3%/year).

If both are too low then we have even less time to act.

Numbers like this should bring it home to more people just how urgent it is to reduce emissions – (to those over 40 who probably have a different perspective on time than many of those under 30).

Comment by Sou — 28 Nov 2011 @ 7:47 PM
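The "years left" figures quoted in the comment above depend on a remaining emissions budget and a current emission rate, neither of which is given in this thread. As a rough sketch of the kind of calculation involved, assuming emissions grow continuously at a fixed fractional rate, the time to use up a budget B starting from annual emissions E0 is T = ln(1 + g B / E0) / g. The function name and the example inputs are hypothetical and are not the values behind the 24-year or 35-year estimates.

```python
import math

def years_to_exhaust(budget, annual_emissions, growth_rate):
    """Years until cumulative emissions reach `budget`, when emissions start
    at `annual_emissions` per year and grow continuously at `growth_rate`
    (e.g. 0.03 for 3%/year). Budget and emissions must share the same units."""
    if budget < 0 or annual_emissions <= 0 or growth_rate < 0:
        raise ValueError("budget and growth_rate must be >= 0, emissions > 0")
    if growth_rate == 0:
        return budget / annual_emissions
    return math.log(1.0 + growth_rate * budget / annual_emissions) / growth_rate

# ASSUMPTION: illustrative inputs only (arbitrary units).
flat = years_to_exhaust(budget=300.0, annual_emissions=10.0, growth_rate=0.0)
growing = years_to_exhaust(budget=300.0, annual_emissions=10.0, growth_rate=0.03)
print(f"constant emissions: {flat:.1f} years, 3%/year growth: {growing:.1f} years")
```

Under these assumptions a larger budget (as implied by a lower sensitivity) buys proportionally less extra time than it would at constant emissions, because the growth term sits inside the logarithm.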

This is why I have encouraged everyone – literally – to take a true systems approach to these issues. Climate divorced from energy divorced from how long it takes to cycle through infrastructure minus embedded energy of infrastructure minus EROEI…. and on and on…. is meaningless.

The IEA’s annual World Energy Outlook (WEO) stressed that by 2017 we will have built the infrastructure that will carry us to 450 ppm CO2.

And some are expecting an ice-free Arctic by 2016.

And others believe clathrates of whatever kind are already accelerating in their melt rates (which, paradoxically, may show up better in atmospheric CO2 than methane since a recent study said 50% of methane is converted to CO2 via methanogenesis, perhaps helping with the accounting re: last year’s massive increase)…

#33

David Benson. Thanks for the advice. A positive feedback works well for some of the ice age climate, just not all of it (specifically the ‘cycle’ part). I understand the boom part of it, just not the bust. What is the bust, or switch, or tipping point that turns off, or (dare I say it) ‘negates’ the positive feedback?

A made up example…..

Let’s say there is an initial change of 1 deg C.

Which causes a change in CO2 giving 0.75 deg C,

Which both cause a change in water vapour giving a total of 6 deg C.

This is now what you call a changed ‘state’. It’s completely different from the initial conditions.

In fact, now that the positive feedback has been ‘kick started’, the initial mechanism which began the whole positive feedback is no longer of prime importance. Taking it away will not reverse the effect of the positive feedback. Sure, there will be a change if you take away the initial forcing, but it will be relative to the new state of 6 deg C.

It will still be 5 deg C. And sure, I know what others think. Take away the forcing and the water vapour will follow. And to an extent, that will happen. But that’s the problem with a positive feedback loop that is dependent on temperature change.

It is the temperature that the system is dependent on. Once the state has changed, that’s it, it’s a new state. The feedback loop doesn’t care how it got there.

[Response: The loop doesn’t, but the thing that starts the loop can change direction and as long as the entire feedback loop is not unstable, you simply have an amplified response to the drivers (in this case, orbital forcing). – gavin]
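The point in the response above can be sketched numerically. This is a toy illustration, assuming a single linear feedback in which each increment of warming induces a further fraction f of itself; it is not a climate model, and the function name is hypothetical. With the commenter's made-up numbers (1 deg C initial change, 6 deg C total), f works out to 5/6, which is less than 1, so the loop is stable.

```python
def equilibrium_response(direct_response, feedback_factor, start=0.0, steps=2000):
    """Iterate T <- direct_response + feedback_factor * T.
    For |feedback_factor| < 1 this converges to direct_response / (1 - f),
    regardless of the starting temperature anomaly `start`."""
    if not -1.0 < feedback_factor < 1.0:
        raise ValueError("loop is only stable for |feedback_factor| < 1")
    t = start
    for _ in range(steps):
        t = direct_response + feedback_factor * t
    return t

f = 5.0 / 6.0  # chosen so that a 1 deg C direct change is amplified to 6 deg C

# Driver switched on: the loop amplifies 1 deg C to about 6 deg C.
warm_state = equilibrium_response(direct_response=1.0, feedback_factor=f)

# Driver switched off, starting from the warm state: the same loop
# now carries the system back towards zero anomaly.
after_removal = equilibrium_response(direct_response=0.0, feedback_factor=f,
                                     start=warm_state)
print(f"with driver: {warm_state:.3f} C, after removal: {after_removal:.3f} C")
```

In this sketch the final state depends only on the driver and the feedback factor, not on where the system started, which is what "an amplified response to the drivers" means. A state that persists after the driver is removed would require f to reach or exceed 1, i.e. an unstable loop.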

@16 Chris Colose and 40 RichardC:

That certainly helps, although it leaves me wondering what climate sensitivity we should use in our collective risk assessment, both for mitigation and adaptation. Hansen looks at both faster and slower feedbacks, but how slow the slower feedbacks are is uncertain. So how much time do we have for an overshoot, and to how much warming are we committed in the longer term of several centuries? It seems to me we should use the higher values for climate sensitivity, including the slower feedbacks, for a complete assessment of risks up to the seventh generation, so to speak. All this discussion of the Schmittner et al paper should not distract from the point that Hansen and others (including RichardC in #40 and William P in #24) try to make: that there seems to be a significant risk that climate sensitivity could be on the higher end of the various ranges, especially if we include the slower feedbacks and take into account that these could kick in faster than generally assumed.

Chris Colose – I was looking at Sutton et al. 2007 with regards to this paper on SkS a couple of days ago. The mean and median of the equilibrium land-ocean warming contrast (using a slab ocean) in the GCMs considered is ~1.3 (with a spread of about 1.2-1.5). To properly interpret the Schmittner et al. paper on this point wouldn’t we need to know the equilibrium land-ocean warming contrast inherent in the model used? Tom Curtis at SkS reports a warming ratio of about 1.7 in Schmittner et al., though I don’t know if this derives from the model or the data (I confess I haven’t read the paper).

Raypierre, regarding missing cloud effects: wouldn’t including cloud changes simply alter the effect of their parameterisation tweaks for each run? They would simply use different values to get a spread of sensitivities. The probability distribution will likely be different but presumably their best fit ECS to the data would remain at about 2.3-2.4?

[Response: Computed cloud feedbacks would mainly have the potential to affect the results by changing the asymmetry between the climate sensitivity going into the LGM vs. going into a 2xCO2 world. –raypierre]

Hi guys, (I don’t know exactly who you are so I hope you forgive me that I call you guys)

thanks for the criticism of our paper. (Nothing new for me there, though, since we already discuss all these points explicitly in the paper).

I’d just like to quickly comment on a few incorrect statements:

1. In the main article you state “the fact that the energy balance model used by Schmittner et al cannot compute cloud radiative forcing is particularly serious.”

2. Raypierre (#23, thanks for identifying yourself), you state “how much confidence can you place in a study based on a model that doesn’t even attempt to simulate clouds?”

3. Raypierre (#32) ‘The important thing is that we all understand that with regard to clouds it’s not just that they’re not represented “in a fully physical manner.” How about, like, not in any physical manner at all?’

All three of these statements are incorrect. The UVic model computes cloud radiative effects. The effect of clouds on the longwave radiation is included in the Thompson and Warren (1982) parameterization. The radiative effect of clouds on the shortwave fluxes is computed as a seasonally varying (but fixed from one year to the next) and spatially varying atmospheric albedo.

Since we vary the Thompson & Warren parameterization we do implicitly take into account the uncertainty of clouds on the longwave fluxes. And as Nathan correctly states, and as we have already pointed out in our paper, we do not take into account the uncertainty of clouds on shortwave fluxes (atmospheric albedo was not varied).

[Response: Hi Andreas, thanks for stopping by. I think the actual point that we were making was that the cloud feedback (how clouds change as a function of the temperature, circulation, humidity etc., and how that impacts the radiative balance) is not being calculated here. I’ve edited the main article to make that clearer. – gavin]

[Response: Yes, that is indeed the point I was making. A model without any dynamics in the atmosphere doesn’t have the right information in it to even have a chance of doing cloud feedbacks correctly. But regarding missing cloud effects, I do think the one emphasized by Nathan in his Planet 3 interview is probably the key one. Bony et al. find that the spread in 2xCO2 climate sensitivity among CMIP GCMs is largely due to differences in low cloud behavior, and that’s primarily an albedo effect. –raypierre]

Can someone point me to how the ice sheet and vegetation forcing is simulated? I’m looking for something fairly detailed.

Nathan Urban (#21),

Thanks, Professor Urban, for your explanation.

Regarding “Re: multimodality, we haven’t proven a cause. But I suspect it is mostly an artifact of interpolation (though maybe a bit physical), as we discuss. I don’t think it has much to do with the width of our uncertainty interval”, while the main probability mass is in the range advertised, and I certainly understand the convention of coming out with a point estimate and estimate of dispersion, the multimodality, taken at face value, suggests such a single point estimate may be inappropriate. The paper does present the entire density, and that’s great, and lines up components.

Regarding the Gibbs-like towers on the density (S13, and main paper, Figure 3), if there’s some way of gauging the uncertainty in the density itself, perhaps using bootstrap resampling, perhaps the bumps might be seen to be not statistically significant, and so the question would go away.

William P (#24),

Are you suggesting that all scientists should stop studying the climate? That’s a bold statement on a site about “climate science from climate scientists”.

At first glance the SEA sensitivity result is largely a consequence of the LGM data set they used. But as the top post here says

It’s hard to say what KEA conclude about the LGM temperature:

But returning to what I started quoting from the top post here:

Perhaps these latter points can be checked with the UVic model itself. Ideally we might prefer a full Earth Systems Sensitivity approach with the computationally expensive modeling that has evidently been deemed too expensive to date. But are more extensive and less uncertain LGM data needed to justify this? And meanwhile is Hansen’s approach the best?