Readers may recall discussions of a paper by Thompson et al (2008) back in May 2008. This paper demonstrated that there was very likely an artifact in the sea surface temperature (SST) collation by the Hadley Centre (HadSST2) around the end of the Second World War and for a few years subsequently, related to the different ways ocean temperatures were taken by different fleets. At the time, we reported that this would certainly be taken into account in revisions of the data set as more data was processed and better classifications of the various observational biases occurred. Well, that process has finally resulted in the publication of a new compilation, HadSST3.

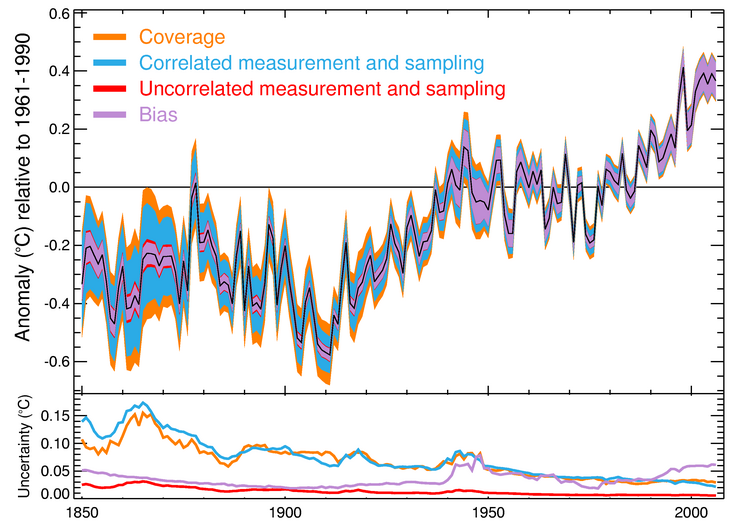

Figure: The new HadSST3 compilation of global sea surface temperature anomalies and the uncertainty.

HadSST3 not only greatly expands the amount of raw data processed, it makes some important improvements in how the uncertainties are dealt with and has a more Bayesian probabilistic treatment of the necessary bias corrections. That is to say that instead of picking the most likely factors and providing a single reconstruction, they perform a Monte Carlo experiment using a distribution of factors and provide a set of 100 reconstructions – the average and spread of which inform the uncertainties. This is a noteworthy approach and one which is likely to set a new standard for other reconstructions. (The details of the procedures are outlined in two new papers Kennedy et al, part I and part II).
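The ensemble idea can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the anomaly values are invented, and a single Gaussian offset stands in for the much richer set of bias factors treated in the Kennedy et al papers. It is not the HadSST3 procedure, only the general pattern of sampling the correction, building one reconstruction per sample, and reading the uncertainty off the spread.

```python
import random
import statistics

def make_ensemble(raw, bias_mean, bias_sd, n_members=100, seed=0):
    """Build n_members toy 'reconstructions', each using one sampled bias correction."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        correction = rng.gauss(bias_mean, bias_sd)  # one draw per ensemble member
        members.append([value + correction for value in raw])
    return members

def ensemble_summary(members):
    """Mean and sample standard deviation across members at each time step."""
    columns = list(zip(*members))
    means = [statistics.mean(col) for col in columns]
    spreads = [statistics.stdev(col) for col in columns]
    return means, spreads

# Hypothetical anomalies in degrees C; not real HadSST values.
raw_anomalies = [-0.30, -0.25, -0.10, 0.05, 0.20]
members = make_ensemble(raw_anomalies, bias_mean=0.1, bias_sd=0.05)
means, spreads = ensemble_summary(members)
```

Because this toy applies the same offset at every time step, the spread is identical in every year; a real reconstruction would have corrections that vary with time and measurement method.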

One potential problem is going to be keeping the analysis up-to-date. Currently, HadSST2 (and HadCRUT3) use the real time updating related to NCEP-GTS. However, this service was scheduled to be phased out in March 2011 (was it?), in lieu of near-real time updating of the underlying ICOADS dataset. This is what HadSST3 uses, but unfortunately, the bias corrections in the modern period rely on being able to track individual fleets. For security reasons, data since 2007 has been anonymized (so you can’t tell what ship reported what data), and so the HadSST3 analysis currently stops at 2006. Apparently this is being worked on, so hopefully a solution can be found. Note that the ocean temperatures in the GISTEMP analysis use the Reynolds satellite SST data from 1979 and so are unaffected by the ICOADS security issue.

The ocean temperature history is obviously a big part of the global surface air temperature history and these new estimates will be used eventually in updates of the HadCRUT3 product. Currently HadCRUT uses HadSST2 and we can expect that to be updated soon.

Impacts

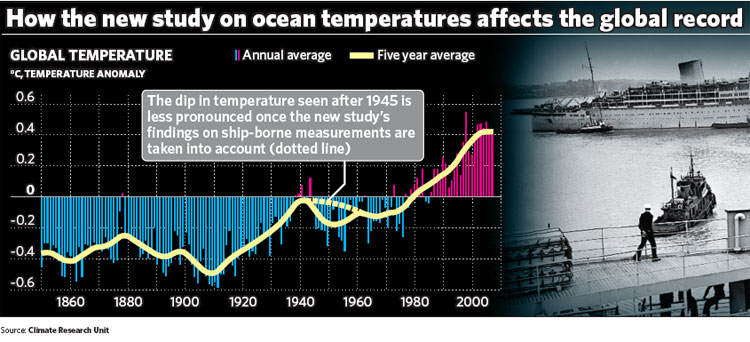

Obviously, when a new analysis is performed, it is interesting to see how it differs from previous ones. The differences between HadSST3 and HadSST2 are shown here:

and are important in a few key time-periods – the 1940s (because of issues highlighted previously), the 1860s to 1890s (more extensive data), and perhaps the last few years (related to more minor changes in technologies and corrections). The biggest difference around 1946-8 is just over 0.2ºC.

One odd feature of the HadSST2 collation was that the temperature impact of the 1883 Krakatoa eruption – which is very clear in the land measurements – didn’t really show up in the SST. Thus comparisons to model simulations (which generally estimate an impact comparable to that of Pinatubo in 1991) showed a pretty big mismatch (see Hansen et al (2007)). With the larger amount of data in this period in HadSST3, did the situation change?

Figure: 1880’s comparison of (left) global surface air temperature anomalies using HadSST2 (as part of GISTEMP) and the GISS AR4 simulations, (right) global SST estimates from HadSST2 and HadSST3.

It seems clear that the new data (including HadSST3) will be closer to the models than previously, if not quite perfectly in line (but given the uncertainties in the magnitude of the Krakatoa forcing, a perfect match is unlikely). There will also be improvements in model/data comparisons through the 1940s, where the divergence from the multi-model mean is quite large. There will still be a divergence, but the magnitude will be less, and the observations will be more within the envelope of internal variability in the models. Neither of these cases implies that the forcings or models are therefore perfect (they are not), but deciding whether the differences are related to internal variability, forcing uncertainties (mostly in aerosols), or model structural uncertainty is going to be harder.

So how well did the blogosphere do?

Back in 2008, a cottage industry sprang up to assess what impact the Thompson et al related changes would make on the surface air temperature anomalies and trends – with estimates ranging from complete abandonment of the main IPCC finding on attribution to, well, not very much. While wiser heads counselled patience, Steve McIntyre predicted that the 1950 to 2000 global temperature trends would be reduced by half while Roger Pielke Jr predicted a decrease by 30% (Update: a better link). The Independent, in an imperfectly hand-drawn graphic, implied the differences would be minor and we concurred, suggesting that the graphic was a ‘good first guess’ at the impact (RP Jr estimated the impact from the Independent’s version of the correction to be about a 15% drop in the 1950-2006 trend). So how did science at the speed of blog match up to reality?

Here are the various graphics that were used to illustrate the estimated changes on the global surface temperature anomaly:

Figure: graphics from Steve McIntyre, Roger Pielke Jr and the Independent. Update: RP Jr’s graph shown above is an emulation of McIntyre’s adjustments, his own attempt is here (though note that the title on the graph is misleading).

Now, we don’t yet have the real updates to the HadCRUT data, but we can calculate the difference between mean HadSST3 values and HadSST2, and, making the (rough) assumption that ocean anomalies determine 70% of the global SAT anomaly (that’s an upper limit because of the influence of spatial sampling and sea ice coverage), estimate an adjusted HadCRUT index as SAT_new=SAT_old + 0.7*(HadSST3-HadSST2).
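The rough adjustment can be written out as a few lines of Python. This is a minimal sketch of the formula as stated in the text, assuming the three series are aligned year by year; the function name `adjust_sat` and the sample numbers are hypothetical.

```python
def adjust_sat(sat_old, sst_new, sst_old, ocean_fraction=0.7):
    """Estimate SAT_new = SAT_old + ocean_fraction * (SST_new - SST_old), element by element.

    ocean_fraction=0.7 is the rough upper-limit weighting quoted in the text.
    """
    if not (len(sat_old) == len(sst_new) == len(sst_old)):
        raise ValueError("all three series must cover the same years")
    return [
        sat + ocean_fraction * (new - old)
        for sat, new, old in zip(sat_old, sst_new, sst_old)
    ]

# Hypothetical anomalies (degrees C) for two years, for illustration only.
adjusted = adjust_sat(sat_old=[0.0, 0.1], sst_new=[0.2, 0.1], sst_old=[0.0, 0.1])
```

A 0.2ºC change in the SST anomaly therefore moves the estimated global anomaly by at most 0.14ºC, and years where the two SST versions agree are left untouched.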

Figure: Estimated differences in the global surface air temperature anomaly from updating from HadSST2 to HadSST3. The smoothed curve uses a 21 point binomial filter.
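For readers who want to reproduce the smoothing, here is a minimal sketch of a centred 21 point binomial filter. The treatment of the ends of the series is an assumption (the window is truncated and the remaining weights renormalised); the post does not say how the end points were handled in the figure.

```python
from math import comb

def binomial_weights(n_points):
    """Normalised binomial weights C(n, k) / 2**n with n = n_points - 1."""
    n = n_points - 1
    total = 2 ** n
    return [comb(n, k) / total for k in range(n_points)]

def smooth(series, n_points=21):
    """Centred binomial smoothing; n_points should be odd.

    Assumed edge treatment: near the ends, only the weights that fall
    inside the series are used, and they are renormalised to sum to 1.
    """
    weights = binomial_weights(n_points)
    half = n_points // 2
    smoothed = []
    for i in range(len(series)):
        acc = 0.0
        weight_sum = 0.0
        for j, w in enumerate(weights):
            idx = i + j - half
            if 0 <= idx < len(series):
                acc += w * series[idx]
                weight_sum += w
        smoothed.append(acc / weight_sum)
    return smoothed
```

Calling `smooth(annual_anomalies)` on a list of annual values returns a list of the same length.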

While not perfect, the Independent graphic is shown to have been pretty good – especially for a hand-drawn schematic, while the more dramatic implications from McIntyre or Pielke were large overestimates (as is often the case). The impact on trends is maximised for trends starting in 1946 (a 21% drop in the 1946-2006 case), but is smaller for the 1956-2006 trend (the 50 year period highlighted in IPCC AR4), an 11% decrease from 0.127±0.02 to 0.113±0.02 ºC/decade. More recent trends (i.e. 1970 or 1975 onwards) or much longer trends (1900 for instance) are barely changed. For reference, the 1950-2006 trend changes from 0.11±0.02 to 0.09±0.02 ºC/decade – a 17% drop in line with what was inferred from the Independent graphic. Note that while the changes appear to lie within the uncertainties quoted, those uncertainties are related solely to the fitting of a regression line and have nothing to do with structural problems and so aren’t really commensurate. The final analysis will probably show slightly smaller changes because of the coverage/sea ice issues. Needless to say the 50% or 30% reductions in trends that so excited the bloggers did not materialize.
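The trend arithmetic is simple enough to sketch. The code below is an illustration, assuming an ordinary least squares fit to annual values; the function names are hypothetical and only the rounded trends quoted in the text are used as inputs.

```python
def trend_per_decade(years, values):
    """Ordinary least squares slope of values against years, in units per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return 10.0 * sxy / sxx

def percent_drop(old_trend, new_trend):
    """Relative reduction of a trend, in percent of the old value."""
    return 100.0 * (old_trend - new_trend) / old_trend

# 1956-2006 trends quoted in the text (degrees C per decade, rounded).
drop_1956 = percent_drop(0.127, 0.113)
```

With the rounded 1956-2006 values this gives about 11%. Applying it to the rounded 1950-2006 values (0.11 and 0.09) gives about 18% rather than the quoted 17%, presumably because the quoted percentage was computed from unrounded trends.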

In summary, the new HadSST3 analysis is a big step forward in both data coverage and error analysis. The differences are much smaller however than the somewhat exaggerated (and erroneous) blog speculations that appeared in the immediate aftermath of the Thompson et al paper. Those speculations were wrong because of a lack of familiarity with the data (McIntyre confused his buckets), basic mistakes (such as applying ocean-only corrections to global temperature series) and an over-eagerness to overturn established positions. Among the lessons to be drawn should be that science does not travel at the speed of blog and that confirmation biases and desires to be first on the block sometimes get in the way of the rational accumulation of knowledge. Making progress in science takes work, and with big projects like this, patience.

Nice to see this progress in science.

At the risk of making a self-fulfilling prophecy:

Guess we can anticipate some blogospheric corners to use this to bolster their claim that when model-data comparisons are imperfect, the data are matched towards the models though. After all, conspiracies live by virtue of seeing them bolstered wherever you look.

In the middle of the article you wrote:

“(…) deciding whether the differences are related to internal variability, forcing uncertainties (mostly in aerosols), or model structural uncertainty is going to be harder.”

Guess you meant to write “easier”?

[Response: Not really. The remaining differences are smaller and so, given the various uncertainties, will be harder to attribute. But it also becomes less important. – gavin]

A general question from a non-expert. Intuitively it seems to me that measuring the temperature at a large number of points in the oceans is the most reliable way of assessing temperature change. There should be far less short term variation and none of the problems associated with the locations of land-based thermometers. Why were these relied upon in the past, when ocean measurement was always available? I can see that a drawback could be the slow response to temperature changes in the air; and perhaps satellite measurement is considered superior for this and other reasons.

[Response: The problem is that the ocean is very large and the number of ships traversing it – particularly in the early years – is small. There is a significant component of ‘synoptic’ variability in the ocean as well (eddies etc.) and so while the variation is less than in the atmosphere, for many areas there aren’t/weren’t sufficient independent observations to be sure of the mean values. Try having a look at the data (there is a netCDF file you can download, and use Panoply to look at the various monthly coverages) and you’ll get a sense of what data there is when. – gavin]

It still looks like the same old hockey stick to me. Thanks much for the links to papers. I am downloading them.

The problem with the Pielke/McIntyre predictions is not so much their magnitude as it is the implication that the 1950-200X trend is somehow a uniquely relevant measure of climate change. Pielke’s deconstruction of the IPCC ‘mid-century’ statement is particularly silly in this respect.

What is shockingly ill-advised to me is that the Pielke and McIntyre projections both required, in order to fit with their hoped for story line, that the adjustments not only affect the period from 1945 to 1960, but also extend beyond that into the late 90s, in order to level the more recent temperature increases so as to both make the rate appear less dramatic and the amount of recent, CO2 forced warming less of a concern.

And yet Thompson et al 2008 explicitly states (emphasis mine):

and

How could they be that… hmmm, what word should I use to be polite?

Where did I “predict” a 30% decrease? I don’t recall providing such a prediction and the link you have to Prometheus has no prediction. Thanks.

[Response: It’s possible I got confused, but the very next post after the one linked says:

right next to another figure that you (erroneously) claim was what we had suggested would be the impact. The only person who said ‘a 30% reduction’ appears to be you, since it is clear from our original text we said nothing of the sort. – gavin]

I didn’t seem to do too bad, :^)

“The overall warming trend declines from the “observed” rate of 0.116°C/decade to a “corrected” rate of 0.092°C/decade. ”

http://www.masterresource.org/2010/02/why-the-epa-is-wrong-about-recent-warming/

-Chip

[Response: Indeed, you appear to be able to read a graph correctly. This is not usually cause for specific praise, but you were up against a pretty poor field. ;-) – gavin]

Thanks for posting this. I’m very glad to see this cleared up. I suppose GISTEMP will also be revised. Can anyone give a brief explanation of the 21 point binomial filter?

[Response: Binomial filters have weights in proportion to the binomial expansion coefficients (i.e. 1,2,1 or 1,3,3,1 or 1,4,6,4,1 etc.), that is, the coefficients n!/(k!(n-k)!) with n as one less than the number of terms. A 21-point filter has weights 1,20,190,…,190,20,1. I used it just because that was what was used in the Independent figure (despite their caption saying otherwise). – gavin]

Predicted denier comment on the first chart: “See! It says “BIAS” right there on the chart! That proves it’s not objective!”

Are the data to construct the figure below

“The differences between HadSST3 and HadSST2 are shown here:”

available? I’ve found only gridded data on the metoffice-page.

[Response: I took the TS_all_realisations.zip file (monthly averages for each of the 100 realisations) and calculated the annual means for each and then calculated the mean and sd for each year. If you can’t find it, I’ll post the net result when I get back to my desk. – gavin]
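The calculation described in the response above can be sketched as follows. The input layout is an assumption: each realisation is taken to be a list of `(year, month, anomaly)` tuples already read from the downloaded files, and no attempt is made here to parse the actual file format. Whether the quoted sd is a sample or population standard deviation is not stated; the sketch uses the sample form.

```python
import statistics
from collections import defaultdict

def annual_means(monthly):
    """Average monthly anomalies into annual means: {year: mean}."""
    by_year = defaultdict(list)
    for year, _month, value in monthly:
        by_year[year].append(value)
    return {year: statistics.mean(vals) for year, vals in by_year.items()}

def ensemble_mean_sd(realisations):
    """For each year present in every realisation, return (mean, sd) across realisations.

    Needs at least two realisations, since a sample standard deviation
    is undefined for a single value.
    """
    per_realisation = [annual_means(r) for r in realisations]
    common_years = sorted(set.intersection(*(set(a) for a in per_realisation)))
    result = {}
    for year in common_years:
        values = [a[year] for a in per_realisation]
        result[year] = (statistics.mean(values), statistics.stdev(values))
    return result

# Two hypothetical realisations with constant anomalies, for illustration only.
toy = [
    [(2000, m, 0.1) for m in range(1, 13)],
    [(2000, m, 0.3) for m in range(1, 13)],
]
summary = ensemble_mean_sd(toy)
```

With the 100 realisations from the archive, `summary[year]` would hold the ensemble mean and spread for that year.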

Whether or not GISS updates GISTEMP, the Climate Code Foundation intends to update ccc-gistemp to allow it to optionally use HadSST3 for historical SSTs (current SSTs are Reynolds). This relates to some work already on our to-do list.

I believe the CA account of this story given by Steve McIntyre. He is 100% honest.

[Response: The quality of McIntyre’s character is not of the slightest interest to me. What matters is whether something is correct or not, and he was not correct. – gavin]

Mr. McIntyre is claiming , in his response post, “More Bilge from RealClimate”:

In the comment section of the relevant post, Mr. McIntyre says to a scientist, Peter Webster of GTECH:

This is the prediction you are talking about, correct?

[Response: Indeed. And at the time Roger interpreted the “effects of McIntyre’s proposed adjustments” in the same way. – gavin]

The Kennedy papers look like papers I would have to read if I was a scientist and doing some heavy duty faunistic ecological analysis, just to warn aspiring ecologists. I didn’t read them through yet, maybe I never will but the 1st 3 pages of both were still understandable enough. Sometimes I’m glad I didn’t make the grade to be a Dr. but just a M.Sc. :-).

Thanks Gavin, indeed you are confused, there was no such prediction of 30% reduction from me, can you correct this post? I appreciate it, Thanks!

[Response: Well I’m confused now. You took three posts to estimate the impacts of the Thompson et al paper and now you claim that none of these were ‘consistent with’ a 30% prediction despite the quote above and the three figures you produced showing various impacts (showing 50%, 30% and 15% changes). (I have taken the liberty of editing your second figure to correct the impression that you tried to give that this was based on something we said). You are welcome to tell us what you think you predicted in the comments but I don’t see any errors in the post. Perhaps you could explain where the 30% number came from since it only appears on your blog. Thanks! – gavin]

[Further response: Actually there is a slight error. The graph I attribute to you in the above post is your emulation of what McIntyre said (a ‘50% decrease’), not your independent emulation of a 30% decrease linked above. – gavin]

Well, that RPJr post and subsequent thread is an interesting read.

The “I” in this snippet is RPJr. The “guess” in this snippet is the 30% impact.

Thanks Gavin, on Prometheus I provided 3 emulations (and requested others if people did not like those 3), they were:

1. What I interpreted that McIntyre said (50%)

2. What I interpreted that you said (30%, which you dispute, fine, I accept that)

3. What I interpreted that the Independent said (15%)

Once again, at no time did I generate an independent prediction. I am now for the third time asking you to correct this post.

I did however say that any nonzero value would be scientifically significant, which I suggest is more interesting than the blog debate that you are trying to start.

Please just correct it, OK? It is not a big deal. Then let’s get back to the science.

Thanks!

[Response: Since your #2 emulation was not ‘consistent with’ anything anyone else said, it must have originated independently with you. But your statement in this comment can stand as a rebuttal to my interpretation of what you said. I have no desire to have a ‘blog debate’ but rather I am making the point that hurried ‘interpretations’ of results aren’t generally very accurate and that people can be in too much of a hurry to jump to conclusions about the IPCC, attribution, impacts on policy etc. I do not anticipate that this will stop any time soon – speculation unattached to data is too tempting for many. But for readers of the latest shock horror interpretation of some new study, this might serve as a cautionary tale. – gavin]

Gavin-

You seem to be upset that people were discussing and speculating about interesting new science. Instead of tut-tutting that some of this proved not to pan out, you should welcome such discussions. They happen all the time and are an indication of the interest that people have in the science.

By its nature such speculation will include some explorations/views that don’t pan out (sounds a lot like what happens in the peer reviewed literature). So what? That is why on my blog I presented a wide range of views and asked for yours as well.

Are you really suggesting that McIntyre’s speculation was inappropriate but the Independent’s was OK? Would you prefer that people not discuss science and its implications if they are at risk of being mistaken? Silence would ensue everywhere.

Since you will not correct this post after I have explained your confusion, I will put up a blog post to set the record straight. There is a lot of misinformation out there;-)

[Response: The misinformation is greatly enhanced by people jumping to ‘interpret’ things that require actual work. Discussion of issues is not the problem (and your misrepresentation of my statements is nicely ironic in this context), but jumping to conclusions is. I would certainly support greater reflective discussion of real issues – perhaps you could actually help with that this time. – gavin]

17, gavin in response: But for readers of the latest shock horror interpretation of some new study, this might serve as a cautionary tale.

Well said.

So RPJr’s post was basically suggesting that this IPCC conclusion might not be valid:

“Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations.”

He wrote, “I interpret “mid-20th century” to be 1950, and “most” to be >50%.”

Regardless of whether he said 30% or something else, it sure doesn’t look like this adjustment materially affects the amount of observed increase in global average temperatures, just the date on which it occurred.

Start with Pascal’s triangle. The way the numbers are added in one row to get the next row adds each number twice so that the sum across a row is a power of two. The sum of the nth row is 2 to the n-1 power.

1

1 1

1 2 1

1 3 3 1

1 4 6 4 1

1 5 10 10 5 1

1 6 15 20 15 6 1

1 7 21 35 35 21 7 1

…

The coefficients we want to use as weights will be related to these rows although these rows have no physical significance – the numbers are just easy for computers to generate so why not be a little fancy?

The coefficients will be used as weights for years so they must sum to 1. This is easily achieved: divide each by their total. This also means that years far from the year of interest have little influence. See this pdf for more explanation.

For our 21 point filter the final Pascal row will sum to 2 to the 20th power. Recall that 2 to the tenth is just over 1000, 1024 to be exact. Square this to get the 20th power of 2.

1000 squared is a million. 2 to the 20th is 1,048,576.

Next, note that the leftmost two numbers in the 21st Pascal row are 1 and 20.

The smallest weight should be 1 divided by 1,048,576 or just under 1 divided by a million, or just under

.000001

and the next weight should be twenty times that, or just under

.000020

Now see Kennedy’s worked example. He’s got it right! ;)
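The arithmetic in this walk-through can be checked with a short sketch that builds the rows of Pascal's triangle by repeated addition, as described above. The function name `pascal_row` is just illustrative.

```python
def pascal_row(n_terms):
    """Return the row of Pascal's triangle that has n_terms entries."""
    row = [1]
    for _ in range(n_terms - 1):
        # Each interior entry is the sum of the two entries above it.
        row = [1] + [a + b for a, b in zip(row, row[1:])] + [1]
    return row

row = pascal_row(21)              # starts 1, 20, 190, ...
total = sum(row)                  # 2**20 = 1,048,576
weights = [c / total for c in row]  # normalised so the weights sum to 1
```

The two outermost weights come out just under 0.000001 and 0.000020, as estimated above, while the central weight (184,756 divided by 1,048,576) is about 0.176.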

It finally ends up showing the same trend that you see using a simple moving average. Evidently the trend is robust to these niceties.

Some time ago Gavin, seconded by Chris Colose, urged us all to download EdGCM. But it costs $250, which I suspect is $250 more than the original but now unusable code. Taking care of this little matter ought to be duck soup for the Climate Code Foundation, he said hopefully.

[Response: This is a shame (and I have said this to the people concerned), but they have been unable to find an alternative funding model that allows them to provide support at the level required. – gavin]

Gavin-

“but jumping to conclusions is”

I am curious as to what conclusion you think that I jumped to and that you are objecting to? Please provide a direct quote from me.

In my discussion that you link to I was careful to note: “Other adjustments are certainly plausible, and will certainly be proposed and debated in the literature and on blogs”

My promised blog on this is here:

http://rogerpielkejr.blogspot.com/2011/07/making-stuff-up-at-real-climate.html

Thanks

Roger has demonstrated yet again his propensity for misrepresentation that was evident in the first go around on this topic (and on multiple other occasions). It is not enough to accept that someone disagrees with him, any disagreement has to be based on ‘a fabrication’ because, of course, since Roger is always right, anyone who claims he isn’t must be lying. This kind of schoolyard level discourse is pointless and only adds to the already-excessive amount of noise. I for one am plenty glad that Roger has decided to expend his talents on other subjects. Perhaps those other audiences will be more appreciative of his unique contributions.

From Pielke’s original analysis:

http://cstpr.colorado.edu/prometheus/archives/climate_change/001445does_the_ipccs_main.html

The answer is C. That’s C. We’re awaiting the answer to be shown prominently!

Roger Pielke Jr.,

What concerns me most these days is not what you, or others say, per se, but what is implied or inferred. If one uses reasonably comprehensive reasoning, one can see the most logical inference of a post, or combined related communications.

Your father uses similar red herring style arguments as well. Inferred points that allow for ambiguity and reinterpretation may allow for politically comfortable talking points and sufficiency in obfuscation presentation, but are not helpful when attempting to get closer to the truth of a matter.

So while you and so many others love to play in the wiggle room, still others are diligently trying to get at the truth of the matters at hand. And no, I’m not talking merely about data but how some choose to represent their arguments and opinion about data.

The take away from a particular or combined communication is the total representation, not the specifics of a particular detail. Do you see the difference and the importance of the difference?

-24-Gavin

Last time ;-)

On the question of whether or not I offered an independent prediction of how ocean temperature adjustments would work out, there is an objective answer to this question — as I have carefully explained to you here, I did not. Anyone can read my posts and see that this is the case. It is not a matter of interpretation.

More generally, if you want to avoid a playground fight then don’t write posts like this one, because as you have seen, both McIntyre and I have taken issue with your comments (what did you expect?). That is always a danger on a playground, if you call people out they will sometimes react … So maybe in the future stick to calling out Willie Soon and Fred Singer? ;-)

[Response: Roger, that you would jump up and down calling people names when someone attempts to hold you accountable for your statements was eminently predictable. Indeed, I have no doubt you’d be happier if we didn’t criticise your rush to judgements and misrepresentations of other people’s statements, but overall I think it’s better if people are made aware of the credibility of prior performances. I think that readers can easily judge whose judgement back in 2008 was the most credible and I’m happy to leave them the last word. – gavin]

Pete,

Some time ago Gavin, seconded by Chris Colose, urged us all to download EdGCM. But it costs $250, which I suspect is $250 more than the original but now unusable code. Taking care of this little matter ought to be duck soup for the Climate Code Foundation, he said hopefully.

What are you proposing? If it involves us spending money, then I infer you are labouring under the misapprehension that we have some. If it’s something else, get in touch.

I’m finding the reaction to this amusing. On one hand you have a bunch of apologists complaining that Gavin is somehow stifling skeptic creativity by pointing out that they were wrong (if this is the new mantra, I believe Michael Mann is due some arts and crafts time!). McIntyre is now saying that his prediction was ‘conditional’, as if this somehow changes Gavin’s point about wild speculation being wrong – in fact, it only adds to the point. Probably just as bad (but definitely more funny) RPJ is attempting to get Gavin to correct the original post, where his wrong interpretation of an RC post turned out to be wildly speculative and wrong. Here’s an idea. Admit that your wild speculation turned out to be erroneous and likely led many people to believe things that are untrue (for examples check the trackbacks or just google) and then stop doing that. Do the work, it’s much more fulfilling to both yourselves and the science.

The approach of providing an ‘envelope’ of temperature histories in HadSST3 is a nice one. It also means that for say, the 50 yr trend (1946 – 2006) you can get a distribution of trend values. What does that distribution look like? How big is the spread?

Is it accounted for in the 0.02 range you use ( in 0.127±0.02 & 0.113±0.02)?

[Response: No. Those uncertainties were from the OLS regression fitting to the mean reconstructions. I’ll see if I can calculate what you want later this evening. – gavin]

[Further response: The range of 1956-2006 trends in the SST is 0.080±0.01, compared to 0.081±0.02 for the trends in the mean reconstruction – thus the errors in the ‘updated’ HadCRUT3v series trend will likely be just a little larger than the 0.022 (adding the terms in quadrature). – gavin]
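Adding terms in quadrature means taking the square root of the sum of squares. As a small check of the figure quoted in the response above, the sketch below assumes the two terms being combined are the 0.02 regression uncertainty and the 0.01 ensemble spread; that pairing is one reading of the response, not something it spells out.

```python
from math import sqrt

def combine_in_quadrature(*terms):
    """Combine independent uncertainties as the root of the sum of squares."""
    return sqrt(sum(t * t for t in terms))

# Assumed inputs: OLS fitting uncertainty and spread across realisations (degrees C per decade).
combined = combine_in_quadrature(0.02, 0.01)
```

This gives roughly 0.022, only slightly above the larger of the two inputs, because the smaller term contributes little once squared.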

This bickering is absurd.

What I do not understand is why people play the Judith Curry style game of creating several posts filled with other people’s quotations, making up hypothetical graphs, asking questions to the commenters, and playing “what if” scenarios in their head, only to back off and play the “I’m innocent” card when someone tries to call them out on the bulls**t.

I can give Roger the benefit of the doubt that he did not directly predict a 30% decrease in trend, and gavin may have misunderstood him. Instead, he made up something that RC never said (see emulation #2 in post 17), and artificially introduced the notion that RC thought the Thompson et al. issue never mattered, when gavin emphatically wanted to reduce the noise brought about by speculation and wait for the experts to do the analysis. Roger considered this an attempt to dodge the issue.

Meanwhile, Steve McIntyre DID predict a reduction in trend by half, or at least made several very confident and authoritative statements about what would happen, which Roger interpreted similarly. Steve’s predictions also extended to what he perceived as a big coming change to aerosol climatology. Naturally, Steve now denies this and puts an odd spin on his quote, and claims gavin is fabricating claims against his blog.

Most humorous is that Steve M tried to take credit for the discovery of this bucket issue, even though scientists knew about it many years before the Thompson et al paper. The issue is actually quantifying and saying something useful about it, not blogging about speculations and why “the Team” won’t handle your data requests the way you like. In fact, according to McIntyre, all the members of Thompson et al. get the humble position as being members of “the Team.” But noticing a potential issue is not enough. ClimateAudit had absolutely no role in pushing the science of bucket corrections and temperature measurements any better. I can arm-wave about an unsettled issue too, and then claim that I should get credit for noticing it when a peer-reviewed paper comes out a couple years later dealing with it, but I’d only do so if my intent was to confuse and obfuscate. Just as all of CA whines about the dozens of hockey sticks, they have not once offered a better reconstruction.

It is a great tactic they have going…they do not contribute to the literature, but they cannot lose the fight.

Pete Dunkelberg,

Sorry. When I used EdGCM, it was set up at my University, but I think it was downloadable on a personal computer for free (at the time). I heard Mark Chandler was having a lot of issues keeping it going, which may account for the need to charge for the product. It is a shame, because it’s a great educational tool, and a rather realistic model for being able to run ~100 yr simulation in 24 hours or so on a regular laptop. It also gave the user the freedom to tinker with many different scenarios and set up a real simulation.

[Response: It’s not inconceivable that the current GISS model code run at the old resolution (8×10) would produce similar performance. It might be worth someone’s time to investigate…. – gavin]

I think Gavin’s reading of what McIntyre said is a bit off, though I can see how that could happen in this convoluted topic.

First of all McIntyre said, “If the benchmark is an all-engine inlet measurement system… all temperatures from 1946 to 1970 will have to be increased by 0.25-0.3 deg C since there is convincing evidence that 1970 temperatures were bucket (Kent et al 2007), and that this will be phased out from 1970 to present according to the proportions in the Kent et al 2007 diagram… Recent trends will be cut in half or more, with a much bigger proportion of the temperature increase occurring prior to 1950 than presently thought.”

Obviously, that large an impact was predicated on the “benchmark” of “all-engine inlet” measurements in the “proportions in the Kent et al 2007 diagram.”

“If” the reference really was “all-engine” and if the proportions really matched Kent 2007, then half of “recent” trends could be affected, with more warming occurring prior to 1950. That is a lot of necessary conditions to fill.

You’ve represented that in the post as a prediction that “the 1950 to 2000 global temperature trends would be reduced by half.”

That isn’t quite what he said in two ways. The first, because of the erasure of all the “ifs” and predicates. The second, is that he did not say that the trends from 1950-2000 would be cut in half. He said that the recent trends might be cut in half with more of the remaining warming present prior to 1950 than is shown now. Those are not equivalent, interchangeable statements. As a mental shorthand, it might be fine to reduce the complexity into more simple and memorable portions, but when attributing a statement to someone, it would be better to use the full complexity version of what they actually said, rather than the reduced complexity mental shorthand version of what they said.

Moreover, that wasn’t his only commentary on the topic. Three days later (2008-05-31, over three years ago, and fully search indexed) he publicly speculated on a smaller magnitude based on different types of buckets, “Let’s say that the delta between engine inlet temperatures and uninsulated buckets is ~0.3 deg C… [i]nsulated buckets would presumably be intermediate. Kent and Kaplan 2006 suggest a number of 0.12-0.18 deg C.”

He also refers to the previous writing as, “[M]y first cut at estimating the effect,” obviously implying that this and future estimates might be better than his “first cut.”

While I can see how one might misread what he said, particularly given a haze of three years, there are some important differences between what he said, and what this post claims that he said.

[Response: I’ll point out that McIntyre didn’t complain when Roger Pielke interpreted his statements exactly as I did. And while he was subsequently informed that he’d got his buckets confused he never came back to the issue (as far as I can tell) after promising “I’ll redo my rough guess based on these variations in a day or two”. The fact is that he only produced one graph of what he thought the impact would be and speculated wildly on how this would undermine all the previous D&A work (it didn’t, and it won’t). He had plenty of time to update it, but he never did. – gavin]

I think Roger is playing a game of obfuscation, whereby every step forward by RC should be met by ten confusing steps backward by contrarians. It’s part of their generalized game plan of sorts. All while back at the ranch (planet):

http://nsidc.org/data/seaice_index/images/daily_images/N_stddev_timeseries.png

the real action is warming, which matches and complements the SST graphs shown above quite well. I shudder in dismay when arguing about details of how things are calculated becomes the main topic of discussion; the main signal is lost in that case. SSTs do not seem to be cooling, and I note Roger is not writing about that, which is again part of the process of denying reality. Maybe if we don’t talk about it the problem will fade away, like shutting eyelids in front of ghosts, or by cherry-picked, poorly placed point-to-point smoothing graphs… While we argue substance leading nowhere, polar bears are missing a piece of ice to sleep on. Not that they have a say in all this, but it’s starting to be far worse than that. A warming planet is a serious concern and is happening big time; Roger should regularly admit what mere animals already know.

“Ocean heat

An article published recently in the journal Science showed that the flow of ocean heat into the Arctic Ocean from the Atlantic is now higher than any time in the past 2000 years. The warm, salty Atlantic water flows up from the mid-latitudes and then cools and sinks below the cold, fresh water from the Arctic. The higher salt content of the Atlantic water means that it is denser than fresher Arctic water, so it circulates through the Arctic Ocean at a depth of around 100 meters (328 feet). This Atlantic water is potentially important for sea ice because the temperature is 1 to 2 degrees Celsius (1.5 to 3 degrees Fahrenheit) above freezing. If that water rose to the surface, it could add to sea ice melt.”

http://nsidc.org/arcticseaicenews/

I was wondering how Steve McIntyre made such a large error in the scale of the correction, and found something that may be relevant –

“Steve’s adjustment is based on assuming:

that 75% of all measurements from 1942-1945 were done by engine inlets, falling back to business as usual 10% in 1946 where it remained until 1970 when we have a measurement point – 90% of measurements in 1970 were still being made by buckets as indicated by the information in Kent et al 2007- and that the 90% phased down to 0 in 2000 linearly.” Roger Pielke https://web.archive.org/web/20080907044024/http://sciencepolicy.colorado.edu/prometheus/archives/climate_change/001445does_the_ipccs_main.html

“To put the problems inherent in recording ‘bucket’ temperatures in this fashion into their proper context, I can do no better than record the conversation I had some years ago with someone who had served in the British Navy in the 1940’s and 50’s when the bucket readings were still common (they finally finished in the early 60’s).” Judith Curry at http://judithcurry.com/2011/06/27/unknown-and-uncertain-sea-surface-temperatures/

Obviously, they can’t both be right, and McIntyre’s 50% overestimation should have some basis. Maybe he fooled himself into calculating a large, inaccurate correction by making an unwarranted assumption about the transition from buckets to engine intakes and back to buckets, because it fits his narrative. It’s not like I’ve never made that misteak.

Nick Barnes and others, I was not proposing that you personally finance anything. Your work on ccc-gistemp is already a giant contribution. I hoped that with that experience you could convert a smaller program without too much more effort. But I see that ccc-gistemp is not done yet.

We all agree that a people’s GCM would be good to have. There ought to be some foundation that would support the work. Maybe it just takes the right person to make the pitch.

#37

“We all agree that a people’s GCM would be good to have. There ought to be some foundation that would support the work.”

Well, there is NCAR’s CAM

Re 36:

It’s not that hard to understand. If you read a McIntyre post as carefully as one reads a Wegman report looking for sources, you’ll find the source of the idea:

“Let’s suppose that Carl Smith’s idea is what happened. I did the same calculation assuming that 75% of all measurements from 1942-1945 were done by engine inlets, falling back to business as usual 10% in 1946 where it remained until 1970 when we have a measurement point – 90% of measurements in 1970 were still being made by buckets as indicated by the information in Kent et al 2007- and that the 90% phased down to 0 in 2000 linearly. This results in the following graphic:”

You see, the lines left out indicate that what Steve was doing was exploring a suggestion made by a reader. That’s why he labelled the graphic (a version is shown on RC without the label) as Carl Smith’s proposal. You could look at it as a thought experiment. Anyone who has ever worked with what-if analysis is used to doing these sorts of things. You could think of GCM projections as ‘what if’ scenarios. The problem is, of course, that people are not very cautious about reading print or the fine print or the labels on charts. Given the current environment it’s become hazardous to even engage in absolutely ordinary what-if analysis or first-order guesses. Some people take them too seriously, on both sides I’m afraid.
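For readers who want to see what the quoted assumptions amount to numerically, here is a minimal sketch of that what-if and nothing more. It takes the bucket proportions exactly as stated in the quote and combines them with the ~0.3 deg C bucket-versus-inlet difference mentioned earlier in the thread. The function names and the simple "fraction times bias" form are illustrative assumptions, not a reconstruction of anyone's actual calculation, and the result is not the HadSST3 correction.

```python
def bucket_fraction(year):
    """Fraction of SST measurements taken by bucket under the quoted what-if."""
    if year < 1942:
        # The quoted scenario gives no proportions before 1942.
        raise ValueError("scenario is only specified from 1942 onward")
    if year <= 1945:
        return 0.25  # 75% engine inlets during 1942-1945
    if year <= 1970:
        return 0.90  # 10% engine inlets, i.e. 90% buckets, through 1970
    if year < 2000:
        # Linear phase-out from 90% in 1970 to 0% in 2000.
        return 0.90 * (2000 - year) / 30
    return 0.0


def implied_adjustment(year, bucket_bias=0.3):
    """Upward adjustment (deg C) implied by an all-engine-inlet benchmark.

    bucket_bias is the assumed inlet-minus-bucket difference (hypothetical
    default of 0.3 deg C, taken from the figure quoted in the thread).
    """
    return bucket_fraction(year) * bucket_bias


for y in (1943, 1950, 1970, 1985, 2000):
    print(y, round(implied_adjustment(y), 3))
```

With these inputs the 1946-1970 adjustment is 0.9 × 0.3 = 0.27 deg C, which falls inside the 0.25-0.3 deg C range quoted above. Passing a smaller `bucket_bias`, such as the 0.12-0.18 deg C suggested for insulated buckets, shrinks every value proportionally, which shows how sensitive the what-if is to its inputs.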

GISTEMP uses HadISST for pre-satellite SST. This is an interpolated version of the data, and as such is considerably different from HadSST2. Presumably, they’ll create a version of HadISST based on these new adjustments.

It seems that McI and PJnr are both following the standard playbook, yet again, on this issue: “Always offended, never embarrassed”.

In their zeal to attack ‘the team’ at all costs, what they have clearly if unwisely said must be spun to mean something else when they’re confronted with it. The comments by Grypo @ 29 and Chris Colose @ 31 have it right.

In what other field would scientists apparently have to tolerate such nonsense from the usual repeat offenders?

#33, Thomas L – It seems to me that Gavin was highlighting the wild-eyed speculation about ‘bucket adjustments’ in the blogosphere. That McIntyre’s inference of large alterations wasn’t so much a considered prediction as guesswork based on incomplete information doesn’t really detract from that point.

It could be argued that the Independent’s figure was equally speculative. Was there an a priori reason why that was felt to be a better guess?

[Response: Yes – the information on the likely changes came from the people who knew most about the data and the inconsistencies. – gavin]

#10: Thanks Gavin!

Hazardous? I think it’s more like, if you are wrong about something, that is misleading on an important subject, be prepared for people to notice and issue corrections, especially when the original party fails to do so. Speculate all you want, just do the work and get it right, and when you’re wrong, don’t become a drama queen when people start to notice past digressions.

Yes, many people took that analysis very seriously.

Pete,

“Nick Barnes and others, I was not proposing that you personally finance anything. Your work on ccc-gistemp is already a giant contribution. I hoped that with that experience you could convert a smaller program without too much more effort. But I see that ccc-gistemp is not done yet.”

It will never be “done”, but it’s usable already, and we are certainly working on other things as we continue to improve it. One of our Google Summer of Code students is working on making a faster and more user-friendly ccc-gistemp; one of the others is working on a new homogenization codebase (with input from Matt Menne, Claude Williams, and Peter Thorne), and the third is working on a web-facing common-era temperature reconstruction system (mentored by Julien Emile-Geay, Jason Smerdon, and Kevin Anchukaitis). For more information on all three, see their mid-term progress-report posts on our blog, later this week.

Are we interested in GCMs? Absolutely, and I hope to do something in that space later this year.

I’d like to see an anomaly chart relative to 1850-1960, one with a lower horizontal line that more clearly reflects “before the impact of climate change.” Why is a 1961-1990 baseline used?
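On the mechanics of that request: changing the reference period only shifts the whole curve by a constant, so the shape and the trends stay the same. A minimal sketch follows, assuming the anomalies are held as a plain year-to-value mapping. The `rebaseline` helper and the toy numbers are made up for illustration and are not HadSST3 data.

```python
def rebaseline(anomalies, start, end):
    """Shift anomalies so that their mean over [start, end] is zero."""
    window = [v for y, v in anomalies.items() if start <= y <= end]
    if not window:
        raise ValueError("no data in the requested baseline period")
    offset = sum(window) / len(window)
    # Subtracting one constant moves the zero line but leaves
    # year-to-year differences untouched.
    return {y: v - offset for y, v in anomalies.items()}


# Toy values only, loosely imagined as anomalies relative to 1961-1990.
toy = {1850: -0.4, 1900: -0.3, 1950: -0.1, 1975: 0.0, 2000: 0.3}
shifted = rebaseline(toy, 1850, 1960)
for year in sorted(shifted):
    print(year, round(shifted[year], 3))
```

Recent values read higher against the earlier baseline, but the difference between any two years is unchanged.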

I find it rather amazing that no matter how often someone points out that Roger Pielke Jr. is wrong, he just tucks in his head, brushes it all aside, and keeps on moving. Truly amazing.

Re 44:

I guess you are still taking a what-if scenario seriously. I don’t think a careful reading of the text supports your position. Yes, careless people failed to read carefully. There are two overreactions at play here: the first is the one taken by those who saw the assumptive analysis as a fact; the second is one that makes too much of “correcting” the analysis. I try not to fall into either camp. As for corrections, I’ll avoid comparing track records. I will note that the notion of correcting a what-if analysis doesn’t really make sense. How best to illustrate that?

For example, I have no idea how much you weigh. I have no idea how tall you are. I don’t know if you are a male.

Assuming you’re a male, assuming you’re about 70 inches tall, I’ll propose you weigh about 190lbs.

Now some people will walk away from this analysis and conclude that mosher claimed you were a 5 foot 10 190lb male. They are poor readers. And, if you end up being a woman, I’m sure some people will laugh and say, “stupid mosher thought she was a he.” They too are poor readers.

In my view they are no more careful than those who made the mistake of misconstruing a projection as a prediction. But the idea that I should “correct” my “what if” doesn’t really make sense, does it? When the IPCC does a projection of future temperatures based on an assumption about emissions, we do not clamor to have them issue a correction if the emissions scenario doesn’t materialize. The whole POINT of doing what-if analysis is our uncertainty about what certain facts will be.

Would you insist that the IPCC issue a correction if A1FI or B2 doesn’t come to pass? Would you argue that they got it wrong? Divorce yourself from the parties involved and just look at the logical form of the argument.

The sampling deficiencies audited by McIntyre (“the Team’s Pearl Harbour adjustment”) begged the question from Pielke “Does the IPCC’s Main Conclusion Need to be Revisited?” (since “…we know now that the trend since 1950 included a spurious factor due to observational discontinuities, which reduces the entire trend to 0.06”). Emphasis mine.

I’ve noticed that it also apparently affected the meteorological stations. Were these data adjusted to account for a change from buckets to engine cooling intakes too?

I’ve come across one site – http://www.climate-ocean.com/ – that argues that it wasn’t the sampling, but the effect that military actions had on the sea surface, by mixing in cooler deeper water. His thesis is that explosions in the water from shells, bombs, depth charges, and sinking ships were much more effective than winds & waves at mixing the top 100 m or so. This led to cooler surface water, which in turn caused cooler air temperatures.

There’s also a decline during the Vietnam war which was primarily fought on land, not sea. I’ll go out on a limb here and engage in a hazardous first order SWAG, and posit that the cause might be aerosols from explosives. Cheng & Jenkins observed 10^6-10^7 particles per cc, with multimodal size distributions with peaks at 700-900 nm and smaller than 100 nm, which grew 2-3 orders of magnitude more rapidly than normally seen in air. Add the dust raised by explosions (the “fog of war”?), smoke from burning, maybe other stuff I haven’t thought of yet, and there might be enough temporary albedo increase to cause short term cooling.

[Response: This war/climate connection is nonsense – orders of magnitude too small to have global impacts. No more on this please. – gavin]

“Would you insist that the IPCC issue a correction if A1FI or B2 doesn’t come to pass? Would you argue that they got it wrong? Divorce yourself from the parties involved and just look at the logical form of the argument.”

Yah. Except the IPCC issue dozens of scenarios and variants upon them, with absolutely no indication of which they think is most likely. When a party just states the outcome of a single ‘what if’ can we not infer that they believe it likely?

I seem to remember somebody calling for resignations because the IPCC highlighted a particular ‘what if’ scenario in a press release on renewable energy not that long ago.